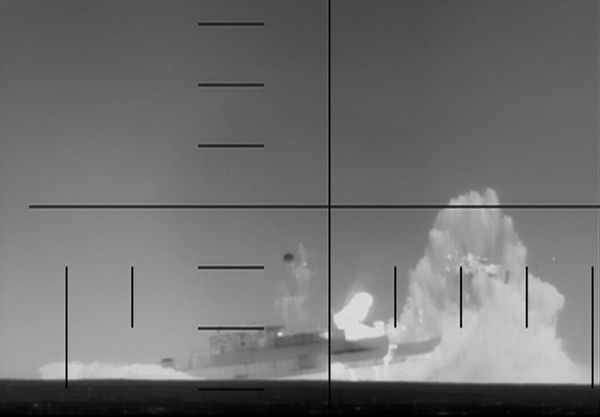

Israel’s bombing campaign in Gaza—which, according to reports, has struck hundreds of targets a day—has been described by experts as the deadliest and most relentless in recent history. It’s also one of the first such campaigns being coordinated, in part, by algorithms.

In its quest to eliminate Hamas in Gaza following the group’s deadly October 7 attack last year, Israel appears to be relying heavily on artificial intelligence to map out the battle space and inform tactical responses. While the country is very guarded about its technology, statements from the Israel Defense Forces, along with details gleaned from investigative reporting, suggest that AI is being used to help with everything from identifying and prioritizing targets to assigning weapons to be used against those targets. The promise of AI-assisted decisions is that they sharpen the accuracy of military actions. Some experts, such as Robert Work, a former United States deputy secretary of defence, argue that governments have a moral imperative to adopt this technology if it can be shown to reduce collateral damage and protect civilian life. In Gaza, however, evidence points to the contrary.

While the number of Palestinian casualties remains a subject of debate—the Hamas-run ministry of health estimates an average of about 250 deaths a day, a figure Israel disputes—there is little doubt about the devastation. The UN assesses that the military operation has damaged or destroyed over 60 percent of homes and housing units in Gaza.

This reality demands a grave reckoning with both the practical and the ethical deployment of AI in conflict zones. If such systems are so advanced and precise, why have so many Gazans been killed and maimed? Why so much destruction of civilian infrastructure? In part, blame the distance between theory and practice: while AI tools might work well in a laboratory or in simulations, they are far from perfect in a real war, particularly one fought in a densely populated area. AI and machine learning are also only as effective as the data they are trained on; even then, they are not magic but rather pattern recognition. The speed at which these systems can make mistakes is also a critical concern.

According to the Lieber Institute, an academic group that studies armed conflict, the IDF is using “geospatial and opensource intelligence” to alert troops to possible threats on the ground (it’s unclear what that intelligence comprises, but it could conceivably include satellite imagery, drone footage, conversations intercepted from cellphones, and even seismic sensors). Another AI system provides the IDF with details of Hamas’s vaunted system of tunnels, everything from their depth and thickness to the routes they take. Targeting data is then sent directly to troops on hand-held tablets. Like a violent orchestral ensemble, individual AI parts harmonize to identify Hamas combatants.

Flaws in this strategy were already visible in an earlier round of conflict between Israel and Hamas when, in 2021, Israel launched Operation Guardian of the Walls. Dubbed the first AI war by the IDF, it arguably marked the advent of algorithmic warfare. A former Israeli official, quoted in +972 Magazine, claimed that the use of AI in 2021 helped increase the number of Hamas targets from fifty a year to 100 a day. And the Jerusalem Post published the IDF’s claim that one strike on a high-rise building successfully hit a senior Hamas operative, with no civilian deaths. But despite the IDF’s praise for its algorithmic system, the 2021 operation had significant costs: according to Gaza’s ministry of health, 243 Palestinians were killed and another 1,910 injured. (Israel has countered that more than 100 of the casualties were Hamas operatives; other deaths, according to the Jerusalem Post, were attributed to “Hamas rockets falling short or civilian homes collapsing after an airstrike on Hamas’s tunnel network.”)

Israel, once again, seems to be relying on AI in its larger-scale operation in Gaza. And, once again, the scale of destruction undermines any claims of greater precision. It should be said that this AI-aided weaponry is not fully autonomous: a human must approve targets. However, it is not clear how decisions are made or how often recommendations are rejected. Also unknown is the extent to which the individuals who approve targets are fully aware of how the AI system arrived at a recommendation. By what means do humans determine the accuracy and trustworthiness of the system’s data? According to a report compiled by the think tank Jewish Institute for National Security of America, one noted flaw in the 2021 use of the technology was that “it lacks data with which to train its algorithms on what is not a target.” In other words, the training data ultimately fed to the system did not include the targets (be they civilian property or particular individuals) that flesh-and-blood analysts had deemed inappropriate. Any AI system not trained on what to avoid can inadvertently include the wrong objects on its kill list, especially if it’s deciding on a target every five minutes, around the clock, for months.

This leads to another widely acknowledged concern: automation bias, or the human tendency to put too much trust in technology. A source quoted by +972 Magazine said that a human eye “will go over the targets before each attack, but it need not spend a lot of time on them.” Researchers Neil Renic and Elke Schwarz have argued on the international law blog Opinio Juris that AI-powered weapons illustrate the technology’s potential “to facilitate systems of unjust and illegal ‘mass assassination.’” In dynamic and chaotic contexts such as war zones, the quickest decision often wins out, even if it causes more damage than necessary.

It’s unclear how the laws of war, established well before the current era, can address these new scenarios. States have not agreed to new legal frameworks on AI targeting. But irrespective of the lack of regulations specific to AI targeting, if humans who approve a target don’t fully understand how a recommendation was determined, the quality of their decision could be seen as insufficient to meet the standards of international humanitarian law: namely, the requirement that military forces distinguish between civilians and combatants.

Several countries, including the United States, China, India, Russia, and South Korea, are rushing ahead with the development of AI for military use. Other states, like Turkey, have been keen to export their AI tech—namely, drones—to countries embroiled in conflicts, such as Ukraine and Azerbaijan. The vast array of targets available to AI-driven systems presents a dangerous allure for nations eager to flex their military muscle or prove their defensive mettle to their citizens. This seduction of power can escalate conflicts, potentially at the expense of strategic restraint and humanitarian considerations.

Though we don’t know the full extent of the Israeli military’s use of AI, it’s clear that what is happening in Gaza is far from the promised vision of “clean” war. The ongoing outcome also suggests that advanced militaries might be prone to put speed in decision making ahead of moral and perhaps even legal obligations. Simply put, the use of technology in warfare does not change the fundamental realities of the fog of war and shifting contexts. AI works well in predictable environments with clear categories; war zones are filled with uncertainty.

As militaries worldwide adopt AI, the unregulated spread of the technology calls for immediate global oversight, not just in the current conflict but in the broader landscape of international security. Without clear and enforceable rules and norms on the military use of AI, we risk backsliding on the legal measures intended to protect civilians. The alternative is to let unchecked algorithms dictate the course of conflicts, leaving humanity to grapple with the consequences.