Tuesday’s announcement that the National Ignition Facility at Lawrence Livermore National Laboratory had successfully achieved fusion ignition, a net gain of energy, sent a wave of public excitement around the globe. But the history of the research is shaped far more directly by its origins in Cold War secrecy.

Investigations into fusion energy date back to the 1920s, when astronomers realized it could explain the way stars keep themselves burning. But the modern field began to take shape in 1951, when Ronald Richter, a German physicist who had emigrated to Argentina in 1947, announced to the world that his “Thermotron” had successfully achieved nuclear fusion.

Richter made “bizarre claims, that he had achieved fusion on this tiny scale,” Alex Wellerstein, a historian of physics and nuclear secrecy at Stevens Institute of Technology, tells Inverse. Wellerstein says the claims were quickly found to be false, but the investigation into them was more than enough to kickstart research into peaceful fusion energy in both the US and USSR.

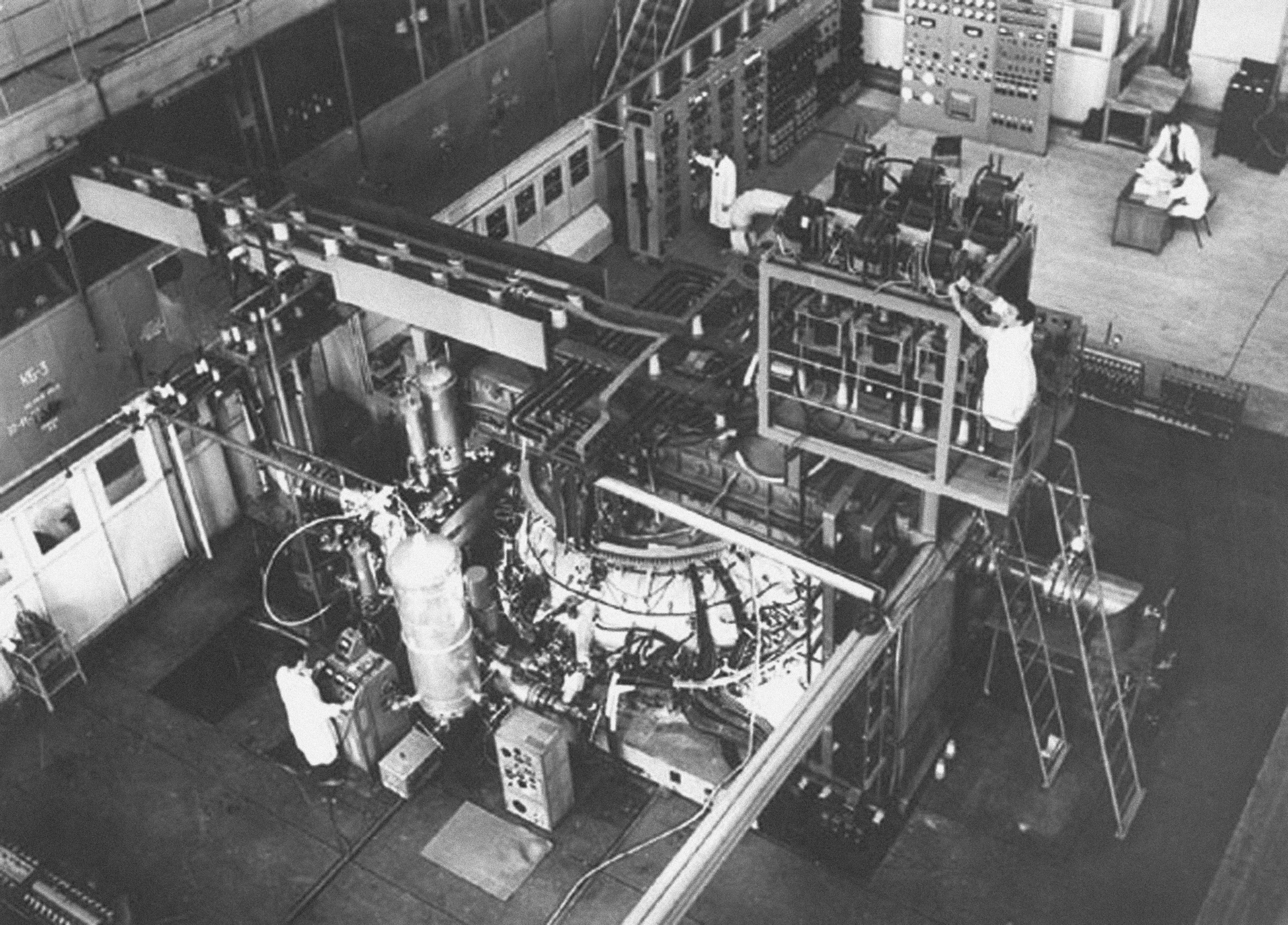

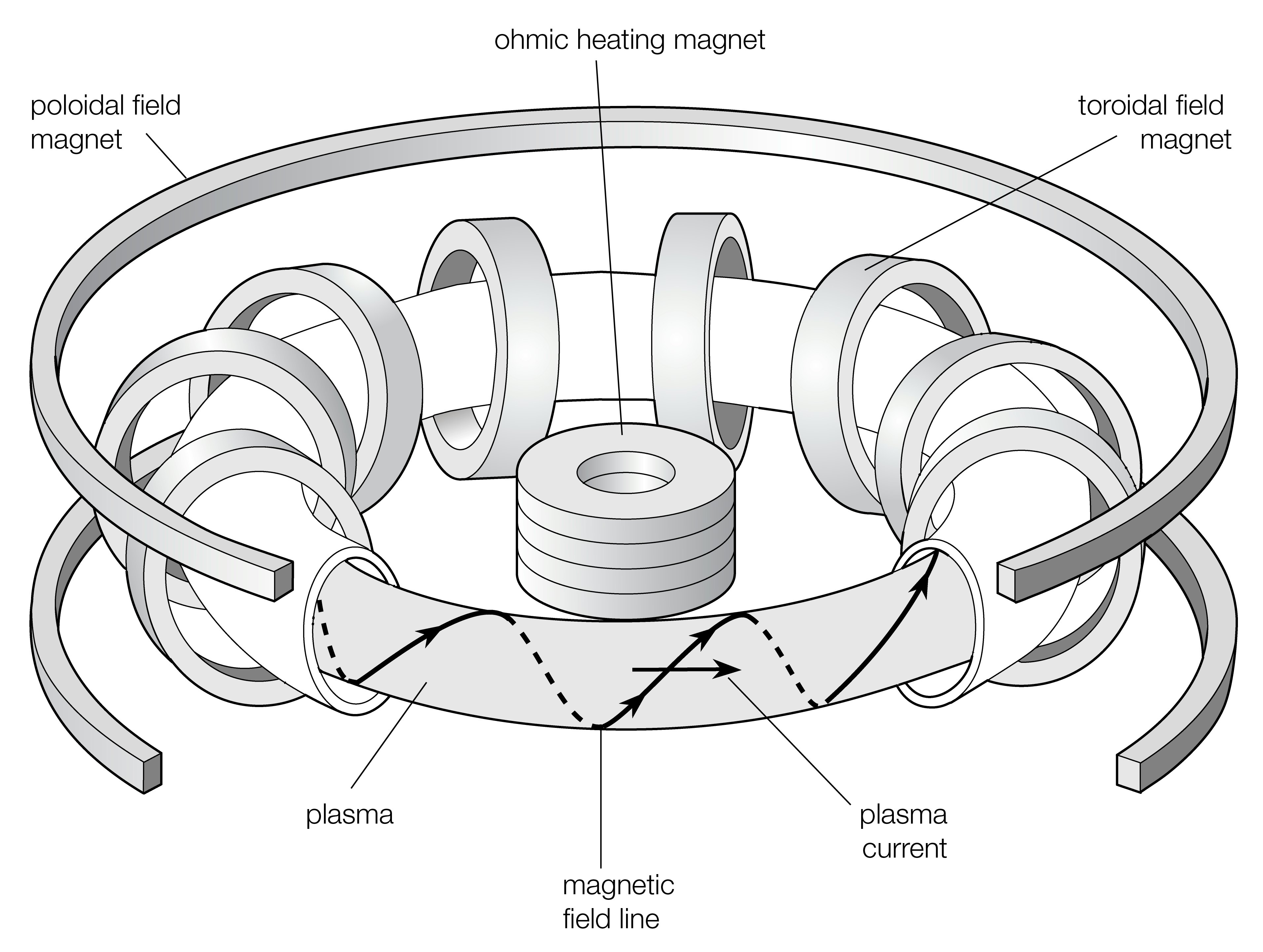

To sustain fusion, rather than just releasing the energy in a single blast, Wellerstein notes, you need to maintain extremely high temperatures, “and no material substance would be able to hold something like that.” So instead, physicists focused on shaping the plasma into a torus, or donut shape, then using magnets to keep it from crashing through the sides. This design, a Tokamak, became public knowledge in 1958. It forms the basis of most fusion research to this day, including the massive ITER megaproject under construction in France, which aims to come online in 2025.

Hot fusion and the Cold War

In 1958, Cold War secrecy concerns inadvertently split the way fusion research worked in the United States. At the same time Soviet Tokamaks were being revealed to the world’s assembled physicists in Geneva, American and Soviet diplomats were negotiating a temporary halt of nuclear weapons testing, a moratorium that would last until 1961.

With their regular regimen of testing hydrogen bombs on pause, Wellerstein explains, “the various laboratories in the US had to pivot their work. If you’re not allowed to test, that really screwed things up.” Instead, physicists were reassigned to various related questions. Among them was a young physicist named John Nuckolls.

Nuckolls was set to find the answer to a straightforward question: with America considering using nuclear bombs to dig out canals, ports, and mines, could you set off a nuclear bomb and generate cheap electricity from it? Perhaps unsurprisingly, he could not. Nuclear weapons are expensive, uranium is expensive, and even the easiest way to do it would still generate a massive plume of radioactive steam. But it set Nuckolls down a path: what if you got rid of the uranium? What if you got rid of the fission that causes the radiation?

To do that, Wellerstein explains, physicists turned to another brand-new invention, the laser. Enough laser energy, Nuckolls realized, could be used to replicate the inside of a hydrogen bomb, compressing and heating a tiny pellet of fuel and causing fusion.

This, however, is much easier said than done. Over sixty years ago, Nuckolls decided to try solving this problem by shifting focus from hitting a tiny pellet with many powerful lasers (known as the “direct drive” approach) to hitting a slightly larger radioactive container that distributes energy into the fuel pellet, an “indirect drive” approach that NIF used to achieve its breakthrough ignition.

In 1961, all this was put on hold, and American physicists went back to applying these techniques to weapons as the Kennedy administration resumed tests on nuclear weapons. By the late 1960s, though, other people “were starting to figure out that things at the end of a laser get hot,” Wellerstein says. Ex-nuclear weapons physicist Keith Brueckner began filing patents on a “very optimistic” version of laser-driven fusion, leading to the declassification of Nuckolls’ earliest work in the 1970s. But Brueckner’s company, KMS Fusion, eventually fell apart after some success, though much less than he had promised.

“The earlier ’70s ideas about making power plants out of this involve this being a lot easier than it is,” says Wellerstein. The National Ignition Facility has to be carefully tended and adjusted in order to feed a football field’s worth of laser energy into a tiny pellet, but “they would involve shooting off many of these shots per minute, almost like an internal combustion engine.”

Fusion: The modern era

The big shift came with the end of the Cold War. If you have a nuclear arsenal, but you’re not testing it, Wellerstein says, “how do you know that your weapons will continue to work? How do you have confidence in this?” In the early nineties, Nuckolls, who had risen to director of Lawrence Livermore National Laboratory, pushed for the construction of a gigantic inertial confinement fusion facility, which became NIF.

Martin Pfeiffer, a doctoral candidate in anthropology of nuclear research at the University of New Mexico, tells Inverse that Lawrence Livermore’s National Ignition Facility “was sold to Congress in order to create conditions necessary for understanding thermonuclear processes involved in the secondary stage” of fusion weapons.

Both Wellerstein and Pfeiffer emphasize that the primary focus of inertial confinement research is “stockpile stewardship,” the maintenance of “better, more reliable, more predictable nuclear weapons that can be believed in in absence of explosive nuclear testing,” as Pfeiffer puts it.

NIF had expected to reach this ignition point over a decade ago. Wellerstein notes that Nuckolls and others “may have overstated the ease of it. Which is sort of the history of this kind of work: you may think ‘how hard is it to compress this pellet,’ but the answer is ‘it’s really hard to compress this pellet.’”