Scientists from the University of Maryland have taken inspiration from the human eye and developed a new camera system.

The design mimics the minuscule involuntary movements that the human eye makes to maintain clear and stable vision.

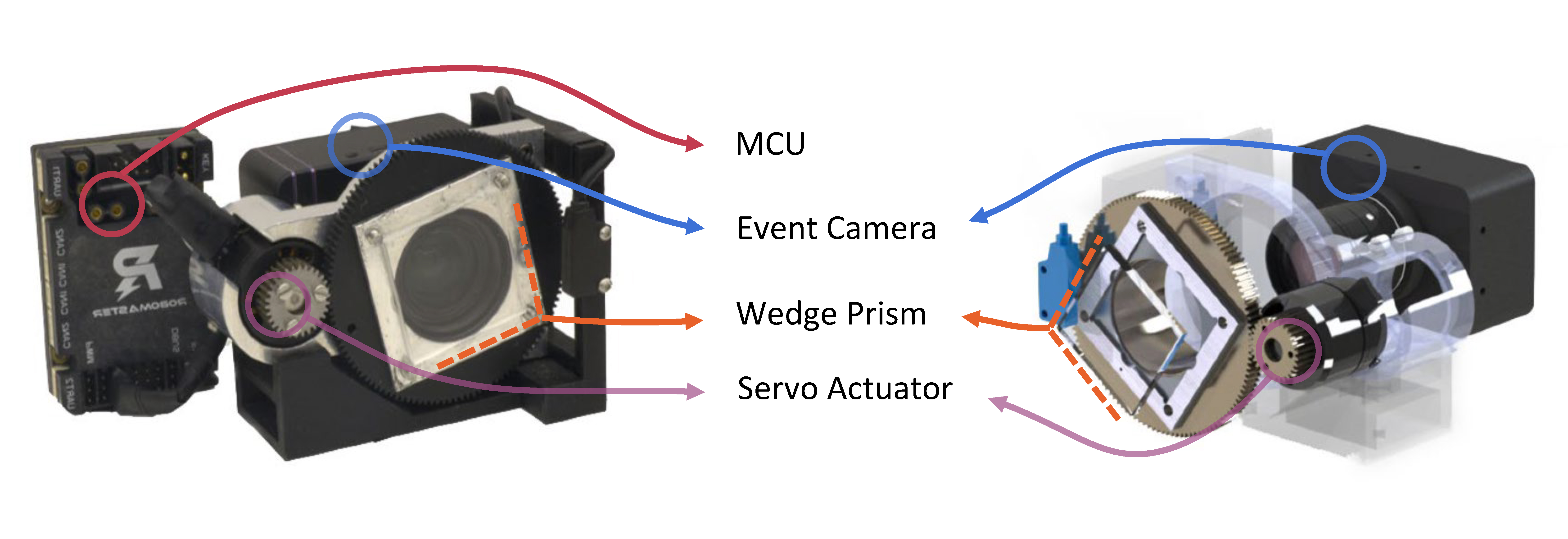

The camera is called the Artificial Microsaccade-Enhanced Event Camera (AMI-EV) and the scientific paper is published in Science Robotics.

Lead author of the research paper Botao He, a computer science PhD student at the University of Maryland, said:

“Event cameras are a relatively new technology better at tracking moving objects than traditional cameras, but today’s event cameras struggle to capture sharp, blur-free images when there’s a lot of motion involved.

“It’s a big problem because robots and many other technologies - such as self-driving cars - rely on accurate and timely images to react correctly to a changing environment. So, we asked ourselves: how do humans and animals make sure their vision stays focused on a moving object?”

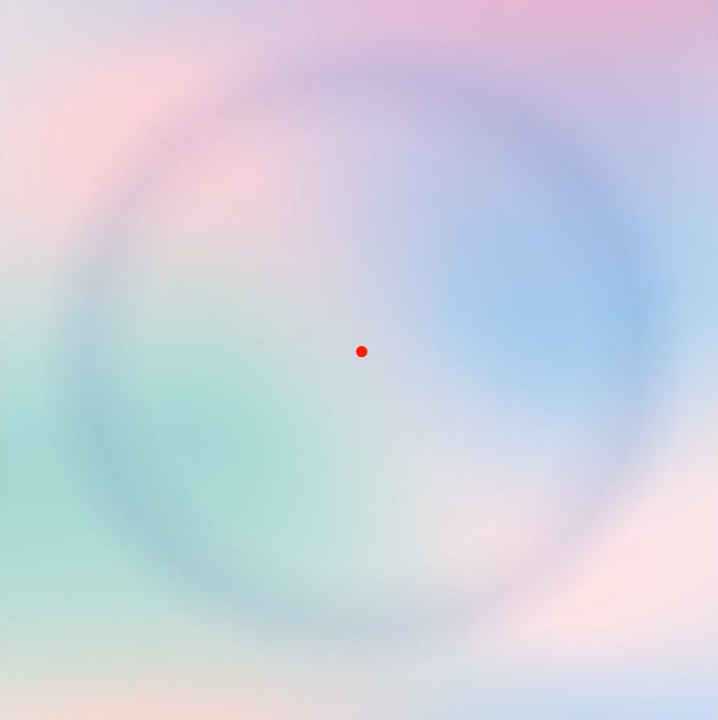

The answer lies in microsaccades: small, rapid eye movements that happen when a person is trying to focus their gaze on a stationary object. Through these movements, the human eye can stay accurately focused on an object and its visual textures, such as color, depth, and shadowing, for prolonged periods of time.

This research could be the key to helping robots, self-driving cars, and camera phones see more clearly.

The research team says it replicated microsaccades by inserting a rotating prism inside the AMI-EV to redirect light beams captured by the lens.

The continuous rotational movement of the prism simulated the movements that naturally occur in the eye, enabling the camera to stabilize the textures of a recorded object.
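
As a rough illustration of the idea, and not the researchers' implementation, the sketch below models an idealized rotating prism that shifts the image along a small circle on the sensor. An event camera reports changes in brightness at each pixel, so a perfectly still scene point would normally produce little signal; with the shift applied, even a stationary point keeps moving across the sensor. The function names, the 2-pixel radius, and the 50 revolutions per second are assumptions chosen for illustration, not values from the paper.

```python
import math

def prism_offset(t, radius_px=2.0, rev_per_s=50.0):
    """Image shift (dx, dy) in pixels at time t for an idealized rotating prism.

    radius_px and rev_per_s are assumed values, not taken from the paper.
    """
    angle = 2.0 * math.pi * rev_per_s * t
    return radius_px * math.cos(angle), radius_px * math.sin(angle)

def apparent_position(point, t):
    """Where a scene point lands on the sensor once the prism shift is applied."""
    dx, dy = prism_offset(t)
    return point[0] + dx, point[1] + dy

# A stationary scene point, sampled at four instants within one prism revolution.
static_point = (100.0, 80.0)
samples = [apparent_position(static_point, k / 200.0) for k in range(4)]

for x, y in samples:
    print(f"({x:.1f}, {y:.1f})")
```

Because the shift traces a full circle, the point moves in every direction over one revolution, so edges of any orientation cross pixel boundaries at some stage of the cycle.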

Botao He said:

“We figured that just like how our eyes need those tiny movements to stay focused, a camera could use a similar principle to capture clear and accurate images without motion-caused blurring.”

Although the technology is still at a very early stage, it could bring advances to camera phones, robotics, and self-driving cars, which famously struggle to recognize humans.

Researchers also believe that the technology could be hugely beneficial in a wide range of industries that rely on accurate image capture and shape detection.

Research scientist and senior author of the paper Cornelia Fermüller said:

“With their unique features, event sensors and AMI-EV are poised to take center stage in the realm of smart wearables.

“They have distinct advantages over classical cameras—such as superior performance in extreme lighting conditions, low latency and low power consumption. These features are ideal for virtual reality applications, for example, where a seamless experience and the rapid computations of head and body movements are necessary.”