What you need to know

- Google highlighted the rollout of its new SAIF Risk Assessment questionnaire for AI system creators.

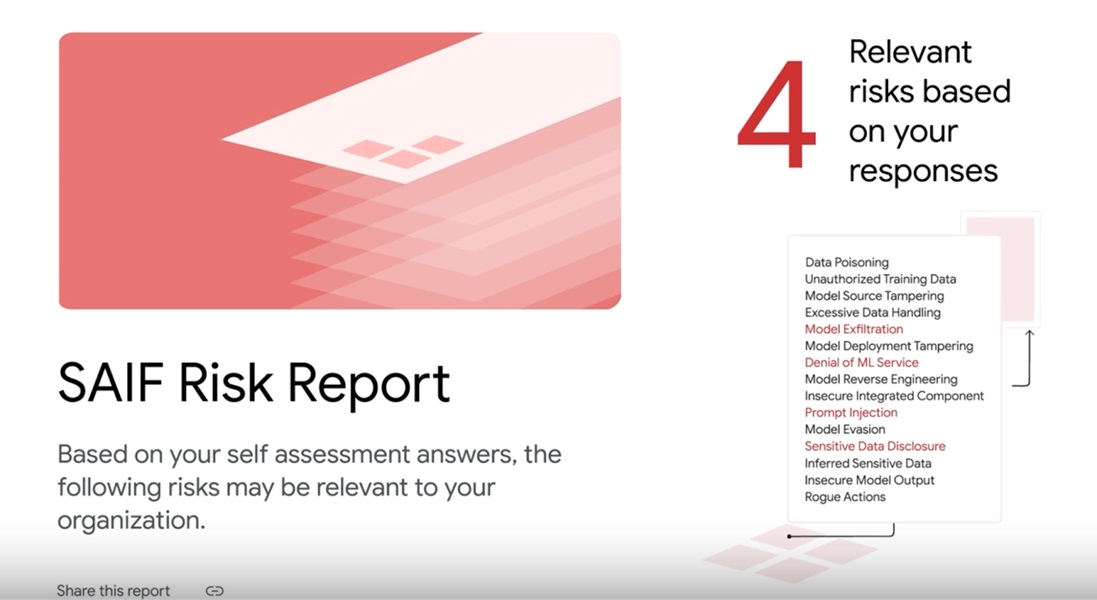

- The assessment will ask a series of in-depth questions about a creator's AI model and deliver a full "risk report" on potential security issues.

- Google has been focused on security and AI, especially since it brought AI safety practices to the White House.

Google states the "potential of AI is immense," which is why this new Risk Assessment is arriving for AI system creators.

In a blog post, Google states the SAIF Risk Assessment is designed to help AI models created by others adhere to the appropriate security standards. Those creating new AI systems can find this questionnaire at the top of the SAIF.Google homepage. The Risk Assessment will run them through several questions regarding their AI. It will touch on topics like training, "tuning and evaluation," generative AI-powered agents, access controls and data sets, and much more.

The questionnaire is in-depth so that Google's tool can generate an accurate and appropriate list of actions to secure the software.

The post states that users will find a detailed report of "specific" risks to their AI system once the questionnaire is over. Google states AI models could be prone to risks such as data poisoning, prompt injection, model source tampering, and more. The Risk Assessment will also inform AI system creators why the tool flagged a specific area as risk-prone, and the report will go into detail about any potential "technical" risks.

Additionally, the report will include ways to mitigate such risks before they are exploited or become a larger problem in the future.

Google highlighted progress with its recently created Coalition for Secure AI (CoSAI). According to its post, the company has partnered with 35 industry leaders to debut three technical workstreams: Supply Chain Security for AI Systems, Preparing Defenders for a Changing Cybersecurity Landscape, and AI Risk Governance. Using these "focus areas," Google states CoSAI is working to create usable AI security solutions.

Google started slow and cautious with its AI software, and that approach still holds as the SAIF Risk Assessment arrives. One of the highlights of that slow approach was its AI Principles and its commitment to being responsible for its software. Google stated, "... our approach to AI must be both bold and responsible. To us that means developing AI in a way that maximizes the positive benefits to society while addressing the challenges."

The other side is Google's effort to advance AI safety practices alongside other big tech companies. The companies brought those practices to the White House in 2023, including the steps needed to earn the public's trust and encourage stronger security. Additionally, the White House tasked the group with "protecting the privacy" of those who use their AI platforms.

The White House also tasked the companies with developing and investing in cybersecurity measures. That work seems to have continued on Google's side, as its SAIF project is now moving from conceptual framework to software that's put to use.