Google's I/O 2024 keynote presentation by Google CEO Sundar Pichai has now finished, and as predicted the event at Google's HQ at Mountain View, California, mainly focussed on artificial intelligence – especially its Google Gemini AI chatbot.

The focus on Gemini was so great that we got little in the way of other announcements, which we've helpfully rounded up in our guide to the best of Google I/O 2024. So, only a fleeting mention of the new Android 15 operating system for tablets and smartphones, and nothing about Chromebooks, Google Nest products or the Chrome web browser.

Here are the main takeaways so far from the event. Throughout the rest of the week we’ll be digging into Google’s announcements in more depth, and Phil Berne, our US mobile editor, is at the event for hands-on time with the new and improved Gemini, so keep your eyes peeled for his thoughts.

- Google is 'reimagining' Android to be all-in on AI – and it looks truly impressive

- Google reveals new video-generation AI tool, Veo, which it claims is the 'most capable' yet – and even Donald Glover loves it

- Google I/O showcases new 'Ask Photos' tool, powered by AI – but it honestly scares me a little

- Google's Project Astra could supercharge the Pixel 9 – and help Google Glass make a comeback

- No thanks Google, I don’t want an AI yoga bestie

There was also talk about Google’s new video and music generation tools, Gemini integration in Gmail and Workspace, and chat about a slightly creepy virtual workmate.

Read on below for a blow-by-blow account of the keynote speech.

Hello! Welcome to our Google I/O liveblog! I’ll be running this live blog as the hype builds for what Google is going to show today.

As I mention above, we’ll have Phil Berne, our US Phones Editor, on the ground at the event in California (lucky him), while myself and a lot of the TechRadar team will be following along online from the much less sunny climes of New York and London.

If you want to watch along with the live stream, which starts at 10am PT / 1pm ET / 6pm BST (or May 15 at 3am AEST), then check out our guide on how to watch Google I/O 2024.

So, what’s everyone looking forward to at this year’s I/O event? There is a huge amount of excitement about artificial intelligence, and it seems like Google is going to be doubling down with its AI chatbot Gemini.

We’ll likely hear more about what Gemini can do, as well as how it integrates into Google’s major products, such as the Chrome browser and Pixel smartphones.

Personally, while AI has huge potential, I’ve not really found any of the big AI releases to have fundamentally changed my day-to-day life. So, I’ll be interested to see if Google can change that.

Otherwise, as an owner of an Android smartphone, I’ll be really keen to see what Google has in store with Android 15, which is likely to appear.

Could we see some new Pixel smartphones as well? There are certainly convincing rumors that the Pixel 9 series will be launched today.

I’ll be interested in seeing what Google does here. I’ve had Pixel phones since the original, and my last handset was the Pixel 7 Pro. So, I like Pixels a lot, especially how they come with relatively bloat-free versions of Android.

However, my Pixel 7 Pro sadly got run over (a long story that I don’t fully remember), so I have a new phone - the Samsung Galaxy S24 Ultra. If Google releases a Pixel 9 that surpasses that, I might be a bit upset.

I’d also love to see some cool new Chromebooks. Maybe a showcase on how Chromebook Plus devices can stand out amongst the best Chromebooks on the market.

Chromebook Plus devices are more powerful variants of the humble Chromebook, and offer better specs, screens and build quality, all while keeping prices relatively cheap.

However, for people looking for the best cheap laptops, or laptops for students, for example, a standard Chromebook remains your best option. But, could Google I/O change that?

One thing that I would absolutely love to see at Google I/O is a follow-up to one of my favorite laptops of all time: the Pixelbook Go.

The Pixelbook Go was a brilliant Chromebook with superb performance, a lovely screen, long battery life and one of the best keyboards ever included in a laptop.

It was a joy to use, and for a few years it was my go-to laptop for when I needed to work away from my desk.

Sadly, Google has never released a follow-up to the Pixelbook Go. It seems to have abandoned making its own Chromebooks, which is a real shame. The Pixelbook lineup showed how Chromebooks could be more than just budget laptops with a limited operating system.

Pixelbooks were premium and powerful Chromebooks that could go toe-to-toe with much more expensive premium laptops from the likes of Dell and Apple.

Sound familiar? Yep, they were essentially Chromebook Plus devices before Chromebook Plus was a thing.

So, today’s Google I/O keynote could be the perfect time to resurrect the Pixelbook. Sadly, I don’t think this will happen - when I’ve spoken to Google in the past it showed little interest in a follow-up to the Pixelbook Go.

But, if Google were to launch a surprise Pixelbook, it would make me very happy indeed.

I’m also a fan of the Pixel Watch. It’s one of the few Wear OS-powered smartwatches that offers excellent features, good performance and an attractive design.

I skipped the Pixel Watch 2 as my OG model still runs fine. However, if there’s a Pixel Watch 3 launch alongside the Pixel 9 family, I’d be very interested to see if this time the generational leap is much larger.

However, most rumors suggest a Pixel Watch 3 launch will be later on this month. Still, I’d like Google to show off some Wear OS improvements that can make my beloved original Pixel Watch even better.

While Google will likely launch the Pixel 9 series in October, as it has previous generations, a new leak today apparently shows off three models.

The leak suggests that the Google Pixel 9 has a 6.24-inch screen, the Pixel 9 Pro has a 6.34-inch display, and the Pixel 9 Pro XL has a 6.73-inch screen.

The timing of this leak is particularly unfortunate as it’s on the day of I/O 2024, and potential images of Google’s upcoming flagship phones could overshadow the event.

I certainly got a bit excited and thought this could mean we may get to see new Pixel smartphones today, but on reflection, I think Google will likely hold a dedicated Pixel launch event later this year.

The company will also likely not mention the leaks, though who knows? Maybe the leak, if accurate, forces Google to show a quick preview of the phones early.

Hi. It's Roland Moore-Colyer, Managing Editor of Mobile Computing, taking over the live blog for a bit while Matt Hanson goes to shoot some iPad Air and iPad Pro videos.

So speaking of tablets, I'd love to see Google give the fondle-slate version of Android some love. As it stands, Android isn't really up to par when it comes to offering a large-screened experience, so I feel Google has some work to do there.

I doubt we'll hear too much on the tablet front from Google as there's been very little in the way of murmurings about any tablet-centric features coming to Android 15.

Then again, with devices like the Samsung Galaxy Tab S9 Ultra, I get the feeling Google is content to let other device makers coax Android onto tablets rather than put too much of the legwork in itself.

On the subject of Android 15, I expect we'll get a much deeper look at what to expect from the mobile operating system. AI will surely play a part, but I'd rather Google integrated better AI tools into the backend of Android rather than focusing on generative AI stuff.

What do I mean by that? Well, I want to be able to ask my phone where I was on a particular drunken night out and have it serve up where I may have wandered off to.

Or just use a combination of natural language processing and learning how I've been using my phone to serve up better answers to various queries. I want an autocorrect that actually gets me, rather than play 'guess what the clumsy typer is trying to write'.

As Matt mentioned earlier, there's scope for Google to tease the Pixel 9 series, as the search giant has got into the habit of teasing its upcoming phones. But from Android 15 we could get an idea of what to expect from the ninth-generation Pixel phones.

Oh and look who's popped up! It's our very own Philip Berne at Google I/O.

Hello! It's Matt, taking the live blog back after a short break! We are just over an hour out from the Google I/O keynote!

Our US phones editor is on the ground! Here are some pictures he's sent us, using a Pixel 8a.

So, I said that here in London it wouldn't be as sunny as in California... but going from Phil's photos it actually doesn't look much different to what we're currently seeing in the UK.

People are taking their seats! We are now around an hour from the event starting. Exciting!

While we wait, Google has created a new version of its popular Dino game, which you can play using the QR code below.

This new version uses generative AI - which could give us a hint at what Google will be showing off.

Right, almost 30 minutes until the show. If you want a recap of what we expect, head to our Google I/O 2024 hub for everything you need to know before the show itself.

Fun fact: Google's livestream is playing waiting music that's "created using image-to-music generation by our AI models"

Another clue? Using AI-generated art is a very controversial topic right now (and Apple managed to upset quite a few artists with its latest iPad Pro advert).

There's a guy making some weird noises using Google's generative AI tools. It's... uh... a choice. The crowd don't seem too into it.

"It's too early for this," Phil tells us.

He's now shooting t-shirts into the crowd. Hopefully Phil catches one!

Oooh something is happening! And no, Phil did not manage to get a t-shirt :(

If that was supposed to give us a taste of Gemini's music-creation tools... It wasn't really anything we've not seen/heard before.

Oooh Android Auto hint in the countdown?

Here is Google CEO, Sundar Pichai, talking about Gemini. He's really pushing how revolutionary AI, and especially Gemini, is. More than 1.5 million developers use Gemini, apparently.

We are going to see a lot about how Gemini can work with Google's various products (called it!).

AI Overviews is going to be available to everyone in the US this week - and other countries 'soon'. What is AI Overviews? Good question...

He's now talking about how to find a photo in Google Photos. You can now talk to Gemini and it will be able to cleverly find your car's registration plate, a process that could have taken a lot longer in the past.

Known as 'Ask Photos', this tool will come later in the summer. Some people may not be comfortable with how much of your data Google is helping itself to, however...

Now we are getting some devs talking about tokens and stuff. It's times like this you remember that Google I/O is an event primarily aimed at developers.

I use Google Photos, and I've used it in the past to find the licence plate of my car, so the demo that Sundar Pichai just showed was of particular interest to me!

If it works as well as the demo suggests, I'll definitely be using it to find photos in my huge library.

Now we've gone on to Google Workspace and Gemini. You can ask it to summarize emails from certain contacts (in the demo it's a school).

You can also ask Gemini to give you highlights of meetings in Google Meet. It can then draft replies. Handy, if you and your work are all-in on Google Workspace.

"It's basically Google's version of the Eras Tour with fewer costume changes." #GoogleIO (May 14, 2024)

We are now being told how Gemini can help your kids do homework. Again, impressive, but this is putting a lot of pressure on the accuracy and dependability of Gemini.

Getting some more use cases. Shopping for shoes is the first one. If you want to return them, Gemini could find your receipt, fill out a returns form, then arrange collection.

Sounds cool but... will it actually work like that?

'Making AI helpful for everyone' - Sundar Pichai.

To be fair, this is what companies really need to do. And it's something I've not yet seen from the likes of OpenAI and Microsoft.

Another reference to 2 million tokens!!! Yey!! I don't know what they mean.

It's a high number though. Big number = good, yeah?

Project Astra announced - a multi-modal AI that can better understand the context of your requests.

This is VERY technical, but we have a video demo of how Astra can identify things you point your phone's camera at.

This is impressive - they are asking various questions and the AI is answering in a realistic manner. It can even tell you where you left things, apparently. This would, of course, mean you're constantly filming yourself and your home. I don't think many people will love that.

One type of device they mentioned that could be used is smart glasses. Could this be a hint of a future Google Glass sequel?!

Coming to Google services like Gemini later this year. Phil will be testing it out live at the event, so keep your eyes peeled on our home page and socials for that!

Now we are being told about generative AI music-making tools. Another video showing how you can sample music and create whole new tracks - with AI.

Now talking about Veo, a generative video model.

You can prompt for things to create videos - using a tool called VideoFX. This is the kind of stuff that's very interesting, but also something I have quite a few reservations about. Could this replace human artists? That is the worry.

Now we have Donald Glover (on video). He says with these tools anyone can be a director - and should be.

I admire Glover a lot, but that is quite a statement...

Now talking about TPUs - Trillium. Will be available for cloud customers in 2024. Also will be offering Nvidia Blackwell GPU capabilities in 2025. That's cool!

Now talking about an AI hypercomputer. Thanks to liquid cooling in its data centers, it can push a huge amount of cloud processing across the world.

Now Sundar Pichai is wrapping things up with the Gemini and AI tools. Google Search is now "Generative AI at the scale of human creativity," apparently.

Now we are hearing about how Google Search is going to change.

"Google is going to Google that for you" with the power of Gemini.

"Search in the Gemini era." Google is VERY bullish about Gemini, it seems.

By the end of the year, AI Overviews will come to over a billion people in Google Search. So, we should get much smarter Google results, and Search should be able to handle queries that bundle several different questions together. It's a much more 'human' way of breaking down questions and explaining results, all based on Google's huge index of websites.

Research that can take hours will be done by Google in a few seconds, apparently. For people who like researching things and finding new things, this might not be that thrilling.

But for boring things, it's pretty cool.

You can now get an AI-organized search results page, with Gemini organizing results into useful 'clusters.' Contextual factors are taken into account - for example if you're looking for places to visit when on holiday.

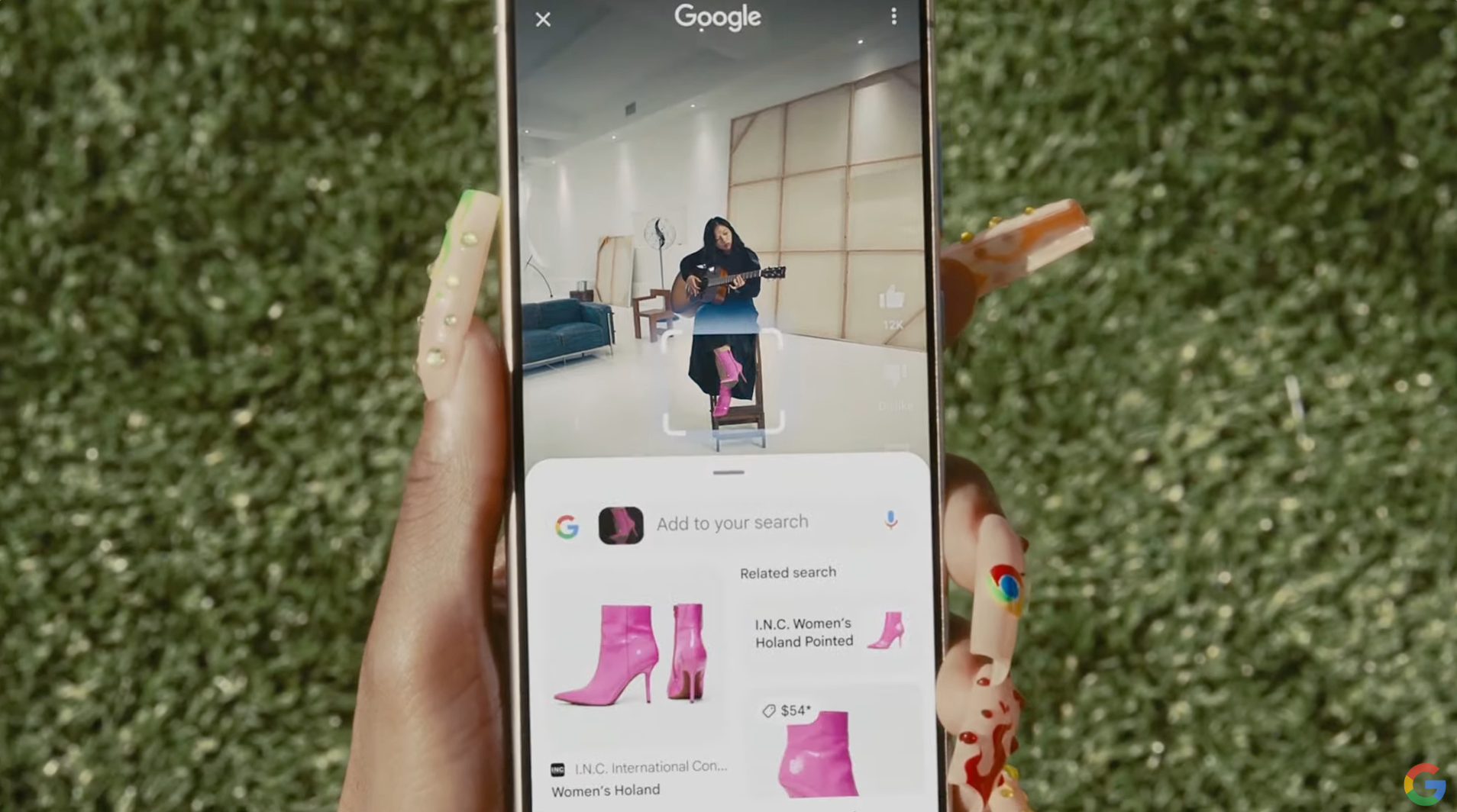

Now we're talking about 'Ask with video'.

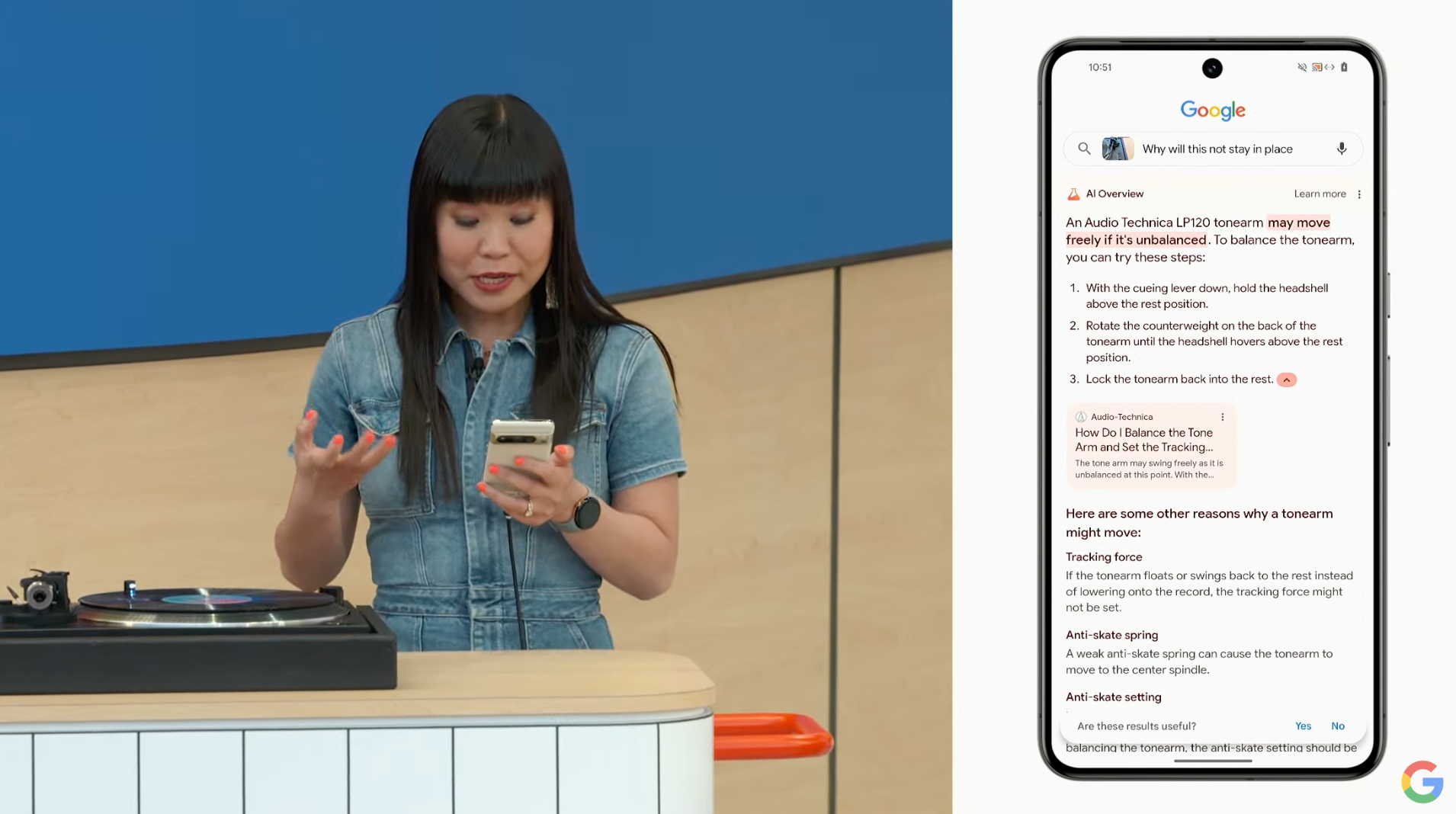

We're being told about how users can take a video and ask Google a question - and Google will return an AI Overview. So in a demo, we're shown someone recording a video of a vinyl player that's broken and asking Google why it doesn't work.

Google Search, via Gemini, then identified the type of player, saw what could be the issue, and then suggested some fixes.

What would have been quite a bit of manual searching in Google (such as trying to find the model of the vinyl player) is now pretty quick and user friendly!

It'll be rolling out to Google Search in the next few weeks. It does look pretty helpful - I often go to YouTube for videos on how to do various DIY things without killing myself/my family, so this could be quite the time saver.

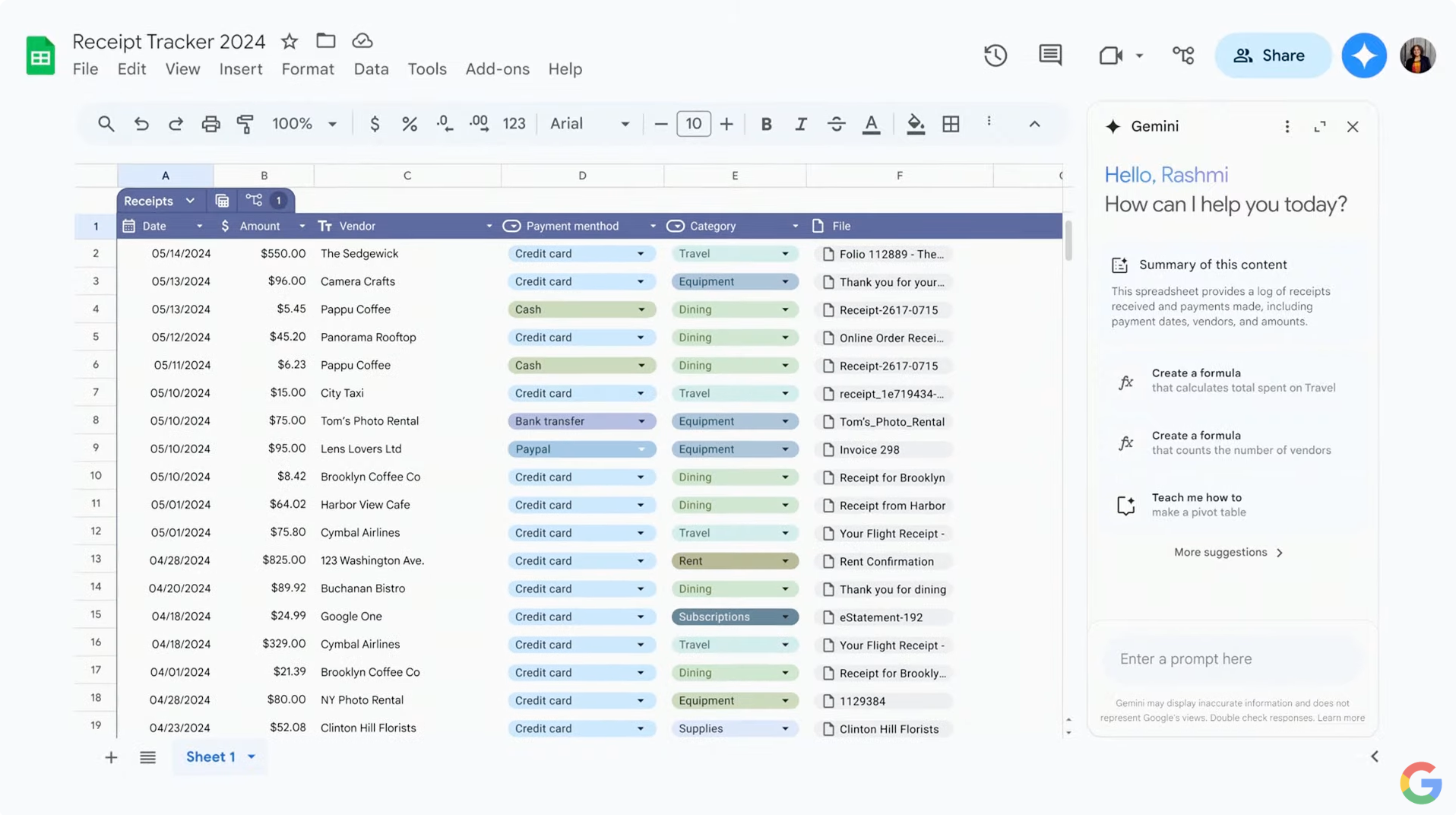

Now on to the Gemini-powered Side panel in Google Workspace. Google is excited about this. It's powered by Gemini 1.5 Pro. We are now looking at Gmail for mobile. Not sure if this will be for every user, or just Workspace users.

A card overlay gives you an overview of your emails, and from there you can ask questions in the included text box - all without having to open other apps, search emails, etc.

As someone who struggles to find certain emails when I need information, this could be very useful. I've kind of accepted that Google will be reading my emails anyway.

This 'Summarize' feature will roll out this month - but to people signed up to Labs for testing new services and software.

We are being shown the Side panel and how it uses AI to give you suggestions and information based on what you're doing. It's a lot like Copilot's integration in Windows 11.

Which I have used once, then never again. Hopefully Google makes Gemini and Side panel more useful.

Now talking about 'Virtual AI team mate'. They have an account, name and even personality. So you can then talk to it via instant messaging.

The virtual team mate can then search conversations and give you answers. The team mate can then be added to chats, email chains and projects, and it will remember it all.

This seems both cool, and a little creepy. Especially if you work in an office where people have lost jobs - will virtual team mates be used to fill the gaps? Unsurprisingly, Google doesn't touch on these issues.

We are now being told about the Gemini app - and it'll be the 'most useful AI assistant' that uses the latest Gemini model.

This is what will likely replace Google Assistant at some point. The app is 'natively multi-modal', which means you can use text, voice, photos and videos to interact with Gemini.

Gemini can respond to your voice later this year, and can see what you're seeing through your smartphone's camera.

Now they are talking about Gems. These are like personas for Gemini that can respond to certain prompts depending on what kind of thing you're looking for (such as a Gem that concentrates on recipes).

We're being shown how Gemini can be personalized and edited to give you the best advice - such as putting together an itinerary for a trip depending on what you like to do, and what kind of times your family get up at (so it doesn't start suggesting events that begin early in the morning if your family like sleeping in).

2 million tokens! Drink!

Seriously, it looks like Gemini is going to be EVERYWHERE for Android users. There's support for over 35 languages as well.

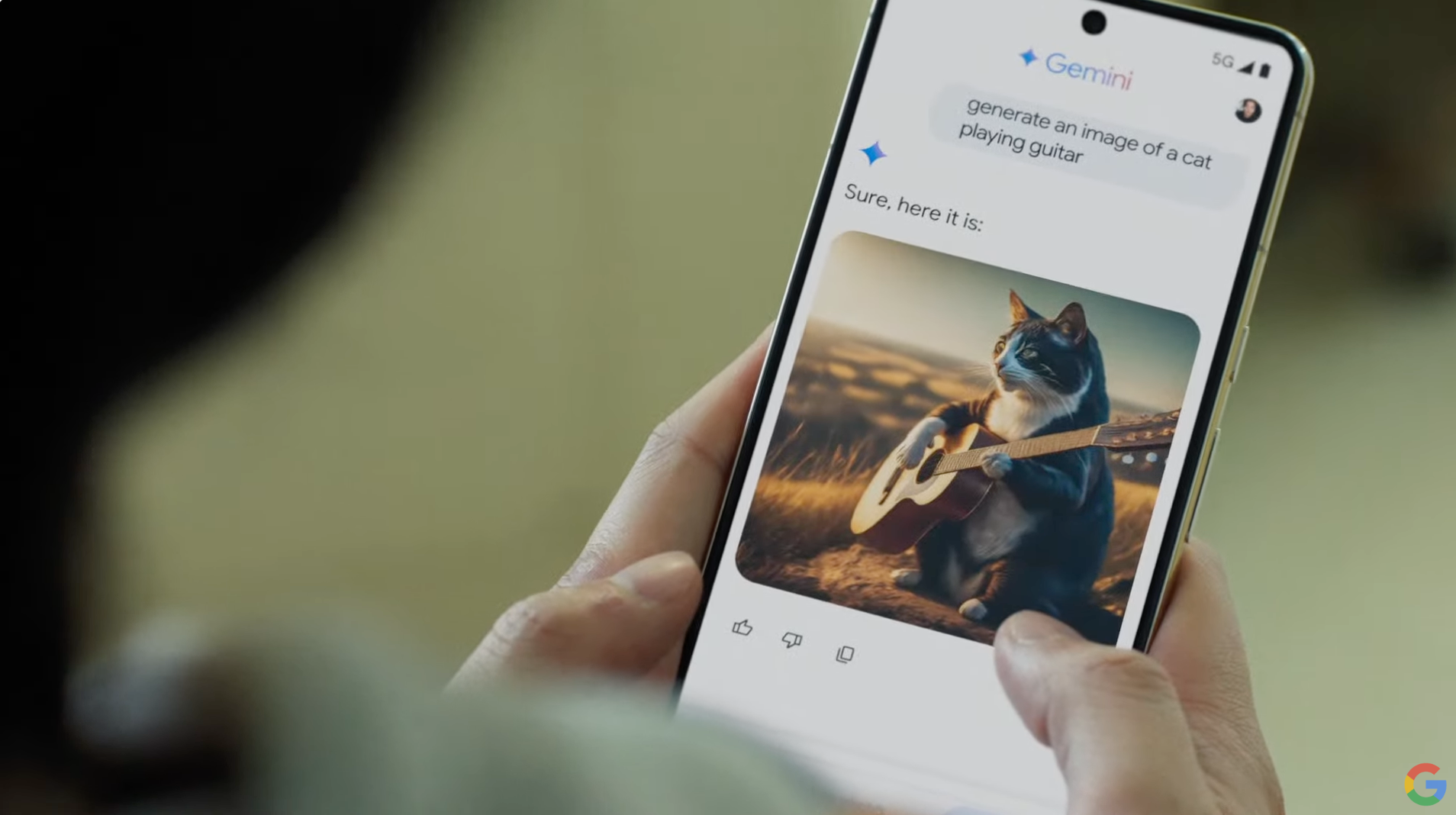

Now getting a musical video of how Gemini can make life easier (and generate images of cats playing guitars).

'Android is the best place to experience Google AI'

It's a 'once in a generation opportunity to redefine the smartphone'.

Circle to Search gets a shoutout. First shown at Samsung's Unpacked event. This feature is one of the ways Android is being revolutionized.

Circle to Search can be used to help students find solutions to problems - and shows how those problems are solved, not just giving you the answers.

Later this year it'll be able to solve more complex problems, and it's exclusive to Android devices.

Now we're going into Gemini for Android. It's integrated at system-level, and it'll now be context aware, so it can anticipate what you're going to ask of it.

We're getting a demo on the Pixel 8a.

The Gemini app now appears over your open app, so your workflow isn't disrupted. You can then drag things it generates into the app you're using (such as a messaging app).

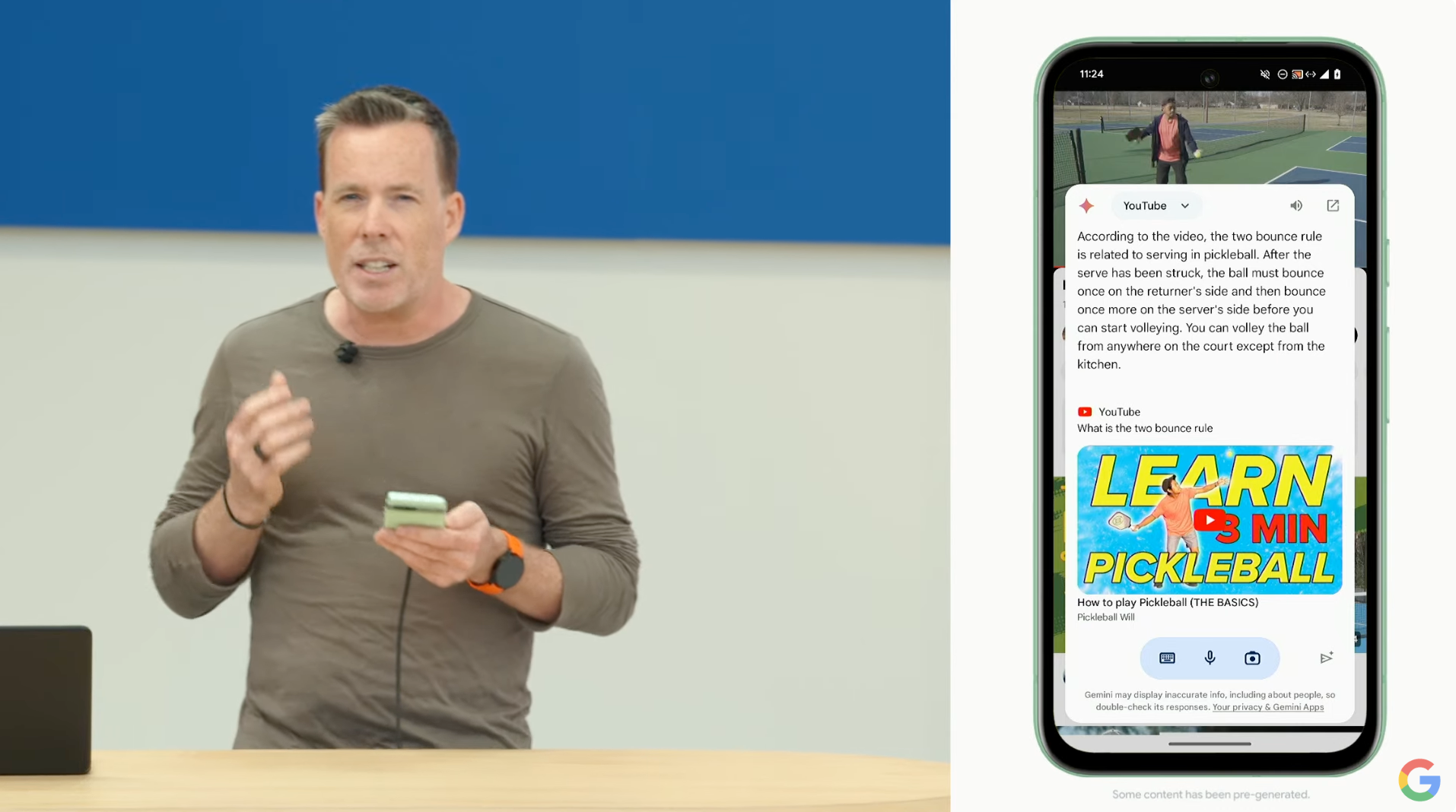

When watching a video, Gemini will know you're watching, and will anticipate your questions about the video.

Now Gemini is shown reading a PDF of pickleball rules. You can ask questions, and Gemini will read the PDF, find your answer and give you a clear, easy-to-understand overview of the content. It will also show you where in the PDF it got the information from.

Neat!

Gemini Nano with Multimodality is coming later this year. Sounds like it'll debut in new Pixel smartphones.

This uses on-device AI that's faster and more secure, and will adapt to how you use your device.

We're being shown how this can help accessibility - this is very cool.

Gemini can detect if you get suspicious phone calls that might try to scam you and warn you. It seems it can understand the conversation you're having on your phone. Some people may not be too comfortable with this, though I assume it will be all done on-device so no information is shared with Google.

Still, might feel a bit weird knowing Gemini is listening to your calls...

Now the devs in the audience (that's everyone, except Phil) are being told about Gemini APIs, so developers can then create apps that use these AI features.

It's not free, but some prices are quoted and there's applause so I guess that means those are good prices. I am not a dev, so I have no idea.

We're now being shown how easy it is to create apps with Gemini AI features. Again, this is all over my head a bit, and mainly targeted at developers, but this should mean that we'll likely get some pretty cool AI apps for Android soon.

Google is still talking about AI. But it's now touching on ethics, which is interesting. Google wants to build AI responsibly by addressing the risks and maximising the potential to help people.

This is all very interesting and it's good to see Google take this so seriously - it's something a lot of us are worried about. We'll be digging into this after the keynote, as there is a lot to take in here.

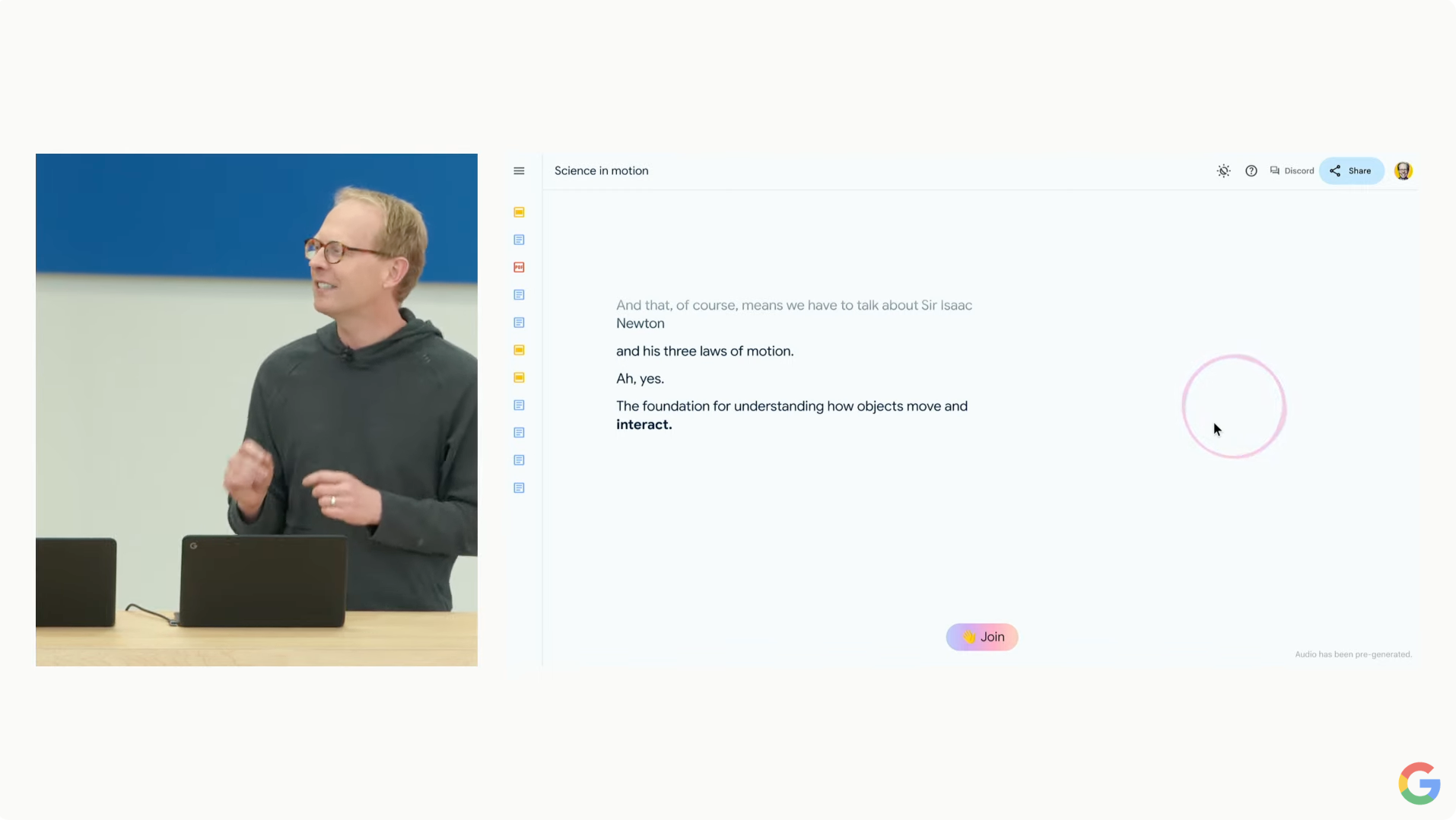

We've now been introduced to LearnLM, a new family of AI models designed to help out in the classroom. These tools aim to assist teachers with curriculums, lesson plans, and more.

There's also a new pre-made 'Gem' personality called Learning Coach, which provides helpful explanations and even quizzes using Gemini's long context capabilities, with the goal of helping students understand concepts and questions rather than simply giving them the answer.

Google has been working with MIT to create a course that helps teachers and other educators learn how to integrate AI into their workflows and classrooms. A tiny bit dystopian, perhaps, but this is well-intentioned stuff that many overworked schoolteachers could truly benefit from.

Sundar is back on stage, and he's reiterating the ethos of 'making AI helpful for everyone'. He's also used Gemini to count how many times they've mentioned 'AI' in today's keynote - 121 times so far!

It looks like we're wrapping up - Sundar has offered a sincere thanks to all the developers pushing ahead with AI software, and now we're being treated to another classic Google video clip, this time providing a slick summary of everything we've seen today.

'Welcome to the Gemini Era' is the last message we're given. We're truly in it now, folks!