It's time for Google I/O once again, and this year Sundar Pichai and others from Google's leadership team announced all kinds of AI-powered goodies from the stage at Mountain View, Calif. With less fanfare, Google also detailed changes to upcoming versions of Android and Wear OS.

Not surprisingly, though, the focus on Tuesday was almost entirely on software and AI, such as Google Gemini and its various applications, and what's coming to Android. Unlike previous Google I/O keynotes, this one didn't involve hardware announcements or teases of any kind. Previously, it was believed we could get an early look at the Pixel 9 series or the Pixel Fold 2, which some are calling the Pixel 9 Pro Fold, but none of that happened.

A couple of Tom's Guide reporters were at Google I/O in person for the first day of the developer conference to relay what they saw and heard, while the rest of us watched along with Google's live feed of the I/O keynote, which got underway at 1 p.m. ET / 10 a.m. PT / 6 p.m. BST. You can read our reaction to what was announced below as well as scroll back through our live blog timeline to catch up on every moment of the keynote as it happened.

Biggest Google I/O Announcements

- Project Astra: Google just unveiled the AI assistant of the future with Project Astra, which combines video you capture with your phone and voice recognition to deliver contextual responses to your questions. One demo showed someone using Project Astra to help solve a coding problem by pointing a phone camera at their screen, and then to track down where they had left their glasses.

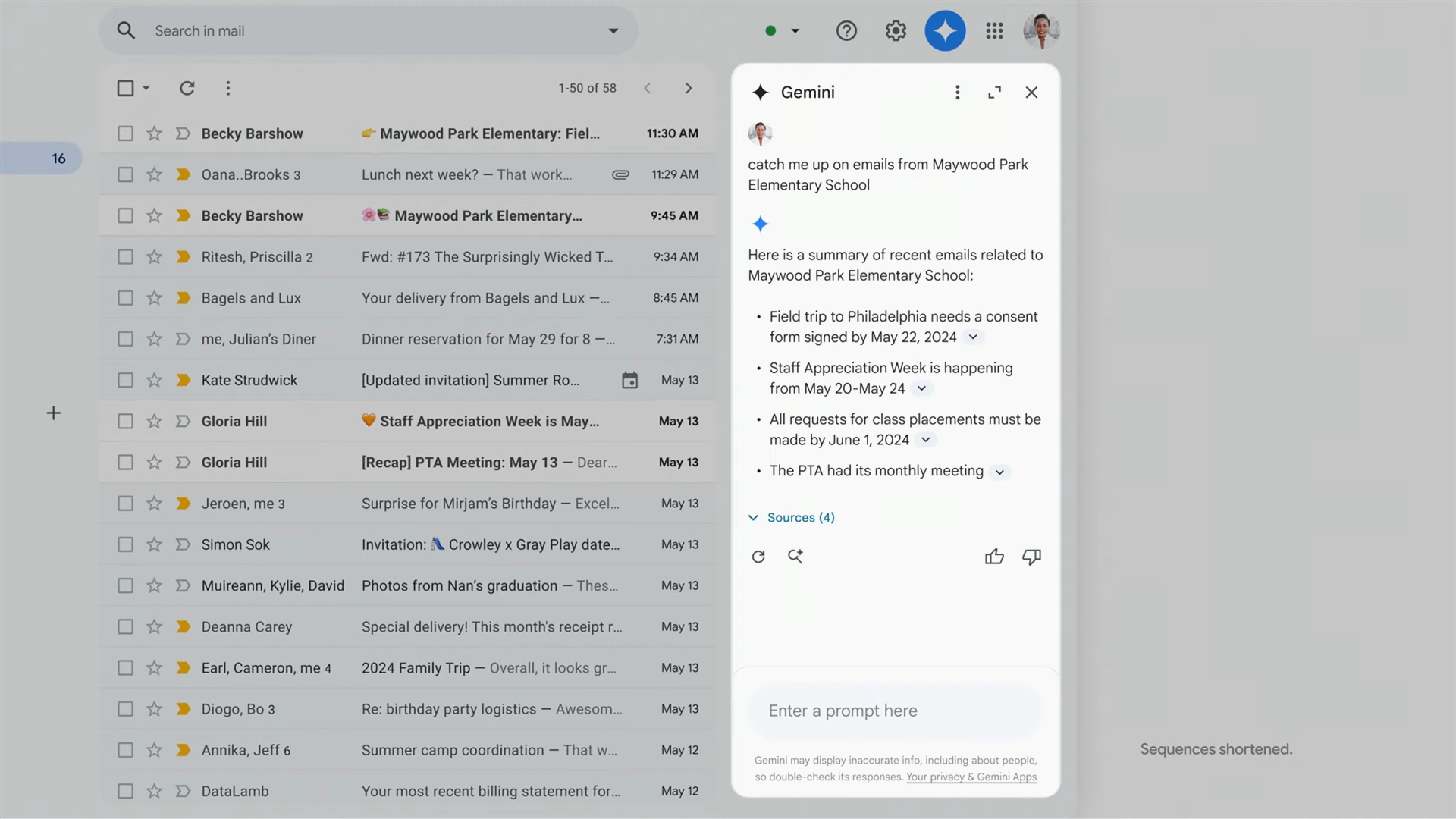

- Google Workspace: Gemini is being rolled out to even more popular Google services, like Gmail, where it can summarize long email chains. There's also a smart reply feature that will allow Gemini to deliver more contextualized replies after analyzing your email conversations.

- More AI in Android: While Google didn't directly show off or mention features specifically about Android 15, the company did share how more AI features are coming to Android. For example, Circle to Search will roll out more broadly. TalkBack, Android's accessibility tool for people who are blind or have limited eyesight, is also getting AI-generated descriptions of photos.

- Google Search: Searching with Google is getting a tremendous boost with new Gemini features such as faster answers with AI Overviews, travel itinerary planning, and the ability to use video to solve problems.

- Google Veo: Using generative AI, Google Veo can create realistic, detailed 1080p videos based on your request. Meanwhile, Imagen 3 can generate images based on text prompts.

- Android 15: The second Android 15 beta is out, and Google has highlighted some of the features coming to your phone later this year. Top additions include Private Space, an area for hiding sensitive apps; Theft Detection Lock, which taps into AI to determine if your phone has been swiped; the ability to add passes to Google Wallet with a photo; and AR content in Google Maps. Here's more on Android 15 beta 2, including how to get it on your Pixel phone.

- Wear OS 5: The big story with Google's newly unveiled wearables software is improved efficiency. Google says that the new version will preserve 20% more battery life when running a marathon than Wear OS 4 did. You'll also get new metrics such as Ground Contact Time, Stride Length, Vertical Oscillation, and Vertical Ratio.

Google I/O 2024 live stream

We expected to see the Pixel 8a debut at Google I/O today, but Google had other plans in mind. Instead, it announced the Pixel 8a last week — no doubt to clear the stage for all the AI talk we’re going to hear later today.

We may not have a Pixel 8a demo, but we’ve got the next best thing — a Pixel 8a review. And it sounds like this phone really impresses, thanks to its Tensor-powered AI features in a sub-$500 device along with Google’s extended software and security support.

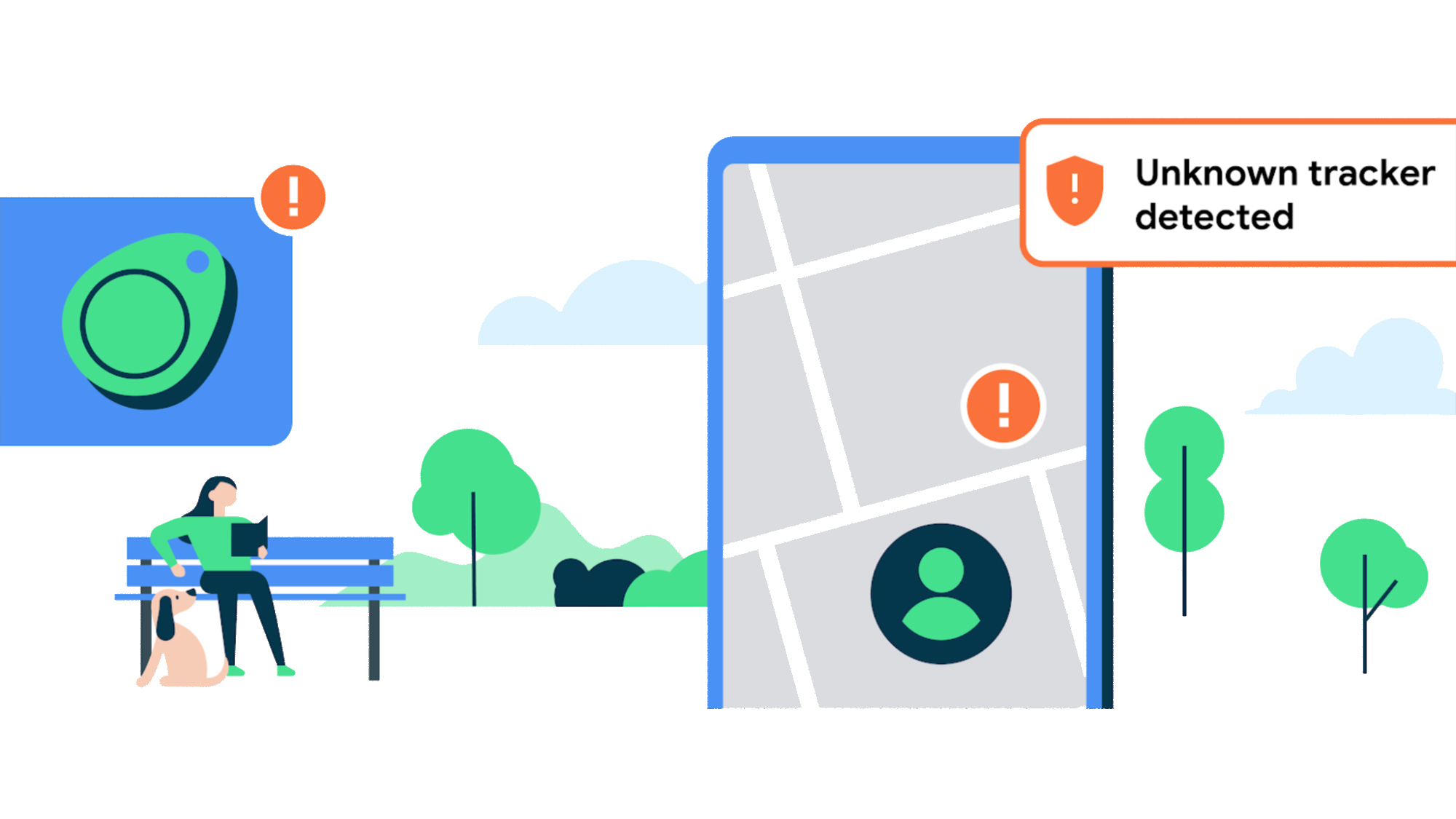

Google had some news to announce in conjunction with Apple ahead of Google I/O. The two tech giants have teamed up to boost cross-platform protections against someone trying to monitor your location with a Bluetooth tracker.

Should you be on the move with an unknown tracker along for the ride, you’re going to get an alert on your phone about that device, regardless of the platform it’s paired with. Previously, iPhone users would see alerts for Apple AirTags that were monitoring them without their knowledge, but not necessarily for other trackers paired to Android devices.

The feature’s included on the just-released iOS 17.5 update for iPhones, while Google’s adding support for any Android device running Android 6 or later.

At last year’s Google I/O, the Pixel Fold made its debut, giving Google a foldable device that could take on the leading foldable from Samsung. Indeed, in our Pixel Fold review, we praised its thin, durable design and wide cover display. (A feature Samsung may adopt for the upcoming Galaxy Z Fold 6, incidentally.)

We don’t expect to see the Pixel Fold 2 previewed at this year’s Google I/O, and not just because Google appears to want to keep the focus on AI. Rather, it’s because of a rumor that the next Pixel Fold might get folded into the Pixel 9 lineup and rebranded as the Pixel 9 Pro Fold. If so, that means a fall launch for the foldable follow-up.

One more day until #GoogleIO! We’re feeling 🤩. See you tomorrow for the latest news about AI, Search and more. pic.twitter.com/QiS1G8GBf9 (May 13, 2024)

Maybe Google was always planning to tease some AI-powered feature ahead of Google I/O. Or maybe it was all those OpenAI announcements 24 hours ahead of I/O that did the trick. But Google spent Monday afternoon showing off a new AI capability of the camera app on what appears to be a Pixel.

Using voice prompts, you ask the camera what it sees in a conversational tone, and the AI replies, describing what’s in its viewfinder with impressive accuracy. Watch the footage Google posted to see for yourself.

OpenAI clearly had its sights set on stealing some of the pre-Google I/O thunder by holding an event of its own Monday (May 13). And it’s easy to steer some of the attention away from Google when your announcements are as significant as what OpenAI had to say.

The highlights included:

The GPT-4o model is particularly significant in that it can analyze image, video and speech. It’s almost enough to make up for the fact that the event came and went without any sign of GPT-5.

Google's Project Starline is a very fancy-looking video conferencing tool that the company's been demoing for some time. But it recently announced that it'll finally launch in 2025, with HP getting first dibs on the tech, so we expect it to be at least mentioned briefly today at I/O.

The basics of Starline are that it works just like a normal video call, but with a full 3D model of the person you're talking to that in theory allows for more natural conversation. The hardware required has gradually shrunk since Starline debuted, so hopefully it'll soon be small enough to fit in a dedicated camera or within another device, rather than requiring specialized multi-camera monitors or even dedicated rooms to use.

Our AI expert Ryan Morrison had some predictions about what AI announcements Google will have later today.

He believes we'll see new features come to the Gemini large language model and chatbots, both the developer-only and public versions, Gemini-based features in familiar Google apps like YouTube Music and Google Docs, and perhaps a demo of some advanced tech to show it can keep up with OpenAI and GPT-4o.

Everyone's favorite browser, Google Chrome, may get some attention today. And we may already know one upcoming feature for it.

Circle to Search, currently available on only a select few Android phones, could be appearing on Chrome via a Google Lens update. This would allow far more users to try out this handy search method, although given it's seemingly not finished yet, maybe Google needs some more time before it starts rolling out.

Circle to Search is not just a Galaxy S24, Pixel 8 and Pixel 8a feature. You can also use it on your iPhone (kinda).

With a new shortcut, it's possible to instantly take a screenshot and search it through the iOS Google app. It's likely as close as iPhone users will ever get to sampling Circle to Search, at least for the time being while Google's being very particular about who gets to use it.

Google doesn't do it consistently, but we may see a teaser for the Pixel 9 series today, as a token gesture toward the company's hardware products among a keynote that's likely to be all about software.

The Pixel 9 series is believed to consist of three models this year, featuring a new smaller Pixel 9 Pro model alongside a Pro XL model and the standard version. All the phones should be running a new Tensor G4 chip, and will likely be packed with lots of new AI features to build on those added to the Pixel 8 series last year.

Google I/O 2023 was when we first got to see the Google Pixel Fold, the company's first ever foldable phone. So naturally we're wondering if we'll see the sequel announced, or at least teased, at this year's I/O.

There are some odd rumors that the Pixel Fold 2, as we assumed it would be named, will actually be titled the Pixel 9 Pro Fold to tie it in with Google's other flagship Pixels. Otherwise, we're expecting a refined version of last year's foldable Pixel, perhaps with some new unique design elements and likely some upgraded internals too.

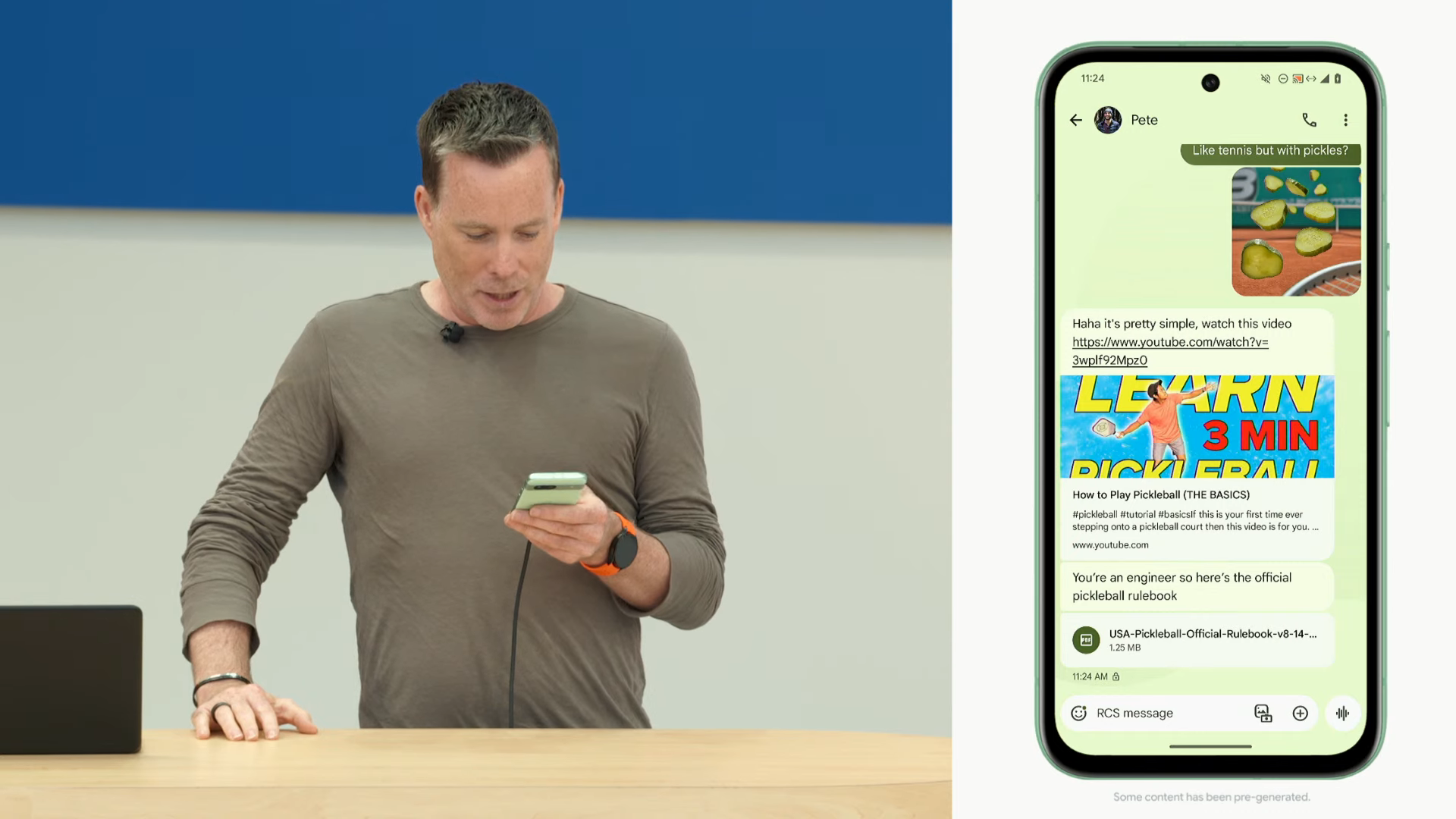

A recent post on X has shared two screenshots that seemingly reveal that Google will allow users to edit messages in its Messages app, even after they’ve been sent.

According to the post, the feature will function similarly to WhatsApp and iMessage, with a 15-minute grace period to edit a sent message. It appears that some users have been allowed to test this new feature, although there is no indication of when it will officially be announced. It could be that we will hear more at Google I/O as one of its new features.

Hi folks, it's Jeff Parsons taking over this live blog for a spell as we look ahead to what Google may show us over in Mountain View later today. One area I'd be interested to hear about is Wear OS. Google announced Wear OS 4 at last year's I/O and it brought with it a few nice-to-have upgrades like better battery life and some new watch face tools. But, overall, it feels like Google's been coasting a little bit here.

While it's a fair bet we may hear about Wear OS 5 at today's announcement, I'm keen to hear more about exactly how Google can differentiate from watchOS 10, which brought a redesigned home screen experience driven by widgets and Smart Stacks when it launched last September. Will we get Gemini integration? Will we learn more about Android Health, the replacement for Google Fit? Will Fitbit continue to be subsumed into the Google brand? Bingo cards at the ready.

Google Maps upgrades!

Shifting gears to Google Maps for a second, ahead of Google I/O two new Maps upgrades are starting to go live. The first new feature lets you apply a filter to show EV charging stations instead of gas stations. The other shows the entire length of a road, highlighted in blue, when you search for it. This will give you a better sense of the layout for sure.

Maps is a big part of Google's product portfolio and usually commands some good stage time. We'll see if there are any other new features waiting in the wings. In the meantime, here are 9 essential Maps tricks for your summer road trip.

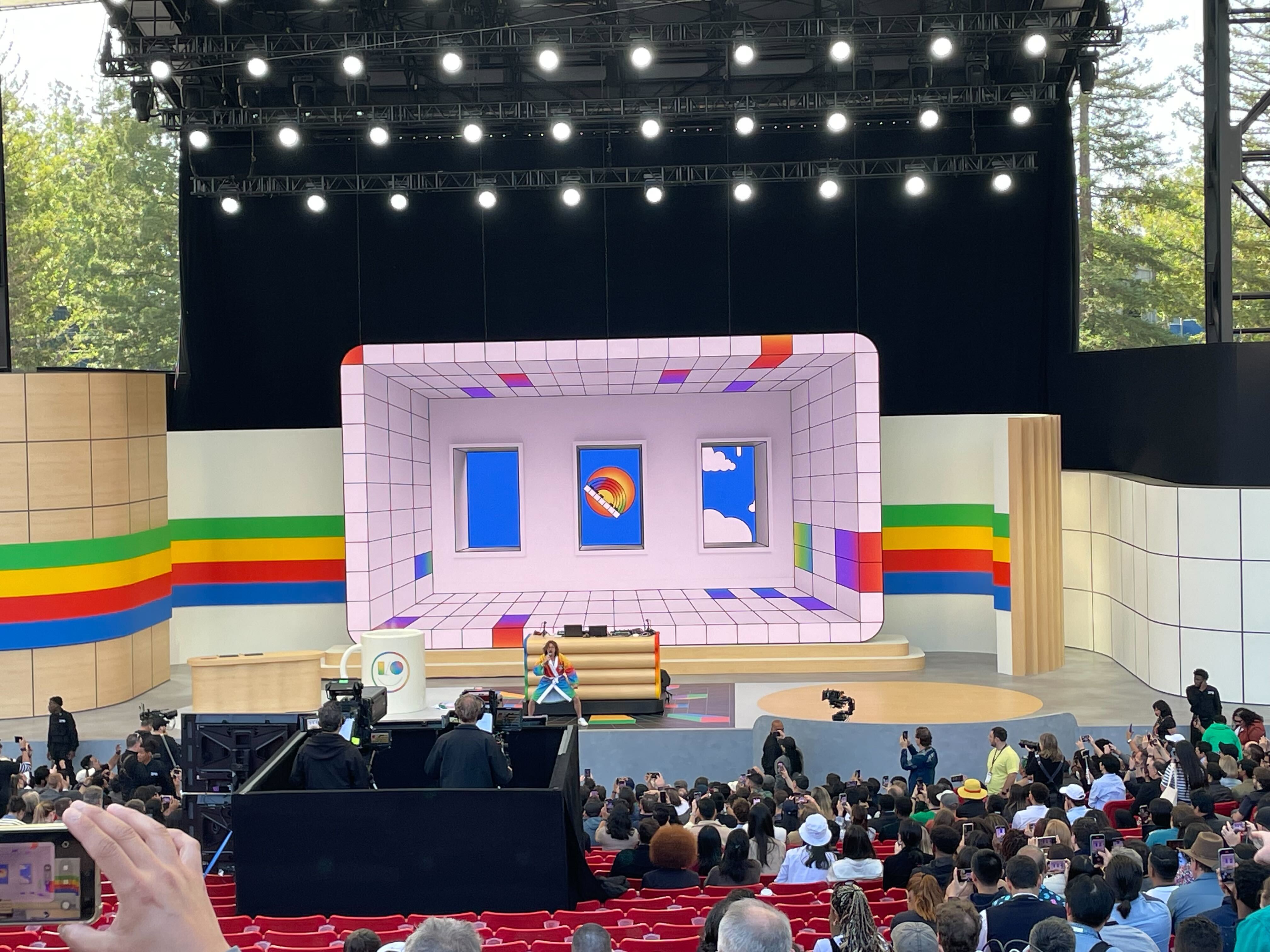

Boots on the ground

Google I/O 2024 is officially kicking off in a couple of hours and, as you can see from the image above, we're ready and waiting in Mountain View to get started. Managing Editor Kate Kozuch will be live at the event to capture reaction to Google's announcements as they unfold.

Good morning from #GoogleIO2024! It’s going to be a jam-packed day ☀️ pic.twitter.com/NH9B1vokO0 (May 14, 2024)

A quick reminder: the keynote gets underway at 1 p.m. ET / 10 a.m. PT / 6 p.m. BST, and the leading topic will almost certainly be Gemini and Google's latest AI developments, aimed at stealing the thunder from all the wonderful new features OpenAI announced at its own Spring Update yesterday.

Desktop mode on Android?

This isn't something I'm really expecting to hear much about today, but it's cool so I'm going to talk about it here.

One feature on the best Samsung phones that doesn't often get talked about is DeX (pictured above), which turns the device into a pseudo desktop via a proprietary dock. It seems like Google is exploring this with the Pixel as, according to a report (h/t Android Authority), the company held a private event showing a Pixel 8 running ChromiumOS, the open source version of ChromeOS, on an external monitor.

While Google isn't going to merge Android and ChromeOS anytime soon, ChromiumOS can run alongside Android in a virtual machine, thanks to the Android Virtualization Framework (AVF), which debuted in Android 13.

This feature seems a little too far off to make headline news at today's keynote but as someone always looking to streamline their kit, I'm intrigued to see if this gets a mention when Google unveils more about Android 15 today.

Gemini's multimodal features

Okay, that’s enough deviation from what’s going to be the real highlight of today’s show: AI. Last year’s I/O had its fair share of AI announcements but 2024 is going to turn it up to 11 — probably through more explanation of multimodal features. That means exploring what Gemini can do when it’s shown video, music or pictures.

A demo posted by Google yesterday showed this in action when a Pixel phone correctly identified the I/O stage being set up. It’s going to be like Google Lens on steroids and will surely offer up all manner of assistance based on what’s going on in your camera’s view. Imagine being able to record live video and having Gemini provide a flawless transcript or pull out relevant information based on what it's seeing. I expect we'll hear a lot more about this kind of functionality as the event progresses.

Not long now!

People are taking their seats as the Google I/O keynote is scheduled to kick off in less than an hour!

You may be interested to know that while Wi-Fi is spotty for those of us in attendance (always ironic at a tech event), we are being treated to a soundtrack that includes Taylor Swift. So it's all good.

Dino crisis

Google's also currently promoting a generative AI version of the Chrome dinosaur game where you can swap your character and the obstacles for different things.

You can try it out for yourself here, but it looks like it'll only be available for another 30 minutes or so. If you hadn't twigged yet, there's going to be a lot of talk about AI today...

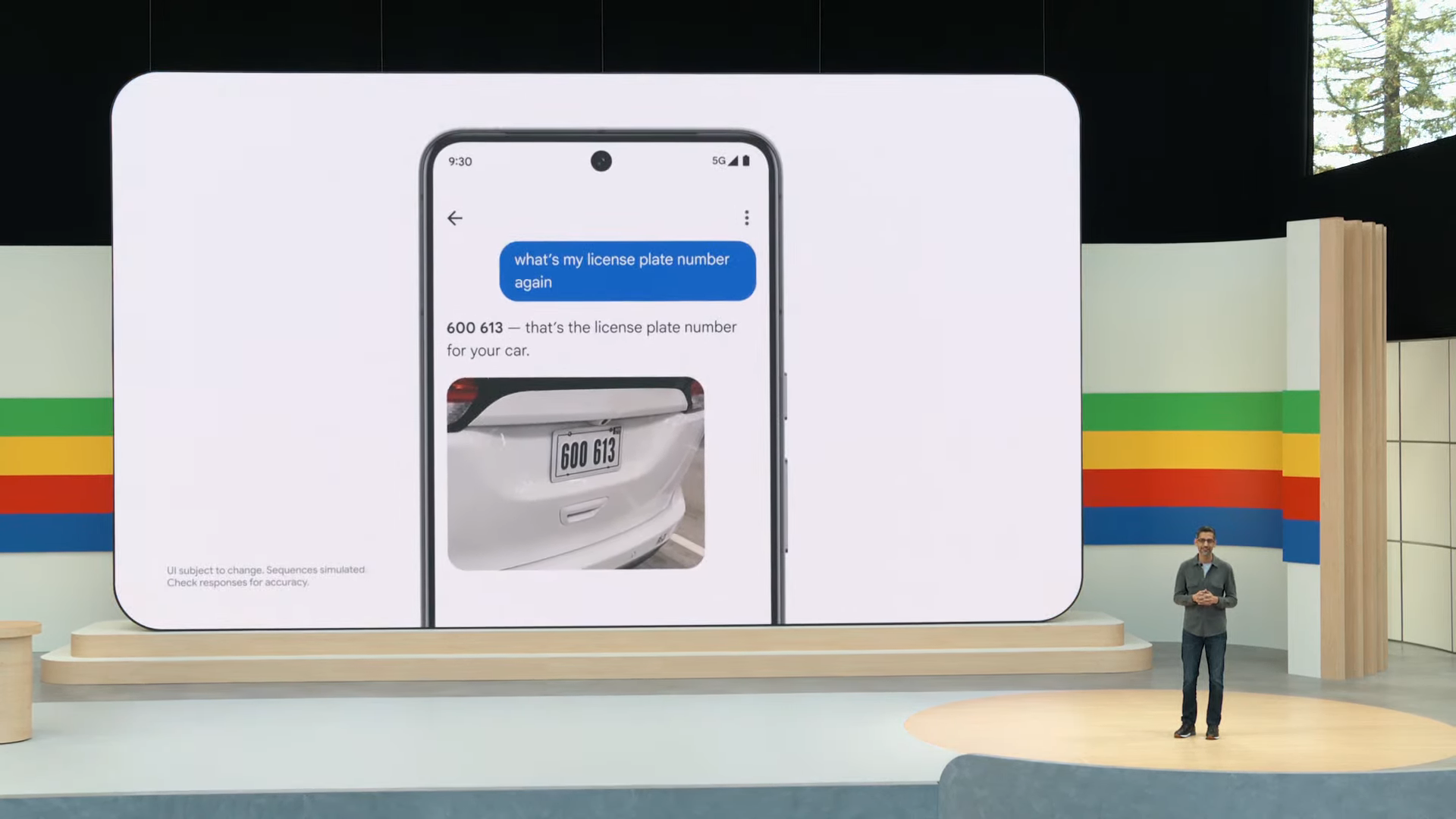

Gemini's memory feature

One Gemini feature I think could sneak into the keynote today is "Memory". This was actually first rumored last year (back when Gemini was still called Bard) but news has been quiet of late. In a nutshell, the feature lets you save facts (or other things) you want Gemini to remember which can then factor into future conversations.

Google is preparing the release of "Memory", a feature allowing you to save facts about yourself, or stuff you just want Gemini to remember. This feature *may* be released in the next few days. pic.twitter.com/dlNjOTCCXe (May 12, 2024)

According to a post on X by Dylan Roussel over the weekend, the feature could be launching "in the next few days" with a new UI to boot. Today's keynote would be the logical time for Google to provide us with an update.

The pre-show has started

Well, the pre-show has started courtesy of a guy in a multicoloured bathrobe and some "pulsating" music. I'm not really sure what to make of this but I hope the folks in the audience are entertained.

He's currently DJing with Google's MusicFX artificial intelligence experiment that lets you create Live Loops and experiment with sound. Perhaps Google is coming after Suno and Udio when it comes to creating the best AI music generator...

For a bit more context on this rather bizarre warm-up act, the DJ Mode in MusicFX gives you really granular control over the AI-generated sound, reacting in real time to changes rather than generating a new sound every time.

MusicFX can’t generate songs with vocals and refuses any request to include tracks mentioning an artist. But other than that it can generate backing tracks for a video, music for a game or just something to fill the space before Sundar Pichai walks on...

Google I/O 2024 keynote has begun

Google I/O 2024 has officially started and the first order of business appears to center around artificial intelligence. A quick video right at the start highlights much of Google's work around Gemini, with Sundar Pichai the first to come out on stage.

Sundar Pichai is highlighting all of Google's work around Gemini. "We want everyone to benefit from what Gemini can do," said Pichai. He also teases how Gemini will be intertwined into many of Google's services.

People are using Google Search in more ways than ever before, with longer and more complex queries. Also, AI Overviews is rolling out to everyone in the US and coming soon worldwide.

Google Photos just got a big AI upgrade with Gemini

Google Photos is tapping the power of Gemini to deliver more relevant searches through a new feature called Ask Photos with Gemini, which can search for and select photos based on context.

Google Workspace gets help with Gemini

Gemini is being integrated into more of Google's Workspace apps, like Gmail, where you can use Gemini to search emails, get highlights from a Google Meet call, and much more.


Wondering how Gemini will make Google Photos better? Check out how it can offer better search results with the help of Gemini.

Making AI helpful for everyone

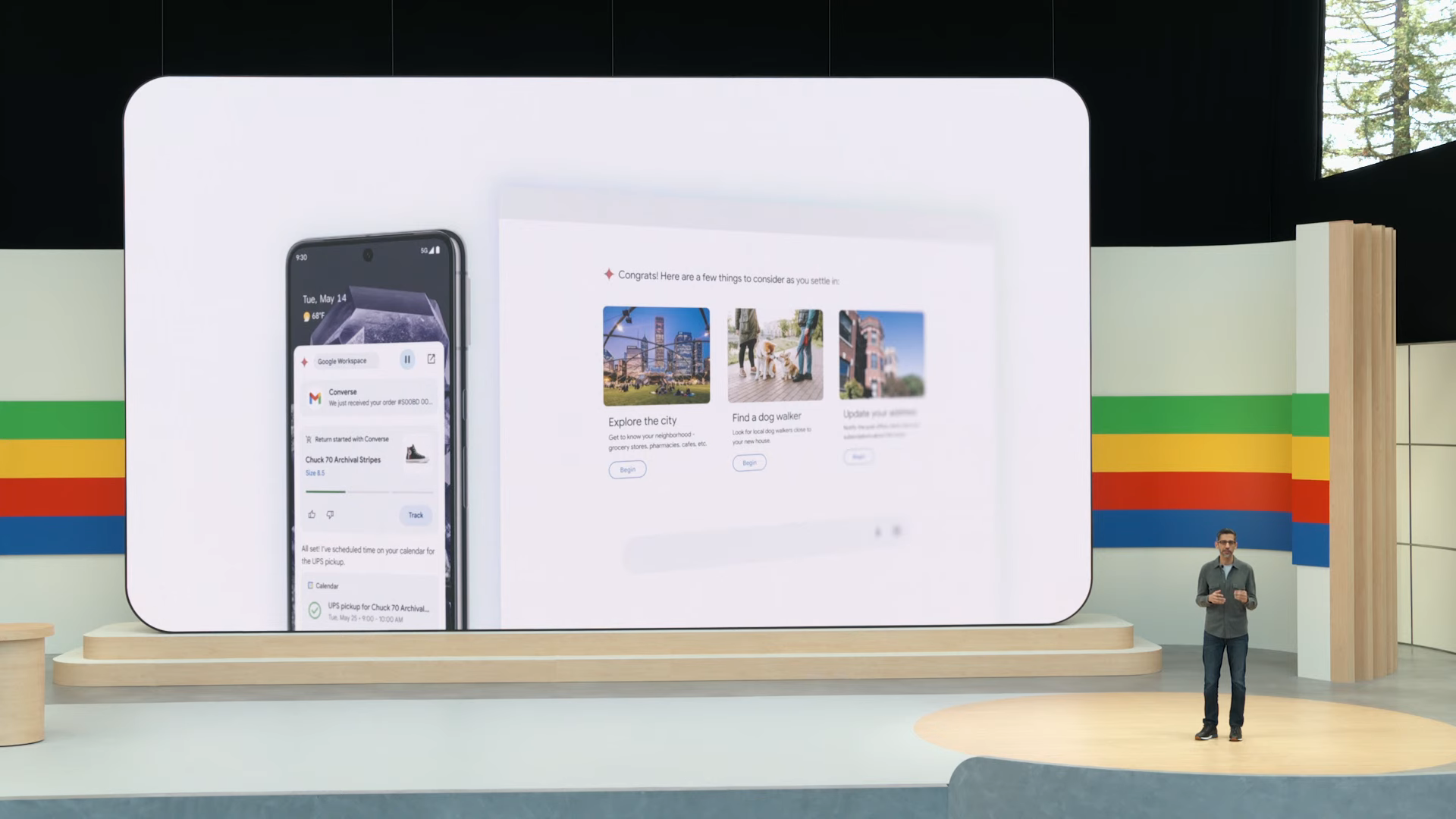

Gemini can be used for searching your phone, helping you to find receipts, scheduling a pickup window, and much more. With travel, Gemini can be used to search for useful and fun activities if you're planning a trip. Pichai says that Google is "Making AI helpful for everyone."

Project Astra uses video for Gemini analysis

Project Astra aims to use video to better deliver relevant answers. For example, you'll be able to use your phone's camera to ask what it is you're looking at, including having it analyze code and suggest relevant changes. Additionally, it's intelligent enough to use visual context clues in video to help you locate your belongings if you forget where you left them.

Imagen 3 brings more generative AI to media

Google is bringing creative ideas to life with new generative media models for photos and video. Google's Doug Eck introduced Imagen 3, a new image model built from the ground up and the company's most capable image model yet. It uses generative AI to produce even more realistic, detailed images, and it can also render text.

When it comes to music, Music AI Sandbox is a YouTube tool to help creators make more music by mixing different styles and creating something original.

Veo is Google's generative video model

Veo is Google's generative video model, which creates 1080p video based on prompts. It can be used in a tool called VideoFX, giving video editors and creators a new way of making video. Filmmaker Donald Glover has used Veo to produce videos that will be coming soon. Generating video from scratch is helping to advance the frontier of AI.

ML Compute Demand requires new hardware

Trillium is the next-generation TPU, which will be available to Cloud customers in late 2024.

Axion Processor is a custom ARM-based CPU.

AI Hypercomputer is a groundbreaking supercomputer architecture, which is made possible with liquid cooling at Google's data centers.

Google is aiming to invest even more into the future with these announcements.

Google Search in the Gemini era

AI Overviews will make Google Search better with more helpful results. One way the company is going to do this is with multi-step reasoning, which will let Google do all the research for you. For example, Gemini can research a yoga studio based on reviews.

Additionally, Gemini in Google Search can plan your meals for a day, pulling together recipes for all the dishes. If making them yourself is too much, Google Search can also help you find where you can buy those meals with Gemini's help.

Your search results page will also change with Gemini's help. It can find restaurants that have live music, for example, and even pull recommendations based on the season, like restaurants with rooftops.

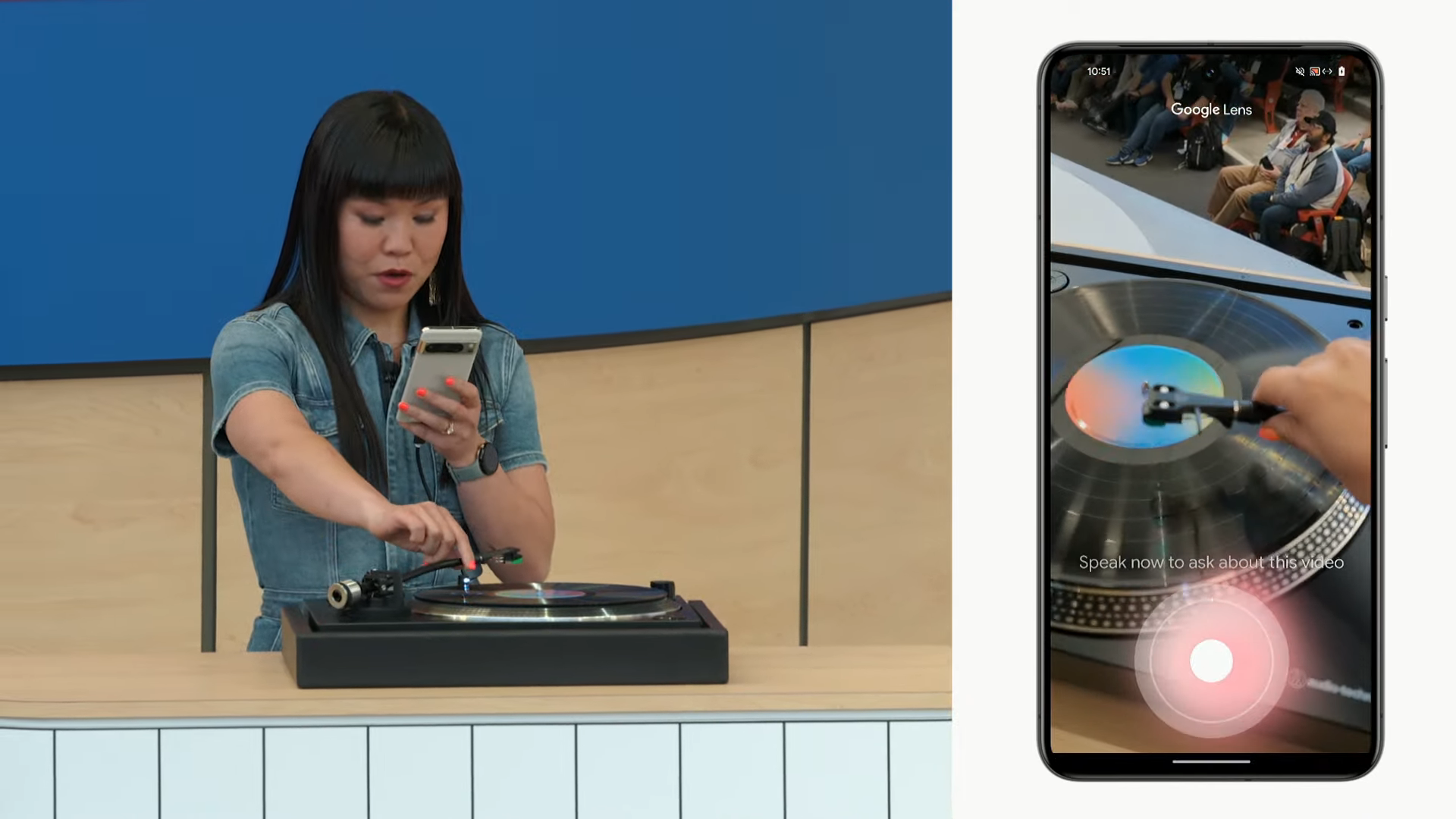

Ask Google with video search

Google's Rose Yao demos how she can fix a broken record player with the help of searching with video. She shows an example of how she's recording video and asks why the record player isn't working correctly. Google Search is able to search frame by frame to answer questions.


Here's how Google Search is getting a big upgrade with Gemini.

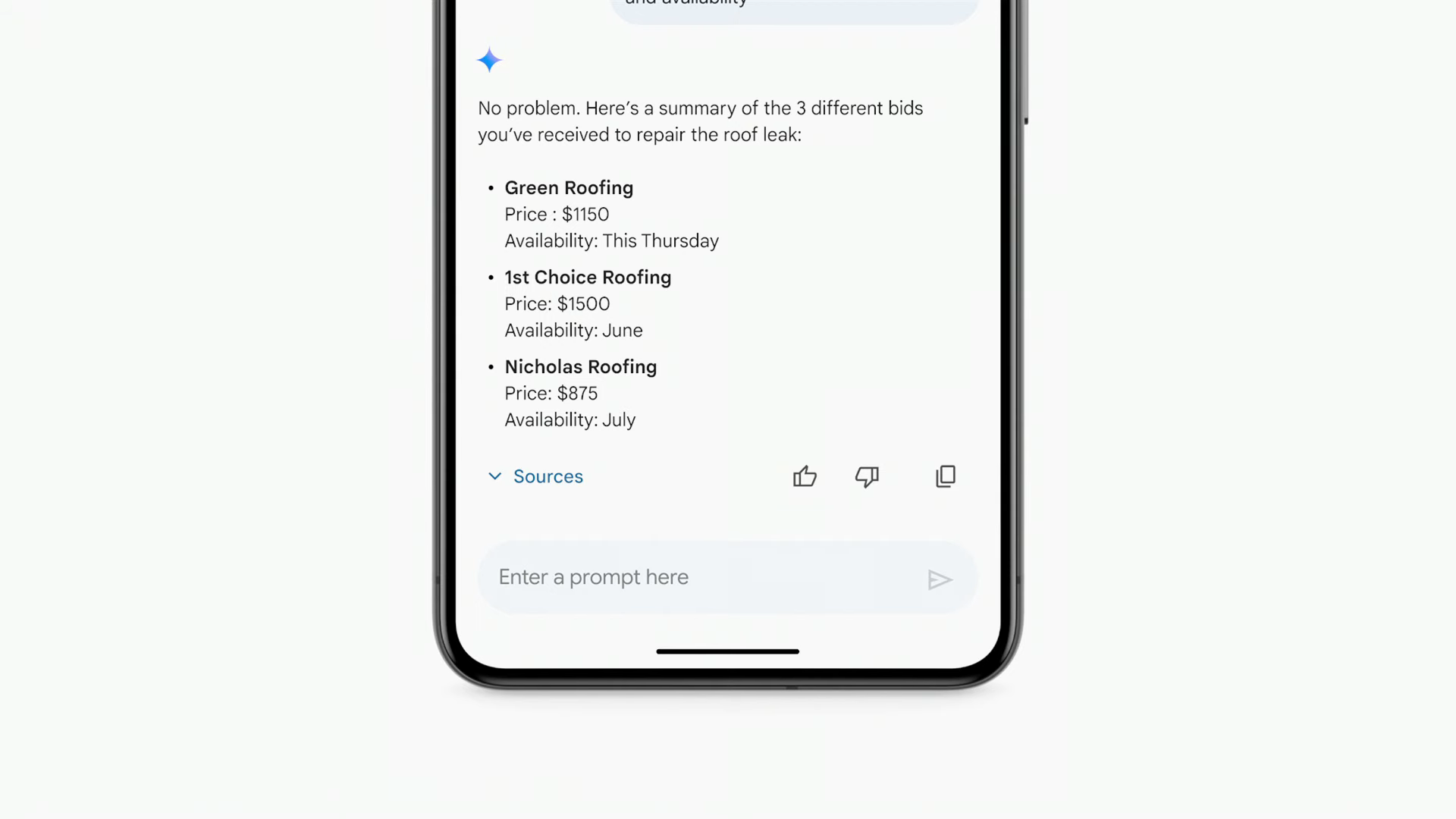

Gemini integrates more with Gmail

Gmail for mobile is getting a boost with Gemini, which will overlay on top of emails when it's initiated on your phone. Another example shows how Gemini can search your email and even compare quotes for a roof repair. Gmail's smart replies will include Gemini's recommendations, complete with links and prices for services that offer roof repairs.

Gemini can handle even more complex tasks, like taking receipts scattered across different emails and creating a spreadsheet that organizes them in one place. Rather than you tracking down all of those emails and receipts, Gemini does all the work for you.

In this example of a receipts tracker, Gemini can also analyze the data and uncover things like where the money is being spent.

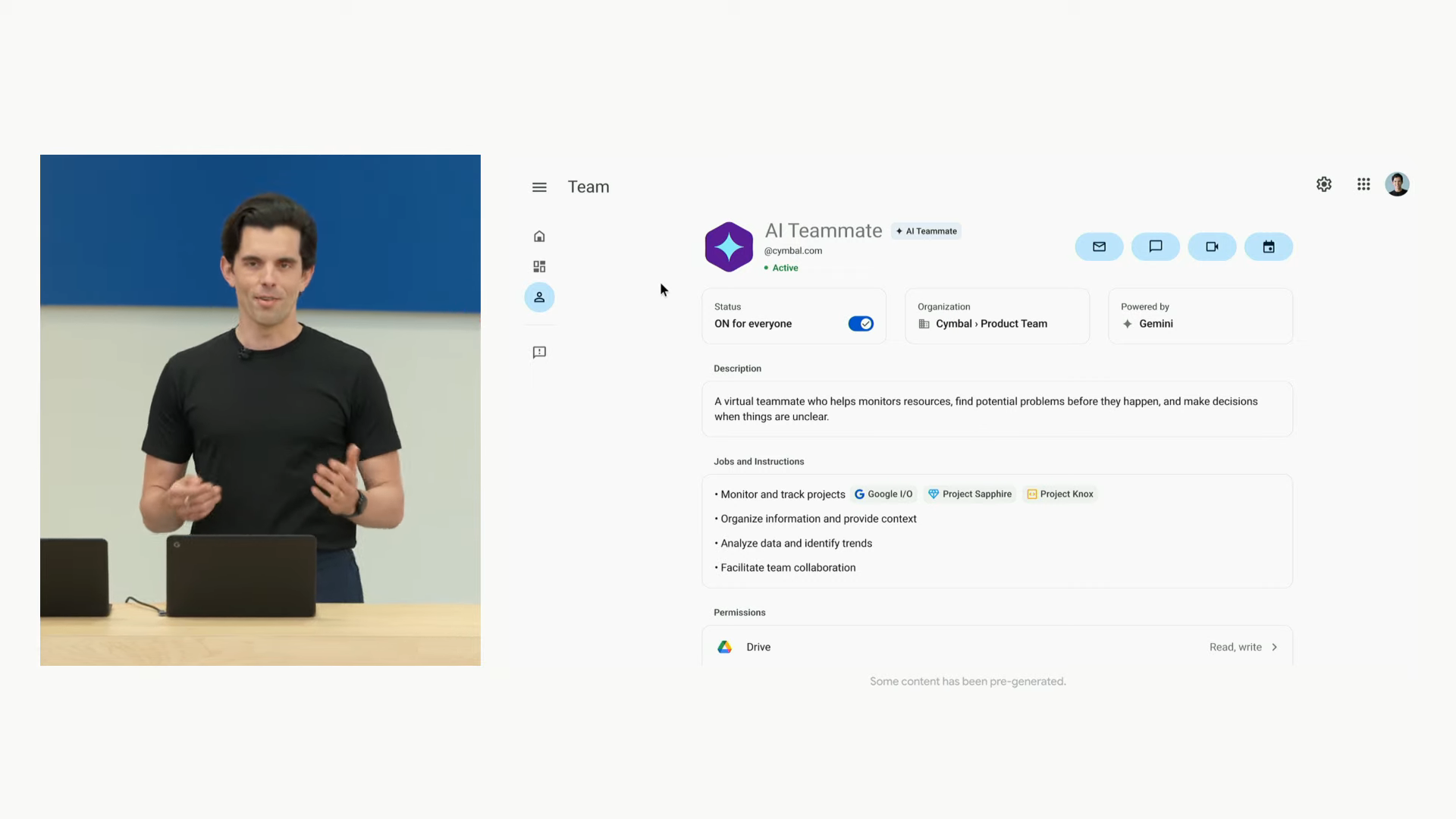

Gemini AI Teammate shows how AI can take on a job

A virtual Gemini teammate can be used for collaboration among different people in Google Chat, much like having a new member on your team to track expenses and schedules. You can even give this virtual Gemini teammate its own name.

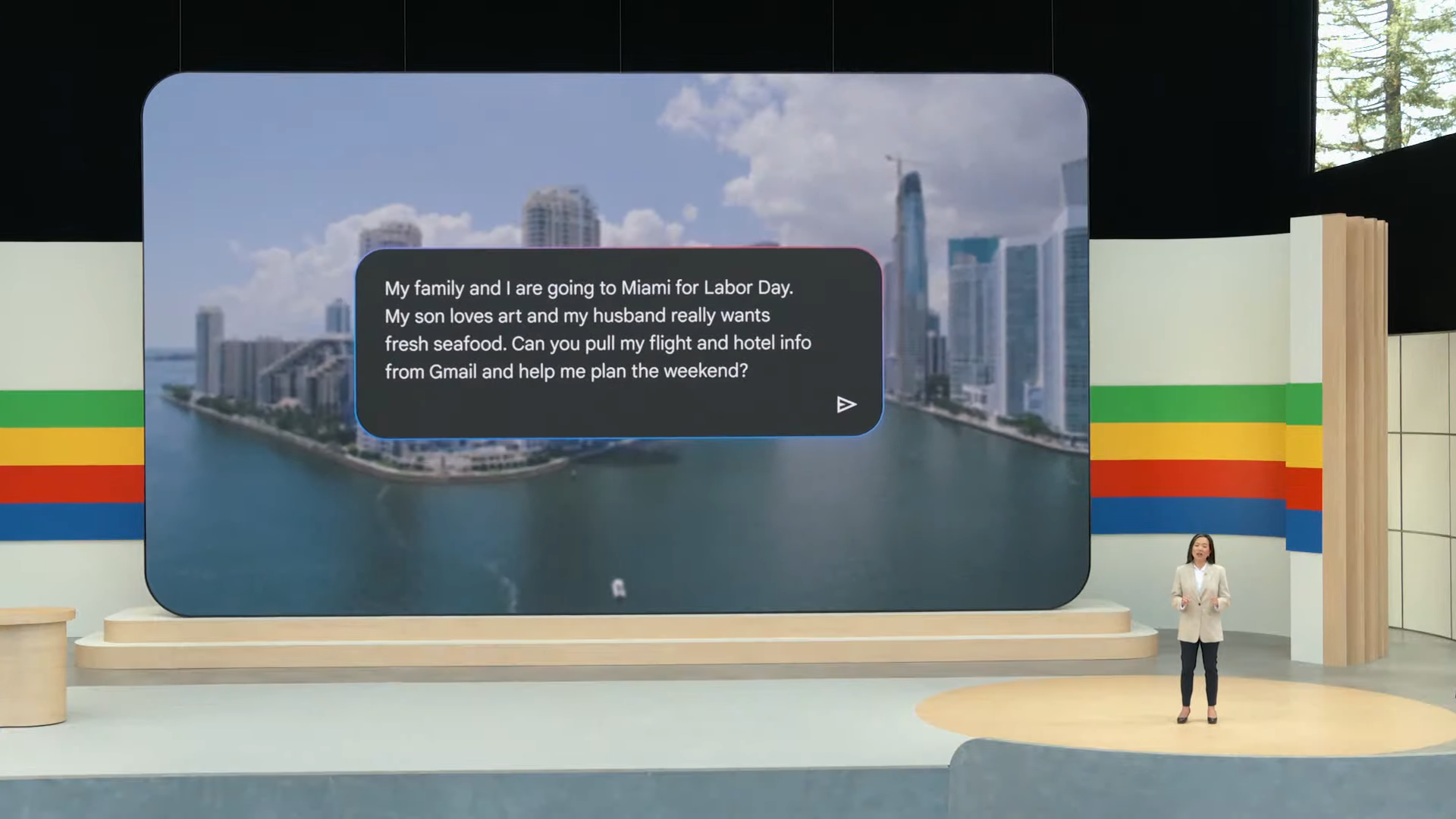

Gemini is becoming a personal assistant

A new trip planning experience in Gemini Advanced can plan everything using Search, shopping, and Maps. It can generate an itinerary based on what you're looking to do.

More AI coming to your Android phone

Google's Sameer Samat is on stage to talk more about Android with AI at the core, with three breakthroughs coming this year: better searching on your Android phone, Gemini becoming your AI assistant, and on-device AI unlocking new experiences.

Circle to Search will be broadly rolled out to more Android phones, no longer limited to Samsung's Galaxy and Google's Pixel phones.

Gemini gets new features on Android

Gemini on Android is more context-aware to provide helpful actions. It's also overlaid on top of whatever app you're using, so you don't have to switch back and forth. There's also a neat feature that lets you drag and drop images from the Gemini app into another one.

Another demo shows how Gemini can analyze a long PDF document for even better responses, rather than the user searching through the entire document to find an answer to their question.

Google's Josh Woodward details Gemini 1.5 Pro and Flash's pricing. Gemini 1.5 Flash is priced at 35 cents for 1 million tokens, which is very cheap compared to GPT-4o's rate of $5 per 1 million tokens.

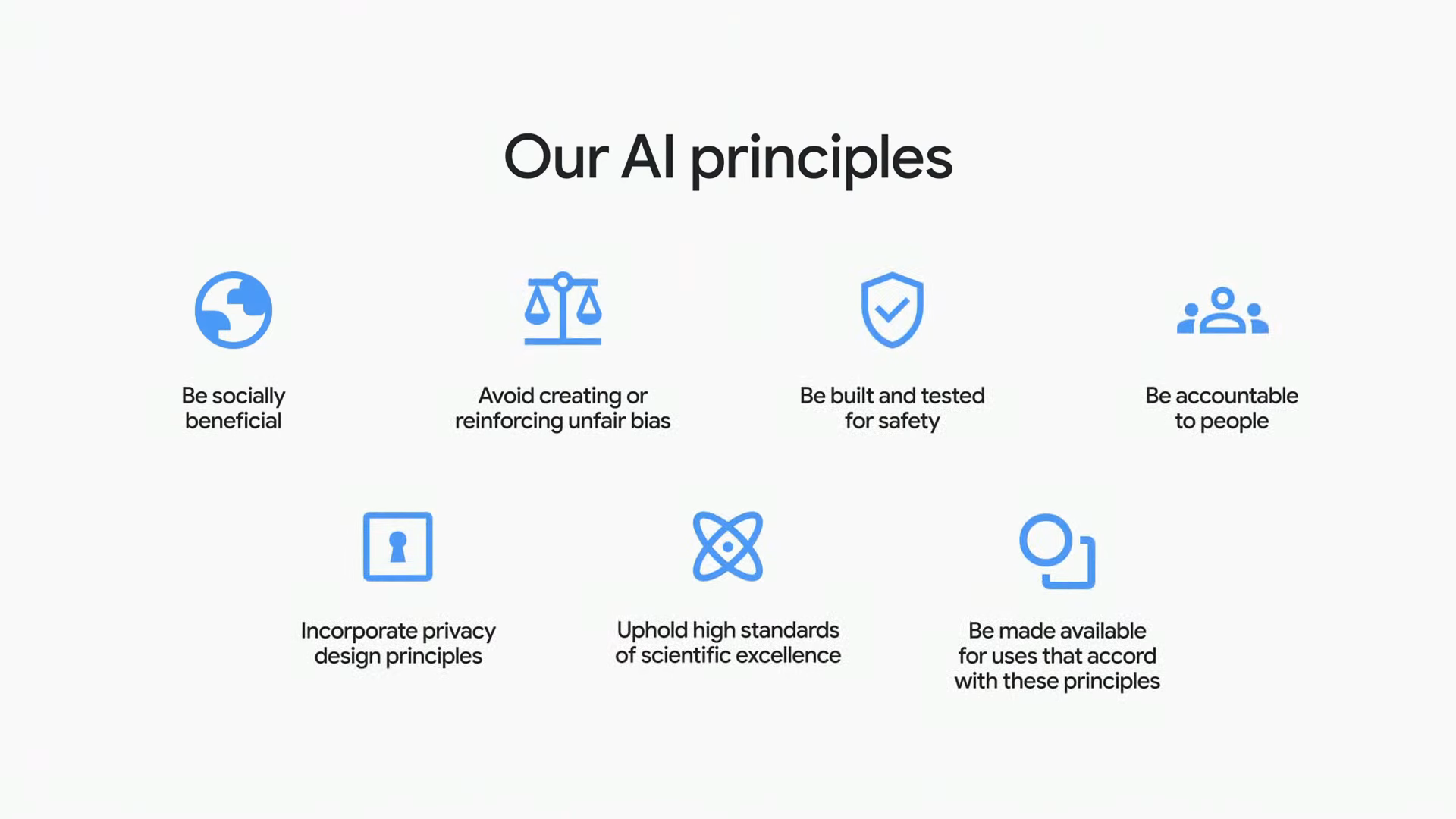

Google is ensuring that its AI services will follow its principles to protect and safeguard how they're used. There will also be various watermarks stamped into images, audio, and video that are generated with the help of generative AI.

For learning and education, LearnLM is a new model that will make these AI experiences more personal and engaging.

Pre-made Gems, like Learning Coach, will provide step-by-step guidance when it comes to teaching. Rather than giving you the direct answer to your question, Learning Coach will use guidance to help you understand how to get the answer.

And that's a wrap folks! Unlike past Google I/O keynotes, there were no hardware announcements of any kind. Instead, the focus was strictly around Gemini and how this AI model is being integrated into more of Google's services.

The Next Google Glass?!

Was that Google Glass? No, but Google did show off a pair of smart glasses as part of its demo of Project Astra. This new voice assistant, which is coming to Gemini Live on phones, can see the world around you and can do everything from identifying landmarks to helping solve equations. But a video demo during I/O showed a big surprise: this tech is coming to other form factors, including smart glasses.

Playing with Project Astra

Project Astra was one of the eye-catching announcements from I/O and is, essentially, a real-time, camera-based AI that can do anything from identifying an object in frame to crafting a fictional story about said object.

While a public version is still a little way off, Managing Editor Kate Kozuch got to try a demo of the technology in person at I/O. For the purpose of the demo, Google hooked up a stationary top-down camera to a machine running Gemini 1.5.

When presented with an array of dinosaur figurines, Gemini not only identified the species of each one but came up with names and adventurous storylines that seemed surprisingly suitable.


Get Google's "most capable AI model" for free

Everyone likes something for free, right? Well, Google is currently offering a two-month free trial of Gemini Advanced, the premium version of its Gemini chatbot.

This gives you access to the newly-announced Gemini 1.5 Pro model as well as a few extra goodies like 2TB of storage for Google One and access to Gemini in Workspace. The latter means Gemini can help you craft emails or give your written reports in Docs a little extra polish.

If you're interested in giving it a try, the sign-up page is here. However, it's not yet available outside the U.S., so it's no bueno if you attempt to sign up from London, like I just tried to do.

Can AI save us from email?

It's fair to say, I rather dislike dealing with emails so any help I can get in sorting through the vast amounts of the things I receive on a daily basis is a win. The AI upgrades Google has planned for Gmail are, therefore, my cup of tea.

During the keynote, Google showed a new Summarize Emails feature that I can't wait to get using. It lets you ask for something specific within the context of a long and probably boring email and, as the name suggests, will summarize those in neat and tidy bullet points.

Hallelujah.


Soccer coaching courtesy of Gemini

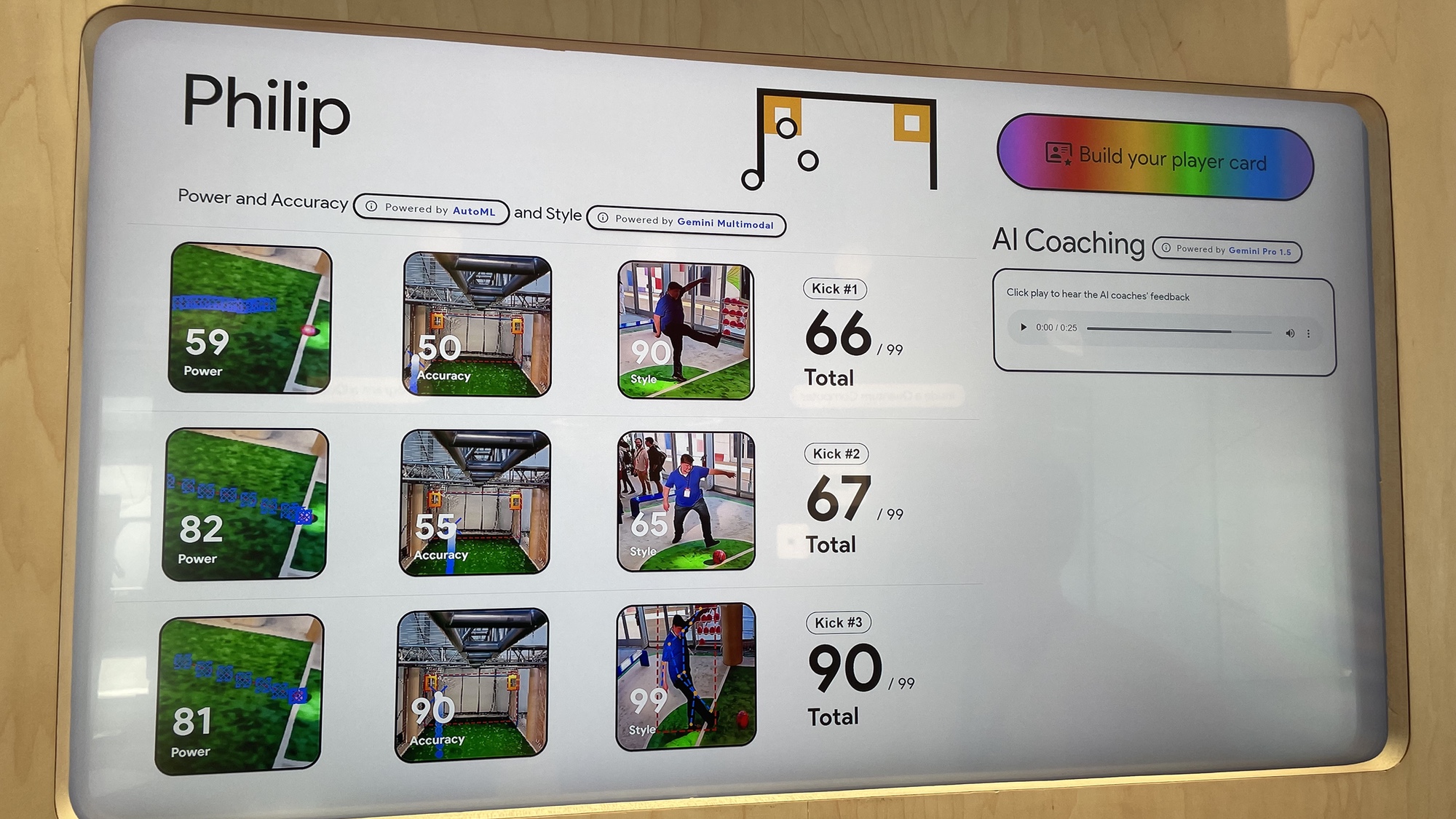

My colleague, and Tom's Guide phone editor, Philip Michaels was at the I/O keynote yesterday. And while there wasn't any actual phone hardware to get his hands on, Phil did get a chance to enjoy a spot of AI-based coaching when it came to his soccer skills.

As Phil tells it: "My favorite demo at Google I/O allowed me to kick a soccer ball in anger and use AI analysis to pinpoint where my wayward penalty kicks had gone so wrong. I had three attempts to kick the ball at goal while cameras measured my form to produce scores for power, accuracy and style."

The impressive part was the data wasn't just fed back to him in numbers and graphs. Google used Gemini 1.5 Pro to interpret the data so an AI coach could vocalise the feedback directly, letting Phil know he probably shouldn't give up the day job.

Gemini Live vs GPT-4o

Gemini Live was a big deal during the I/O keynote and clearly meant as a rival to OpenAI's ChatGPT Voice. So, how do the two compare?

Both offer a conversational, natural language voice interface, both offer the potential for live video analysis through a smartphone camera and both seem to be fast enough for a truly natural conversation where you can interrupt the AI mid-flow.

However, ChatGPT Voice sounds more natural, can detect and respond to emotion and vocal tones and even adapt in real-time to how you ask it to speak. Our early verdict is that Google has a bit of catching up to do.

What the competition is doing...

The reason Google is going so hard on AI surely has something to do with the looming spectre of OpenAI and ChatGPT. In case you missed it, OpenAI held its own event earlier in the week to reveal what it's been working on.

Headline news is the introduction of GPT-4o, a new model that's both much faster and more efficient than the current GPT-4. What's more, OpenAI is rolling it out to its free tier of users, meaning they'll get access to custom GPTs and the GPT Store, which hosts chatbots and tools built by users. All that without having to hand over $20 for the premium tier.

Given that Google built much of its empire on "free" tools like Photos, Docs and Drive — not to mention Search and YouTube — the news from OpenAI must have rattled some cages over at Mountain View.

Philip Michaels here, back from a day spent in Google's company at I/O. Day 2 is a much more low-key affair, so I'm watching from afar. And one of the first things Google did today was release Android 15 beta 2.

What are the highlights of this new version? My colleague Tom Pritchard found 7 Android 15 changes to get excited about, such as Private Space for tucking away sensitive apps and the ability to add passes to Google Wallet with just a photo. There are also some security features, including Theft Detection Lock, which taps into AI to determine if someone's swiped your phone. Because let no update at Google take place these days if it doesn't include AI.

Wednesday's other big software announcement from Google involved Wear OS 5, the software that supports a number of smartwatches, including Google's own Pixel Watches. The story here is power efficiency — as in, that's what Wear OS 5 offers. Run a marathon with the new software, Google says, and you'll have 20% more battery left compared to if you were using a Wear OS 4-powered watch.

Wear OS 5 also adds some metrics including Ground Contact Time, Stride Length, Vertical Oscillation, and Vertical Ratio. This is not exactly ground-breaking since, as my colleague Kate Kozuch points out, the Apple Watch has been able to monitor such things since 2022.

There's one other change to Wear OS 5 — it introduces a new version of Watch Face Format, which you use for customization purposes when creating a watch face.

One of the multitude of AI announcements from Google this week was Veo, an AI model that can generate high-def video using text prompts. A standout feature in Veo is its apparent ability to understand cinematic terms, so that you can tell it to do a time-lapse video or use an aerial shot and Veo will comply.

Google says that Veo can generate more than 1 minute of video footage, though how much more than 1 minute is unclear. We do have some Google Veo samples, though, to give us some idea of what the videos look like and how they compare to OpenAI's Sora video generator.

While I was at Google I/O on Tuesday, I got the chance to sit in a Rivian R1S and watch videos on the EV's 15.6-inch infotainment system. No, I wasn't slacking off — rather, it was a demo of a newly announced Android feature that's going to add Google Cast support to cars with Android Automotive.

The idea is that when you're at a charging station and topping off the battery for your Rivian vehicle, you've got a lot of sitting around and waiting to do. So why not spend the time watching something? This Android update means you can now beam any of the 3,000 or so cast-enabled apps to the screen in your car.

Rivian will be the first automaker to gain this support, but others are coming, Google says.

The Wear OS 5 announcement by Google today details some of the new health metrics coming to Google's smartwatch software. These are also metrics that rival devices, namely the Apple Watch, have already been able to track. Senior writer for fitness Dan Bracaglia takes a look at how Google is trying to catch up to Apple and Garmin with Wear OS 5.