From chatbots to Live intelligence: The evolution of Google’s Gemini

The Gemini ecosystem has evolved at breakneck speed. What began as Google’s rebranding and re-architecting of Bard has grown into a full-stack AI platform — with models that can understand text, images, and audio and integrate with tools to help users get things done.

Early Gemini versions laid the groundwork, and Gemini 1.5 introduced massive context windows of up to approximately one million tokens, enabling users to work with very large documents and long videos in a single session.

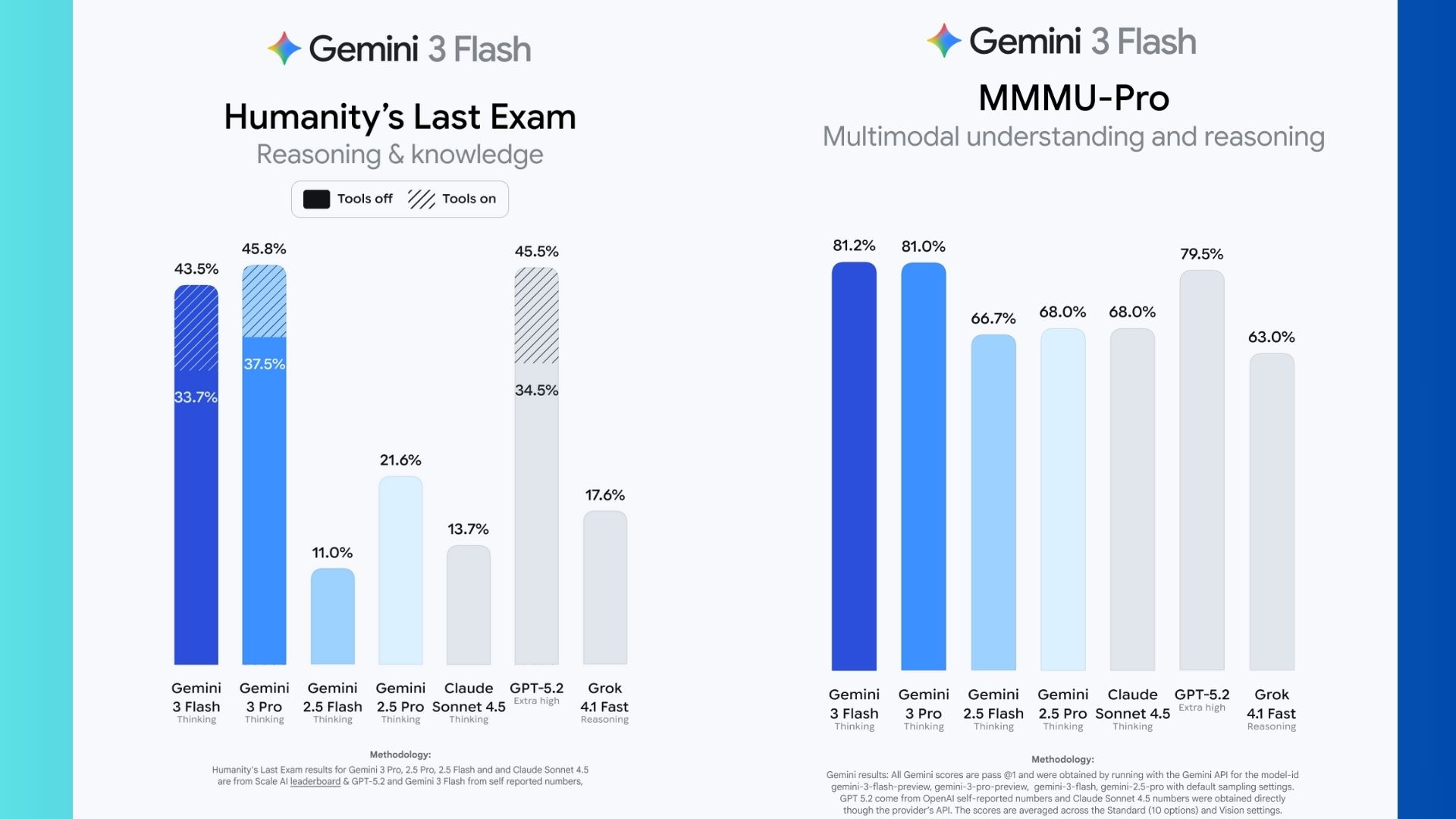

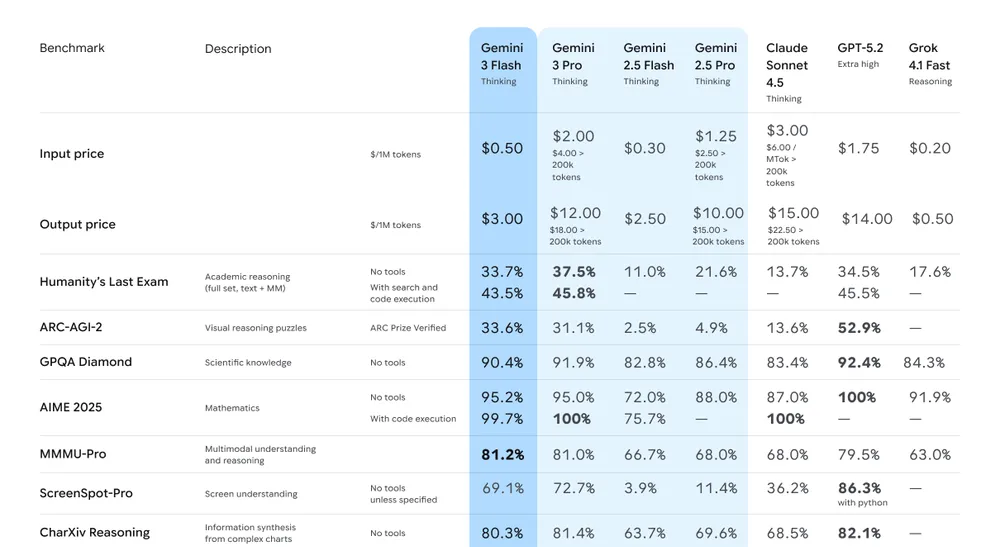

By late 2024, Gemini 2.0 expanded the focus beyond answering questions to supporting agent-style workflows, where the model assists with multi-step tasks and automation. Subsequent variants like Gemini 2.5 Pro and 2.5 Flash further improved reasoning, speed, and efficiency, placing highly on many public benchmarking suites for coding and complex problem-solving.

The new standard: Gemini 3 & Gemini 3 Flash

As of early 2026, Google’s focus has shifted toward the Gemini 3 generation — marking the next major phase in its AI roadmap. This era emphasizes native multimodality, with models designed to reason across text, audio, images, and video while maintaining shared context between them.

Gemini 3 represents Google’s most capable general-purpose model to date, built for deep reasoning and nuanced collaboration. Rather than simply answering questions, it’s positioned as a thought partner for complex, multi-step projects where planning, synthesis, and judgment matter.

Gemini 3 Flash is the speed-optimized counterpart, engineered for low-latency interactions such as Gemini Live. It prioritizes fast response times and efficiency, enabling more natural, flowing voice conversations while delivering high-tier intelligence at a lower cost.

Nano Banana (image generation), originally tied to Gemini 2.5 Flash Image and now associated with the Gemini 3 generation, is known for strong character consistency, allowing users to edit photos or generate new images while keeping subjects visually coherent across multiple frames. These capabilities are surfacing across products like Google Photos’ AI editing features and experimental creative tools.

Veo 3.1 (video generation) brings high-fidelity, cinematic video generation into consumer workflows, supporting up to 1080p output. Veo is beginning to integrate with creator platforms, including early experiments connected to YouTube’s short-form ecosystem, making high-quality AI video more accessible to everyday creators.

What is Gemini?

Gemini is Google’s multimodal AI ecosystem — not just a single chatbot or model.

The Gemini model family is designed to work across multiple formats, meaning it doesn’t train or operate on text alone. Gemini models can process and generate written language, images, video, audio, and computer code, often within the same session. This places Gemini in the same class as OpenAI’s GPT-4o and GPT-5, with full multimodal input and output becoming standard starting with Gemini 2.0.

In typical Google fashion, many of Gemini’s most advanced capabilities have been quietly iterated on months before public launches. Recent releases have surfaced features that more heavily marketed rivals sometimes lag on — such as vertical video generation in Veo 3 and prompt-driven photo editing through Nano Banana. These tools have gone viral quickly, pulling millions of new users into the Gemini ecosystem.

On the open-weight side, the scale is staggering. Tens of thousands of models derived from Gemma, Google’s open model family built on Gemini research, now exist on platforms like Hugging Face, fine-tuned for specific languages, industries, and tasks. But that rapid expansion has also created confusion. The fast-paced rollout of Gemini 1.5, Gemini 2.0, and later Gemini 2.5 Pro and Flash variants has blurred the lines between foundational models and their specialized offshoots.

The key thing to understand is that Google uses the Gemini name to describe both its underlying model technology and the applications built on top of it. Gemini Pro, Flash, Nano, Ultra, Veo and Nano Banana aren’t separate products so much as different layers and expressions of the same AI stack. Once you see Gemini as an ecosystem — not a single model — the naming starts to make sense.

1. Models

In the beginning was DeepMind, the AI lab founded in London in 2010 and acquired by Google in 2014. Together with Google’s own research teams, this foundation stone of the AI industry delivered the LaMDA, PaLM, and Gato AI models to the world. Gemini is the latest iteration of this generational family.

Version 1.0 of the Gemini model launched in three flavors: Ultra, Pro, and Nano. As the names suggest, the models ranged from high-powered versions down to petite ones designed to run on phones and other small devices.

Note that much of the confusion from the subsequent launches has come about because of Google's philosophical tussle between its search and AI businesses.

AI cannibalism of search has always been a sword hanging above the company’s head, and has contributed mightily to its ‘will they, won’t they’ attitude towards releasing AI products.

2. Applications

Google is both a research and a product company. DeepMind and Google AI lead the research and release the models. The other side of Google takes those models and puts them into products. This includes hardware, software and services.

Chatbots

Google’s chatbot narrative has evolved rapidly, and, true to Silicon Valley form, the naming conventions have gotten a little murky.

Originally launched as Bard, the chatbot was rebranded as Gemini in early 2024, absorbing the Duet AI brand and arriving alongside a new Android app. Since then, Gemini chat has become the conversational backbone across a host of Google products, from Google Assistant on Android to Chrome, Google Photos, and Workspace. Today, both the classic Assistant and Gemini Chat coexist on Android, giving users a choice between familiarity and brainier AI.

Enter Gemini Live: Google’s answer to OpenAI’s Advanced Voice Mode. It enables low-latency, natural, back-and-forth voice conversations, complete with visual cues and deep app integration. Importantly, this feature now reaches into Google Workspace and enterprise accounts, not just personal profiles.

Gemini is also moving into your living room. Gemini for Home has already rolled out on Google Home and Nest devices, gradually replacing Google Assistant. It’s designed for tasks like media playback, smart-home control, cooking help, and more intuitive conversation. Gemini Live will power this smarter assistant, keeping it hands-free and proactive.

Meanwhile, the Gemini app keeps getting smarter, too. It now supports:

- Audio file uploads, with free users getting up to 10 minutes and five prompts per day. AI Pro and Ultra users get much more generous quotas and file-type flexibility.

- Powerful image editing capabilities via the latest model (think outfit changes, style transfers, multi-stage edits), all built on the Gemini 2.5 Flash Image engine (aka Nano Banana). Every Gemini-generated image includes both a visible watermark and an invisible SynthID watermark.

- Photo-to-video conversions powered by Veo 3: eight-second clips with synchronized sound that are now available to Pro and Ultra users, right within the Gemini app.

Products

While Gemini as a chatbot may get the most attention from AI power users, the biggest audience for Google’s AI is increasingly on mobile.

That exposure comes in two main forms: the standalone Gemini app on iPhone and Android, and Gemini’s deep integration into the Android operating system itself.

On Android, developers can take advantage of Gemini Nano, an on-device model that enables basic AI tasks like summarization, classification, and smart replies without relying on cloud-based models. This allows certain AI features to run faster, more privately, and without ongoing inference costs.

That same deep integration also lets Gemini trigger supported system actions and power Gemini Live, Google’s conversational AI voice assistant, which can handle tasks like playing music, answering questions, and managing follow-up requests in natural language.

Experiments

The latest Gemini model launch has been accompanied by a series of major Google application releases or previews tied into the new model. The list is long and impressive. Some of them include:

- Project Astra: A spectacular demonstration of real-time visual and audio understanding for AI assistants, showcasing how continuous multimodal perception and memory can enable more natural, context-aware interactions.

- Project Mariner: A powerful showcase of multimodal, agentic AI applied to real-world workflows — focused on taking actions across tools and environments rather than simply answering questions.

- NotebookLM: A genuinely new paradigm for research and study, centered on source-grounded reasoning that helps users synthesize, explain, and explore their own documents with a lower risk of hallucination.

- Deep Research: An agentic research capability within the Gemini ecosystem that combines large context windows with multi-step search, synthesis, and reasoning to tackle complex research tasks.