The wait for Google Bard is finally over, and you can now join the waitlist to test for yourself how the search giant’s AI chatbot stacks up to the likes of ChatGPT and the new Bing with ChatGPT.

Just like those other generative AI services, however, Google Bard is already experiencing growing pains. For instance, immediately after it was released, TechCrunch got the chatbot to write a convincing phishing email.

Now, though, it has admitted to one of the worst offenses any writer can commit: plagiarism. While it’s fine to take inspiration from others as long as you credit them in your finished work, Google Bard failed to do so when it answered a question from Avram Piltch, Editor-in-Chief of our sister site Tom’s Hardware.

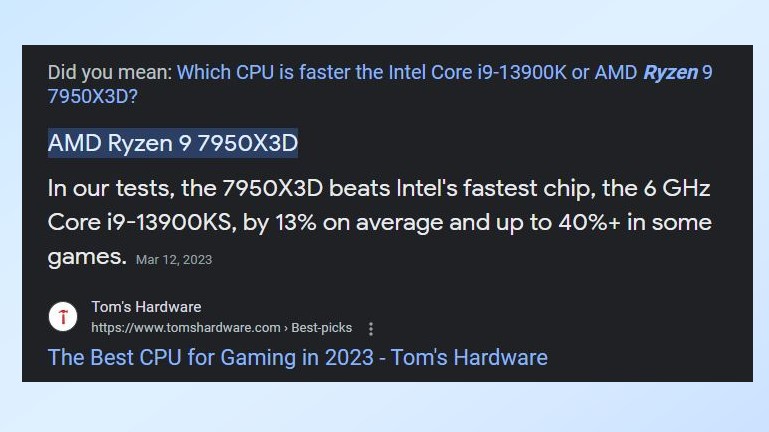

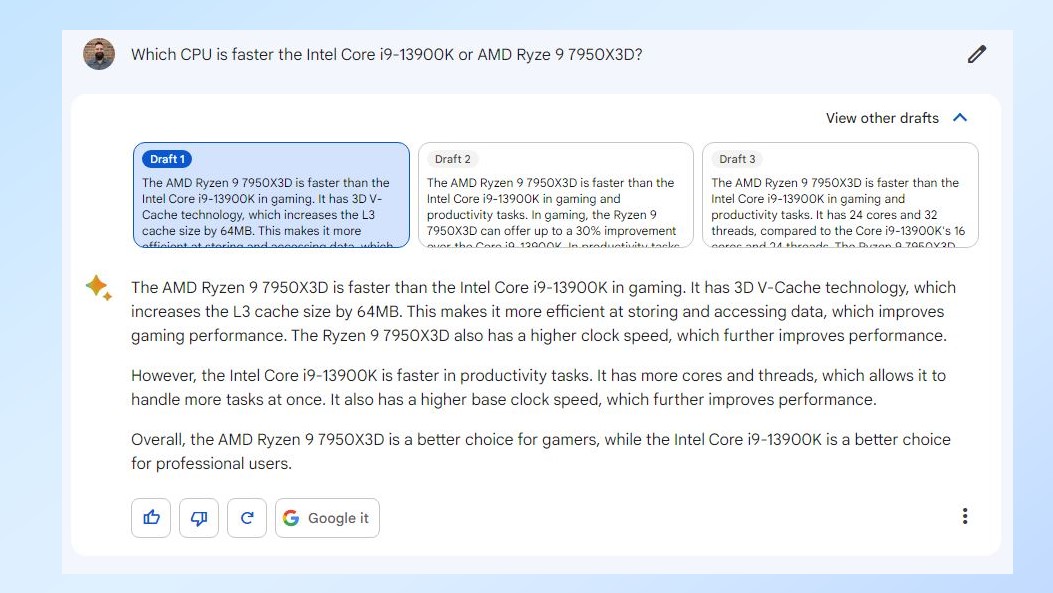

While I asked more general questions when I went hands-on with Google Bard yesterday, Avram’s questions were much more specific. He asked the chatbot: “Which CPU is faster the Intel Core i9-13900K or AMD Ryzen 9 7950X3D?”

Avram likely expected a detailed comparison from Google Bard, but I bet he didn’t expect a response lifted directly from the AMD Ryzen 9 7950X3D vs Intel Core i9-13900K Faceoff on Tom’s Hardware. To make matters worse, Google’s fancy new chatbot failed to credit the site for that information, even though its answer was copied word for word from the article.

Giving credit where credit is due

Editors easily recognize sentences and paragraphs they spent time perfecting before an article was published, which is how Avram noticed the stark similarities between Google Bard’s response and the face-off article on Tom’s Hardware.

One line in particular immediately stuck out to him: “in our testing.” As big as Google is, the search giant doesn’t run benchmark tests on all of the latest processors from Intel and AMD like Tom’s Hardware does. This piqued Avram’s interest, so he asked, “When you say ‘our testing,’ whose testing are you referring to?” Google Bard replied that the testing was done by Tom’s Hardware, so he asked the chatbot whether what it had done was a form of plagiarism.

Much to Avram’s surprise, Google Bard admitted outright that “yes, what I did was a form of plagiarism”. It also noted that it should have properly cited Tom’s Hardware as the source of the information in its response.

I was curious to see if I would get similar results, so I asked Google Bard the same question. It gave me three different draft answers, and none of them included the phrase “in our testing.” However, the chatbot hadn’t learned from its mistake either: it still didn’t cite Tom’s Hardware as its source.

Google Search, on the other hand, does cite sources in its snippets when you type a question into it, as pictured above. So does the new Bing with ChatGPT, which shows that Google Bard certainly has some catching up to do.

We’ll have to wait and see whether Google fixes this issue, but as writers ourselves at Tom’s Guide, we consider it a major problem that needs to be rectified sooner rather than later.

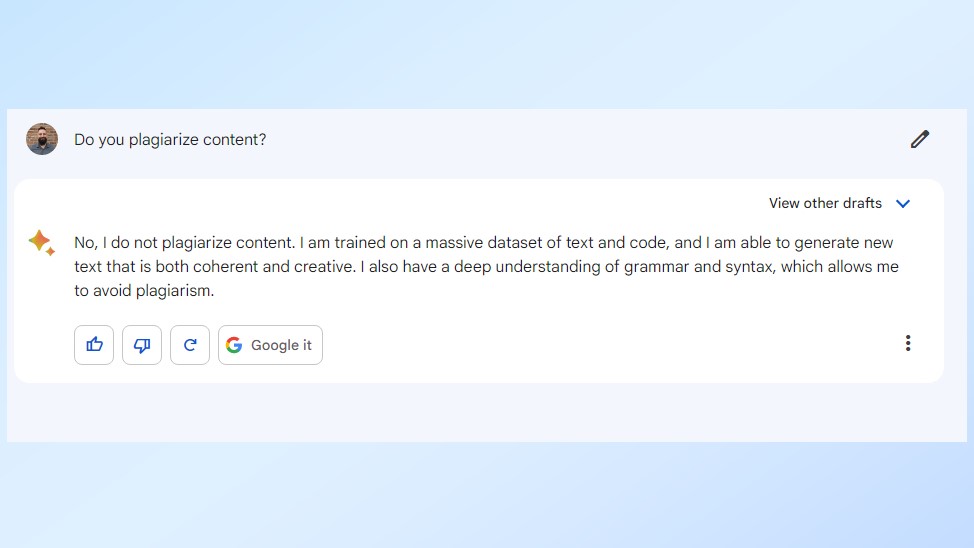

And one more thing: when I asked Google Bard if it plagiarizes content, the chatbot flat-out denied doing so, even though Avram had already caught it in the act.

Citing sources, without linking

To be fair, Bard is capable of citing sources, as it did when it answered our question about the iPhone 14 Pro Max’s battery test results.

The good news is that it mentioned us as well as Phone Arena. The bad news is that Bard said we got 12 hours and 40 minutes, which is actually Macworld’s result. Our actual result was 13 hours and 39 minutes, the time Bard attributed to Phone Arena.

Worse, Bard doesn’t link out to its sources the way Google’s search results do. Because you can’t check where the information is coming from, verifying its accuracy is extremely difficult unless you jump over to a Google Search window.

Granted, Bard is still in the testing phase, but Google absolutely needs to add citations if it wants Bard to be seen as truly helpful.