It turns out that pop stars Drake and The Weeknd didn’t suddenly drop a new track that went viral on TikTok and YouTube in April 2023. The photograph that won an international photography competition that same month wasn’t a real photograph. And the image of Pope Francis sporting a Balenciaga jacket that appeared in March 2023? That was also a fake.

All were made with the help of generative AI, the new technology that can generate humanlike text, audio and images on demand through programs such as ChatGPT, Midjourney and Bard, among others.

There’s certainly something unsettling about the ease with which people can be duped by these fakes, and I see it as a harbinger of an authenticity crisis that raises some difficult questions.

How will voters know whether a video of a political candidate saying something offensive was real or generated by AI? Will people be willing to pay artists for their work when AI can create something visually stunning? Why follow certain authors when stories in their writing style will be freely circulating on the internet?

I’ve been seeing the anxiety play out all around me at Stanford University, where I’m a professor and also lead a large generative AI and education initiative.

With text, image, audio and video all becoming easier for anyone to produce through new generative AI tools, I believe people are going to need to reexamine and recalibrate how authenticity is judged in the first place.

Fortunately, social science offers some guidance.

The many faces of authenticity

Long before generative AI and ChatGPT rose to the fore, people had been probing what makes something feel authentic.

When a real estate agent is gushing over a property they are trying to sell you, are they being authentic or just trying to close the deal? Is that stylish acquaintance wearing authentic designer fashion or a mass-produced knock-off? As you mature, how do you discover your authentic self?

These are not just philosophical exercises. Neuroscience research has shown that believing a piece of art is authentic will activate the brain’s reward centers in ways that viewing something you’ve been told is a forgery won’t.

Authenticity also matters because it is a social glue that reinforces trust. Take the social media misinformation crisis, in which fake news has been inadvertently spread and authentic news decreed fake.

In short, authenticity matters, for both individuals and society as a whole.

But what actually makes something feel authentic?

Psychologist George Newman has explored this question in a series of studies. He found that there are three major dimensions of authenticity.

One of those is historical authenticity, or whether an object is truly from the time, place and person someone claims it to be from. An actual painting made by Rembrandt would have historical authenticity; a modern forgery would not.

A second dimension of authenticity is the kind that plays out when, say, a restaurant in Japan offers exceptional and authentic Neapolitan pizza. Their pizza was not made in Naples or imported from Italy. The chef who prepared it may not have a drop of Italian blood in their veins. But the ingredients, appearance and taste may match really well with what tourists would expect to find at a great restaurant in Naples. Newman calls that categorical authenticity.

And finally, there is the authenticity that comes from our values and beliefs. This is the kind that many voters find wanting in politicians and elected leaders who say one thing but do another. It is what admissions officers look for in college essays.

In my own research, I’ve also seen that authenticity can relate to our expectations about what tools and activities are involved in creating things.

For example, when you see a piece of custom furniture that claims to be handmade, you probably assume that it wasn’t literally made by hand – that all sorts of modern tools were nonetheless used to cut, shape and attach each piece. Similarly, if an architect uses computer software to help draw up building plans, you still probably think of the product as legitimate and original. This is because there’s a general understanding that those tools are part of what it takes to make those products.

In most of your quick judgments of authenticity, you don’t think much about these dimensions. But with generative AI, you will need to.

That’s because back when it took a lot of time to produce original new content, there was a general assumption that it required skill to create – that it could only have been made by skilled individuals putting in a lot of effort and acting with the best of intentions.

These are not safe assumptions anymore.

How to deal with the looming authenticity crisis

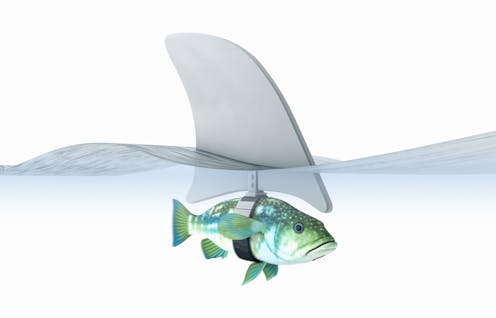

Generative AI thrives on exploiting people’s reliance on categorical authenticity by producing material that looks like “the real thing.”

So it’ll be important to disentangle historical and categorical authenticity in your own thinking. Just because a recording sounds exactly like Drake – that is, it fits the category expectations for Drake’s music – it does not mean that Drake actually recorded it. The great essay that was turned in for a college writing class assignment may not actually be from a student laboring to craft sentences for hours on a word processor.

If it looks like a duck, walks like a duck and quacks like a duck, everyone will need to consider that it may not have actually hatched from an egg.

Also, it’ll be important for everyone to get up to speed on what these new generative AI tools really can and can’t do. I think this will involve ensuring that people learn about AI in schools and in the workplace, and having open conversations about how creative processes will change with AI being broadly available.

Writing papers for school in the future will not necessarily mean that students have to meticulously form each and every sentence; there are now tools that can help them think of ways to phrase their ideas. And creating an amazing picture won’t require exceptional hand-eye coordination or mastery of Adobe Photoshop and Adobe Illustrator.

Finally, in a world where AI operates as a tool, society is going to have to consider how to establish guardrails. These could take the form of regulations, or the creation of norms within certain fields for disclosing how and when AI has been used.

Does AI get credited as a co-author on writing? Is it disallowed on certain types of documents or for certain grade levels in school? Does entering a piece of art into a competition require a signed statement that the artist did not use AI to create their submission? Or do there need to be new, separate competitions that expressly invite AI-generated work?

These questions are tricky. It may be tempting to simply deem generative AI an unacceptable aid, in the same way that calculators are forbidden in some math classes.

However, sequestering new technology risks imposing arbitrary limits on human creative potential. Would the expressive power of images be what it is now if photography had been deemed an unfair use of technology? What if Pixar films were deemed ineligible for the Academy Awards because people thought computer animation tools undermined their authenticity?

The capabilities of generative AI have surprised many and will challenge everyone to think differently. But I believe humans can use AI to expand the boundaries of what is possible and create interesting, worthwhile – and, yes, authentic – works of art, writing and design.

Victor R. Lee receives funding from the National Science Foundation, the Wallace Foundation, the Spencer Foundation, the Institute of Museum and Library Services, and the Marriner S. Eccles Foundation. He has done some advisory work for Google on wearable technology.

This article was originally published on The Conversation.