Tinybuild CEO Alex Nichiporchik stirred up a hornet's nest at a recent Develop Brighton presentation when he seemed to imply that the company uses artificial intelligence to monitor its employees in order to determine which of them are toxic or suffering burnout, and then deal with them accordingly. Nichiporchik has since said that his presentation was taken out of context online, and that he was describing hypothetical scenarios aimed at illustrating potential good and bad uses of AI.

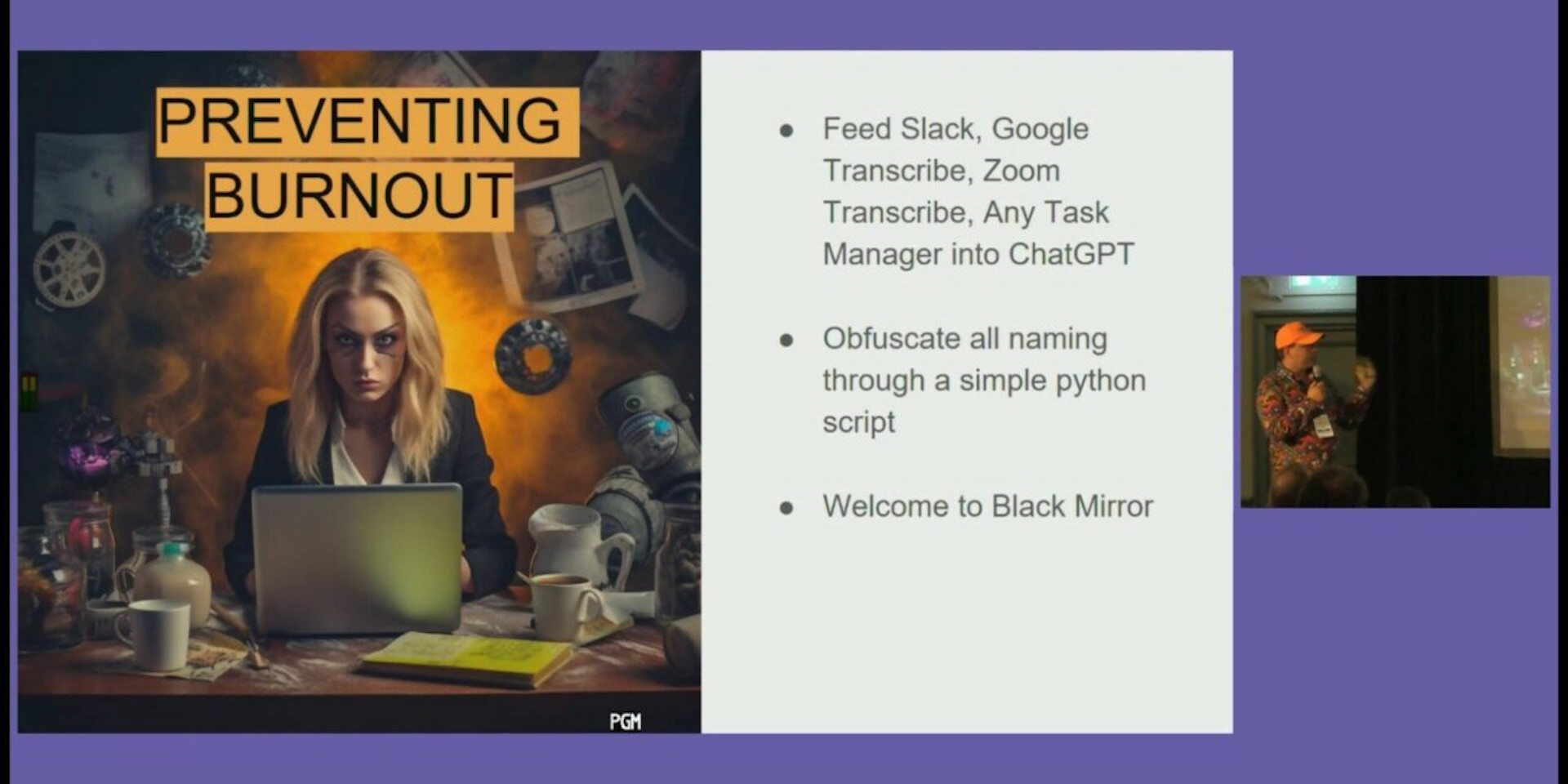

As reported by Whynow Gaming, Nichiporchik said during his presentation that employee communications through online channels like Slack and Google Meet can be processed through ChatGPT in what he called an "I, Me Analysis," which counts the number of times an employee uses those words in conversation.

"There is a direct correlation between how many times someone uses 'I' or 'me' in a meeting, compared to the amount of words they use overall, to the probability of the person going to a burnout," Nichiporchik said during his talk.

He made similar comments about "time vampires" who "talk too much during meetings" or "type too much [and] can't condense their thoughts," saying that once those people are no longer with the company, "the meeting takes 20 minutes and we get five times more done."

Nichiporchik said combining AI with conventional HR tools might enable game studios to "identify someone who is on the verge of burning out, who might be the reason the colleagues who work with that person are burning out," and then fix the issue before it becomes a real problem. He acknowledged the dystopian edge to the whole thing, calling it "very Black Mirror level of stuff," but added, "it works," and suggested that the studio had already put the system to use to discover a studio lead who "was not in a good place."

"Had we waited for a month, we would probably not have a studio," Nichiporchik said. "So I'm really happy that that worked."

Predictably, just about everyone who read Nichiporchik's comments was not happy: The idea of being monitored by machines that can take away your employment because you violated some kind of unknown rule about talking too much is some full-on Minority Report bullshit. But in comments sent to PC Gamer, Nichiporchik said the systems he described are hypothetical and not actually in use at Tinybuild, and that the point of his talk was to contrast an "optimistic" view of AI tools as a way to accelerate processes, and "a dystopian one."

"We've seen too many instances of crunch and burnout in the industry, and with remote working you will often not be able to gauge where a person is. How are they really doing?" Nichiporchik said. "In the I/ME example—it's something I've started using a couple of years ago, just as an observation in meetings. Usually it meant a person wasn't confident enough in their performance at work, and simply needed more feedback and verification they're doing a good job; or action points to improve."

Nichiporchik acknowledged that the "time vampire" slide he used in the presentation was "terrible in the context of this discussion," but said he believes that sort of behavior can also point toward burnout. His conflation of burnout and toxicity, he continued, was intended to convey that toxic behavior is often just a manifestation of issues that people are having at work, and that those problems can ultimately lead to burnout.

"Burnout can be a cause for toxicity, and if it's prevented, you have a much better work environment," he said. "[Toxicity] can arise from an environment where people don't feel appreciated, or don't get enough feedback on their work. Being in that state may lead to burnout. Most people will think of burnout as a result of crunch—it's not just that. It's about working with the people you like, and knowing you're making a great impact. Without a positive environment it's easy to get burnt out. Especially in teams where people may have not even met in real life, and established a level of trust beyond just chat and virtual meetings."

Regarding the studio lead referenced during his presentation, Nichiporchik said the company used "a principle described in the presentation," and not actual AI, to determine that morale on the development team was suffering because the employee in question was overworked. The employee was not let go but is now on "extended leave," and will be moving to a new project when they return.

The whole situation demonstrates yet again how the topic of AI is a touchy one, to say the least: hypothetical or not, people are rightfully freaked out by the thought of everything they say being analyzed by machine learning systems to guess at their state of mind or capabilities. For his part, Nichiporchik says that the "Black Mirror"-like AI systems he described in his presentation aren't something Tinybuild is ever going to use.

"We won't," he said when I asked him how Tinybuild will actually incorporate AI into its HR processes. "We don't use any of these tools for HR. I wouldn't want to work in a place that does."

Nichiporchik said on Twitter that a video of his presentation will be uploaded to YouTube at some point, so it can be seen in its entirety. We'll update when it's available.