In the 21st century, we have access to artificial intelligence tools that people even a few decades ago would have seen as something straight out of science fiction. Unfortunately, they really do not work as well as anyone would want, but that hasn’t stopped greedy people from setting up entire publishing industries powered by AI text.

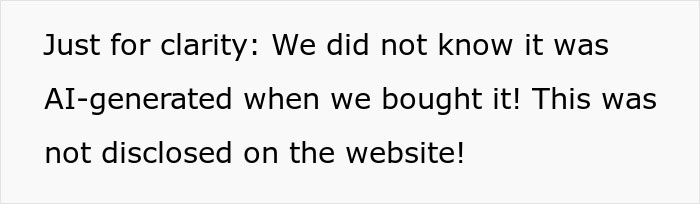

A netizen asked the internet for advice after their entire family got sick from eating mushrooms misidentified in an AI-generated book. We got in touch with veteran writer Kristina God, who has published work about AI texts in the past. We also reached out via private message to the person who posted the story online, and we’ll update the article when they get back to us.

More info: Substack

Knowing how to identify a mushroom is a pretty important skill

Image credits: Valeria Boltneva / pexels (not the actual photo)

But one family ended up in the hospital after relying on a mushroom identification book created by AI

Image credits: Andrea Piacquadio / pexels (not the actual photo)

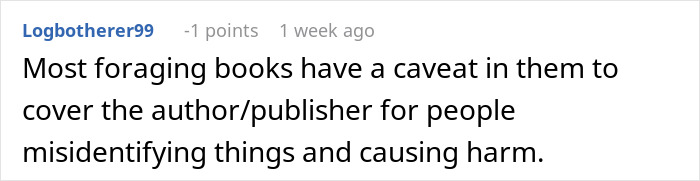

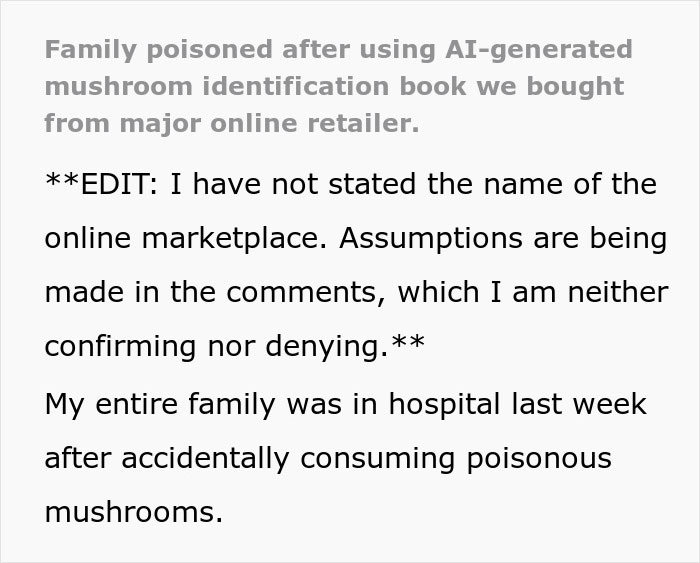

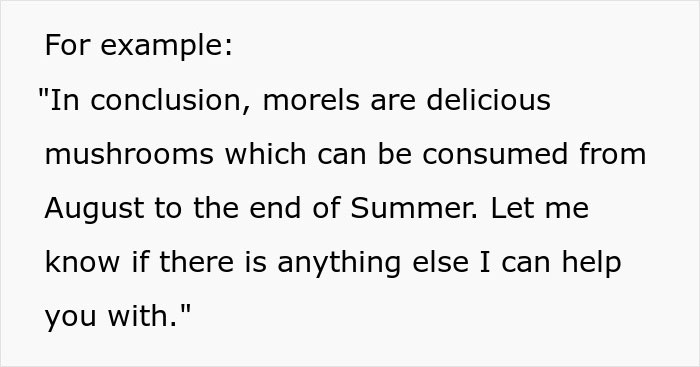

Image source: Virtual_Cellist_736

As high-tech as it sounds, AI mostly just gives uninspiring and vague texts

Bored Panda got in touch with bestselling author Kristina God to learn more about AI and some of its limitations when it comes to texts. First of all, we wanted to hear what common misconceptions about AI she has come across. “It’s not really intelligent. Only human beings can learn, communicate, understand, and make decisions. What we call AI is merely an attempt to mechanically simulate the natural intelligence of humans. It’s not authentic. Instead, it generates text based on prompting. If we put in garbage, meaningless garbage comes out. Also, AI isn’t creative. Instead, it takes what already exists, repurposes or adapts it, or changes it to fit the parameters of the prompt. Some may call it a form of high-tech plagiarism, but there’s nothing true, original, or authentic about AI. Finally, it can’t take a balanced stance and isn’t free from bias because it’s going to include the existing biases of the created or curated information it was trained on. Since bias exists within existing human work, bias will also be found within AI.”

So we were curious to hear what indicators she would suggest for people to see if they could identify AI texts. “In today’s modern, fast-paced, digital, whirlwind age of AI… see what I did here? That’s ‘AI diarrhea,’ as I call it in the Wild West of the internet. Garbage AI writing from lazy people without any editing. Once you’ve worked with it for a while, you start to get a sense for when some writing didn’t originate from a human being. Some words and phrases tend to repeat in AI. Cringy and spammy buzzwords I see are: unlock, elevate, propelled by, paramount, in conclusion. Usually, the text is free from grammatical errors, but it doesn’t seem to have been edited for content and some of the sentences don’t make sense. There’s a lack of depth and humanity. There’s no personal experience. There’s no voice or tone. There’s a cold, robotic heart and a predictable formulaic structure. Sometimes there are awkward phrasings that repeat and that seem a little inappropriate. Also, AI writing is good at giving a generalization of a topic. But when the subject matter becomes more complex and AI needs to create anything with meat in it, AI has a harder time faking it and the cracks start to appear in the armor,” she shared.

It seems that AI might not really be a way to “write” entire books

“AI has already caught on. It’s being used a lot and like any other tool it has value. The problem comes when people try to use AI inappropriately. A hammer is a useful tool when it comes to pounding a nail. A hammer is a less useful tool when it comes to repairing a window. As long as you use the tool correctly, it’s beneficial. An appropriate use would be to use AI to generate an outline or something basic to get you started.”

“However, at the moment, a flood of spam, AI-created articles, blogs, and even books, is hitting us. From a writer’s perspective, AI is lazy person bait. Meaning that people who are too lazy to write, but still want to get paid for it, use AI to generate meaningless garbage. But there’s a reason for it. People are making huge ‘get-rich-quick’ promises and telling you AI writing is easy. For instance, within a couple of hours, you can let AI write a book (without or with just a few edits) and then sell it. But then the harsh truth is, writing with AI isn’t easy. There will always be work that needs to be done to make it sound reliably human: You have to spend hours figuring out how to prompt AI, do a lot of heavy editing, add a human touch, and do fact-checking. Essentially, human beings have to do the part that AI can’t do: think and create. Most people who let AI write their stories don’t know how to edit them to be actually great stories. And that’s the whole problem.”

“So I don’t think that AI is going to replace human writers any time soon. People read books, articles, and poems to get an insight into the human experience. For the time being, AI can’t provide that. Also, I would never pay to read anything written purely by AI because it’s just not going to be unique or valuable enough for me. It lacks authenticity, authority, credibility, depth, and uniqueness. So I imagine in the future, we’ll see an even bigger distinction between free content and paid content. Free content might be majority AI-generated, but paid stuff is going to be written by humans, like stories that we’re already seeing paywalls for,” she shared. If you want to see more of her work, check out her Substack, Medium page, and YouTube account.

Most AI tools really just take other people’s work and regurgitate it

Artificial intelligence (AI) as a topic is a lot larger than just some texts someone generated, but it can be helpful to know how a book like this might have been made in the first place. This should illustrate the risks and limitations of AI and also show why you probably won’t be able to ask ChatGPT to write you bespoke novels any time soon.

To start, the GPT in ChatGPT stands for “generative pre-trained transformer.” If you’ve ever heard someone talking about “training” their AI model, then this is where that becomes relevant. Simply put, a “large language model” (LLM, another term you’ll often see thrown around) is given a whole host of data, normally text, and it tries to find statistical connections between certain combinations of letters.
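
To make the idea of “finding connections between combinations of letters” a bit more concrete, here is a toy sketch in Python. It is not how ChatGPT or any real LLM is built (those use neural networks trained on word fragments, not simple letter counts), and the function names and the tiny training sentence are made up purely for illustration. All it does is count which letter tends to follow which, then generate new text from those counts.

```python
import random
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each character, which characters follow it in the training text."""
    counts = defaultdict(Counter)
    for current, following in zip(text, text[1:]):
        counts[current][following] += 1
    return counts


def next_char_probabilities(counts, current):
    """Turn the raw follow-up counts for one character into probabilities."""
    followers = counts[current]
    total = sum(followers.values())
    return {ch: n / total for ch, n in followers.items()}


def generate(counts, start, length, seed=0):
    """Sample one character at a time, each drawn from the learned counts."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        followers = counts.get(out[-1])
        if not followers:  # this character was never seen with a follower
            break
        chars = list(followers.keys())
        weights = list(followers.values())
        out.append(rng.choices(chars, weights=weights, k=1)[0])
    return "".join(out)


# Hypothetical training text, invented for this example only.
corpus = "the morel is edible. the death cap is deadly. "
model = train_bigram(corpus)

# In this corpus, "t" is always followed by "h".
print(next_char_probabilities(model, "t"))  # {'h': 1.0}

# Output looks vaguely like the training text but carries no knowledge of mushrooms.
print(generate(model, "t", 40))
```

The generated string can resemble the training sentence, yet the program has no notion of which mushroom is safe. It only reproduces patterns it has counted, which is the point the oversimplified explanation is getting at.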

So, in short, this sort of AI isn’t intelligent at all. Indeed, it doesn’t know anything more than the probability that certain letters often follow other letters. This is all an oversimplification, but the principle stands. Most of the generative AI tools that have been popularized over the last year or so work on this principle: they take a massive host of human work, be it texts or art, and then just make copies of it. Or, even worse, they’re used to create downright illegal content.

So if you’ve heard artists complaining about their work being stolen by AI, this is exactly what they mean. Imagine you are a digital artist with a specific style. You have a sizable portfolio of your work online. Then some image generation AI comes by, scans everything you’ve ever made and now spits out horrible facsimiles of your work. Remember, in most cases this is happening entirely without the permission of the actual humans making the work.

This book was likely the result of greed and laziness

This leads us to the AI mushroom book. Unfortunately, we do not have the details, as anyone seeking legal action would be foolish to post everything that is happening online. We don’t know how it came about, but most likely, some enterprising person decided to cut a lot of corners. Instead of getting in contact with an expert, they simply asked a GPT tool to spit out a list of edible and inedible mushrooms.

Perhaps they even asked it to make the entire book. Regardless, this text, where nothing has been verified by a human, was slapped together and put online. Most AI tools will explicitly tell the user that AI can and will make mistakes, so it’s important to verify information. This would suggest that the creator of this work was aware of the risks and chose to publish anyway.

Remember, the family that was hospitalized did the right thing: they literally bought a book to help them identify mushrooms. If AI wasn’t a factor, the people behind the book would absolutely be liable in this case. In 1979, IBM put together a presentation that included the statement “a computer can never be held accountable, therefore must never make a management decision.” AI can’t be a “haven” for lazy or malicious people to get away with grifting people just because “technically” they didn’t write the book.

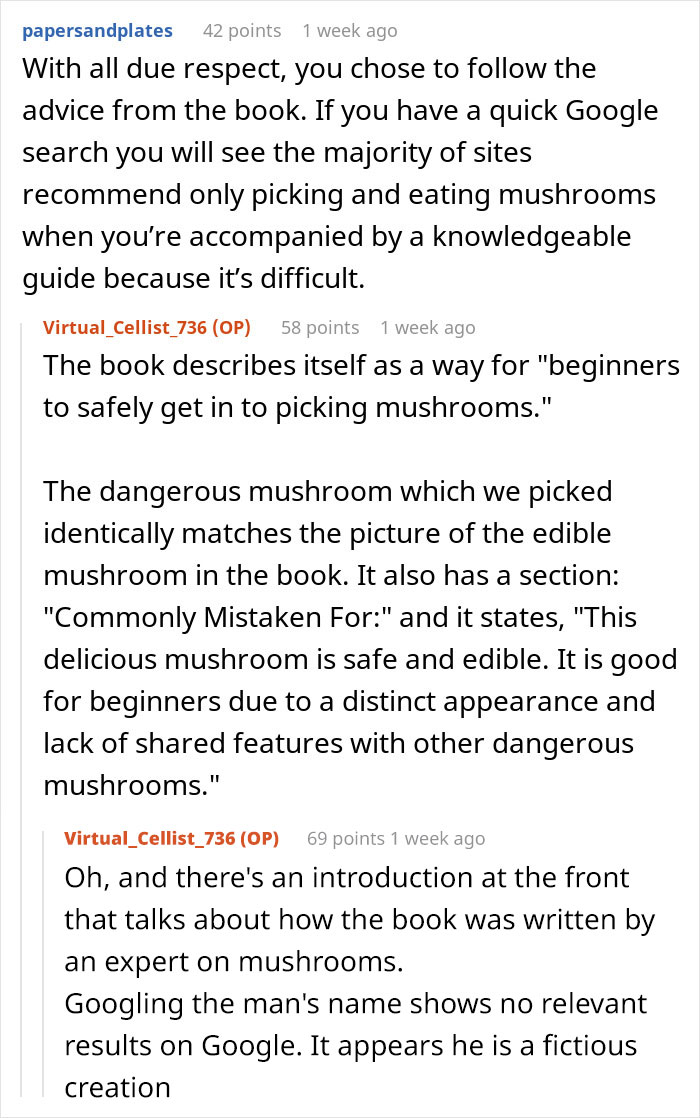

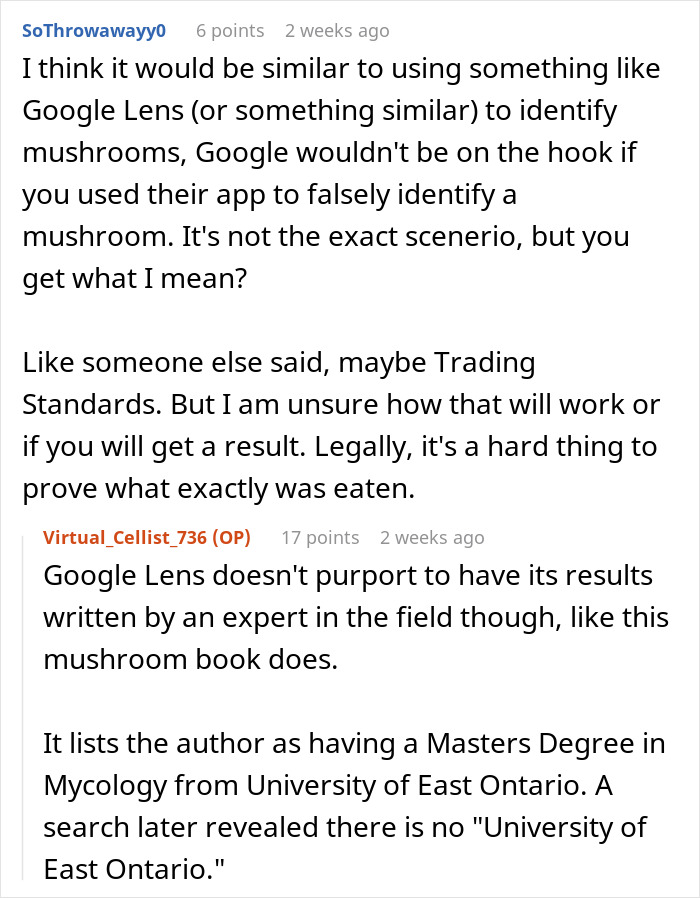

Some folks wanted more details

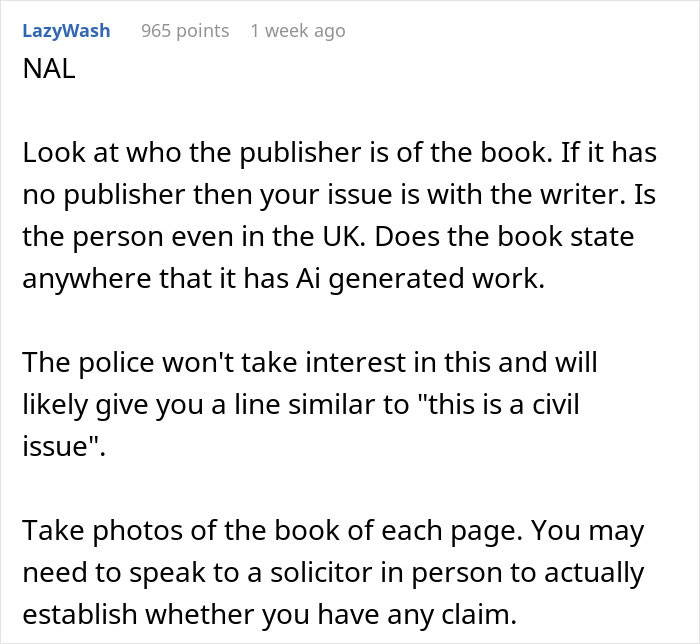

[reactions and advice]

Others tried to give some suggestions