For the past decade, Meta has been investing billions into figuring out what the next big thing is going to look like. Whether that's a VR headset, a pair of AR glasses, or an AI companion that you wear, Meta has been working on it, and Caitlin Kalinowski has almost certainly been at the center of it.

In his weekly column, Android Central Senior Content Producer Nick Sutrich delves into all things VR, from new hardware to new games, upcoming technologies, and so much more.

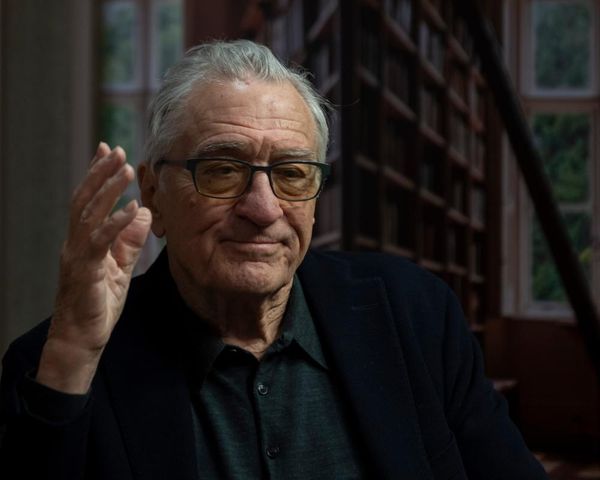

I had the opportunity to chat with Kalinowski (CK, as she likes to go by), who previously led product design and integration for the Oculus Rift, Oculus Go, and Oculus Quest headset lines. She has two decades of product design experience, including time on the original Unibody MacBook Pro team and work on every Oculus hardware product released since the original Touch controllers for the Oculus Rift.

But while those chops are impressive, someone like Kalinowski is always looking toward the horizon for the next big thing. Now she is working on Project Nazare, Meta's first pair of true AR glasses, which is set to redefine how we think of smart glasses.

And while Ray-Ban Meta smart glasses have been a huge hit, Kalinowski tells me that what her team has been working on goes above and beyond the expectations set by existing products. Yes, that even includes the new video calling and AI features just launched on Ray-Ban Metas. The new glasses are so impressive, she told me, that they give her the "same degree of 'oh my God, WOW! I can't believe this!' that the original Rift was."

Making it wearable

Six years ago, Meta went all-in on the standalone concept when it launched the Oculus Quest. Since then, we've seen two new generations of headsets, most recently culminating in the launch of Meta Horizon OS, Meta's own Android-based operating system for VR. Kalinowski told me that there was "a huge debate internally early on" over whether to focus on tethered headsets like the Rift or go fully standalone with the Quest concept.

But well after the dust from those conversations has settled, Meta's next big product is said to have the same degree of "Oh my God, wow! I can't believe this!" that the original Rift had.

In my experience, most people who try a modern VR headset are usually immediately impressed. It's one of those experiences that's hard to put into words until you've tried it for yourself, as the virtual becomes actual reality to your eyes and mind.

Kalinowski told me that she thrives on "giving customers a new experience they haven't had before," something that Project Nazare should be able to deliver if what I'm being told holds true for the final product.


"The next big technology that I'm working on now is a full pair of AR glasses," she says. That means not just a pair of glasses that can mirror your smartphone, or something like Ray-Ban Metas that can take pictures or play music, but one that completely augments the world you see with projected imagery.

Meta is working on a version of this for Quest 3 headsets this fall, a feature appropriately called "Augments," but it will likely pale in comparison to what a proper pair of AR glasses can deliver because of the quality degradation that comes with passthrough vision on a VR headset.

Kalinowski explained that "customers are going to be able to see both the original photons of the real world in addition to what overlay you effectively want to have." In plain terms, your view of the real world through these glasses isn't any different from how you would see it through a pair of sunglasses. The light isn't captured by cameras and then reprojected in a "passthrough" fashion, as it is on the Meta Quest 3.


Instead, the micro OLED projectors (going by previous spec leaks of Project Nazare) will convincingly overlay virtual objects onto the world you see. However, current AR glasses that attempt this, like Snap Spectacles 4 or Magic Leap 2, have an extremely small field of view. That means virtual objects only appear within a tiny square of your vision, which immediately breaks immersion.

As Kalinowski notes, "Nothing prepares you for the high field of view immersion" of Project Nazare. In other words, it could be the game-changer AR glasses have needed, providing an immersive experience closer to a VR headset while still maintaining a truly comfortable, wearable form factor.

AI and availability

Throughout the interview, Kalinowski was very careful to avoid revealing too much about products like Project Nazare "until they're ready." There's an important expectation to be set, and Meta can't afford to release something too early, especially if the company plans to present it as the "iPhone moment" that CEO Mark Zuckerberg reportedly wants.

But the excitement in Kalinowski's voice is unmistakable. The company has clearly made breakthroughs in several important areas and is undoubtedly closer to shipping true AR glasses than perhaps any company before it.

Some of these breakthroughs are thanks to recent advances in AI, but not just the generative AI, like ChatGPT, that you might think of when you hear the term. The company has been able to shrink its camera sensors because AI can denoise imagery in real time. That frees up more space for things like processors and batteries.


Additionally, Kalinowski said that AI can now be used to enhance SLAM (short for Simultaneous Localization and Mapping), the same technique robot vacuums use to figure out where they are in your home. Here, AI can be used to compress important data so that it can be processed more quickly, lowering the power requirements of the glasses.

Maybe more excitingly, she admitted that "we don't even know what they will all be yet" when discussing all the ways AI can be used to virtually increase the processing power and capabilities of low-power devices like AR glasses.

"We have looked at what's happening in AI and intentionally looked at our roadmap and made changes to take advantage of this AI revolution." In other words, the way AR glasses were expected to work has changed completely now that modern AI systems exist. "The exciting thing is AI is changing every week," she adds, which would certainly make designing a product as big as Project Nazare a challenge.


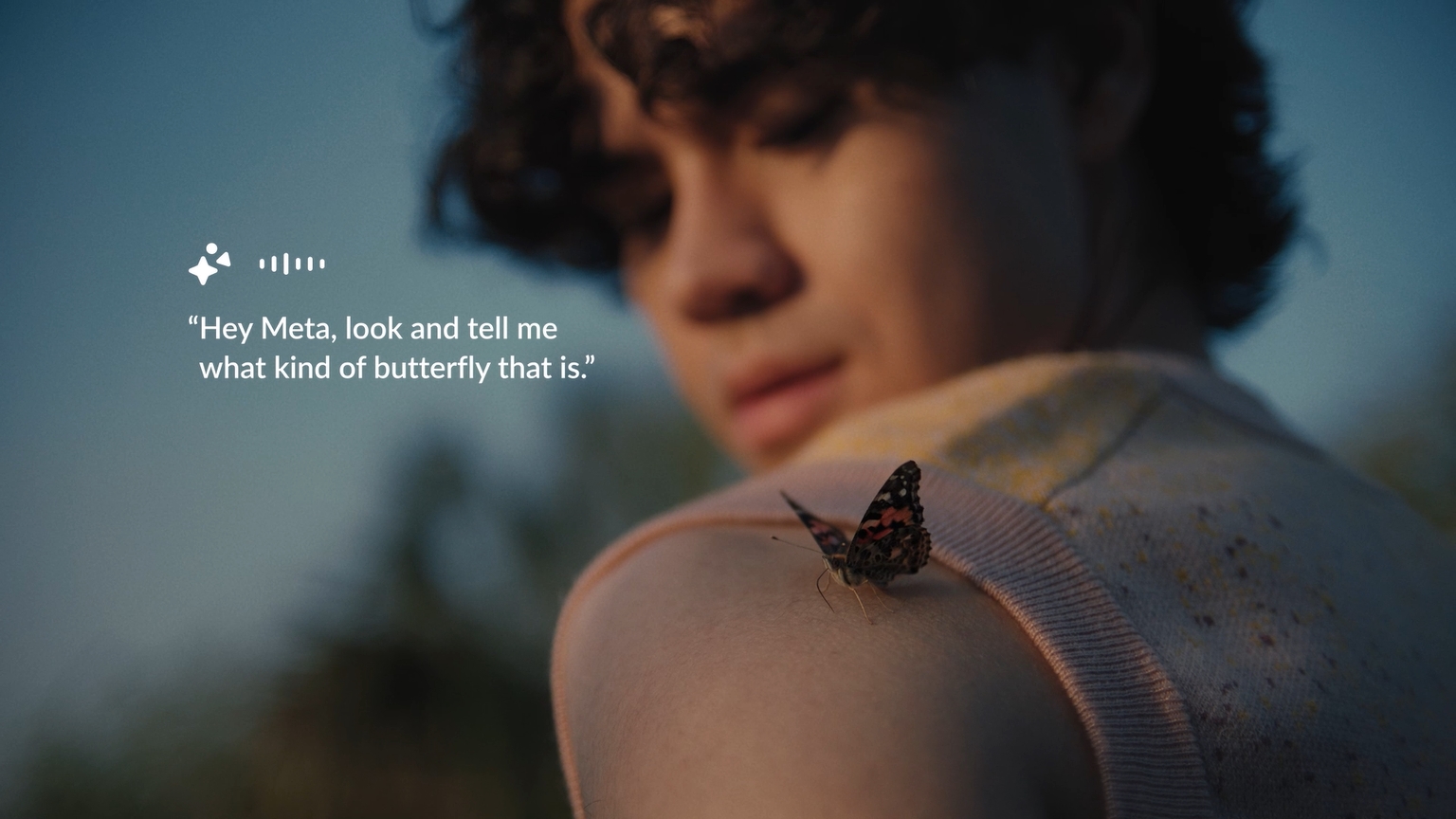

Without offering any examples that might reveal too much, Kalinowski described a scenario that illustrates AI's visual learning capabilities and how it can start to understand context in surprising ways. The AI might "understand that you're skateboarding" and present different contextual ways of providing input, or even help you quickly record a video when you pull off a sick trick. (That last part is my own example, based on our conversation.)

But, as Kalinowski points out, sometimes we have to wait to see what's going to happen even when we have all the technology in place. "When GPS first came out, few would have guessed it would be used to call an Uber from anywhere," she noted. It's the clever combination of hardware and software ideas that really helps give these products that "must have" feeling.


Realistically, though, consumers likely won't get their hands (or heads) on Meta's AR glasses breakthrough for another three years. In the meantime, the success of Ray-Ban Metas has been "unusually awesome," as Kalinowski put it, and the product seems to have caught on in the same way the Oculus Quest 2 did when it launched in 2020.

Those strong sales bode well for future AR glasses that will do even more while still looking good. The AI component of Ray-Ban Metas "wasn't even originally the core" of the product, yet it seems to be one of the most compelling reasons to buy a pair and use them daily.

As Meta continues to push its "open ecosystem" mantra, openness and broader transparency will ultimately help it earn the trust needed for people to wear a pair of true AR glasses every day. It's an important piece of the puzzle that shouldn't be overlooked, and it's one that teams like Kalinowski's seem to have on their minds daily.


Kalinowski says her team has been watching the Ray-Ban Meta release closely and has been integrating lessons learned into the next big product. Things like privacy LEDs on the front and other similar features have made people more comfortable with having cameras on (and in) their faces throughout the day.

And while not everyone will be comfortable wearing them all the time, I can certainly foresee a future where most people walk around with some form of reality-augmenting technology on their bodies. Whether that looks more like Ray-Ban Metas or the Humane AI Pin, it's entirely likely that the app-based world we live in today will become a thing of the past.
