The ESRB recently filed a request with the FTC seeking approval for a "verifiable parental consent mechanism" called Privacy-Protective Facial Age Estimation, which will enable people to use selfies to prove that they are actually adults who can legally provide parental consent on behalf of their children. It struck me and many others as not a great idea (literally inviting Big Brother into your home and all that), but in a statement sent to PC Gamer, the ESRB said the system is not actually facial recognition at all, and is "highly privacy protective."

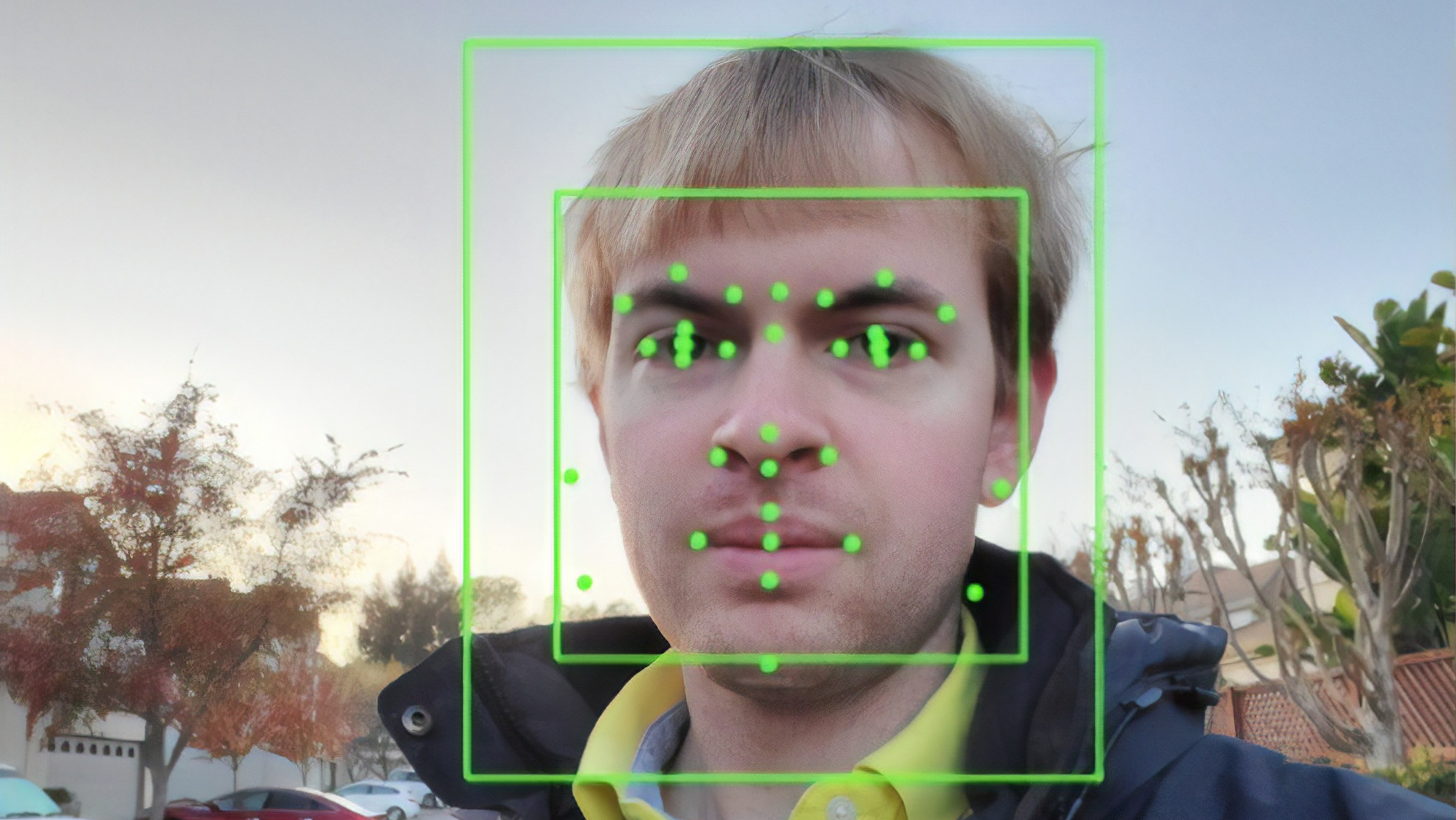

The filing, submitted jointly by the ESRB, digital identity company Yoti, and "youth digital media" company SuperAwesome, is dated June 2 but only came to light recently thanks to the FTC's request for public comment. It describes a system in which parents can opt to submit a photo of themselves through an "auto face capture module," which would then be analyzed to estimate the age of the person in question. Assuming an adult is detected, they could then grant whatever permissions they feel are appropriate for their children.

It's basically a photo-verified age gate, then, not terribly different from showing your driver's license to the guy behind the counter before you buy booze—except that the guy behind the counter is a faceless machine, and you're not flashing government-issued ID, you're handing over a live image snapped within the confines of your own home. At a time when corporate interests around the world are racing to develop increasingly complex AI systems, while experts are warning us about the dangers inherent in that race, the idea of willingly submitting one's face for machine analysis understandably raised some hackles.

There were some misunderstandings, however, which the ESRB wants to correct. The system does not "take and store 'selfies' of users or attempt to confirm the identity of users," an ESRB rep said. That's a little confusing, since the FTC filing states plainly that "the user takes a photo of themselves (a selfie) assisted by an auto face capture module," which is then uploaded to a remote server for analysis. The rep also said the system would not scan the faces of children to determine if they're old enough to purchase or download a videogame. It would be used by adults, and has to do with a US privacy law, not the ESRB's age ratings for games.

In the US, it's not actually illegal to sell M-rated games to minors: The age rating system developed and maintained by the ESRB represents a de facto policy for virtually all retailers, but legally there's nothing that says a 12-year-old can't buy Grand Theft Auto 5 if they want. In 2011, the US Supreme Court actually struck down a California law banning the sale of violent videogames to minors, declaring that videogames—like other forms of media—are protected speech under the First Amendment.

The Children's Online Privacy Protection Act (COPPA) is a different matter, however. It requires that companies gain "verifiable parental consent" before collecting or sharing any personal information from children under the age of 13, and compliance is mandatory: Epic Games, the parent company of SuperAwesome, agreed to a $520 million FTC settlement in December 2022, $275 million of which was a penalty for violating it. Acceptable methods of COPPA consent include submitting a signed form or a credit card, talking to "trained personnel" via a toll-free number or video chat, or answering "a series of knowledge-based challenge questions." Those methods haven't been updated since 2015, according to the ESRB, and apparently an update is overdue.

But while the scanning system proposed in the application sounds like facial recognition, the ESRB took pains to emphasize that it does not determine identity beyond estimating age in order to establish that the requirements of COPPA compliance have been met.

"To be perfectly clear: Any images and data used for this process are never stored, used for AI training, used for marketing, or shared with anyone; the only piece of information that is communicated to the company requesting VPC is a 'Yes' or 'No' determination as to whether the person is over the age of 25," the ESRB rep said. "This is why we consider it to be a highly privacy protective solution for VPC."

(The proposed system sets 25 as the minimum threshold in order to "prevent teenagers or older-looking children from pretending to be a parent." Anyone determined to be in the "buffer zone" of 18-24 will have to pursue verification through other channels.)
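
To make that threshold logic concrete, here is a minimal sketch of the decision as the ESRB describes it. It is not code from the filing: the function name `vpc_answer` and the constant `ADULT_THRESHOLD` are hypothetical, and it assumes an age estimate has already been produced somewhere else. It also assumes an estimate of exactly 25 passes, since the ESRB calls 25 the "minimum threshold."

```python
# Illustrative sketch only. The names below are hypothetical and are not
# taken from the ESRB/Yoti/SuperAwesome filing.

ADULT_THRESHOLD = 25  # minimum estimated age that yields a "Yes"


def vpc_answer(estimated_age: float) -> str:
    """Return the single value the requesting company would receive.

    Per the ESRB's statement, only a "Yes" or "No" is communicated;
    the estimate itself (and the photo) is not passed along.
    Assumption: an estimate of exactly 25 counts as "Yes".
    """
    return "Yes" if estimated_age >= ADULT_THRESHOLD else "No"


if __name__ == "__main__":
    # 22 falls in the 18-24 "buffer zone", so it gets a "No" and that
    # person would have to verify through another channel.
    for age in (31, 25, 22):
        print(age, vpc_answer(age))
```

Under these assumptions, a 22-year-old parent gets the same "No" as a teenager would, which is the trade-off the buffer zone makes: fewer kids slipping through, at the cost of sending some genuine adults to another consent method.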

Fair play to all involved: the proposed system doesn't function like a conventional facial rec system, but there's definitely an element of semantics to the ESRB's argument, too. The proposed system may not be used to recognize me, but it's using my photo to guess my age, and I feel like that places the whole thing within a definition of "recognition" by any reasonable, non-technical measure.

Concerns about biases, accuracy, and privacy remain valid—at the end of the day, you are granting a corporate entity permission to take a peek in your home just to be sure that everything is on the up-and-up—and just as a matter of principle, I can't shake the feeling that this commitment to the letter of privacy laws is pulling away from its spirit: We are protecting the privacy of children by allowing corporations to snap mugshots in our living room.

Interestingly, the ESRB said the FTC filing that brought all of this to light wasn't actually necessary at all: Because the ESRB Privacy Certified [EPC] program is a "COPPA Safe Harbor," it's authorized to make changes to verifiable parental consent systems without the FTC's involvement. "That said, EPC takes its role (and its responsibilities to member companies) very seriously and, considering how new the technology is, EPC preferred to obtain approval directly from the FTC through their application process before approving its use by EPC members," the ESRB rep said.