AV-over-IP solutions have become ubiquitous. Whether powering conference rooms in corporate offices, distributing and reinforcing content in classrooms and lecture halls at colleges and universities, driving interactive content at sporting events, or running multimedia staging at rock concerts and theaters, AV over IP is everywhere, and its presence is growing.

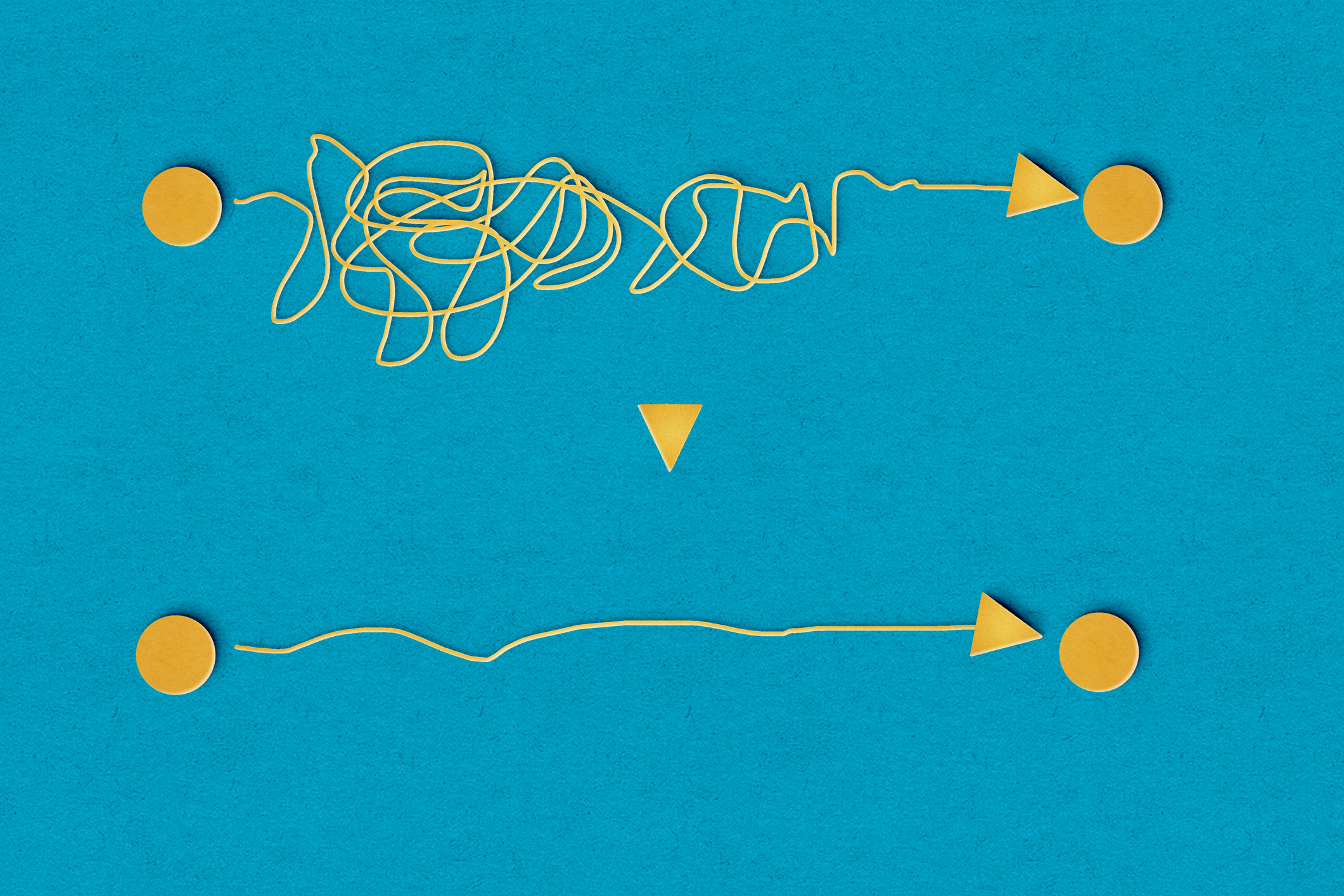

AV over IP solves many long-standing problems: it eliminates legacy AV cable snakes, reduces interface incompatibilities, breaks through cable-length limitations, provides flexibility and choice in equipment selection, and enables remote diagnostics and support. But AV over IP does come at a cost. As it proliferates, so does the complexity of network design, implementation, and support. Networking is inherently more complex than baseband, analog, point-to-point systems.

That said, there are solutions available that can mask, or even eliminate, much of the complexity in AV-over-IP systems.

Be on Time

One of the very first considerations for most AV-over-IP systems is minimizing delay and synchronizing audio, video, metadata (such as HDR or captioning), and control across all network devices. With analog or baseband point-to-point distribution, time synchronization was a problem only for the most demanding applications: signals were uncompressed and traveled directly from one point to the next, so they arrived with very little delay. On a network, where signals are packetized, often compressed, and share links with other traffic, time synchronization is more complicated.

“Your lips move but I can't hear what you're saying”

The most obvious place this shows up is lip sync. Timing problems can also be seen, heard, and felt in applications such as immersive sound, video projection and edge blending, PTZ camera operation, and AR/VR. In general, if you have proper lip sync, most everything else will follow.

Ideally, lip sync should stay within -125 to +45 milliseconds (audio lagging the video by no more than 125 ms, or leading it by no more than 45 ms) for most audiences and end users not to notice. In general, if the audio is offset from the video by more than 200 milliseconds, people notice and begin to suffer from audio fatigue (trying to match the audio to the video in their heads).
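
To make these thresholds concrete, the sketch below classifies a measured audio/video offset. It assumes the sign convention usually paired with these figures (a positive offset means audio leads video, a negative one means audio lags), and the labels "unnoticeable", "noticeable", and "fatiguing" are illustrative names, not an industry standard.

```python
# Thresholds from the text. Assumed sign convention: a positive offset means
# audio is presented before the matching video; negative means audio is late.
MAX_AUDIO_LEAD_MS = 45.0
MAX_AUDIO_LAG_MS = 125.0
FATIGUE_MS = 200.0


def audio_lead_ms(audio_present_ms: float, video_present_ms: float) -> float:
    """How far audio leads its matching video (negative if it lags)."""
    return video_present_ms - audio_present_ms


def classify_offset(lead_ms: float) -> str:
    """Bucket an audio/video offset using the thresholds quoted above."""
    if -MAX_AUDIO_LAG_MS <= lead_ms <= MAX_AUDIO_LEAD_MS:
        return "unnoticeable"
    if abs(lead_ms) > FATIGUE_MS:
        return "fatiguing"
    return "noticeable"


# Video rendered 150 ms after the audio: audio leads by 150 ms.
print(classify_offset(audio_lead_ms(1000.0, 1150.0)))  # noticeable
```

The asymmetric window reflects that audiences tolerate late audio far better than early audio.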

For performers listening to each other play over an audio system, even without video, latency tolerances are much tighter, ideally below 4 milliseconds. When you combine audio, video, metadata, and control over an AV-over-IP system at a live event, where the audience and talent are all present and aware of even the smallest delay, your tolerances become very, very tight.
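
A 4 ms target is easier to reason about as a budget that every stage in the signal chain draws from. The sketch assumes 48 kHz audio, and the stage names and values in the example are hypothetical, chosen only to show the arithmetic.

```python
SAMPLE_RATE_HZ = 48_000  # assumed audio sample rate
BUDGET_MS = 4.0          # performer monitoring target from the text


def samples_to_ms(samples: int, sample_rate_hz: int = SAMPLE_RATE_HZ) -> float:
    """Duration of an audio buffer holding `samples` samples."""
    return 1000.0 * samples / sample_rate_hz


def check_budget(stages_ms: dict[str, float],
                 budget_ms: float = BUDGET_MS) -> tuple[float, float]:
    """Return (total latency, remaining headroom) for a chain of stages."""
    total = sum(stages_ms.values())
    return total, budget_ms - total


# Hypothetical chain: converter and DSP buffers plus a network latency setting.
chain = {
    "input converter (32 samples)": samples_to_ms(32),
    "network": 1.0,
    "DSP (64 samples)": samples_to_ms(64),
    "output converter (32 samples)": samples_to_ms(32),
}
total, headroom = check_budget(chain)
print(f"total {total:.2f} ms, headroom {headroom:.2f} ms")  # total 3.67 ms, headroom 0.33 ms
```

Every buffer anywhere in the path counts against the same few milliseconds, which is why small buffers and a tightly managed network matter for live performance.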

In Essence It’s About Essences

Contemporary AV-over-IP solutions use “essences” (separate channel flows) for audio, video, metadata, and control. It is critical that all essences be clocked and timestamped against a common time source, usually distributed with PTP (Precision Time Protocol, IEEE 1588), so that these separate flows can be synchronized. This is where time can get complicated.
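
For readers curious how PTP lets separate devices agree on time, the core calculation is short. The sketch below applies the standard IEEE 1588 delay request-response arithmetic to the four timestamps of one exchange. It assumes a symmetric network path and ignores the correction fields, filtering, and clock servo that real implementations add.

```python
from dataclasses import dataclass


@dataclass
class SyncExchange:
    """Timestamps in nanoseconds from one PTP Sync / Delay_Req exchange."""
    t1: int  # leader sends Sync (leader's clock)
    t2: int  # follower receives Sync (follower's clock)
    t3: int  # follower sends Delay_Req (follower's clock)
    t4: int  # leader receives Delay_Req (leader's clock)


def offset_and_delay(x: SyncExchange) -> tuple[float, float]:
    """Follower's clock offset from the leader, and the one-way path delay.

    Assumes the path delay is the same in both directions.
    """
    forward = x.t2 - x.t1   # path delay + offset
    backward = x.t4 - x.t3  # path delay - offset
    offset = (forward - backward) / 2
    delay = (forward + backward) / 2
    return offset, delay


# Example: the follower runs 1,200 ns ahead and the path delay is 500 ns.
exchange = SyncExchange(t1=0, t2=1_700, t3=5_000, t4=4_300)
offset, delay = offset_and_delay(exchange)
print(offset, delay)  # 1200.0 500.0
```

The follower would then steer its clock back by the measured offset, repeating the exchange continuously so that the alignment holds.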

PTP requires electing a clock leader, assigning boundary clocks (which act as leaders for downstream network segments), and having every device that sends or receives data follow the leader's clock. It also requires configuring the network to assure prioritized delivery of time to all devices, and a method for monitoring and adjusting the time alignment of each network device so that synchronization is maintained. Finally, this time synchronization, along with its monitoring and management, needs to be universal across all network devices.
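
Leader election is handled by PTP's Best Master Clock Algorithm (BMCA). Below is a heavily simplified sketch of its core comparison: each clock advertises a set of attributes, and the lowest value wins at each step, in order. It omits the topology tie-breaks, Announce timeouts, and port state machine of the real algorithm, and the attribute values in the example are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClockCandidate:
    """Attributes a PTP clock advertises in its Announce messages (simplified)."""
    name: str
    priority1: int
    clock_class: int
    clock_accuracy: int
    offset_scaled_log_variance: int
    priority2: int
    clock_identity: str  # final tie-breaker; real identities are 8-byte values

    def rank(self) -> tuple:
        # Compared left to right; the lower value wins at each step.
        return (self.priority1, self.clock_class, self.clock_accuracy,
                self.offset_scaled_log_variance, self.priority2,
                self.clock_identity)


def elect_leader(candidates: list[ClockCandidate]) -> ClockCandidate:
    """Pick the best clock, following the BMCA's attribute comparison."""
    return min(candidates, key=ClockCandidate.rank)


# Illustrative values: a dedicated clock with a lowered priority1 and a
# GPS-locked clock class, versus endpoints left at default settings.
grandmaster = ClockCandidate("grandmaster", 100, 6, 0x21, 0x4E5D, 128, "000001")
camera = ClockCandidate("camera", 128, 248, 0xFE, 0xFFFF, 128, "000002")
speaker = ClockCandidate("speaker", 128, 248, 0xFE, 0xFFFF, 128, "000003")

print(elect_leader([camera, speaker, grandmaster]).name)  # grandmaster
print(elect_leader([speaker, camera]).name)  # camera (lower identity breaks the tie)
```

Lowering priority1 on a dedicated clock is a common way to make the election outcome predictable rather than leaving it to tie-breaks between endpoints.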

There are solutions, such as Dante, that fully automate, monitor, and manage time in an AV-over-IP network. These solutions deliver a technology stack to every AV-over-IP device, enabling it to send and receive network time from a common time source, align the data it sends to that time, and timestamp when data is received, sampled or compressed, processed, and sent out, allowing its peers to synchronize all network elements.
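
The value of timestamping is that every receiver can act on the same clock. As a simplified model (assuming one fixed, system-wide latency, which is not a description of Dante's internals), a receiver renders each packet at its capture time plus that latency, so all outputs line up regardless of when each packet happened to arrive.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    essence: str     # "audio", "video", "metadata", or "control"
    capture_ns: int  # network (PTP) time at which the content was captured


def playout_time_ns(pkt: Packet, latency_ns: int) -> int:
    """Network time at which every receiver should render this packet."""
    return pkt.capture_ns + latency_ns


def is_due(pkt: Packet, now_ns: int, latency_ns: int) -> bool:
    """True once the shared clock reaches the packet's playout time."""
    return now_ns >= playout_time_ns(pkt, latency_ns)


def is_late(pkt: Packet, arrival_ns: int, latency_ns: int) -> bool:
    """True if the packet arrived after it should already have been rendered."""
    return arrival_ns > playout_time_ns(pkt, latency_ns)


LATENCY_NS = 1_000_000  # hypothetical 1 ms system latency
pkt = Packet("audio", capture_ns=5_000_000)
# Two loudspeakers receive the packet at different times but render it together.
for arrival in (5_300_000, 5_900_000):
    print(arrival, is_late(pkt, arrival, LATENCY_NS), playout_time_ns(pkt, LATENCY_NS))
```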

These solutions also provide algorithms that automatically adjust to network conditions, communicate with other devices on the network about their health, and alert operators and technical support to impending issues, particularly timing issues, so that they can be resolved quickly and, in many cases, automatically.
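
As an illustration of the monitoring side, the sketch below smooths a device's measured clock offset and escalates from "ok" to "warning" to "alarm". The thresholds and smoothing factor are hypothetical placeholders, not values from Dante or any PTP profile; a real system would tune them and feed the states into its alerting.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class OffsetMonitor:
    """Flag a device whose clock offset is drifting (illustrative thresholds)."""
    warn_ns: float = 1_000.0    # hypothetical warning threshold
    alarm_ns: float = 10_000.0  # hypothetical alarm threshold
    alpha: float = 0.2          # weight of each new sample in the moving average
    smoothed_ns: Optional[float] = None
    states: list[str] = field(default_factory=list)

    def update(self, offset_ns: float) -> str:
        # Exponential moving average, so one noisy sample does not raise an alarm.
        if self.smoothed_ns is None:
            self.smoothed_ns = offset_ns
        else:
            self.smoothed_ns += self.alpha * (offset_ns - self.smoothed_ns)
        magnitude = abs(self.smoothed_ns)
        if magnitude >= self.alarm_ns:
            state = "alarm"
        elif magnitude >= self.warn_ns:
            state = "warning"
        else:
            state = "ok"
        self.states.append(state)
        return state


monitor = OffsetMonitor()
for sample in (200, 20_000, 20_000, 20_000, 20_000):
    monitor.update(sample)
print(monitor.states)
```

The warning state gives operators a chance to act before the offset grows large enough to be audible or visible.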

Case Study: HIT Productions

In the central business district of Makati, Philippines, HIT Productions is a 31-year-old post-production studio known for creating award-winning work serving the advertising, music, film, and streaming localization markets. When a new building became available in 2021, HIT Productions implemented Dante AV as the transport mechanism for all audio and video between rooms. They recognized that the new facility needed a flexible AV design to support the physical reconfiguration of the studios for production needs, with seamless re-routing of audio and video streams as required. “Dante AV gave us the flexibility to connect rooms in any number of combinations with standard Cat 6 Ethernet cables,” said Dennis Cham, founding partner and Chief Technology Officer of HIT Productions. “Audio and video quality is exceptional, and the flexibility to route audio and video around the facility on just a few cables was very liberating.”

Processing Takes Time

In many AV-over-IP solutions, audio has imperceptible latency because it is sampled and sent as uncompressed data, but the same isn’t true of video endpoints. Video, in all but the most high-end solutions, must be compressed to get across a network. Compression takes time, and in many cases that time is variable. Depending on the codec used, video in ProAV can have 100 to 200 ms of latency, and streaming video 2 to 3 seconds, due to compression and other processing: many times the latency of most audio.
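
The usual fix is to hold the audio back until the video catches up. The arithmetic below converts a latency difference into an audio delay in samples and its equivalent in video frames; the 48 kHz sample rate, the 60 fps frame rate, and the latency figures in the example are assumptions for illustration.

```python
def compensating_delay(video_latency_ms: float, audio_latency_ms: float,
                       sample_rate_hz: int = 48_000,
                       fps: float = 60.0) -> tuple[float, int, float]:
    """Extra audio delay needed so audio lines up with slower video.

    Returns (delay in ms, delay in audio samples, delay in video frames).
    """
    extra_ms = max(0.0, video_latency_ms - audio_latency_ms)
    samples = round(extra_ms * sample_rate_hz / 1000)
    frames = extra_ms * fps / 1000
    return extra_ms, samples, frames


# ProAV video at 150 ms versus audio at 2 ms.
print(compensating_delay(150.0, 2.0))  # (148.0, 7104, 8.88)
```

By the same arithmetic, a 2 to 3 second streaming path would need roughly 96,000 to 144,000 samples of audio buffering.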

That variability depends on the codec and is most pronounced with MPEG-based codecs, which introduce interframe dependencies by relating video frames to each other. These dependencies significantly reduce bandwidth, but they make it harder to synchronize the video with audio, metadata, and control signals. AV-over-IP solutions have to account not only for differences in latency between video, audio, metadata, and control, but also for variability (jitter) within the video itself.
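
One common way to quantify that variability is the interarrival jitter estimator defined for RTP in RFC 3550: it compares how far apart consecutive frames were sent with how far apart they arrived, and keeps a running average of the difference. The sketch applies it to video frame times in milliseconds; the sample times are made up.

```python
def interarrival_jitter(send_ms: list[float], recv_ms: list[float]) -> float:
    """Running interarrival jitter estimate, following RFC 3550."""
    if len(send_ms) != len(recv_ms):
        raise ValueError("send and receive lists must be the same length")
    jitter = 0.0
    for i in range(1, len(send_ms)):
        # D: change in transit time between consecutive frames.
        d = (recv_ms[i] - recv_ms[i - 1]) - (send_ms[i] - send_ms[i - 1])
        # Move 1/16 of the way toward the latest |D|, as RFC 3550 specifies.
        jitter += (abs(d) - jitter) / 16
    return jitter


sent = [0, 20, 40, 60]
print(interarrival_jitter(sent, [100, 120, 140, 160]))  # constant transit: 0.0
print(interarrival_jitter(sent, [100, 130, 140, 175]))  # bursty arrivals
```

A constant transit time, however long, produces zero jitter; only changes in transit time from frame to frame count.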

Again, there are AV-over-IP solutions, like Dante, that are not only latency- and jitter-aware but can also adjust playout to compensate for differences in signal arrival and processing times. These systems can also report their performance and current running state to operators and technical support for corrective action.
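
Here is a simplified version of that playout adjustment: choose one shared playout delay from the slowest essence's worst observed latency plus a safety margin, then buffer each arriving packet until that delay has elapsed. The 5 ms margin and the latency samples below are hypothetical, and real systems use more careful statistics than a simple maximum.

```python
from typing import Optional


def shared_playout_delay(latency_ms: dict[str, list[float]],
                         margin_ms: float = 5.0) -> float:
    """One playout delay for all essences: worst observed latency plus margin."""
    worst = max(max(samples) for samples in latency_ms.values())
    return worst + margin_ms


def hold_time_ms(arrival_latency_ms: float, target_ms: float) -> Optional[float]:
    """How long to buffer a packet that arrived after the given latency.

    Returns None if the packet arrived too late to meet the shared target.
    """
    if arrival_latency_ms > target_ms:
        return None
    return target_ms - arrival_latency_ms


observed = {
    "audio": [1.0, 1.2, 0.9],
    "video": [150.0, 162.0, 171.0],  # variable: interframe codec plus network jitter
    "metadata": [2.0, 3.0],
}
target = shared_playout_delay(observed)
print(target, hold_time_ms(1.0, target), hold_time_ms(162.0, target))  # 176.0 175.0 14.0
```

Holding the fast essences costs latency; basing the target on recent worst cases trades a little extra delay for fewer late packets.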

How Much Bandwidth Can You Afford?

How much bandwidth your AV-over-IP system requires largely depends on your application. Every AV-over-IP system sits somewhere on a continuum that trades off quality, bandwidth (cost), and time (latency).

Most broadcasters have optimized for low latency and high quality, at a much higher cost. They tend to use intraframe codecs like JPEG2000 over 10 Gbps networks to get consistent encoding and decoding quality and timing. Intraframe codecs are very predictable in how long they take to encode and decode each discrete frame, which allows the video to be synchronized with audio samples. Of course, these higher-bit-rate codecs cost more to operate.

Corporate, education, and house-of-worship applications tend to be more constrained by cost, trading premium quality and low latency for lower cost. They may compress their video more heavily to get smaller streams, at the price of some added delay. This leads them to interframe, MPEG-based codecs like AVC (H.264) and HEVC (H.265), including those used by NDI HX, which offer much greater compression and bandwidth efficiency at the cost of timing and quality. These applications can typically run on less than 1 Gbps.
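
The bandwidth side of this trade-off is simple arithmetic. The sketch below estimates stream bitrates and how many streams fit on a link. It assumes 1080p60 video with 10-bit 4:2:2 sampling (20 bits per pixel on average), counts active picture only, and reserves 20% of each link for other traffic; the 10:1 and 100:1 compression ratios are hypothetical stand-ins for an intraframe mezzanine codec and an interframe distribution codec.

```python
def video_bitrate_gbps(width: int, height: int, fps: float, bits_per_pixel: float,
                       compression_ratio: float = 1.0) -> float:
    """Approximate bitrate for active picture only (no blanking or packet overhead)."""
    return width * height * fps * bits_per_pixel / compression_ratio / 1e9


def streams_per_link(stream_gbps: float, link_gbps: float,
                     usable_fraction: float = 0.8) -> int:
    """Streams that fit on a link while leaving headroom for other traffic."""
    return int(link_gbps * usable_fraction // stream_gbps)


# 1080p60, 10-bit 4:2:2 sampling averages 20 bits per pixel.
raw = video_bitrate_gbps(1920, 1080, 60, 20)
intra = video_bitrate_gbps(1920, 1080, 60, 20, compression_ratio=10)   # hypothetical
inter = video_bitrate_gbps(1920, 1080, 60, 20, compression_ratio=100)  # hypothetical

print(f"uncompressed {raw:.2f} Gbps, {streams_per_link(raw, 10)} per 10 Gbps link")
print(f"intraframe   {intra * 1000:.0f} Mbps, {streams_per_link(intra, 10)} per 10 Gbps link")
print(f"interframe   {inter * 1000:.0f} Mbps, {streams_per_link(inter, 1)} per 1 Gbps link")
```

Under these assumptions, the lightly compressed streams need a 10 Gbps network to carry as many streams as the heavily compressed ones fit on 1 Gbps, which mirrors the split between broadcast and cost-sensitive deployments described above.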

The Achilles’ Heel of MUXing

Fortunately, again, the complexity of AV over IP can be greatly reduced with solutions that provide time synchronization, manage network flows, provide real-time network monitoring, and offer forward-looking features such as comprehensive support for control and various metadata types. Exposing all these capabilities through an API (Application Programming Interface) will allow users and system integrators to build control and management systems today, and to leverage AI (Artificial Intelligence) to automate AV-over-IP systems in the near future.
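
As a sketch of what integrators might build on such an API, the snippet below parses a device-status document and flags devices that need attention. The JSON layout, field names, and 1,000 ns limit are hypothetical; they do not describe Dante's API or any other product's.

```python
import json

# Hypothetical status document a device-management API might return.
SAMPLE_STATUS = """
{
  "devices": [
    {"name": "Lectern-Mic", "ptp_locked": true,  "clock_offset_ns": 120},
    {"name": "PTZ-Camera",  "ptp_locked": true,  "clock_offset_ns": 250},
    {"name": "Display-2",   "ptp_locked": false, "clock_offset_ns": 90000}
  ]
}
"""


def devices_needing_attention(status_json: str, max_offset_ns: int = 1_000) -> list[str]:
    """Names of devices that are not time-locked or whose offset exceeds the limit."""
    status = json.loads(status_json)
    flagged = []
    for dev in status["devices"]:
        if not dev["ptp_locked"] or abs(dev["clock_offset_ns"]) > max_offset_ns:
            flagged.append(dev["name"])
    return flagged


print(devices_needing_attention(SAMPLE_STATUS))  # ['Display-2']
```

A control or management system would poll or subscribe to such data and act on it, whether by alerting a technician or, eventually, by automated remediation.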

Broadcasters have always been concerned about maintaining separate essences in contribution flows (separate audio, video, metadata, and control channels). Intraframe codecs allow them to have high-quality essences which can be easily synchronized with sampled audio, metadata, and control essences.

Corporate, education, and house-of-worship users are only starting to learn why this is necessary. Rooms, theaters, venues, and even remote locations can have microphones, media players, mixing consoles, amplifiers, DSPs, and loudspeakers. All of these devices assume that audio flows as essences that can be routed and processed (mixed) as discrete channels.

A corollary to this is that devices can be made with much less expensive parts if they only have to support the number of channels they actually need.
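
Treating audio as discrete channels makes routing and mixing a simple gain matrix: each output is a weighted sum of the inputs routed to it. The sketch below is a generic illustration with hypothetical channel names and gains, not any product's mixer. It also hints at the cost point: a device only has to size its matrix for the channels it actually handles.

```python
def mix(inputs: list[list[float]], gains: list[list[float]]) -> list[list[float]]:
    """Mix discrete input channels into output channels.

    inputs[i] is a block of samples for input channel i (all the same length).
    gains[i][j] is the gain from input i to output j; 0.0 means "not routed".
    """
    if len(gains) != len(inputs):
        raise ValueError("need one row of gains per input channel")
    n_out = len(gains[0]) if gains else 0
    block = len(inputs[0]) if inputs else 0
    outputs = [[0.0] * block for _ in range(n_out)]
    for i, channel in enumerate(inputs):
        for j in range(n_out):
            gain = gains[i][j]
            if gain == 0.0:
                continue  # this input is not routed to output j
            for k, sample in enumerate(channel):
                outputs[j][k] += gain * sample
    return outputs


# Hypothetical inputs: lectern mic, wireless mic, media player (mono).
inputs = [[0.5, -0.5], [0.25, 0.25], [1.0, 0.0]]
# Outputs: room loudspeakers, lecture-capture recorder (lectern mic only).
gains = [
    [1.0, 1.0],
    [1.0, 0.0],
    [0.5, 0.0],
]
print(mix(inputs, gains))  # [[1.25, -0.25], [0.5, -0.5]]
```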

These rooms also have cameras, displays, projectors, multiviewers, and switchers. There is definitely a need for essences that are time-synchronous for both video and audio in these markets too.

Cost-sensitive applications want to use MPEG-based codecs because of their compression. However, MPEG codecs were designed primarily for distributing video signals, not for contribution and production. Discrete channels did not matter much, since content was already post-produced before distribution. So MPEG systems include a multiplexer that takes in compressed video, audio, and metadata, interleaves (multiplexes) the channels together into one stream, and carries the timing that keeps the various content flows aligned. The video, audio, and metadata are, for the most part, inseparably linked.
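
A toy model of the interleaving step helps show why the essences end up tied together. The sketch merges already time-ordered video, audio, and metadata units into one stream by presentation timestamp on a 90 kHz clock, the rate MPEG uses for presentation timestamps. Real MPEG-2 transport streams add fixed-size packets, packetized elementary stream headers, and program clock references, all omitted here, and the units and timestamps are made up.

```python
import heapq
from typing import Iterable, NamedTuple


class Unit(NamedTuple):
    pts: int   # presentation timestamp, 90 kHz ticks
    kind: str  # "video", "audio", or "metadata"
    label: str


def mux(*streams: Iterable[Unit]) -> list[Unit]:
    """Interleave time-ordered elementary streams into a single stream."""
    return list(heapq.merge(*streams, key=lambda u: u.pts))


def demux(stream: Iterable[Unit], kind: str) -> list[Unit]:
    """Pull one essence back out of the combined stream."""
    return [u for u in stream if u.kind == kind]


# 30 fps video is one frame every 3000 ticks; 1024-sample audio frames at
# 48 kHz are 1920 ticks apart.
video = [Unit(0, "video", "frame 0"), Unit(3000, "video", "frame 1"), Unit(6000, "video", "frame 2")]
audio = [Unit(900, "audio", "a0"), Unit(2820, "audio", "a1"), Unit(4740, "audio", "a2")]
meta = [Unit(3100, "metadata", "caption")]

muxed = mux(video, audio, meta)
print([u.pts for u in muxed])  # [0, 900, 2820, 3000, 3100, 4740, 6000]
```

Demuxing recovers each essence, but its timestamps are only meaningful relative to the encoder's own clock, which is the crux of the synchronization problem.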

Simple, right? Not exactly. How do you synchronize uncompressed audio with MPEG-compressed video for post-production (mixing, editing, processing, and so on)? MPEG codecs have no external clock to synchronize with. One way to do it is to route your audio signals straight into your video source and have it mux the audio and video together using its internal clock. Think of a microphone routed from the lectern to the ceiling-mounted PTZ MPEG camera in a classroom. Hardly practical or easy.
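
Muxing inside the camera works because the encoder can stamp the microphone's audio frames with presentation timestamps from the same internal clock it uses for video. Below is a minimal sketch of that mapping, assuming 48 kHz audio: MPEG presentation timestamps count a 90 kHz clock in a 33-bit field, so the sample index is scaled and wrapped. An audio source outside the camera shares no such clock.

```python
PTS_HZ = 90_000      # MPEG presentation timestamps tick at 90 kHz
PTS_WRAP = 1 << 33   # PTS is a 33-bit counter, so it wraps around
AUDIO_HZ = 48_000    # assumed microphone sample rate


def audio_frame_pts(first_sample: int, start_pts: int = 0) -> int:
    """PTS for an audio frame, from the index of its first sample.

    Valid only because audio and video are stamped from the same encoder
    clock; integer division rounds down to the nearest whole tick.
    """
    ticks = first_sample * PTS_HZ // AUDIO_HZ
    return (start_pts + ticks) % PTS_WRAP


def pts_to_seconds(pts: int) -> float:
    """Convert a PTS value to seconds on the encoder's timeline."""
    return pts / PTS_HZ


# Successive 1024-sample frames land 1920 ticks (about 21.3 ms) apart.
print([audio_frame_pts(n * 1024) for n in range(3)])  # [0, 1920, 3840]
```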

Or you could manually adjust the audio delay to wait for the video compression. Of course, this can be painful if you are trying to use the audio and video in the same room in which they were captured. And your adjustment may fall out of sync as soon as the codec has to do more processing, such as after a lighting, costume, or background change. Will you have to readjust manually every time? And how do you sync audio across multiple video sources? What about variability in network traffic delaying the video?
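
The drift problem is easy to see numerically. The sketch below applies one manually tuned audio delay while the video latency changes, and reports the resulting offset against the -125 to +45 ms window quoted earlier (positive meaning audio leads). The latency readings are hypothetical.

```python
def residual_offsets(video_latency_ms: list[float], audio_delay_ms: float) -> list[float]:
    """Audio lead remaining at each reading when audio gets one fixed delay.

    Positive values mean the audio is heard before the matching video.
    """
    return [video - audio_delay_ms for video in video_latency_ms]


def out_of_window(offsets: list[float], max_lead_ms: float = 45.0,
                  max_lag_ms: float = 125.0) -> list[int]:
    """Indices of readings outside the unnoticeable lip-sync window."""
    return [i for i, off in enumerate(offsets) if not -max_lag_ms <= off <= max_lead_ms]


# Delay tuned once at 120 ms; video latency later rises after a lighting change.
readings = [120.0, 122.0, 150.0, 185.0]
offsets = residual_offsets(readings, audio_delay_ms=120.0)
print(offsets, out_of_window(offsets))  # [0.0, 2.0, 30.0, 65.0] [3]
```

A fixed delay is correct only for the latency it was tuned against; once the codec's workload changes, nothing pulls the audio back into line.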

Final Thoughts

There are several ways to deploy AV-over-IP applications. It is extremely important to consider architectures and solution stacks that not only serve today’s needs but anticipate tomorrow’s. Choosing the right solution will greatly simplify an otherwise complex world of AV: one that recognizes the need for time synchronization and the separate but time-synchronous nature of audio, video, metadata, and control flows; supports a wide variety of products from an array of manufacturers; is proven, widely deployed, and market-tested; and provides a roadmap for future innovation.