Datacenter GPUs may only last from one to three years, depending on their utilization rate, according to a high-ranking Alphabet specialist, quoted by Tech Fund. As GPUs do all the heavy lifting for AI training and inference, they are the components that are under considerable load at all times and therefore they degrade faster than other components.

The utilization rate of GPUs running AI workloads in datacenters operated by cloud service providers (CSPs) is between 60% and 70%. At such utilization rates, a GPU will typically survive between one and two years, three years at most, according to a quote allegedly made by a principal generative AI architect from Alphabet and reported by @techfund, a long-term tech investor with good sources.

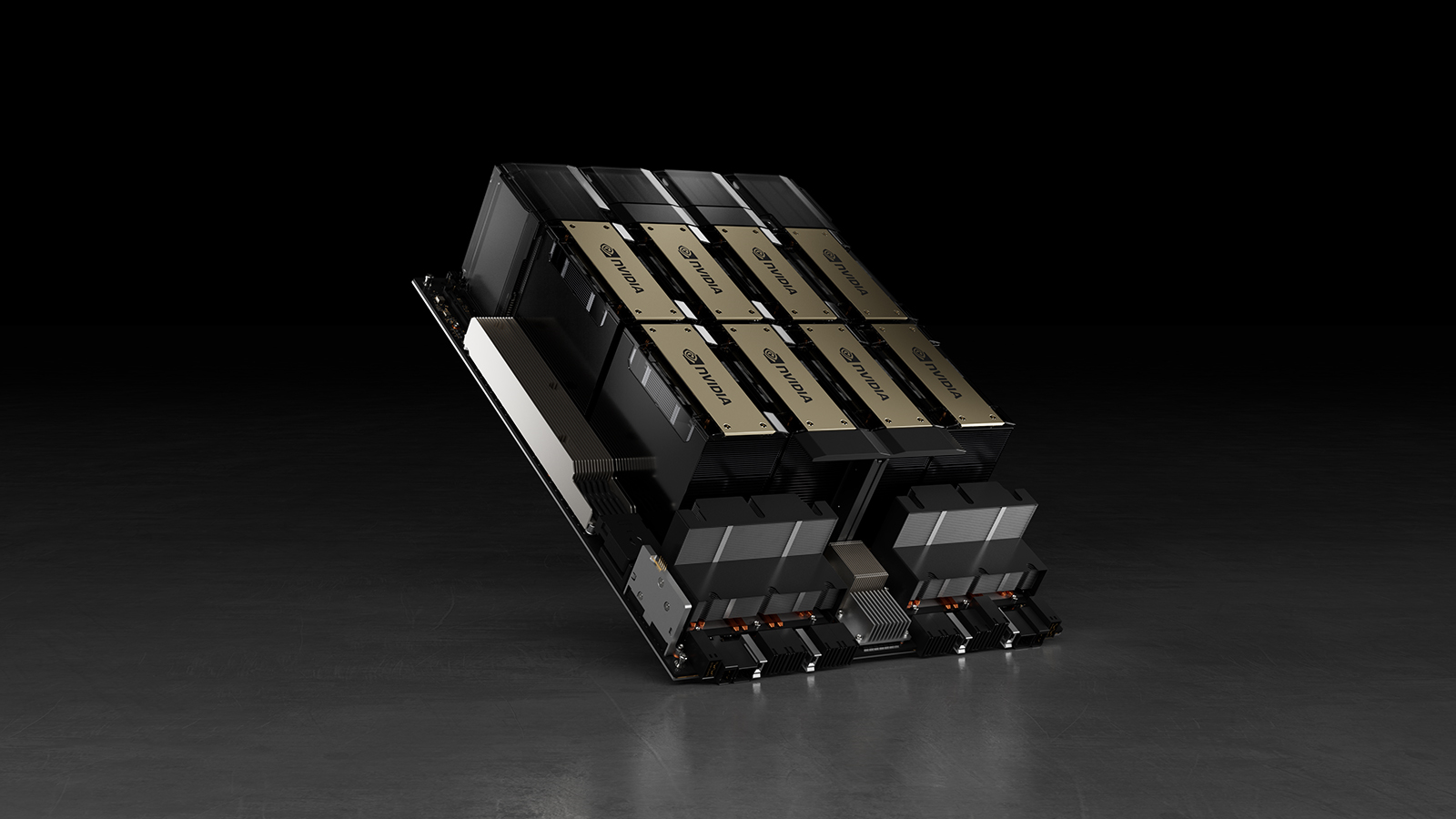

We could not verify the name of the person who describes themselves as 'GenAI principal architect at Alphabet' and therefore we cannot fully trust their claims. Nonetheless, the claim appears to have merit: modern datacenter GPUs for AI and HPC applications consume and dissipate 700W of power or more, which is considerable stress for tiny pieces of silicon.

There is a way to prolong the lifespan of a GPU, according to the speaker: reduce its utilization rate. However, this means that GPUs will depreciate more slowly and return their capital more slowly, which is not particularly good for business. As a result, most cloud service providers will prefer to run their GPUs at a high utilization rate.

Earlier this year Meta released a study describing the training of its Llama 3 405B model on a cluster powered by 16,384 Nvidia H100 80GB GPUs. The model FLOPs utilization (MFU) rate of the cluster was about 38% (using BF16), and yet out of 419 unexpected interruptions (during a 54-day pre-training snapshot), 148 (30.1%) were caused by various GPU failures (including NVLink failures), whereas 72 (17.2%) were caused by HBM3 memory failures.

Meta's results seem to be quite favorable for H100 GPUs. If GPUs and their memory keep failing at Meta's rate, then the annualized failure rate of these processors will be around 9%, whereas the cumulative failure rate over three years will be approximately 27%, though it is likely that GPUs fail more often after a year in service.
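
For readers who want to check the arithmetic behind those two figures, here is a minimal Python sketch. It uses only the numbers quoted above and rests on two simplifying assumptions of ours, not Meta's: that every GPU or HBM3 interruption corresponds to one distinct failed GPU, and that the failure rate stays constant over time. The function and variable names are illustrative.

```python
# Figures quoted in the article for Meta's 54-day Llama 3 405B pre-training snapshot.
GPU_COUNT = 16_384      # Nvidia H100 80GB GPUs in the cluster
SNAPSHOT_DAYS = 54      # length of the observation window
GPU_FAILURES = 148      # interruptions attributed to GPU faults (incl. NVLink)
HBM3_FAILURES = 72      # interruptions attributed to HBM3 memory


def annualized_failure_rate(failures: int, units: int, days: int) -> float:
    """Linearly scale the observed failure fraction to a 365-day year.

    Assumption: each failure event takes out one distinct unit.
    """
    return failures / units * 365 / days


afr = annualized_failure_rate(GPU_FAILURES + HBM3_FAILURES, GPU_COUNT, SNAPSHOT_DAYS)

# Simple linear extrapolation, which is how a figure near 27% is reached.
three_year_linear = 3 * afr

# Alternative under the same constant-rate assumption: the share of GPUs
# that fail at least once in three years, compounding year over year.
three_year_compound = 1 - (1 - afr) ** 3

print(f"Annualized failure rate: {afr:.1%}")
print(f"Three-year cumulative (linear): {three_year_linear:.1%}")
print(f"Three-year cumulative (compound): {three_year_compound:.1%}")
```

The linear figure slightly overstates the share of GPUs affected, because it counts a unit that fails twice as two failures. The compound figure comes out a little lower, but neither accounts for failure rates rising as hardware ages.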