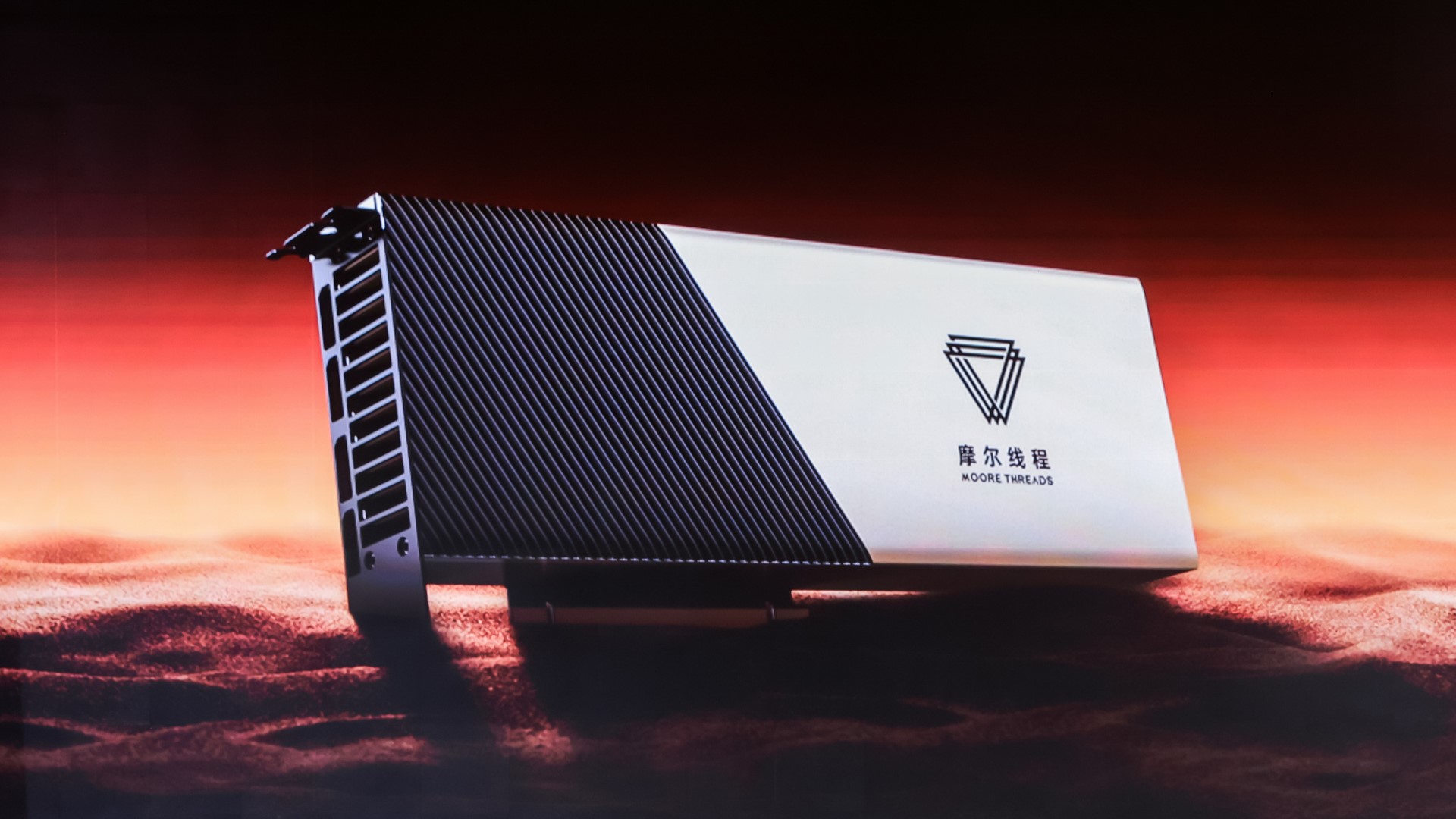

Moore Threads claims to be making great strides in its AI GPU development, with its latest S4000 AI GPU accelerator being dramatically faster than its predecessor. As reported by cnBeta, a training run on the new Kua'e Qianka Intelligent Computing Cluster, built from S4000 GPUs, ranked third fastest in AI testing, outperforming several comparable clusters built from Nvidia AI GPUs.

The benchmark results come from a stability test of the Kua'e Qianka Intelligent Computing Cluster. Training took a total of 13.2 days and reportedly ran without a single fault or interruption for the entire run. The AI model used to benchmark the new computing cluster was the MT-infini-3B large language model.

The new computing cluster reportedly ranks among the top AI GPU clusters of the same scale (presumably meaning clusters with the same number of GPUs). However, the above table is decidedly lacking in details. For example, the MTT S4000 cluster was compared to unspecified Nvidia GPUs; we don't know whether those are A100, H100, or H200 GPUs, though we suspect the A100 is most likely. The workloads also aren't the same: training MT-infini-3B could be quite different from training Llama3-3B, for example. Sprinkle liberally with salt, in other words.

Even without an apples-to-apples comparison, however, training LLMs on Moore Threads GPUs represents an important step in China's domestic GPU roadmap. The Kua'e Qianka computing cluster at least suggests the MTT S4000 AI GPUs are competitive with Nvidia's prior-generation, Ampere-based A100. This is backed up by the S4000's raw performance numbers, which not only significantly outperform Moore Threads' S3000 and S2000 AI GPU predecessors but also outperform Nvidia's Turing-based AI accelerators. The S4000 doesn't match Nvidia's A100 AI GPU accelerators, but it may not be far from Ampere performance levels.

For Moore Threads, the Kua'e Qianka's performance is a huge win regardless of which Nvidia GPUs or LLMs were tested. It demonstrates that Moore Threads can now build AI GPUs capable of doing the same kind of work as AI GPUs from competitors such as Nvidia, AMD, and Intel. They might not perform better, but they're an important stepping stone on the path to faster and more capable supercomputers and AI clusters.

It's an impressive feat for a GPU manufacturer that was founded less than five years ago. If Moore Threads can keep delivering significant generational performance improvements, it could have an AI GPU accelerator that can go toe-to-toe with its Western counterparts within the next few years. That's a big "if," of course, and historical precedent shows that GPU development doesn't always go as planned.

We'll also be interested to see if Moore Threads can put its seemingly good AI performance capabilities into its gaming graphics cards. To date, the MTT GPUs have suffered badly in gaming tests, thanks in part to immature drivers/optimizations. While AI needs lots of computational power, it's different from real-time computer graphics, so expertise in one area doesn't imply similar capabilities in the other.