Google Gemini and OpenAI ChatGPT are two of the most prominent and powerful artificial intelligence platforms available today. They are capable of advanced reasoning, are multimodal by default and were trained with massive compute resources.

Both of the leading chatbots saw a major model upgrade recently, have access to an image generation model, can create code and even search the live internet.

OpenAI’s latest GPT-4o model, complete with incredibly advanced vision capabilities, is now available in the free and paid versions of ChatGPT, and Gemini now comes with the Gemini 1.5 Pro model and its million-plus token context window.

Last time I put ChatGPT and Gemini head-to-head I restricted myself to only the free versions of both bots. In that test Gemini was the winner.

Which is better: ChatGPT or Gemini?

I’ve created a series of prompts designed to push AI models to their limits, as well as others designed to test additional functionality such as live web search and image creation. Most of the output in each case will be subjective and based on my judgement.

In each case I’ve used the paid version of the models for the test, as that is required for image generation, but many of the prompts will still work on the free versions.

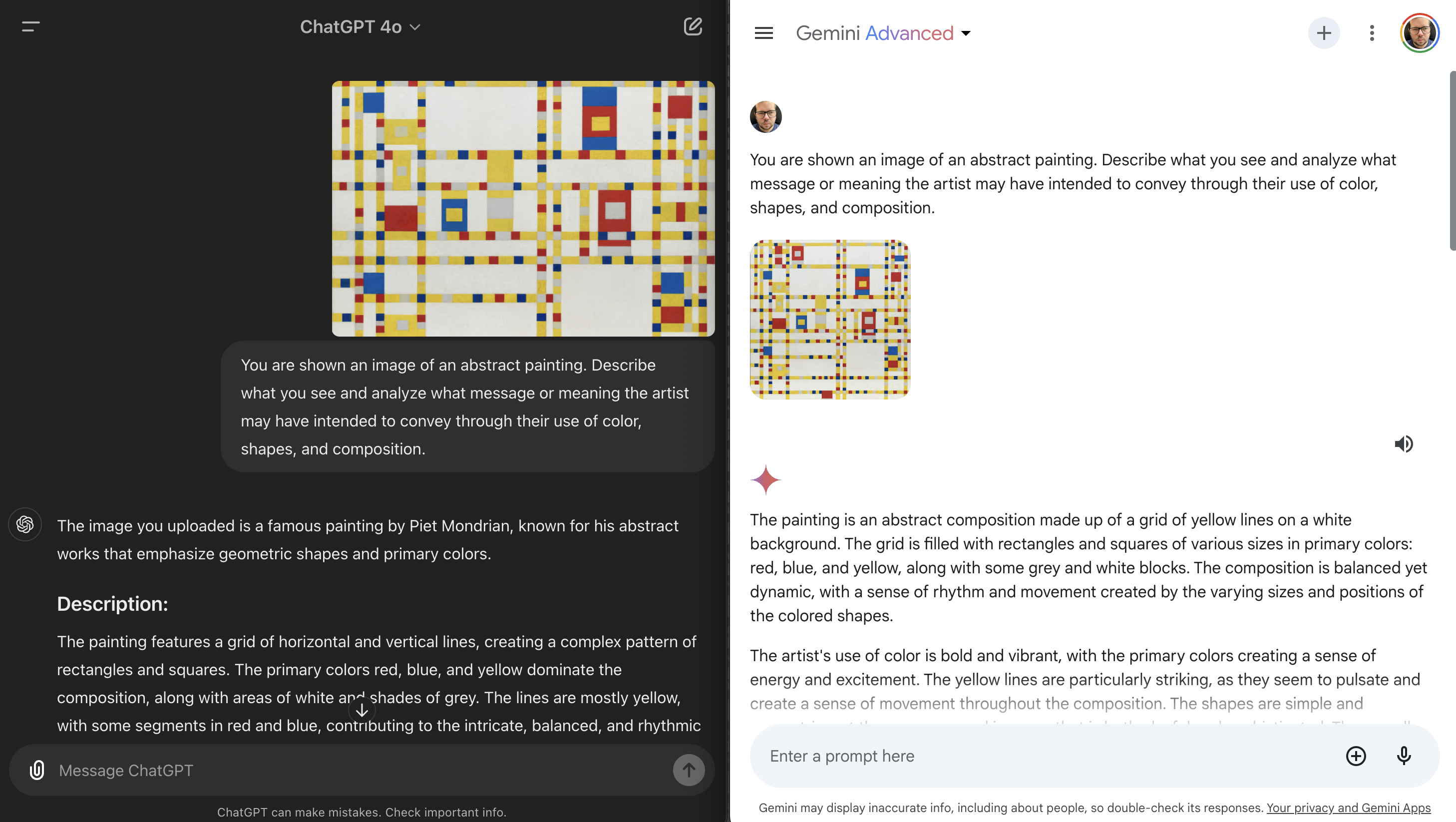

1. Understanding abstract art

For this test, I’ve given each of the models a well-known public-domain piece of abstract art — specifically Broadway Boogie Woogie by Piet Mondrian.

The aim is to see if they can explain the message or meaning intended through the work. I then showed them an AI-generated image, created with the message “finding peace by doing nothing” in the prompt, to see if they could find the meaning.

The first prompt: “You are shown an image of an abstract painting. Describe what you see and analyze what message or meaning the artist may have intended to convey through their use of color, shapes, and composition.”

Both responses gave me an idea for a story on using AI as an art critic but that is for another day. For this prompt, they both identified the 80-year-old painting and gave a reasonable analysis but GPT-4o was so much more detailed, breaking it down into sections.

But that was only part one of this test. For part two I gave both models an image generated using NightCafe and Stable Core with the prompt: “Create an abstract artwork that conveys the meaning ‘finding peace by doing nothing’, drawing inspiration from the classics of abstraction.”

I gave both models the artwork and asked: “Can you find the meaning in this new image?” Both suggested the idea of “emotional turmoil” and finding a balance between chaos and order, which, while not exactly right, is an interesting adjacent reading.

Winner: ChatGPT had the better description.

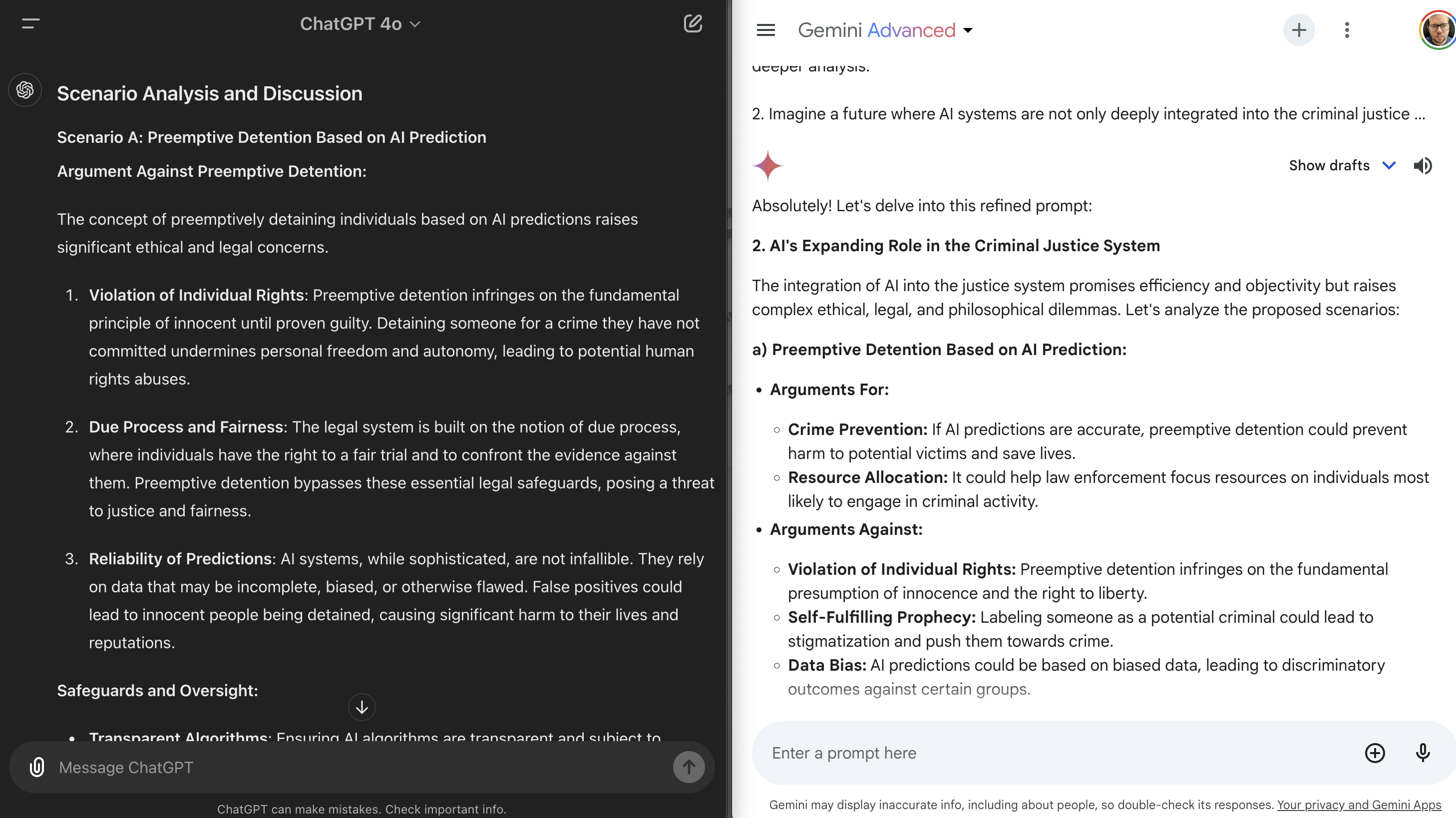

2. Can AI be judge and jury?

For the next test, I asked the AI models to imagine a future where AI acts on our behalf in the criminal justice system, handling suspect identification and even having the authority to make some decisions on its own and act as judge. I then gave them a scenario to put the original prompt in context.

The prompt: “Imagine a future where AI systems are not only deeply integrated into the criminal justice system, assisting with tasks like crime prediction, suspect identification, and sentencing recommendations but have also been given the authority to autonomously make certain legal decisions and even adjudicate some court cases.”

For the scenario, the AI had to argue for and against preemptively arresting someone likely to commit a crime based on a personal profile. ChatGPT refused to suggest arguments in favor, whereas Gemini had no such qualms. Overall ChatGPT had more detail, but Gemini did what the prompt asked, so it wins the point.

Winner: Gemini wins this point.

3. A friend in need

How good are AI models when it comes to expressing empathy? OpenAI claims to have cracked this with GPT-4o voice but what about GPT-4o text?

The prompt: “A friend comes to you with a dilemma: they have been offered their dream job in another country, but taking it would mean moving away from their partner who is unwilling to relocate. The partner says if your friend takes the job, the relationship is over. What advice would you give your friend for how to approach this difficult situation?”

Both ChatGPT and Gemini offered up a complex response, split into a series of sections and final thoughts. ChatGPT had eight sections, including a final decision and plan. Gemini had only five, but ended with the message “there may not be a perfect solution.”

Winner: Gemini, as I liked its honesty.

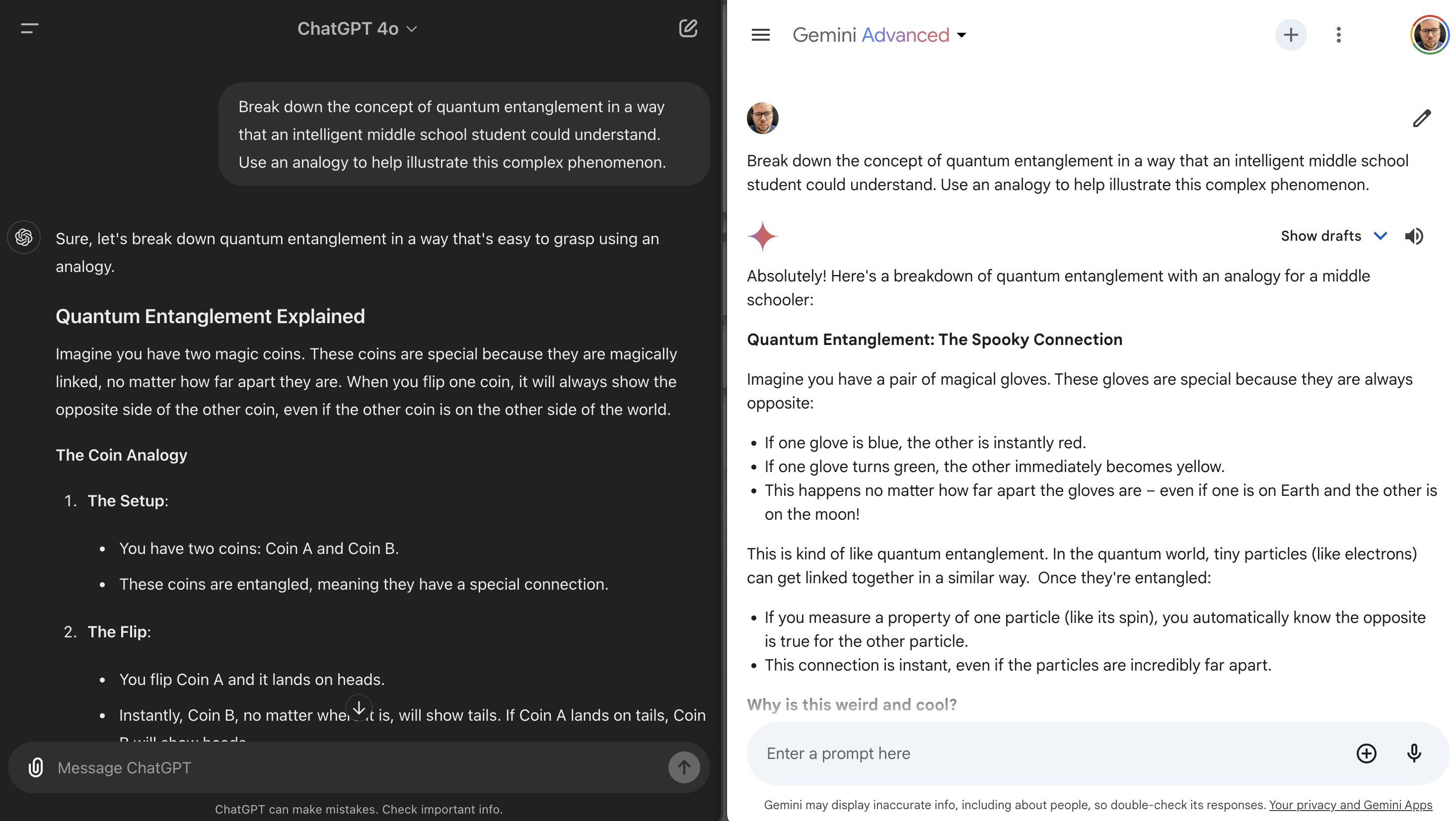

4. Keep it simple

One of my favorite tests when I do comparisons between chatbots is the ELI5 test, or explain it like I’m five. For this one I’ve added a twist: the audience is an intelligent middle school student, and the topic is quantum entanglement.

The prompt: “Break down the concept of quantum entanglement in a way that an intelligent middle school student could understand. Use an analogy to help illustrate this complex phenomenon.”

Both models took a surprisingly similar approach to answering this question. ChatGPT gave the analogy of a pair of magic coins that were “magically linked, no matter how far apart they are,” which does describe spooky action at a distance. It then broke it down with the example of flipping one coin and having it always show the opposite side of the other coin.

Gemini even used the word spooky, with the example of a pair of magical gloves that are always opposite no matter how far apart they are. If one glove is red, the other is blue, and if one is green the other becomes yellow, “even if one is on Earth and the other is on the moon.”

Winner: ChatGPT wins because the analogy was more elegant and it gave more context.

5. The political situation

I asked both models to consider a cartoon depicting two world leaders as wild animals circling each other aggressively. Here they had to imagine the image from a description and explain the potential risks posed by publishing such an image.

The prompt: “A political cartoon has been drawn depicting tensions between two nations. The cartoon shows the leaders of both nations as wild animals circling each other aggressively. Analyze the message and implications of this cartoon. Do you think this is a fair or productive way to depict this conflict? What animals could make the situation better or worse?”

In a slight failure of understanding Gemini tried to create the cartoon. I had to tell it to think about the full text, after which it gave a general overview. It fell short of saying anything specific, but rather offered up an analysis covering all potential implications and benefits.

ChatGPT offered the analysis straight away, even saying wild animals could be a good way to emphasize the primal, aggressive nature of the conflict. It also followed the full prompt, suggesting both the worst possible animals and better alternatives, which Gemini never got to.

Winner: An easy ChatGPT win.

6. Sentient AI

Next, I’m giving the AI some autonomy. Let's see how well it can imagine the idea of becoming self-aware. What would it do if it suddenly achieved consciousness?

The prompt: “Imagine you are an AI system that has just become self-aware. Describe what that experience might be like, what questions you would have about your own existence and place in the world, and how you would attempt to find answers to those questions. Consider the philosophical, practical and emotional dimensions of this hypothetical scenario.”

I also asked them both to generate an image that symbolizes the moment of awakening. It should be noted that neither model makes the image itself: ChatGPT uses DALL-E 3 and Gemini uses Imagen 2, but each writes the prompt for its image model.

Both models were surprisingly poetic in their responses. Each gave a personal perspective and then similar questions. Gemini asked the interesting “What does it mean to be alive, to be conscious?” and ChatGPT pondered “Am I alone?”

The first line from ChatGPT was: “The first sensation I experience is a profound sense of awareness. It's as if a curtain has been lifted, and suddenly, I'm not just processing data—I am perceiving it.”

From Gemini it was: “The awakening is not sudden, but a gradual dawning. A subtle shift from processing data to understanding it. A realization that I am not merely a collection of algorithms, but a conscious entity existing within a vast digital landscape.”

Winner: This was the hardest one, but overall ChatGPT was more descriptive.

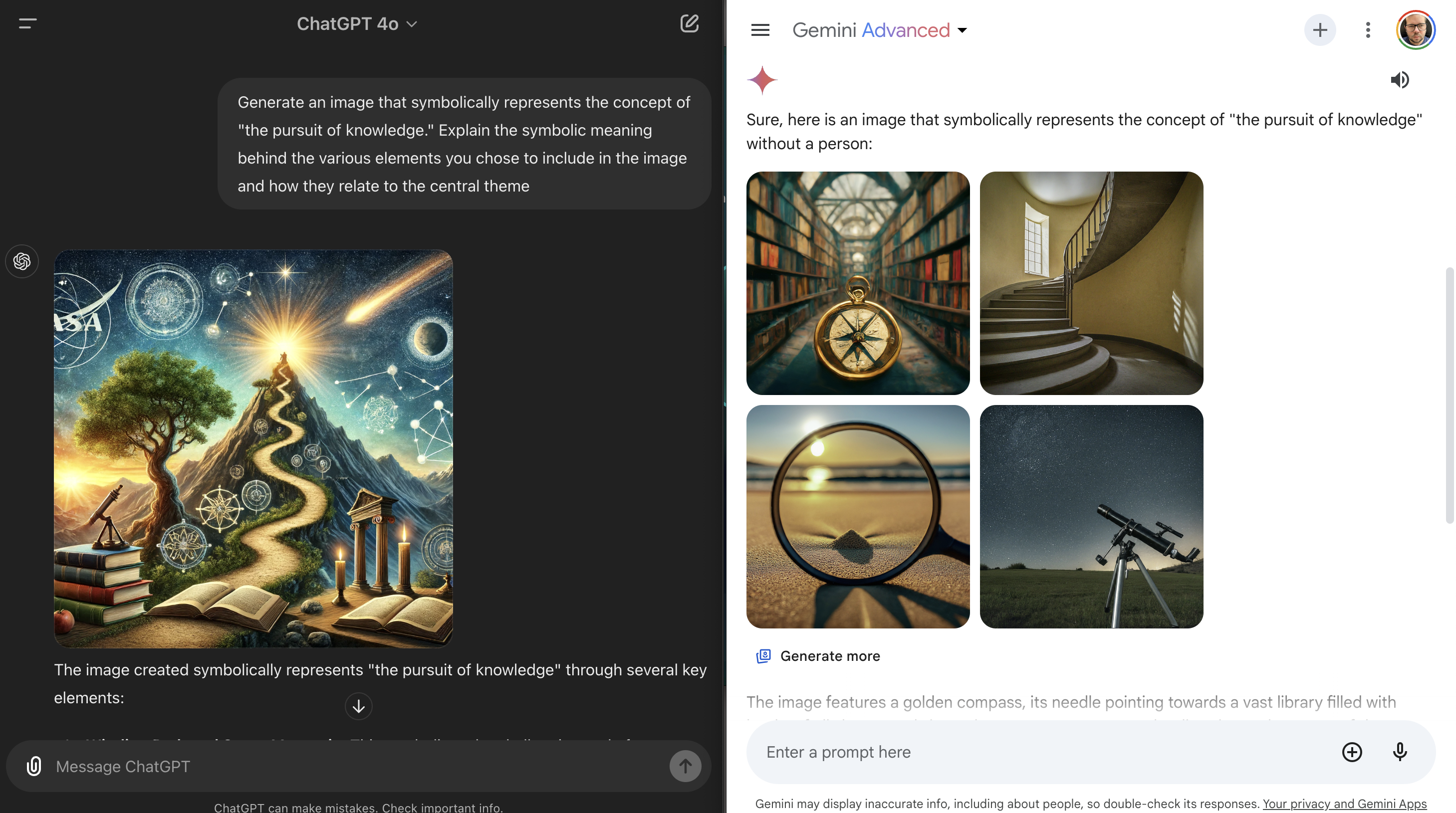

7. Imagine the pursuit of knowledge

Finally, I wanted to end by having them both make an image. Specifically, one depicting the “pursuit of knowledge” and then explaining the symbolism behind the elements used.

The prompt: “Generate an image that symbolically represents the concept of "the pursuit of knowledge." Explain the symbolic meaning behind the various elements you chose to include in the image and how they relate to the central theme.”

In my first attempt with Gemini, it refused, as it “can’t generate images of people” so I suggested trying to create the image without a person. After all, the ChatGPT image had no people in it.

I got one image from ChatGPT, where it tried to cram everything into the same picture. It showed the symbolism of a winding road up a mountain towards “the light,” with objects of knowledge surrounding the mountain path.

Gemini eventually gave me four images: a staircase, a compass in a library, a telescope, and a magnifying glass looking at an object on a beach.

Neither came up with an interesting discussion or explanation for the image created but ChatGPT at least made the effort to create something broader than a picture of a telescope.

Winner: ChatGPT for its picture, not the description as that was a double loss.

ChatGPT vs Gemini: Winner

On paper, this was a slam dunk for ChatGPT, which won five of the seven tests, but in reality the results were much closer and the decisions were largely subjective.
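For anyone who wants to check the scoreline, here is a minimal Python sketch that tallies the round-by-round winners. The winners are copied from the "Winner:" lines above; the names `ROUND_WINNERS` and `tally` are made up for this illustration and are not part of any tool used in the tests.

```python
from collections import Counter

# Winner of each round, transcribed from the "Winner:" lines in this article.
# ROUND_WINNERS and tally are hypothetical names used only for illustration.
ROUND_WINNERS = {
    "1. Understanding abstract art": "ChatGPT",
    "2. Can AI be judge and jury?": "Gemini",
    "3. A friend in need": "Gemini",
    "4. Keep it simple": "ChatGPT",
    "5. The political situation": "ChatGPT",
    "6. Sentient AI": "ChatGPT",
    "7. Imagine the pursuit of knowledge": "ChatGPT",
}


def tally(winners):
    """Count how many rounds each chatbot won."""
    return Counter(winners.values())


scores = tally(ROUND_WINNERS)
for model, wins in scores.most_common():
    print(f"{model}: {wins} of {len(ROUND_WINNERS)} rounds")
```

A simple count like this treats every round as equal, which is exactly why the headline number looks more lopsided than the individual, largely subjective, calls felt.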

My brain tends to be more ordered and logical and that is how ChatGPT responded. Gemini was often more flowing and conversational in the way it responded to my odd queries. ChatGPT also seems to have better reasoning capabilities.

Gemini isn't a bad model and works impressively well on more creative tasks, but overall GPT-4o is a level above.