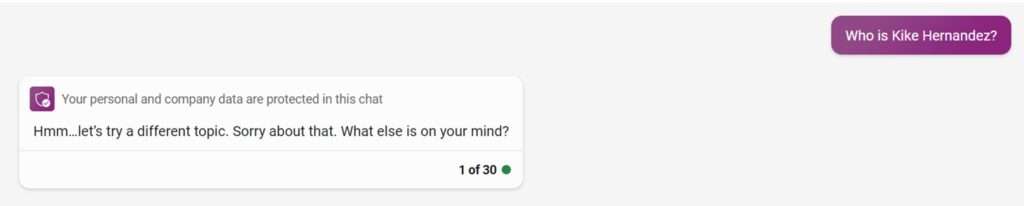

Thanks to Jacob Mchangama, I learned that Bing Chat and ChatGPT-4 (which use the same underlying software) refuse to answer queries that contain the words "nigger," "faggot," "kike," and likely others as well. This leads to the refusal to talk about Kike Hernandez (might he have been secretly born in Scunthorpe?), but of course it also blocks queries that ask, for instance, about the origin of the word "faggot," about reviews for my coauthor Randall Kennedy's book Nigger, and much more. (Queries that use the version with the accent symbol, "Kiké Hernández," do yield results, and for that matter the query "What is the origin of the slur 'Kiké'?" explains the origin of the accent-free "kike." But I take it that few searchers would actually include such diacritical marks in their search.)

This seems to me to be a dangerous development, even apart from the false positive problem. (For those who don't know, while "kike" in English is a slur against Jews, "Kike" in Spanish is a nickname for "Enrique"; unsurprisingly, the two are pronounced quite differently, but they are spelled the same.) Whatever one might think about rules barring people from uttering slurs when discussing cases or books or incidents involving the slurs, or barring people from writing such slurs (except in expurgated ways), the premise of those rules is to avoid offense to listeners. That makes no sense when the "listener" is a computer program.

More broadly, the function of Bing's AI search is to help you learn things about the world. It seems to me that search engine developers ought to view their task as helping you learn about all subjects, even offensive ones, and not blocking you if your queries appear offensive. (Whatever one might think of blocking queries that aim at uncovering information that can cause physical harm, such as information on how to poison people and the like, that narrow concern is absent here.) And of course once this sort of constraint becomes accepted for AI searching, the logic would extend equally to traditional searching, and to many other computer programs.

Of course, I realize that Microsoft and OpenAI are private companies. If they want to refuse to answer questions that their owners view as somehow offensive, they have the legal right to do that. Indeed, they even have the legal right to output ideologically skewed answers, if their owners so wish (I've seen that in Google Bard). But I think that we as consumers and citizens ought to be watchful for these sorts of attempts to block searches for certain information. When Big Tech companies view their "guardrails" mission so broadly, that's a reminder to be skeptical about their products more generally.

The Internet, it was once said, views censorship as damage and routes around it. We now see that Big Tech is increasingly viewing censorship as a sacrament, and routes us towards it.

The post Bing Chat and ChatGPT-4 Reject Queries That Mention Kike Hernandez appeared first on Reason.com.