When we write about AI supercomputers with tens or even hundreds of thousands of processors, we usually mean systems powered by Nvidia's Hopper or Blackwell GPUs. But Nvidia is not alone in building ultra-demanding supercomputers for AI: Amazon Web Services said this week that it is building a machine with hundreds of thousands of its Trainium2 processors to achieve performance of roughly 65 ExaFLOPS for AI. The company also unveiled its Trainium3 processor, which will quadruple performance compared to the Trainium2.

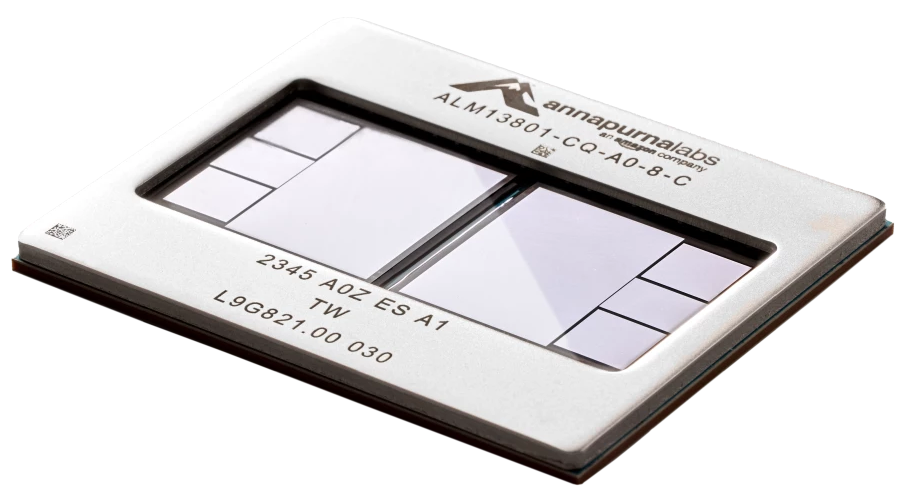

The AWS Trainium2 is Amazon's second-generation AI accelerator, designed for foundation models (FMs) and large language models (LLMs) and developed by Amazon's Annapurna Labs. The unit is a multi-tile system-in-package with two compute tiles, 96GB of HBM3 using four stacks, and two static chiplets for package uniformity. When AWS introduced Trainium2 last year, it did not share any specific performance figures for the chip, but it stated that Trn2 instances could scale up to 100,000 processors and deliver 65 ExaFLOPS of low-precision compute performance for AI. Dividing 65 ExaFLOPS by 100,000 processors implies that a single chip could deliver up to 650 TFLOPS. But it looks like that was a conservative estimate.

At its re:Invent 2024 conference AWS made three Trainium2-related announcements:

First, the AWS Trainium2-based Amazon Elastic Compute Cloud (Amazon EC2) Trn2 instances are now generally available. These instances feature 16 Trainium2 processors connected over NeuronLink that deliver up to 20.8 FP8 PetaFLOPS of performance and 1.5 TB of HBM3 memory with a peak bandwidth of 46 TB/s. This essentially indicates that each Trainium2 offers up to 1.3 PetaFLOPS of FP8 performance for AI, which is twice the 650 TFLOPS figure implied last year. Perhaps AWS found a way to optimize the processor's performance, or maybe it cited FP16 numbers previously, but 1.3 PetaFLOPS of FP8 performance is in the same class as the Nvidia H100's FP8 performance of 1.98 PetaFLOPS (without sparsity).
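The per-chip numbers follow from simple division of the instance-level figures. The short Python sketch below reproduces that arithmetic; the variable names are ours, and the conversion of 1.5 TB to gigabytes assumes binary units (1 TB = 1,024 GB), which is an assumption rather than something AWS states.

```python
# Figures quoted for one EC2 Trn2 instance.
CHIPS_PER_INSTANCE = 16
INSTANCE_FP8_PFLOPS = 20.8
INSTANCE_HBM_TB = 1.5
INSTANCE_BW_TBPS = 46.0

# Last year's implied per-chip figure: 65 ExaFLOPS across 100,000 chips.
# 65 ExaFLOPS = 65,000 PetaFLOPS.
PRIOR_PER_CHIP_PFLOPS = 65_000 / 100_000

per_chip_pflops = INSTANCE_FP8_PFLOPS / CHIPS_PER_INSTANCE
# Assumption: binary units, so 1.5 TB is treated as 1,536 GB.
per_chip_hbm_gb = INSTANCE_HBM_TB * 1024 / CHIPS_PER_INSTANCE
per_chip_bw_tbps = INSTANCE_BW_TBPS / CHIPS_PER_INSTANCE
ratio_vs_prior = per_chip_pflops / PRIOR_PER_CHIP_PFLOPS

print(f"FP8 per chip:       {per_chip_pflops:.2f} PFLOPS")
print(f"HBM3 per chip:      {per_chip_hbm_gb:.0f} GB")
print(f"Bandwidth per chip: {per_chip_bw_tbps:.3f} TB/s")
print(f"Versus last year:   {ratio_vs_prior:.1f}x")
```

The 96 GB result matches the per-package HBM3 capacity cited for Trainium2, which suggests the instance figures are straightforward sums over 16 chips.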

Second, AWS is building EC2 Trn2 UltraServers with 64 interconnected Trainium2 chips that offer 83.2 FP8 PetaFLOPS of performance as well as 6 TB of HBM3 memory with a peak bandwidth of 185 TB/s. The machines use 12.8 Tb/s Elastic Fabric Adapter (EFA) networking for interconnection.
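As a sanity check, the UltraServer figures can be compared against a naive 64x scaling of per-chip values. The sketch below assumes 1.3 FP8 PetaFLOPS, 96 GB of HBM3, and 2.875 TB/s per chip (the last being 46 TB/s divided by 16, our derivation rather than an AWS-quoted number).

```python
# Assumed per-chip values (derived from the 16-chip Trn2 instance figures).
CHIP_FP8_PFLOPS = 1.3
CHIP_HBM_GB = 96
CHIP_BW_TBPS = 46.0 / 16  # 2.875 TB/s, our derivation

CHIPS_PER_ULTRASERVER = 64

# Figures quoted for one Trn2 UltraServer.
QUOTED = {"fp8_pflops": 83.2, "hbm_tb": 6.0, "bw_tbps": 185.0}

scaled = {
    "fp8_pflops": CHIP_FP8_PFLOPS * CHIPS_PER_ULTRASERVER,
    "hbm_tb": CHIP_HBM_GB * CHIPS_PER_ULTRASERVER / 1024,  # binary TB assumed
    "bw_tbps": CHIP_BW_TBPS * CHIPS_PER_ULTRASERVER,
}

for key, quoted_value in QUOTED.items():
    diff_pct = 100 * (scaled[key] - quoted_value) / quoted_value
    print(f"{key}: scaled={scaled[key]:.1f} quoted={quoted_value:.1f} ({diff_pct:+.2f}%)")
```

Compute and memory scale exactly, while bandwidth comes out at 184 TB/s against the quoted 185 TB/s, a gap of about half a percent that is most likely a rounding effect in the published numbers.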

Finally, AWS and Anthropic are building a gigantic EC2 UltraCluster of Trn2 UltraServers, codenamed Project Rainier. The system will be powered by hundreds of thousands of Trainium2 processors that offer five times more ExaFLOPS performance than Anthropic currently uses to train its leading AI models, such as Sonnet and Opus. The machine is expected to be interconnected with third-generation, low-latency, petabit-scale EFA networking.

AWS does not disclose how many Trainium2 processors the EC2 UltraCluster will use, but if we assume 100,000 processors, the maximum scalability cited for Trn2 instances, the system would deliver around 130 FP8 ExaFLOPS. That is quite massive: it is roughly equivalent to 65,000 Nvidia H100 processors at their dense FP8 rate of 1.98 PetaFLOPS, or to about 32,800 H100s if Nvidia's sparse FP8 rate of roughly 3.96 PetaFLOPS is used instead.
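The size of the H100 comparison depends heavily on which H100 FP8 rate is assumed. The Python sketch below works through the estimate under two assumptions: the 100,000-chip count (a guess, since AWS has not disclosed it) and an H100 sparse FP8 rate of twice the dense 1.98 PetaFLOPS figure.

```python
# Assumptions: chip count is NOT disclosed by AWS; 100,000 is a guess
# based on the maximum scalability cited for Trn2 instances.
ASSUMED_TRN2_CHIPS = 100_000
TRN2_FP8_PFLOPS = 1.3

# H100 FP8 peak rates. Dense figure as quoted in the article;
# the sparse rate is assumed to be 2x dense.
H100_FP8_DENSE_PFLOPS = 1.98
H100_FP8_SPARSE_PFLOPS = 2 * H100_FP8_DENSE_PFLOPS

cluster_pflops = TRN2_FP8_PFLOPS * ASSUMED_TRN2_CHIPS
cluster_eflops = cluster_pflops / 1000  # 1 ExaFLOPS = 1,000 PetaFLOPS

h100_dense_equiv = cluster_pflops / H100_FP8_DENSE_PFLOPS
h100_sparse_equiv = cluster_pflops / H100_FP8_SPARSE_PFLOPS

print(f"Cluster estimate:        {cluster_eflops:.0f} FP8 ExaFLOPS")
print(f"H100 equivalent (dense):  {h100_dense_equiv:,.0f}")
print(f"H100 equivalent (sparse): {h100_sparse_equiv:,.0f}")
```

Peak-FLOPS equivalence is only a rough yardstick: it says nothing about memory capacity, interconnect, or achieved utilization on real training workloads.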

"Trainium2 is purpose-built to support the largest, most cutting-edge generative AI workloads, for both training and inference, and to deliver the best price performance on AWS," said David Brown, vice president of Compute and Networking at AWS. "With models approaching trillions of parameters, we understand customers also need a novel approach to train and run these massive workloads. New Trn2 UltraServers offer the fastest training and inference performance on AWS and help organizations of all sizes train and deploy the world’s largest models faster and at a lower cost."

In addition, AWS has introduced its next-generation Trainium3 processor, which will be made on TSMC's 3nm-class process technology, deliver higher performance than its predecessors, and become available to AWS customers in 2025. Amazon expects Trn3 UltraServers to be four times faster than Trn2 UltraServers, which would mean 332.8 FP8 PetaFLOPS per machine and 5.2 FP8 PetaFLOPS per processor if the number of processors remains at 64.
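The Trainium3 projection is a straightforward extrapolation from the Trn2 UltraServer. The sketch below shows the arithmetic, assuming the 4x claim applies to peak FP8 throughput and that a Trn3 UltraServer keeps 64 processors; neither assumption has been confirmed by AWS.

```python
# Quoted figure for a Trn2 UltraServer.
TRN2_ULTRASERVER_FP8_PFLOPS = 83.2

# Assumptions: the 4x speedup applies to peak FP8 throughput,
# and the chip count per UltraServer stays at 64.
TRN3_SPEEDUP = 4
ASSUMED_CHIPS_PER_ULTRASERVER = 64

trn3_ultraserver_pflops = TRN2_ULTRASERVER_FP8_PFLOPS * TRN3_SPEEDUP
trn3_per_chip_pflops = trn3_ultraserver_pflops / ASSUMED_CHIPS_PER_ULTRASERVER

print(f"Trn3 UltraServer: {trn3_ultraserver_pflops:.1f} FP8 PFLOPS")
print(f"Trainium3 chip:   {trn3_per_chip_pflops:.1f} FP8 PFLOPS")
```

If AWS changes the number of chips per UltraServer, the per-chip figure shifts accordingly while the per-machine figure stays the same.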