PC gaming never changes. Sure as night follows day, elite PC gaming hardware will, often within just a few months of release, be dethroned by something even faster and more powerful. If a PC gamer is lucky, it may take more than a year to happen. But one thing is certain: no matter how much money a PC gamer pumps into top-shelf components for a rig, or how much technical expertise they demonstrate when building it, no amount of high-end silicon, extreme overclocking, or liquid cooling loops will halt the inevitable. Their rig's time ruling the gaming world will be as brief as it is glorious.

Setting the scene

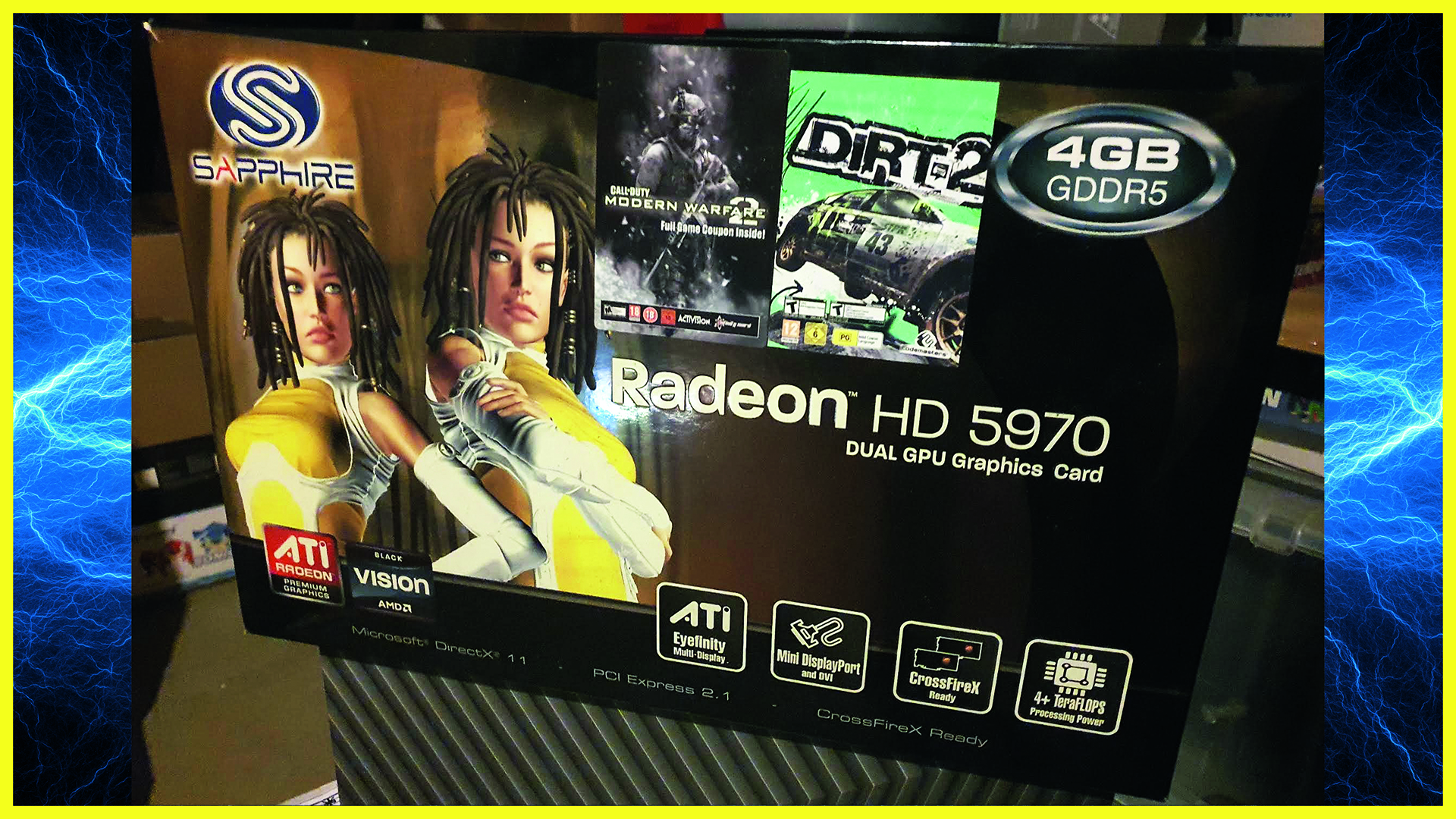

13 years ago, when I was much younger and the 1337 PC gaming hardware fires burned far brighter in my heart, I bought an ATI HD 5970 4GB dual-GPU graphics card for what was, for me at the time, a horrendous amount of money (and, looking back now, I'm sure it was objectively horrendous for basically every PC gamer). But, as I was well aware at the time, this card was going to be the world's most powerful graphics card on release and, as I'd just been paid out of the blue for multiple months of work on a big project, I actually had the money burning a hole in my pocket, which up until that point was, trust me, a very rare occurrence.

After a few weeks of anxious waiting for the card to come into stock and then be dispatched, it finally arrived and I slotted it into my PC gaming rig at the time, which was powered by an Intel Core i7 930 CPU, 16GB of DDR3 RAM, and a Gigabyte Extreme mainboard. Was my system the most powerful in the world? No, but right then, my gaming PC was powered by the world's most powerful single graphics card, a dual-GPU monster that was right on the cutting edge of graphics technology. And I recall the glee of having such immense power on tap to play the age's most graphically impressive games.

And if you think it sounds odd that AMD's ATI brand could rule the GPU roost, it's worth remembering that the late 2000s were a very different time for graphics cards, a landscape far removed from Nvidia's GPU market dominance in 2023, as PC Gamer's hardware expert Dave James recalls:

"It was a different time. A time when AMD could release a new graphics card and it would beat Nvidia's best by a mighty margin. That's what the HD 5870 did when it launched as the fastest single-GPU graphics card in late 2009. But that 'single-GPU' qualification was something we always had to include back then because both companies were filling out the top-ends of their respective ranges with dual-GPU cards, packing SLI and CrossFire GPUs into a single board. In this generation that meant the HD 5970 and GTX 295.

"And honestly it was as bad an idea as SLI and CrossFire were in general, but it did deliver the most powerful graphics cards of their generation… just not the most reliable ones. I've never had more driver issues than when running an AMD multi-GPU card, and I've run a few in my home rig over the years. Y'see, the promise of multi-GPU setups was rarely matched by the implementation, which either offered significantly less than twice the graphics power you would want from two of the best GPUs or games just wouldn't use that extra GPU at all."

Right at the end of the ATI HD 5000 series lifespan, then-ATI master hardware partner Sapphire Technology, which had built a fantastic reputation through the generation for releasing HD 5000 GPUs with enhanced performance and features, decided it would send the series out on a high. It would take the enthusiast-grade ATI HD 5970 dual-GPU card and improve it by bathing it in virtual cheetah blood.

The standard HD 5970 boasted 2GB of GDDR5 memory and a core clock speed of 725MHz, effectively chaining two 1GB HD 5870s together but running them at slower HD 5850-level frequencies. This card retailed for $700 USD RRP. Sapphire's landmark swansong HD 5970 card, on the other hand, would boast 4GB of GDDR5, chain two completely full-fat HD 5870s together, and then overclock the dual-GPU card's memory up to an effective 4,800MHz (an increase of 800MHz) and its core clock speed up to 900MHz (this card was released in some territories at 850MHz for some reason, but it was the same card, and could run at 900MHz out of the box). That was a 24 per cent increase in core clock and a 20 per cent increase in memory clock over the standard card, while also doubling the amount of GDDR5 memory on offer. Sapphire's card broke the bank, retailing for $1,100 USD RRP.
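For anyone who wants to check those uplift figures, here is a minimal Python sketch of the arithmetic. The clock speeds and prices are the ones quoted above; the 4,000MHz baseline for the standard card's memory is inferred from the stated 800MHz increase, and the helper name `percent_increase` is purely illustrative.

```python
def percent_increase(base: float, new: float) -> float:
    """Return how much larger `new` is than `base`, as a percentage."""
    return (new - base) * 100 / base


# Figures quoted in the article. The 4,000MHz memory baseline is an
# assumption derived from "4,800MHz (an increase of 800MHz)".
core = percent_increase(725, 900)       # core clock, MHz
memory = percent_increase(4000, 4800)   # effective memory clock, MHz
price = percent_increase(700, 1100)     # RRP, USD

print(f"Core clock uplift:   {core:.1f}%")
print(f"Memory clock uplift: {memory:.1f}%")
print(f"Price uplift:        {price:.1f}%")
```

By the same sum, the price premium over the standard card was noticeably larger than either clock speed gain.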

And, as surviving reviews of this card from the time show, my bank balance was far from the only thing broken, with this card proceeding to break a large number of benchmark records. For a very brief period of time this was the most powerful graphics card in the world, even if you could muster similar performance, in certain applications, by chaining multiple weaker cards together, either through ATI CrossFire or Nvidia SLI.

But, as ever with PC gaming hardware, time was not kind to this GPU king, as within a year both AMD and Nvidia had released cards that were more powerful. Further, as I recall, I actually only ran this card in my system for a couple of years before switching allegiance to Nvidia, and by that time I'd moved away from making enthusiast-grade PC gaming hardware purchases anyway, so what I bought was far from the world's best and most expensive graphics card. After boxing the HD 5970 4GB back up and thanking it for its service (although not for its pillaging of my bank account), I dumped it in a box and then proceeded to forget all about it.

The attic find

Well, I forgot about the HD 5970 4GB until last month when, over a decade and multiple house moves later, I rediscovered it while rooting around in boxes in my attic for old big box PC games. The box was covered in some dust but there it was, still pristine in-box and with all its accessories and paperwork intact. Naturally, I had to take it out of the box and admire the once King of the Graphics Cards.

And, boy, had I forgotten just what a monster this GPU was in terms of physical proportions. At fully 310mm long, and with a dual-slot design that takes out three mainboard slots once the card's chunky triple-fan cooler is bolted on, Sapphire's card remains imposing and absolutely dwarfs most modern cards. You'll need something on the scale of an RTX 3090 Ti to match its form factor.

It may still be a monster in size, but the next thought that immediately came to my mind was, could it still deliver some sort of gaming performance today in 2023, running some of today's most popular PC games? It seemed far-fetched and it also seemed like a challenge that was almost certainly going to end in spectacular failure, but that was the thing that most appealed to me, the fact it sounded borderline ridiculous. Could a card that first pushed pixels in anger over 13 years ago come out of retirement and run many of the games I'm playing today on PC in 2023?

My goal was to run these games on the retro rig at FHD resolutions and with as many graphical options turned on to their highest settings as possible, only dropping to an HD resolution and knocking down graphical settings if absolutely necessary. Because footage was captured using OBS on the actual system while the games were running, though, all footage was captured at 720p. So while the footage you see is HD, many of the games were actually running in FHD.
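To put the gap between those two resolutions in perspective, here is a small Python sketch of the pixel counts involved. It assumes FHD means 1920x1080 and HD means 1280x720 (the 720p capture resolution mentioned above); the names are illustrative only.

```python
# Assumed resolutions: FHD = 1920x1080, HD = 1280x720 (720p).
FHD = (1920, 1080)
HD = (1280, 720)


def pixel_count(resolution: tuple[int, int]) -> int:
    """Number of pixels the GPU has to draw for one frame."""
    width, height = resolution
    return width * height


fhd_pixels = pixel_count(FHD)
hd_pixels = pixel_count(HD)
ratio = fhd_pixels / hd_pixels

print(f"FHD: {fhd_pixels:,} pixels per frame")
print(f"HD:  {hd_pixels:,} pixels per frame")
print(f"FHD pushes {ratio:.2f}x the pixels of HD")
```

In other words, dropping from FHD to HD cuts the per-frame pixel load by more than half, which is why it is the fallback when a game struggles.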

And, what's more, could I run the card in a machine that was, in terms of hardware, period-accurate and still get results? That was the challenge, and after I'd rooted out and rebuilt my 2009-era machine (I keep all my old PC components as I like building retro rigs, and this system consisted of a socket 1366 Intel Core i7 930 CPU and 16GB of DDR3 RAM housed in a Gigabyte Extreme mainboard), I slotted the card in, banged a fresh copy of Windows 10 onto the machine's SSD (the only two things in the build that aren't period-accurate, as HDDs and Windows Vista can remain in PC gaming hell for all eternity), and started installing games.

But can it run Crysis?

I wanted some sort of benchmark for performance before I began throwing more modern games at the HD 5970 4GB, to remind myself of how the hardware handled the most graphically intensive games of the time. And, if you rewind the clock back to the late 2000s, that game was the now legendary hardware crusher, Crysis. This was the game that created the whole 'But can it run Crysis?' meme, breaking many gaming PCs of the time under its immense requirements. If you had the hardware, though, then no game looked better. Crysis was the graphical powerhouse of the day, that era's equivalent of Cyberpunk 2077 running at max specs with real-time ray tracing overdrive mode turned on. How did it run on the old rig? The video above shows the results.

I knew there was a reason why I bought this card back in 2009, and this video proves it. Crysis runs really well at ultra settings and the gameplay experience delivered by the hardware is excellent still, with a smooth frame rate and strong levels of fidelity. Boy did Crytek reach into the PC gaming future with this game and bring back with them some lovely-looking graphics tech! A stunning game even today, and a big indicator that the HD 5970 4GB still has plenty to offer.

Riding shotgun in GTA 5

With the benchmark established, I now wanted to start moving forward in time. And the next game I thought of that is still very much played today, and indeed still getting new content dropped for it in 2023, is GTA 5, which launched on PC in 2015. This would be a jump forward in time by half a decade from when the card was released, so I was naturally a little worried that this was going to be where my adventure with the HD 5970 4GB ended. Could it run GTA 5? Watch the video above for the surprising results.

What a knockout! Yes, we're absolutely not on maximum settings here, but almost all graphical options are turned to medium and we're gaming at a FHD resolution with a really respectable, smooth frame rate. The HD 5970's 4GB of GDDR5 memory really seems to have insulated it well here from the fierce resource demands of future PC games, with GTA 5's own in-game options menu showing how resolution and graphical options can be pushed higher thanks to the extra memory headroom.

Riding horses in The Witcher 3: Wild Hunt

Buoyed by my ability to smoothly cruise around riding shotgun in GTA 5 using the HD 5970 4GB, I then moved on to another game that released on PC in 2015 and is still a mainstay of PC gaming in 2023, The Witcher 3: Wild Hunt. It's one of my favourite games of all time, and one that has had years of patches and graphical improvements. Could I ride on Roach and maintain a frame rate north of single figures?

Once again, I was surprised at this 13-year-old card's ability to keep punching long after it had been beaten in the power charts by hundreds of other cards. As you can see from the video, the frame rate and fidelity lead to a perfectly playable game, and one that is still presented in a manner that makes CD Projekt Red's fantastic fantasy world shine.

Riding rails in Metro Exodus

Do you know what the most underrated graphically impressive game of the late 2010s is? Yeah, it's 4A Games' Metro Exodus, which first launched on PC in 2019. From the fidelity of the environment, to the detail and animation of the characters, and on to the environmental and weather effects that make it such an atmospheric game, Exodus was a technical beaut on a pimped-out rig back in 2019 and remains a gorgeous game today in 2023. It was released a whole decade after the HD 5970 4GB hit the market, though, so being candid, I definitely thought this was going to be the graphics card's knockout blow.

Is Exodus playable? Just about, and I had fun admiring how the dystopian world that 4A Games presents still looks so atmospheric even running on lower graphical settings, but it's also obvious that the HD 5970 4GB is really on the ropes now and struggling to stay fighting. The scale of what the GPU is being asked to do (along with the CPU and RAM, which were already a decade old when Exodus was released) is taking its pixel-pushing power to the absolute limit, and frame rates are low even at lower graphical settings. Plus, we've got the first really noticeable example of hitching and stuttering. The big question therefore is, can the HD 5970 4GB avoid a technical knockout once we move more than a decade beyond its release?

Riding illithid ships in Baldur's Gate 3

And what better game to try that with than one that initially released within a year of the turn of the decade: Baldur's Gate 3, which launched in Early Access back in October 2020. Since Early Access, running through to the game's full, triumphant release this year, I've played hundreds of hours of Baldur's Gate 3 and I am very familiar with its demands and how it runs on my main system, so I honestly installed this game expecting the worst: a game-over knockout blow to the HD 5970 4GB. The video shows what happened.

The game runs! But the HD 5970 4GB just got a big left hook to the face and has dropped to the canvas, needing a standing eight count. In terms of raw power, the card seems to still have what it takes, with the game absolutely playable in terms of frame rate at medium settings. However, it looks like some sort of nasty driver issue is causing communication problems with, to my untrained, inexpert eyes, the game's shader/rendering pipeline, which in turn is causing some really ugly glowing bars to display horizontally across the screen at all times.

At first I thought this was an issue with VSync settings, but after experimenting with and then exhausting all the graphical options in the in-game menu, including but not limited to running the game in windowed mode, trying double and triple buffering, turning VSync off entirely, altering resolutions, and capping frame rates, I hit a brick wall. I couldn't remove the glowing bars. When I reinstalled the HD 5970 4GB I grabbed the last legacy drivers released for the card, which date from way back in 2015, so I think this is purely a case of the hardware still just about being capable but no longer really being supported by the game. I don't think for one moment that any gamer should experience this masterpiece RPG with these graphical issues, but it is also a stone-cold fact that Baldur's Gate 3 does run, and if it weren't for the glowing bars, you could just about play this game using this hardware. That's some serious kudos in my eyes for the HD 5970 4GB and the retro rig in general, which is running a game that was released a full decade after its own heyday.

Crashing and burning in Cyberpunk 2077 and Starfield

Cyberpunk 2077 was released in late 2020 and Starfield released this year in September 2023, and both of these games represent modern AAA gaming in one way or another. Cyberpunk 2077 has been the game that has set graphical benchmarks for PC gaming for the last three years, while Starfield is the brand new, crazy expansive hotness from Bethesda. Could the HD 5970 4GB installed in my 2009 retro PC gaming rig run either of these games?

The short answer is no. And the long answer is also no, but this answer includes the why. Unfortunately, if I had hit a driver compatibility curb in Baldur's Gate 3, with Cyberpunk 2077 and Starfield the HD 5970 4GB slammed brutally into a massive DirectX 12 wall. Neither game would run, at all, and it was down to DX12.

You see, up until this point, all the games I'd been running on the system as we moved forward in time had allowed me to run them in DirectX 11. However, at some point (approximately around 2020 from what I can see) many games started being released as DirectX 12 exclusives. You simply had to have a DirectX 12-compatible GPU to run them and, released over half a decade before DirectX 12 was even a thing, unfortunately, the HD 5970 4GB just didn't offer that compatibility.

Further, I learned that even if I could manage to hack Starfield to run somehow using the AMD HD 5970 4GB, the Intel Core i7 930 CPU I was running in the rig would also finally be fully beaten, as you need an AVX-compatible CPU to run Bethesda's cosmic RPG, and the i7 930 does not support AVX.
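If you want to check whether an old CPU of your own would hit the same AVX wall, here is a minimal Python sketch. It is an assumption-laden illustration rather than how any game actually performs the check: it only works on Linux, where the kernel lists CPU feature flags in `/proc/cpuinfo`, and the function names `cpu_flags` and `supports_avx` are hypothetical helpers. On Windows you would need a different tool to read the same information.

```python
from pathlib import Path


def cpu_flags(cpuinfo_text: str) -> set[str]:
    """Collect the feature flags listed in /proc/cpuinfo-style text."""
    flags: set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        # x86 kernels label the line "flags"; other lines are ignored.
        if sep and key.strip() == "flags":
            flags.update(value.split())
    return flags


def supports_avx(cpuinfo_text: str) -> bool:
    """True if the exact 'avx' flag is present (avx2 alone does not count)."""
    return "avx" in cpu_flags(cpuinfo_text)


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")  # Linux-only assumption
    if cpuinfo.exists():
        print("AVX supported:", supports_avx(cpuinfo.read_text()))
    else:
        print("No /proc/cpuinfo found; this sketch only works on Linux.")
```

A chip of the i7 930's generation would list flags such as `sse4_2` but no `avx`, so the check would come back false.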

Conclusion and plans for a rematch

When I set out on this mission I always knew there wasn't going to be a fairytale ending. It was inevitable that the former most powerful graphics card in the world would be knocked out at some point in time, but what surprised me the most was not just how far forward in time from its release the card stayed competitive (almost a decade), but that what actually brought it down in the end was not a lack of core power. It wasn't a lack of core clock speed or memory that eventually brought the champion down, but smaller things, like a newer version of DirectX being released, the HD 5970 4GB suddenly not being supported anymore in terms of driver releases, and even small changes in mandatory demands from other components, such as Starfield's need for an AVX-compatible CPU.

What's the take-away from all this? It has reminded me that PC gamers should always remember that any GPU, even the most powerful in the world, is not only going to have a shelf life in terms of the raw power it can potentially offer, but that it will also have a shelf life (which will most likely be an even shorter one) in terms of compatibility within future PC gaming system architectures. And it is that latter point that you really can't control, as it has the potential to render any GPU's core hardware (its manufacturing process/architecture, speed and memory) defunct long before it technically doesn't have the chops to run a game. Buying extra memory or clock speeds now will not save you from this fate in the future.

Yes, if you go out and buy an RTX 4090 today then that card is almost certainly going to deliver a great PC gaming experience for you for years to come, and it absolutely will do so for longer than, say, an RTX 3060. But here's the kicker: there's no guarantee of exactly how long it will be able to do that on its own core performance alone, and despite the thousands of dollars a gamer could potentially spend in buying a world champion graphics card, just like I did all those years ago, its time in the sun will be short.

The other, even bigger take-away from this crazy little mission of mine is that it has reinforced my belief that you simply don't need to run a game at maximum settings to unlock its fun. Yes, it's nice to have high resolutions and frame rates, as well as extra fancy graphics effects, but providing your hardware can run the game pretty well, then that game is going to be 95% as much fun as when it's running on The World's Fastest Gaming PC.

As for the HD 5970 4GB, though, it's not time for retirement just yet. I'm already planning a rematch, this time pitting the card against the same games (and hopefully even more), when it is slotted into my main system as a direct replacement for the modern GPU I'm running in it currently, the imposing Nvidia RTX 3090 Ti. Check back in to PC Gamer soon for this rumble in the silicon jungle.