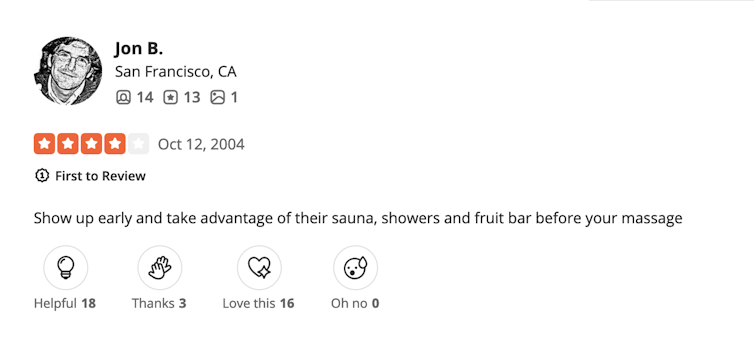

For the past 20 years, Yelp has been providing a platform for people to share their experiences at businesses ranging from bars to barbershops. According to the company, in that time the platform has published 287 million user reviews of over 600,000 businesses.

There’s a reason review sites like Yelp are so popular. No one wants to spend their hard-earned money on a dud product, or fork over cash for a bad meal. So we’ll seek advice from strangers and use various clues to judge if a particular review is authentic and reliable.

But sometimes these cues can lead shoppers down the wrong path. Other times, the reviews are simply fake.

I’m a linguist who studies “word of mouth,” or what people tell each other about their experiences. Advances in text analysis have allowed researchers like me to detect patterns and draw conclusions from millions of product reviews.

Here are some key findings from research that I and others in my field have conducted:

Signs of foul play

How can you tell if the review you’re reading is sincere?

Competition might sometimes push businesses to pay people to compose positive reviews for their products or negative reviews for competitors. Bots can also manufacture fake reviews that sound like they’ve been written by humans.

As a result, fake reviews have become a big problem that threatens to delegitimize online reviews altogether.

For example, a recent study estimates that fake reviews cause consumers to waste 12 cents for every dollar they spend online.

The reality is that people do a pretty poor job at discerning a fake review from a real one. It’s essentially a coin flip – studies have shown that shoppers can correctly identify a fake review only half of the time.

Researchers have also tried to identify what characterizes a fake review. They’ve proposed that reviews that are too long or too short are more likely to be fake, as are those that don’t use the past tense or a first-person pronoun.

Yelp has long been well aware of the issue. The company developed an algorithm that identifies and filters out “unhelpful” reviews – and that includes reviews that are too short.

It’s important to think about the difference between how you might write a review after you’ve actually tried a product and how you would write one if you were coming up with something out of thin air.

In a 2023 study, my colleagues and I suggested that the main difference lies in whether specific language is used. For example, a real review will contain words that are more concrete and describe the “what, where, when” of the experience.

By contrast, if someone hasn’t actually stayed at, say, the hotel they are reviewing, or didn’t dine at the restaurant they are writing about, they’ll use abstract generalities loosely related to the experience.

It’s the difference between reading, in a review, “The room was clean and the beach was nice” and “The room was so clean, we felt like it was new. A sandy beach was steps away, which allowed us to easily take a dip after our hike. The shimmering ocean water also made the view from the window special.”

Real reviews can still mislead

Even if all fake reviews were filtered out, do product reviews still help you make better decisions?

As is often the case in marketing research, it depends.

Researchers have delved into this question for years and can point to a variety of review features that can help your decision making.

For example, you might assume that if you’ve read a few reviews for a product and they are similar to each other, there is a consensus about the product. Indeed, studies have shown that similarity across opinions tends to make readers more certain.

My research shows that similar reviews increase consumer certainty about the product. However, I’ve also found that these similar reviews are more likely to be written by consumers who are less certain about their experience with the product. It’s likely that they simply repeated what others said in their reviews, pulling from those reviews to craft their own.

This creates a paradox. Readers of reviews that sound similar will be more certain that they’re making the correct decision, even though the writers of those same reviews were less certain. At the same time, reviews that differ from each other will elicit hesitancy in readers, even though the writers of those reviews were in fact more certain about their experiences.

The text isn’t the only element that influences readers. In ongoing research, I discovered that the sheer number of available reviews on a platform can influence how you perceive each individual review.

So, if you’re reading a review and it is one of, say, 1,572 reviews, you’ll think it is more credible than if that same review were one of, say, 72 reviews.

This might seem illogical, but it can be explained by the human tendency to take quantity as a sign of quality: If a product has many reviews, this could mean that it’s popular and that many people have purchased it. A halo effect occurs, and you then subconsciously believe that everything about the product – including its reviews – is better.

But for the most part, despite these issues and others, consumers still make better purchasing decisions with reviews than without them.

It’s just a matter of knowing what to look out for.

Ann Kronrod does not work for, consult, own shares in or receive funding from any company or organization that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.

This article was originally published on The Conversation.