Image: Digital analytics dashboards showing charts, metrics, and performance indicators | Source: Freepik

Predictive analytics has moved from experimental to expected in modern enterprises. Forecasting demand, estimating risk, and prioritizing actions are now common analytical objectives. Despite this widespread adoption, many organizations struggle to show how analytics consistently improves decisions or operational performance.

The challenge is not technical capability. It is the difficulty of translating analytical work into repeatable, production-ready decision systems. Academic data science teaches modeling, optimization, and statistical validation. Enterprises operate under ambiguity, data constraints, and organizational complexity. Closing this gap requires treating applied data science as decision infrastructure rather than as isolated analysis.

The Gap Between Academic Data Science and Enterprise Reality

Academic data science is designed around controlled problems. Data is prepared in advance. Objectives are stable. Success is defined mathematically. These conditions support learning but rarely reflect enterprise environments.

Operational data originates from systems built to execute transactions, not support analysis. Definitions differ across teams. Historical records reflect past rules and incentives that may no longer apply. These realities complicate modeling and weaken assumptions commonly made in academic work.

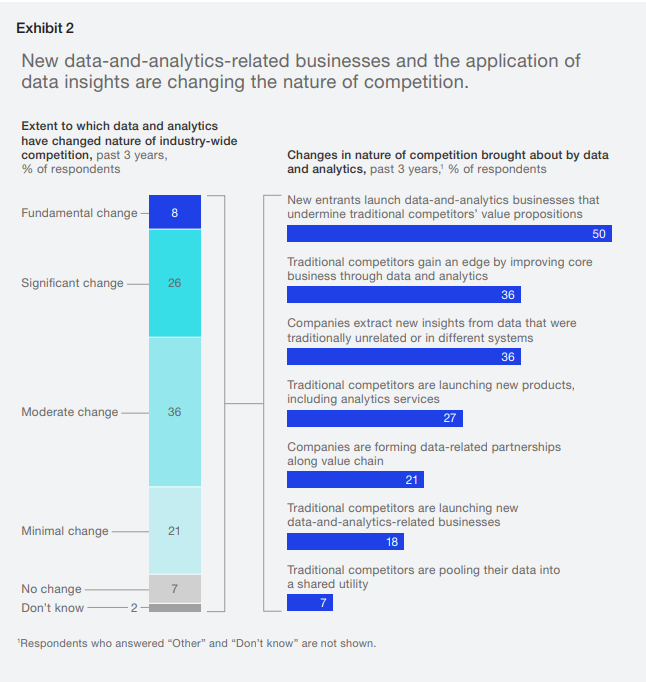

A 2018 McKinsey & Company report noted that while most large organizations had invested in analytics by the late 2010s, only a small fraction embedded analytics into core workflows in ways that reliably improved performance. The report emphasized that integration into decision processes, not model sophistication, differentiated leaders from laggards.

Image: Chart showing that most respondents report moderate to significant competitive change driven by data and analytics | Source: mckinsey.com

Why Many Predictive Models Fail to Deliver Value in Production

Predictive models often fail because they are built without a clear decision context. Predictions are produced, but no guidance exists on how they should be used. Without defined thresholds, ownership, or escalation paths, outputs become informational rather than operational.
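
One way to make a prediction operational is to pair each score band with an explicit action and an accountable owner. The sketch below is illustrative only: the thresholds, action names, and owning teams are hypothetical and would come from the business process the model serves.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DecisionRule:
    """Links a score band to an action and an accountable owner (hypothetical)."""
    min_score: float
    action: str
    owner: str


# Hypothetical policy agreed with the business before the model ships.
RULES = [
    DecisionRule(0.80, "escalate_to_manual_review", "risk-operations"),
    DecisionRule(0.50, "request_additional_checks", "case-team"),
    DecisionRule(0.00, "proceed_automatically", "service-desk"),
]


def decide(score, rules=RULES):
    """Return the first rule whose threshold the score meets, highest first."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be a probability between 0 and 1")
    for rule in sorted(rules, key=lambda r: r.min_score, reverse=True):
        if score >= rule.min_score:
            return rule
    raise ValueError("no rule covers this score; add a catch-all rule")


rule = decide(0.86)
print(rule.action, "owned by", rule.owner)
```

Keeping the policy in a small, reviewable table like this means a threshold change is a business decision recorded in one place, not a side effect of retraining.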

Another common failure is neglecting the model lifecycle. Models are deployed without monitoring or retraining plans. As data and business conditions change, performance degrades quietly. Over time, users lose confidence, even if the model initially performed well.

Common Disconnects Between Data Science Teams and Business Stakeholders

Data science teams are trained to optimize statistical metrics. Business leaders focus on cost, timing, risk, and accountability. When these perspectives are not aligned early, analytics initiatives drift.

Communication gaps worsen the problem. Outputs are delivered without explanation. Assumptions remain implicit. Uncertainty is hidden rather than discussed. This erodes trust and limits adoption.

Foundations of Enterprise Analytics: Data Warehousing

Predictive analytics cannot operate sustainably without a stable data foundation. In enterprise environments, that foundation is almost always anchored by data warehousing, regardless of specific platform or architecture.

Warehousing enables shared definitions, historical consistency, and reproducibility. These properties are essential for analytics that must support decisions over time and across organizational boundaries.

Importance of Clean, Governed, Multi-Source Data Pipelines

Predictive models rely on stable and consistent inputs, but many enterprises lack the governance needed to achieve that consistency across organizational boundaries. When data pipelines are fragmented or owned by individual teams, feature definitions drift, assumptions vary, and model outputs become difficult to interpret or trust.

Gartner research highlights how widespread this challenge is. A Gartner survey found that more than 87 percent of organizations fall into low business intelligence and analytics maturity categories, described as either “basic” or “opportunistic.”

Organizations at these maturity levels typically rely on spreadsheet-based analysis or isolated analytics initiatives within individual business units. Data governance is limited, collaboration between IT and the business is weak, and analytics efforts are rarely tied to clearly defined business outcomes. As a result, analytics workflows break down at scale, even when individual models appear technically sound.

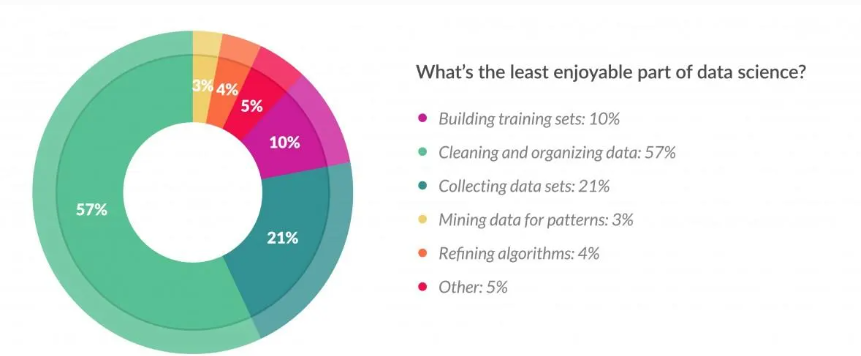

A 2016 CrowdFlower survey, reported by Forbes, found that data scientists spend roughly 80 percent of their time collecting, cleaning, and organizing data, with only a small portion devoted to modeling and analysis. In the same survey, 57 percent of respondents named cleaning and organizing data the least enjoyable part of their work, making data preparation both the most time-consuming and the least enjoyable part of the analytics workflow.

Image: Chart showing that cleaning and organizing data is the least enjoyable part of data science at 57 percent | Source: Forbes

Without governed, enterprise-wide data pipelines, predictive analytics struggles to move beyond experimentation. Clean integration across systems, shared definitions, and clear ownership are not supporting activities. They are prerequisites for analytics that can be deployed, trusted, and reused consistently across the organization.

Designing Warehouses That Support Both Reporting and Modeling

Warehouses optimized only for reporting often struggle to support modeling. Reporting favors aggregation and stability. Modeling requires granular data and long historical windows.

Organizations that scale analytics adopt layered designs. Raw data is preserved. Curated datasets apply business logic. Analytics-ready views support modeling without destabilizing reporting. This structure enables growth in enterprise analytics without constant rework.
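
The layered design described above can be sketched with an in-memory SQLite database. This is a minimal illustration, not a reference architecture: the table, view, and column names are hypothetical, and a real warehouse would use its own platform and modeling conventions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Raw layer: source records preserved as received (hypothetical schema).
CREATE TABLE raw_orders (
    order_id INTEGER, customer_id INTEGER,
    order_date TEXT, amount REAL, status TEXT
);
INSERT INTO raw_orders VALUES
    (1, 10, '2024-01-05', 120.0, 'COMPLETE'),
    (2, 10, '2024-02-11',  80.0, 'complete'),
    (3, 11, '2024-02-15',  50.0, 'CANCELLED'),
    (4, 11, '2024-03-02', 200.0, 'COMPLETE');

-- Curated layer: business logic applied once (status normalized,
-- cancelled orders excluded) and shared by every consumer.
CREATE VIEW curated_orders AS
SELECT order_id, customer_id, order_date, amount
FROM raw_orders
WHERE UPPER(status) = 'COMPLETE';

-- Reporting view: aggregated and stable.
CREATE VIEW rpt_monthly_revenue AS
SELECT substr(order_date, 1, 7) AS month, SUM(amount) AS revenue
FROM curated_orders
GROUP BY month;

-- Analytics-ready view: one row per customer for modeling.
CREATE VIEW ml_customer_features AS
SELECT customer_id,
       COUNT(*) AS order_count,
       AVG(amount) AS avg_order_value,
       MAX(order_date) AS last_order_date
FROM curated_orders
GROUP BY customer_id;
""")

monthly_revenue = conn.execute(
    "SELECT month, revenue FROM rpt_monthly_revenue ORDER BY month"
).fetchall()
customer_features = conn.execute(
    "SELECT customer_id, order_count, avg_order_value, last_order_date "
    "FROM ml_customer_features ORDER BY customer_id"
).fetchall()
```

Because both views read from the same curated layer, a change to the definition of a completed order reaches reports and model features together, and the raw table remains available for reprocessing.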

Data Quality, Latency, and Accessibility Considerations

Latency receives outsized attention. Many enterprise decisions tolerate delay. Few tolerate inconsistent numbers. Predictable refresh cycles, clear lineage, and accessible documentation often matter more than near-real-time data.

Accessibility is equally important. Analysts need reliable self-service access to governed data to iterate models efficiently.

Operationalizing Predictive Analytics

Operationalizing analytics requires more than deploying models. It requires embedding predictions into workflows, defining ownership, and ensuring outputs are actionable.

Analytics creates value only when it influences how decisions are made.

Selecting the Right Problems for Predictive Modeling

High-impact use cases share clear characteristics. Decisions occur frequently. Outcomes can be measured. Better predictions lead to different actions. Modeling a problem that has no defined decision owner often produces technically sound but operationally irrelevant results.

Feature Engineering Under Real-World Data Constraints

Enterprise data is imperfect by default. Feature engineering must account for missing values, shifting definitions, and inconsistent granularity. Features must also be understandable to stakeholders who rely on them.

Complex transformations tied to fragile upstream logic increase operational risk. Simpler features grounded in stable business concepts often deliver more durable value.
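
As a small illustration of a simple, explainable feature, the sketch below fills gaps in a numeric column with the median and keeps an explicit flag recording where values were missing. The column name and values are hypothetical, and median imputation is only one reasonable choice among several.

```python
from statistics import median


def fit_fill_value(training_values):
    """Learn the fill value from training data only, ignoring gaps (None)."""
    observed = [v for v in training_values if v is not None]
    if not observed:
        raise ValueError("column has no observed values to learn a fill from")
    return median(observed)


def impute_with_flag(values, fill_value):
    """Fill gaps and return a parallel 0/1 flag marking imputed positions."""
    filled = [fill_value if v is None else v for v in values]
    was_missing = [1 if v is None else 0 for v in values]
    return filled, was_missing


# Hypothetical feature: customer tenure in months, with gaps from a legacy system.
tenure_months = [12, None, 30, 6, None]
fill = fit_fill_value(tenure_months)
filled, was_missing = impute_with_flag(tenure_months, fill)
```

Keeping the flag alongside the filled value lets the model, and the stakeholders reviewing it, see when a prediction rests on an assumed value instead of an observed one.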

Moving From Experimental Models to Production Deployment

Production models require governance. Version control, monitoring, retraining, and ownership determine whether models remain reliable over time. Without monitoring, performance degrades unnoticed. Without ownership, corrective action stalls.
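
Monitoring can start with something as simple as comparing the distribution of recent model scores against a baseline. The sketch below uses the population stability index (PSI), a common drift measure chosen here for illustration; the article does not prescribe a specific metric. The bin count, the sample data, and the 0.25 alert level (a widely cited rule of thumb) are all assumptions.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a baseline sample and a recent sample of one variable."""
    lo, hi = min(expected), max(expected)
    if hi == lo:
        raise ValueError("baseline sample has no spread to bin")
    width = (hi - lo) / bins

    def bin_shares(values):
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range are clamped into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), eps) for c in counts]

    e, a = bin_shares(expected), bin_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical scores: evenly spread at launch, concentrated high later.
baseline_scores = [i / 100 for i in range(100)]
recent_scores = [0.5 + i / 200 for i in range(100)]

psi_same = population_stability_index(baseline_scores, baseline_scores)
psi_shifted = population_stability_index(baseline_scores, recent_scores)
needs_review = psi_shifted > 0.25  # assumed alert level; tune per use case
```

A check like this only flags that inputs or scores have moved. Deciding whether to retrain, recalibrate, or retire the model still requires a named owner.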

Interpretability must be applied deliberately. Bernstein's Harvard Business Review article “The Transparency Trap” argues that excessive transparency can suppress experimentation by making teams overly cautious and focused on appearing correct rather than learning. In analytics, early models need protected space for iteration, while production models require clear explanation of purpose, assumptions, and limitations so decision-makers can use outputs appropriately.

These conditions explain why operationalizing predictive analytics depends as much on organizational design as on technical execution.

Measuring Business Impact

Analytics initiatives succeed only when they improve decisions. Measuring that improvement requires deliberate alignment between analytical outputs and business objectives.

Technical performance metrics describe model behavior, not business value.

Aligning Analytics Outputs With KPIs and Executive Decisions

Predictions must map directly to actions such as reallocating resources, prioritizing cases, or managing risk. Without this linkage, analytics remains descriptive.

Measuring ROI, Efficiency Gains, and Decision Improvement

Impact measurement does not require precision. Pilot programs, before-and-after comparisons, and decision-level metrics provide sufficient insight. These approaches support realistic evaluation of analytics ROI without requiring perfect attribution.
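
A pilot with a comparison group can be evaluated with very little machinery. The sketch below uses a difference-in-differences calculation, which is one simple option and not a method named in this article: it subtracts the change seen in a comparison group from the change seen in the pilot group. All outcome data here is invented for illustration.

```python
def rate(outcomes):
    """Share of decisions with the undesired outcome (1 = occurred, 0 = not)."""
    if not outcomes:
        raise ValueError("need at least one outcome")
    return sum(outcomes) / len(outcomes)


def difference_in_differences(pilot_before, pilot_after,
                              control_before, control_after):
    """Change in the pilot group minus the change in the comparison group."""
    pilot_change = rate(pilot_after) - rate(pilot_before)
    control_change = rate(control_after) - rate(control_before)
    return pilot_change - control_change


# Hypothetical data: 1 = late delivery, 0 = on time, 100 orders per period.
pilot_before = [1] * 20 + [0] * 80      # 20% late before the pilot
pilot_after = [1] * 14 + [0] * 86       # 14% late with model-guided routing
control_before = [1] * 20 + [0] * 80    # 20% late in the comparison region
control_after = [1] * 18 + [0] * 82     # 18% late without the model

effect = difference_in_differences(
    pilot_before, pilot_after, control_before, control_after
)
print(f"Estimated change attributable to the pilot: {effect:+.2%}")
```

The estimate is rough, since it assumes both groups would otherwise have moved in parallel, but it separates the pilot's effect from changes that happened everywhere.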

Avoiding Vanity Metrics in Analytics Initiatives

Accuracy and error rates are necessary but insufficient. Overemphasis on these metrics obscures whether analytics changes behavior. Mature organizations focus on sustained improvements in decision quality.
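
The gap between a technical metric and decision value can be shown with a toy example. In the sketch below, two decision thresholds produce identical accuracy on the same scored cases but very different costs once false positives and false negatives are priced. The cases and the cost figures are hypothetical.

```python
def evaluate(cases, threshold, cost_false_positive, cost_false_negative):
    """Return (accuracy, total_cost) for acting on scores at a threshold."""
    correct = 0
    total_cost = 0.0
    for score, actual in cases:
        predicted = 1 if score >= threshold else 0
        if predicted == actual:
            correct += 1
        elif predicted == 1:
            total_cost += cost_false_positive   # acted when not needed
        else:
            total_cost += cost_false_negative   # missed a real case
    return correct / len(cases), total_cost


# Hypothetical (model score, actual outcome) pairs.
cases = [(0.9, 1), (0.7, 1), (0.6, 0), (0.4, 1),
         (0.35, 0), (0.2, 0), (0.1, 0)]

# Assumed costs: an unnecessary intervention costs 50, a missed case costs 500.
acc_a, cost_a = evaluate(cases, 0.5, 50, 500)
acc_b, cost_b = evaluate(cases, 0.3, 50, 500)
```

Both thresholds classify five of the seven cases correctly, yet under these assumed costs the total falls from 550 to 100 at the lower threshold. Accuracy alone cannot distinguish between the two policies.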

Lessons Learned and Best Practices

Enterprise analytics success follows consistent patterns that reflect organizational discipline rather than technical novelty.

What Works, What Fails, and Why

Initiatives succeed when business, data, and technology teams collaborate early. Failures often stem from unclear ownership, overengineering, and underestimated change management.

Importance of Stakeholder Trust and Interpretability

Trust develops through consistency and transparency. Stakeholders adopt analytics when outputs align with domain understanding and uncertainty is communicated clearly. These principles align with decision science, which emphasizes improving choices under uncertainty rather than optimizing theoretical accuracy.

Building Repeatable Analytics Frameworks

Repeatability distinguishes durable programs from one-off successes. Standardized pipelines, modeling practices, and evaluation methods transform projects into reusable analytics platforms.

Looking Forward

Enterprise analytics maturity develops over time. Organizations progress from reporting to prediction to recommendation. Each stage builds on the previous one.

Descriptive analytics establishes shared understanding. Predictive insight introduces foresight. Prescriptive guidance embeds analytics into decisions.

As analytics matures, it becomes embedded in daily operations. Decisions are informed by evidence by default. This reflects broader adoption of data-driven decision making.

When organizations integrate machine learning into business processes rather than isolating it within technical teams, analytics becomes part of how work is performed rather than an external input.

Sustained value does not come from individual models. It comes from building decision infrastructure that persists as tools, data, and priorities change. When predictive analytics and data warehousing are designed with this purpose, they support lasting improvements in how enterprises operate and compete.

About the Author

Svarmit Singh Pasricha is a product leader with deep experience driving data-driven and customer-centric initiatives across travel technology platforms and enterprise analytics, including leading strategic efforts spanning commercial capabilities at Expedia Group. His work focuses on translating complex traveler and partner challenges into scalable platforms that improve booking experiences, operational efficiency, and long-term marketplace growth.

References:

- McKinsey & Company. (2018, January). Analytics comes of age. https://www.mckinsey.com/capabilities/quantumblack/our-insights/analytics-comes-of-age

- Gartner, Inc. (2018, December). Gartner data shows 87 percent of organizations have low BI and analytics maturity. https://www.gartner.com/en/newsroom/press-releases/2018-12-06-gartner-data-shows-87-percent-of-organizations-have-low-bi-and-analytics-maturity

- Press, G. (2016, March 23). Data preparation most time-consuming, least enjoyable data science task, survey says. Forbes. https://www.forbes.com/sites/gilpress/2016/03/23/data-preparation-most-time-consuming-least-enjoyable-data-science-task-survey-says/

- Bernstein, E. (2014, October). The transparency trap. Harvard Business Review. https://hbr.org/2014/10/the-transparency-trap