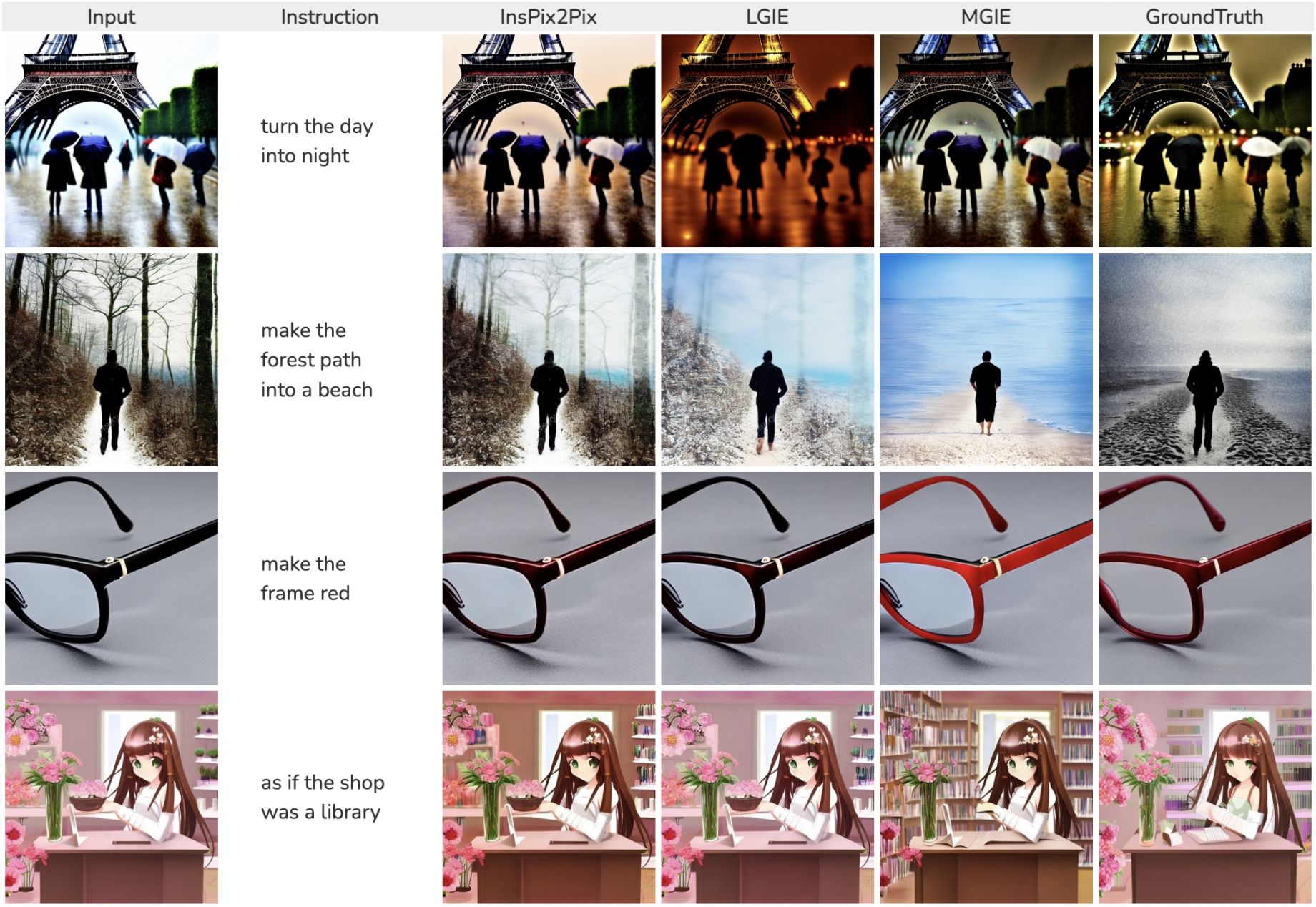

Generative AI models have hogged the headlines in the design world for a while, but one company that has appeared to stay out of the race is Apple. That changed this week, when Apple researchers released a new AI model capable of editing images based on text prompts.

Called MGIE (short for the ever-so-catchy 'multimodal large language models-guided image editing'), the tool offers "instruction-based image editing for various editing aspects." If you're wondering what all the fuss is about when it comes to generative AI, take a look at how much AI image generation has improved in one year alone.

"MGIE learns to derive expressive instructions and provides explicit guidance," the accompanying paper reads. "The editing model jointly captures this visual imagination and performs manipulation through end-to-end training. We evaluate various aspects of Photoshop-style modification, global photo optimization, and local editing. Extensive experimental results demonstrate that expressive instructions are crucial to instruction-based image editing, and our MGIE can lead to a notable improvement in automatic metrics and human evaluation while maintaining competitive inference efficiency."

Apple has released the tool with curiously little fanfare: it is currently available only via GitHub and appears unfinished. While the likes of Adobe Firefly and Midjourney are famously able to create images from scratch based on prompts, Apple's offering is so far limited to edits. But we've wondered aloud for a while whether 2024 is the year Apple will finally join the AI race, and with the arrival of MGIE, that's looking ever more likely.