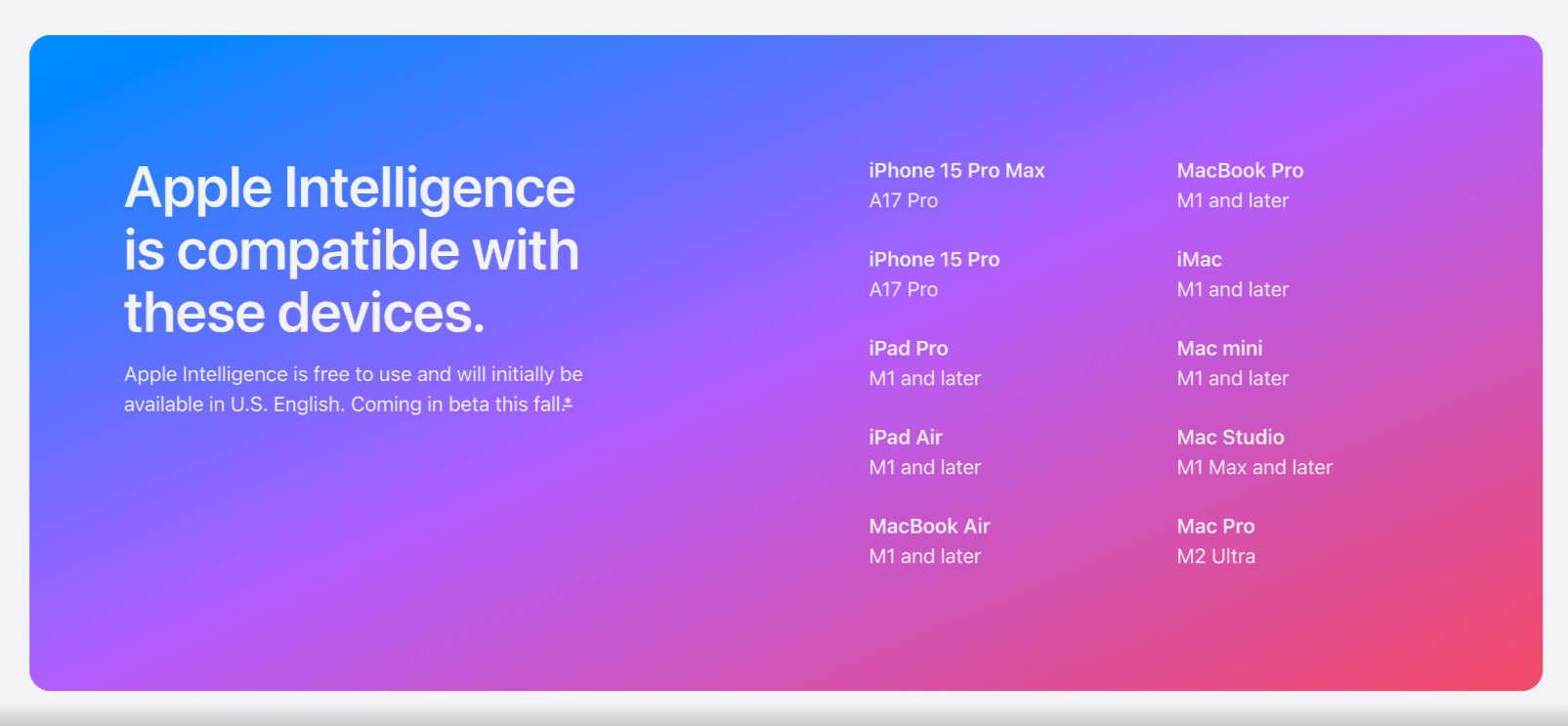

Apple unveiled Apple Intelligence, its take on generative AI, at WWDC 2024. It took a while, but it is now finally starting to roll out to users. Still officially in 'beta', Apple Intelligence is now available in the public release versions of iOS 18.1, iPadOS 18.1 and macOS Sequoia 15.1.

Described as a form of “personal intelligence,” it “makes your most personal products more useful and delightful,” according to Tim Cook.

Apple Intelligence is made up of multiple language and image models that run 'on device', meaning only minimal data is sent to the cloud. These models power everything from notification summaries to text rewriting.

Where they stand out is that they have access to your personal information, calendar, Mail and other data stored on your various Apple devices. This is known as personal context and allows the AI to make more accurate recommendations and changes.

What's new in Apple Intelligence (October 28, 2024)

- iOS 18.1 launches with Apple Intelligence — here's how to install it

- iMac M4 announced with Apple Intelligence, nano-texture display and Thunderbolt 4: specs, release date and price

- How to activate Apple Intelligence on your iPhone, iPad and Mac now

Similar to Microsoft Copilot in Windows, Apple's interpretation of AI is able to perform tasks within the operating system, as well as generate text or images inside any app on your phone, tablet or laptop.

Apple says it secures data on the device and only sends information to cloud servers built on Apple Silicon chips. From iOS 18.2 it will also have ChatGPT integration, but only the specific prompt is sent to ChatGPT, not your personal data.

All of the Apple Intelligence features are system-wide, and work across every Apple device running either an M-series chip or, on iPhone, an A17 Pro or above. So while older mobile devices have to miss out, there are plenty of devices capable of joining in.

Rewriting and generating text

One of the more notable features of Apple Intelligence is the ability to have it create or rewrite your text to make it more engaging or legible. Known as Writing Tools, this is available through the context menu and can be used to adjust the tone of an email or speech to suit a different audience, add humor to a party invite or clean up class notes.

These system-wide features are similar to those from Microsoft in Copilot for 365 and Google in Gemini for Docs. They will be accessible in all Apple and third-party apps that use the default text editing system. For example, you can use them in Mail, Messages or Notes as well as Keynote, PowerPoint or Word.

Another feature is Proofread, which can check grammar, word choice and sentence structure while also suggesting edits and explaining those edits.

Finally, Summarize lets you select text and have that text recapped in the form of an easily digestible paragraph, bullet list or even a table.

Creating images

As well as creating text from a prompt, Apple is adding generative images through diffusion models similar to DALL-E or Midjourney. It also has image editing in Photos to 'smart erase' background elements.

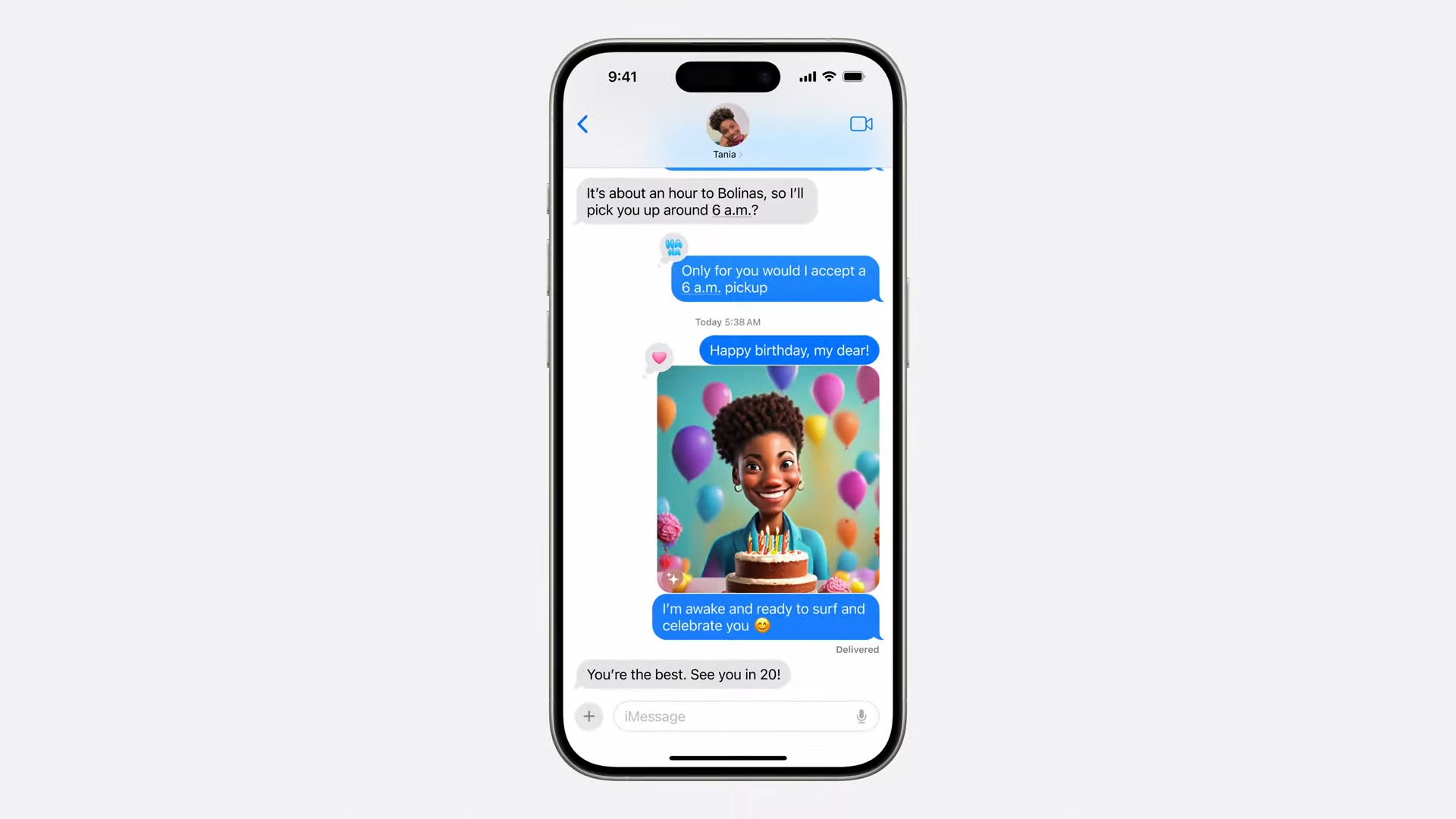

This includes creating emojis and GIFs within Notes or Messages. "If you wish a friend a happy birthday you could create an image using generative AI," the company said.

A new app called Image Playground will let users create "fun images in seconds" across three styles — animation, illustration and sketch. It is built into a range of apps such as Messages but will also be its own dedicated app.

All the images are created using a locally running diffusion model, so nothing is sent off the device. You can choose from a range of concepts and themes. Like any AI image tool, you type a description to define the image, choose someone from your photo library to include in the image and pick your favorite style.

In Messages you can create personalized concepts related to the conversation you're having, such as showing a sketch of a friend on a hike if you're talking about an upcoming hiking trip.

Image editing is available from iOS 18.1, but you'll have to wait for iOS 18.2 before you can get access to Playground and image creation tools including the Emoji maker.

Photo editing

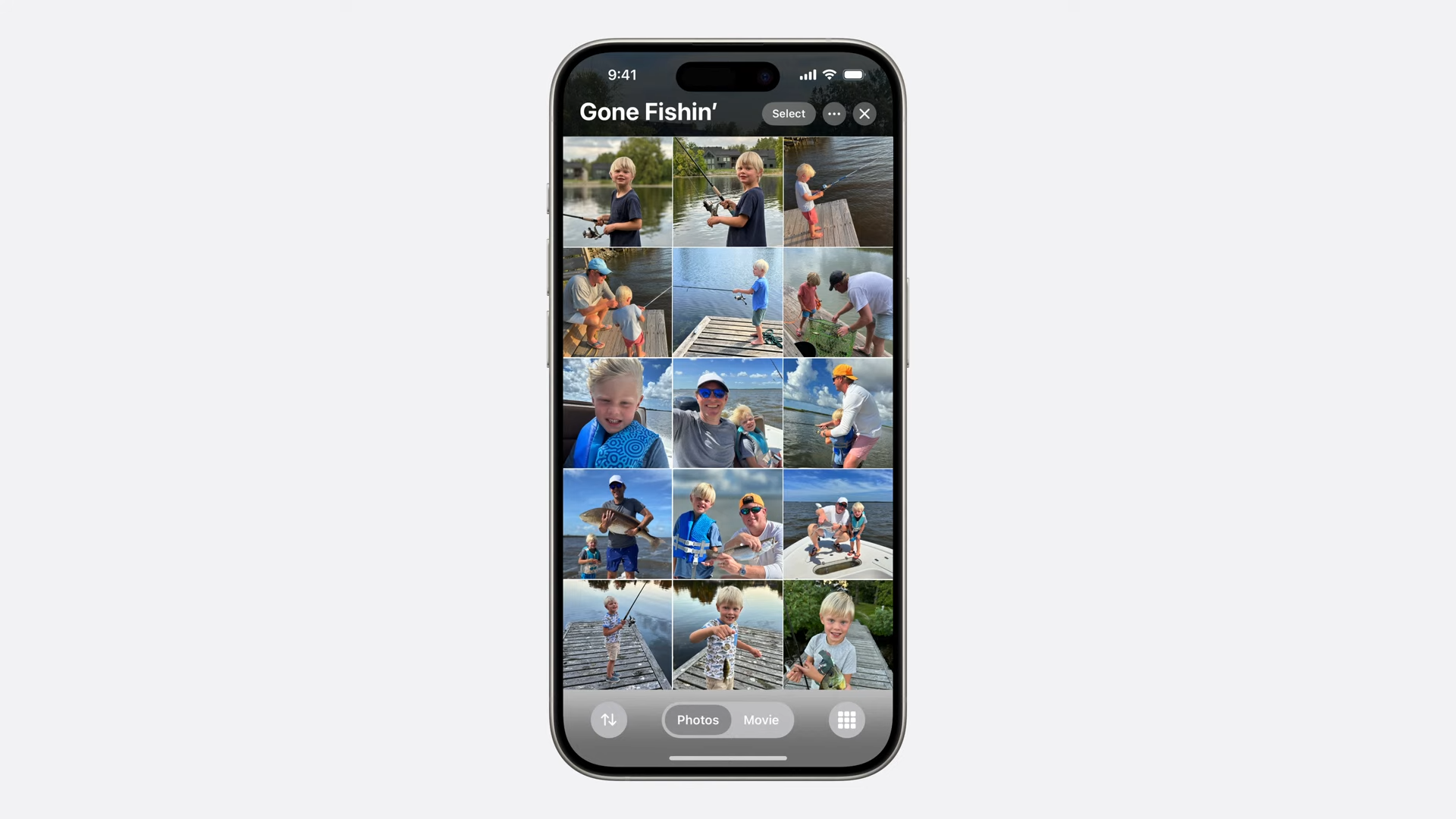

The Photos app itself is getting a major upgrade, with a new layout and the ability to create custom categories and galleries. It is also getting better image editing capabilities thanks to AI... sorry Apple Intelligence.

A new Clean Up tool lets users identify and remove any distracting objects from the background of a photo without having to do any more than tap a button.

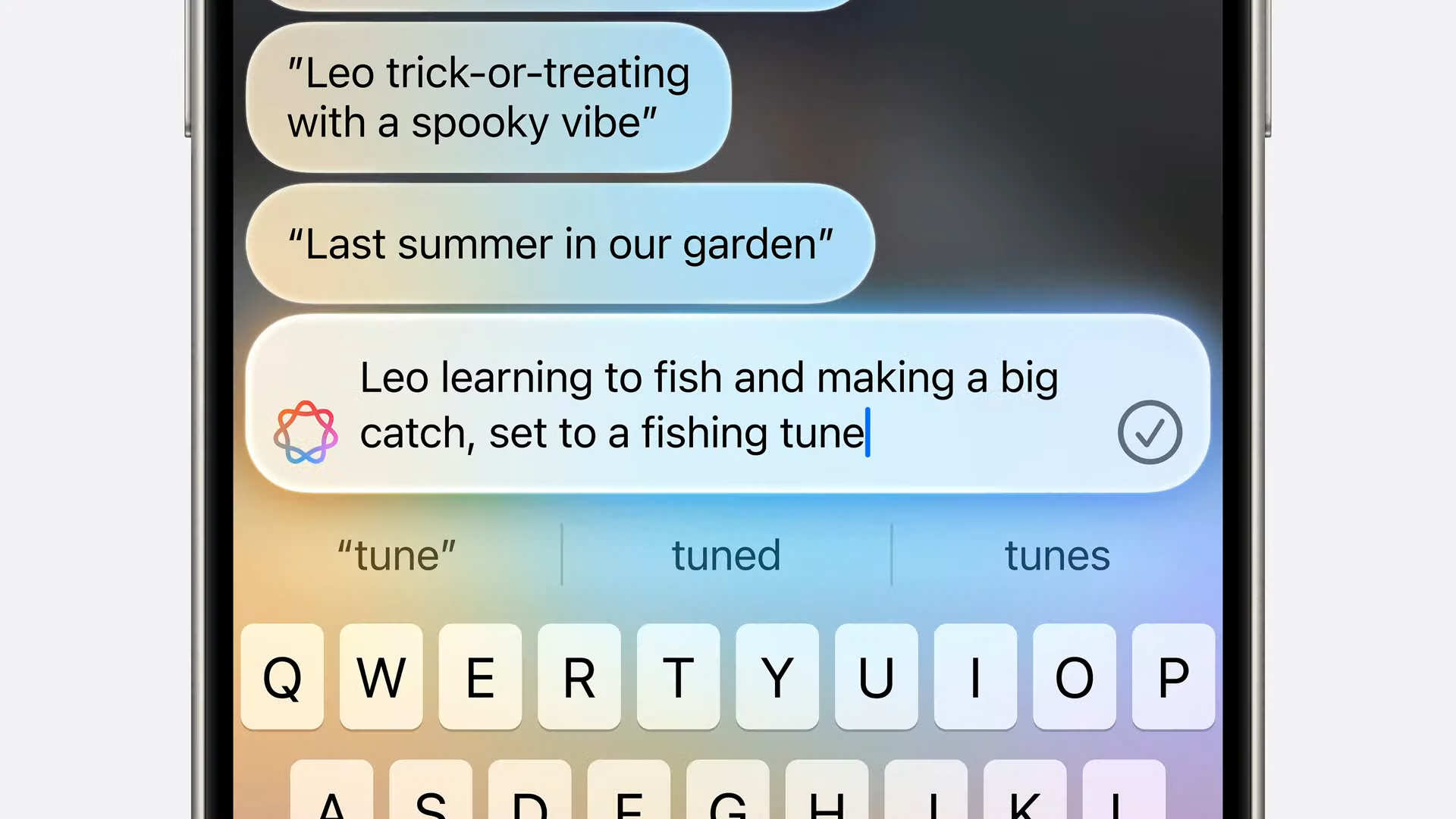

Photos is also getting a better Memories feature that lets you create a story by typing a natural language description. Apple Intelligence will then find the relevant images and make the memory, even pulling in a song from Apple Music.

It will also get much easier to find the image you are looking for thanks to natural language search in Photos, powered by Apple Intelligence.

A new Siri

Siri is also getting a makeover and the ability to understand and respond to more natural language. For example, if you stutter or change your mind mid-sentence, the new Siri will still be able to understand what you are saying.

It will also retain a degree of contextual awareness. For example, if you ask Siri the population of London, then after it responds say 'how about New York', it will know you meant the population of New York and respond accordingly.

Siri will also be able to make calls out to ChatGPT, or other AI models in the future, where it needs more compute power and a more capable model, such as analyzing an image or generating complex responses for stories or recipes.

This is optional and made available as a plugin. Siri always asks if you want to use ChatGPT and only sends the specific prompt rather than personal information.

The new Siri also includes information on products and settings within the Apple ecosystem. This means it could make changes based on a rough description, such as writing a message now and sending it later, without you having to dig through multiple menus to find that option.

Agent behavior

What is most remarkable is the agent-like behavior. Apple Intelligence can create actions across your system and apps, carrying out tasks on your behalf. For example, you could ask it to pull up files sent by a friend or play a podcast shared by a partner.

Apple says this is grounded in personal information and context, able to retrieve and analyze personal data such as emails, calendar invites or documents.

Apple Intelligence can process personal details and understand documents you've been sent, meeting times in your calendar and other events. It can then offer information on how to adapt your schedule based on the situation.

"With Apple Intelligence, Siri will be able to take hundreds of new actions in and across Apple and third-party apps," the company explained. "For example, a user could say, 'Bring up that article about cicadas from my Reading List,' or 'Send the photos from the barbecue on Saturday to Malia,' and Siri will take care of it."

Apple Intelligence will also be able to tailor its actions to the user. For example, it can be context aware, knowing what messages you've had from friends and whether they recommended content. You could ask Siri to play the podcast Jamie recommended and it will locate that podcast and play it without you having to open the Mail or Messages apps.

Keeping it secure

Apple says Apple Intelligence goes "hand in hand with powerful privacy". The company says its new AI implementation was "built with privacy at the core".

This is because, to be a truly helpful AI assistant, it has to have access to personal and private information. This is data most users wouldn't want sent off device.

"A cornerstone of Apple Intelligence is on-device processing, and many of the models that power it run entirely on device," the company says.

But some tasks are too complex to be carried out on device, requiring larger AI tools like ChatGPT rather than a small local model. So Apple launched Private Cloud Compute, which it claims "extends the privacy and security of Apple devices into the cloud to unlock even more intelligence."

Apple says this is the result of new Apple Silicon server chips that secure data in a similar way to the chips on iPhones and Macs.

The company says it only sends data relevant to the task to Apple Silicon servers, not every piece of data. It cryptographically prevents data from going to a server unless that server is protected and open to privacy inspection.

ChatGPT

Apple is integrating OpenAI's flagship GPT-4o into its ecosystem, although not to the extent many hoped. It won't replace Siri as the main assistant, but will instead offer a way to provide additional resources, reasoning and information.

Users will be able to send an image, document or text prompt off to ChatGPT without having to leave the tool they are currently using. You can just open Apple Intelligence and "send to ChatGPT".

This same functionality is coming to Siri as well. Siri will be able to tap into the power of GPT-4o to answer questions or provide resources that its own local models can't. For example, you could upload a photo of your fridge and ask for a recipe, and it would send the image to ChatGPT to identify the contents and create the recipe.

You wouldn't know it had left Siri, as you won't need to switch apps. The full functionality is integrated into the Siri UI.

"Privacy protections are built in for users who access ChatGPT — their IP addresses are obscured, and OpenAI won’t store requests. ChatGPT’s data-use policies apply for users who choose to connect their account," Apple said.

Availability

Apple Intelligence is available for free in iOS 18.1, iPadOS 18.1 and macOS Sequoia 15.1. Not all features are available initially. For now it is just Writing Tools and some Photos updates, but the rest of the features will come with iOS 18.2 and subsequent updates later in the year.