One of the biggest announcements at WWDC this year was the launch of Apple Intelligence, Apple's first foray into AI. With improvements to Siri, and some cool extra features in the Notes and Calculator apps, Apple Intelligence seems like it's going to make your iPhone, MacBook, or iPad a whole lot easier to use.

Where the controversy begins, however, is with the generative AI portion of Apple Intelligence. According to a report from Engadget, a great many artists and creators are concerned about what content Apple has used to teach its generative models, and are now demanding transparency to see whether that was done ethically.

Teaching AI?

So that generative AI can 'create' the kinds of images, documents, and other material that it spits out, it needs to be taught using hundreds of thousands of existing pieces of content before it can make anything that resembles a reasonable image. Remember when AI art couldn't create hands, or gave its subjects millions of teeth? That's because, at the time, it hadn't been pumped full of enough art yet, amongst other reasons.

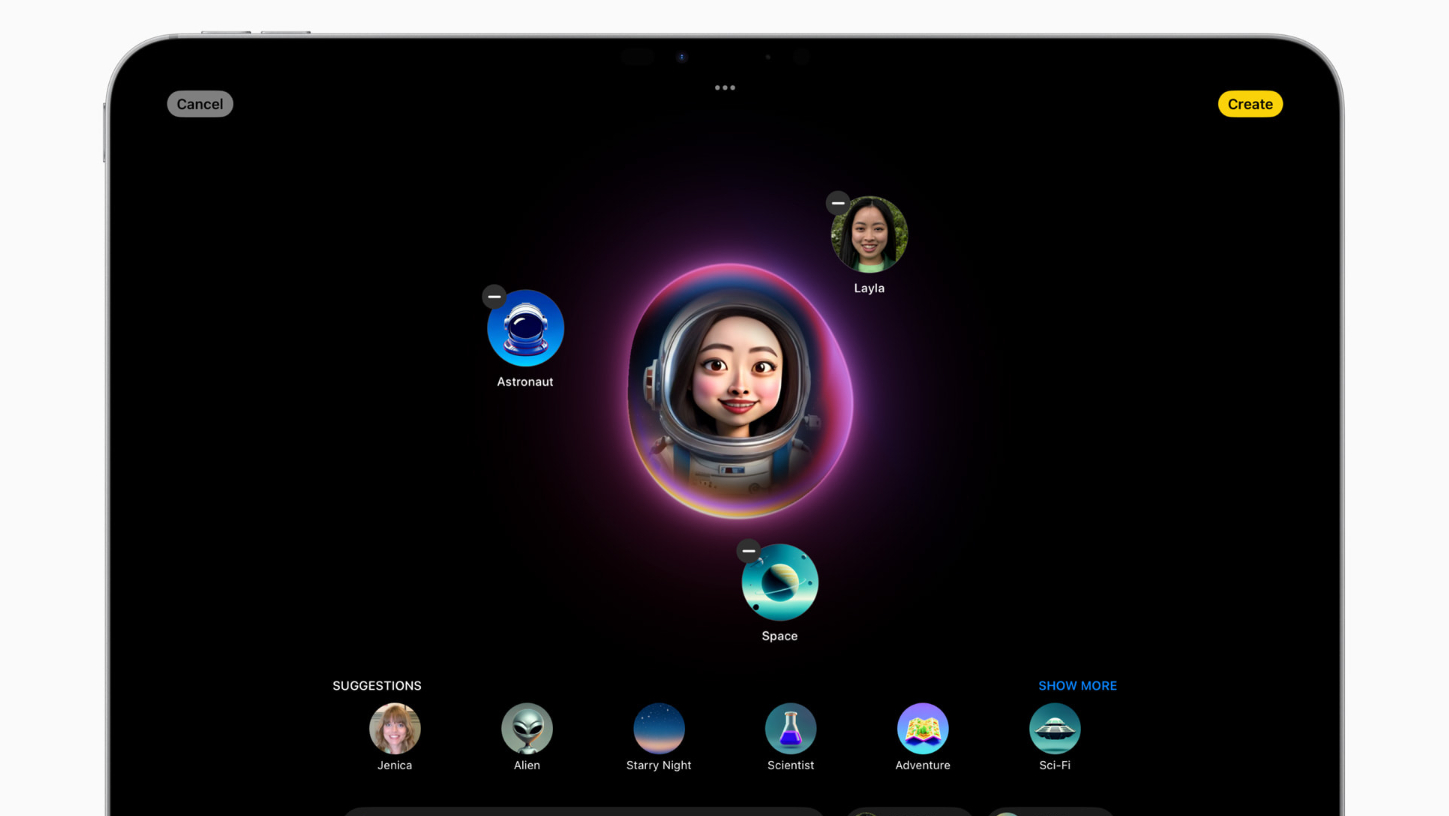

Apple Intelligence includes a generative AI model that will let users create images and emojis straight from their iPhone or iPad. Artists are concerned about the art that Apple has used to teach its models, and say the company needs to be more transparent about where it got that art from.

See, you really need permission from artists before popping their art into your generative AI model, because the model will then draw on that art to make derivatives of it. Ethically sourced AI models use art that the creators of the model have permission to use, and artists are concerned that this isn't what Apple has done.

To be clear, no one is sure. Apple could well have ethically sourced the art that it used in the creation of Apple Intelligence, but until it makes things clear, artists are understandably nervous that their art is being used without their permission. Given this isn't the first time that Apple has been at loggerheads with artists and creatives this year, it might have to be careful how it handles generative AI in the future.