When Apple launched its M1-equipped MacBook Air in 2020, we called its in-house chip a "game-changer" – and the tech giant might be planning an equivalent move for iPhone cameras, according to the latest rumors.

According to Bloomberg's Mark Gurman, Apple "is eyeing an in-house strategy for camera sensors". In other words, it would make its own camera sensors in much the same way as it designs Apple Silicon. And while there's no rumored timescale for this, it would be a big moment for mobile photography and videography.

The benefits of Apple making an in-house camera sensor – beyond the business benefits of moving into an expanding sector – are potentially many. But the main one for the iPhone would be a much better-optimized image processing pipeline, which could unlock image quality and performance improvements when combined with Apple's Photonic Engine. It could also put Apple at the forefront of spatial photos and video.

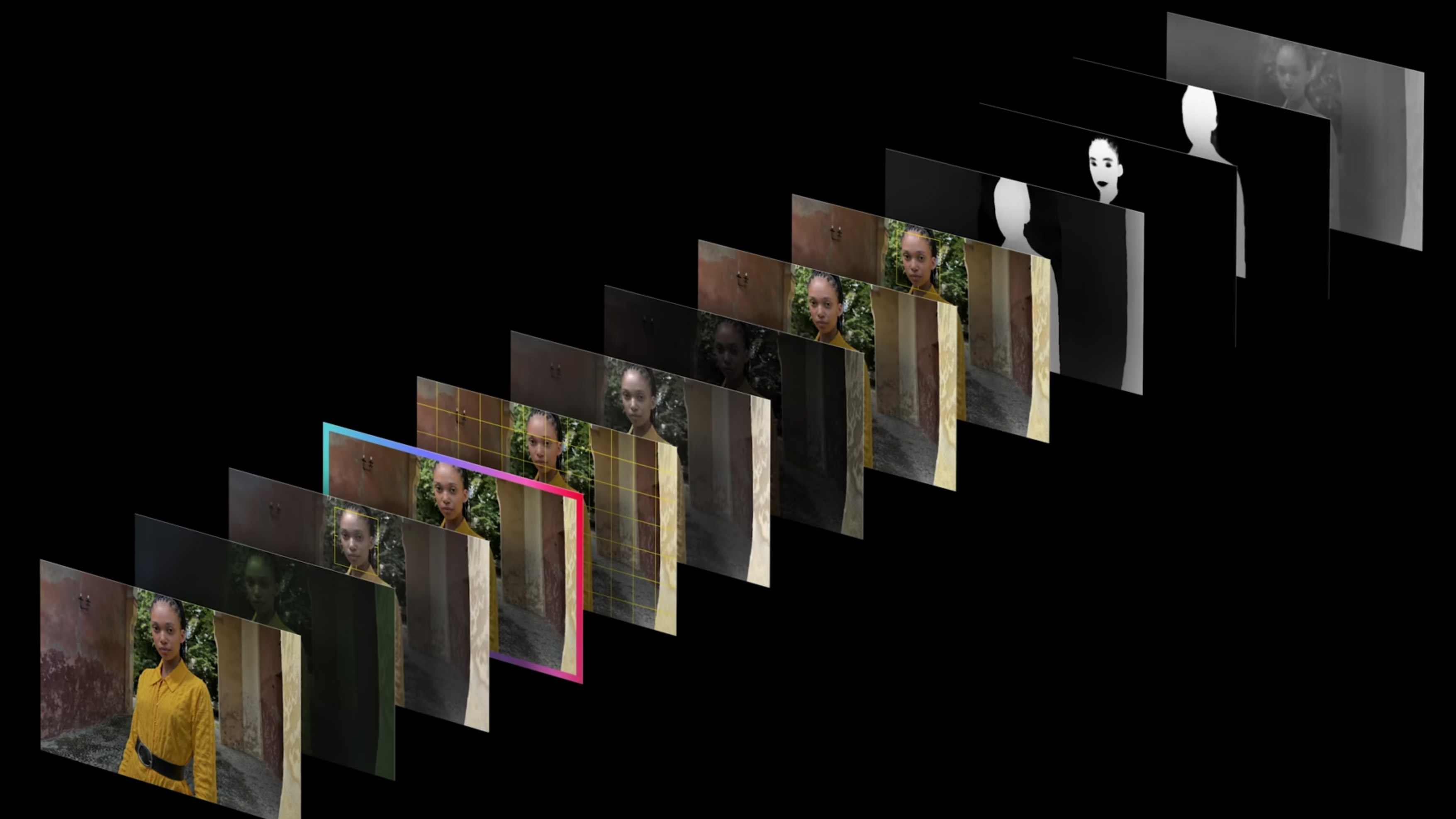

Apple introduced its Photonic Engine – a computational photography development of Deep Fusion – in the iPhone 14 series. Its main advancement was that it applied Apple's multi-frame processing earlier in the image development process than Deep Fusion did, this time working on uncompressed (or raw) images. This gave Apple more control over detail, color, and exposure – and adding an Apple-made sensor to this mix could unlock further computational processing advancements.

Apple doesn't disclose the image sensors used in iPhones, but the iPhone 15 Pro's 48MP sensor is strongly rumored to be the Sony IMX803 (also used in the iPhone 14 Pro). Sony dominates the CMOS camera sensor market (according to The Elec, it had a 51.6% market share by the end of 2022), so most iPhone cameras (and their LiDAR scanners) are based on Sony sensors. An in-house Apple camera sensor would therefore send a sizable shockwave through that market.

This rumored move wouldn't just be about iPhone cameras, either. As Bloomberg's Mark Gurman reported, image sensors are also "core to future developments in the mixed-reality and autonomous-driving industries". That means the Apple Vision Pro and, potentially, the long-rumored Apple Car would also benefit from the tech giant taking on image sensor giants Sony and Samsung.

Analysis: A distant but likely Apple move

If Apple does decide to pursue this rumored in-house strategy for camera sensors, it won't happen anytime soon. The iPhone 16 Pro is already rumored to be packing a stacked sensor (most likely Sony's IMX903), so the prospect is likely at least a few years off.

But it would also make strategic sense for Apple. Recently, we heard rumors that Apple could be making its own batteries for future iPhones. And this year, the Apple M3 made us rethink the whole concept of integrated graphics on processors and could even turn Apple into a gaming force to be reckoned with.

Today's iPhones aren't exactly being held back by Sony's excellent image sensors, and computational processing is now the most important part of the image pipeline for smartphones.

It also isn't uncommon in the professional camera world for Sony rivals to use its sensors – the flagship Nikon Z9, for example, is built around a stacked Sony sensor (the Sony IMX609AQJ), despite the fact that Nikon is competing with cameras like the Sony A9 III for the title of best professional camera.

But aside from making business sense, it's clear from Apple Silicon that Apple has the technological might and financial muscle to optimize and rethink the architecture of chips that have been traditionally outsourced.

If it does the same with image sensors, that could give it far greater control over the future of our pocket cameras – moving them into new spaces, like the spatial video that's already possible on the iPhone 15 Pro. It would also help promote the concept of 'spatial computing' that Apple is so keen to push into the mainstream with the Apple Vision Pro.