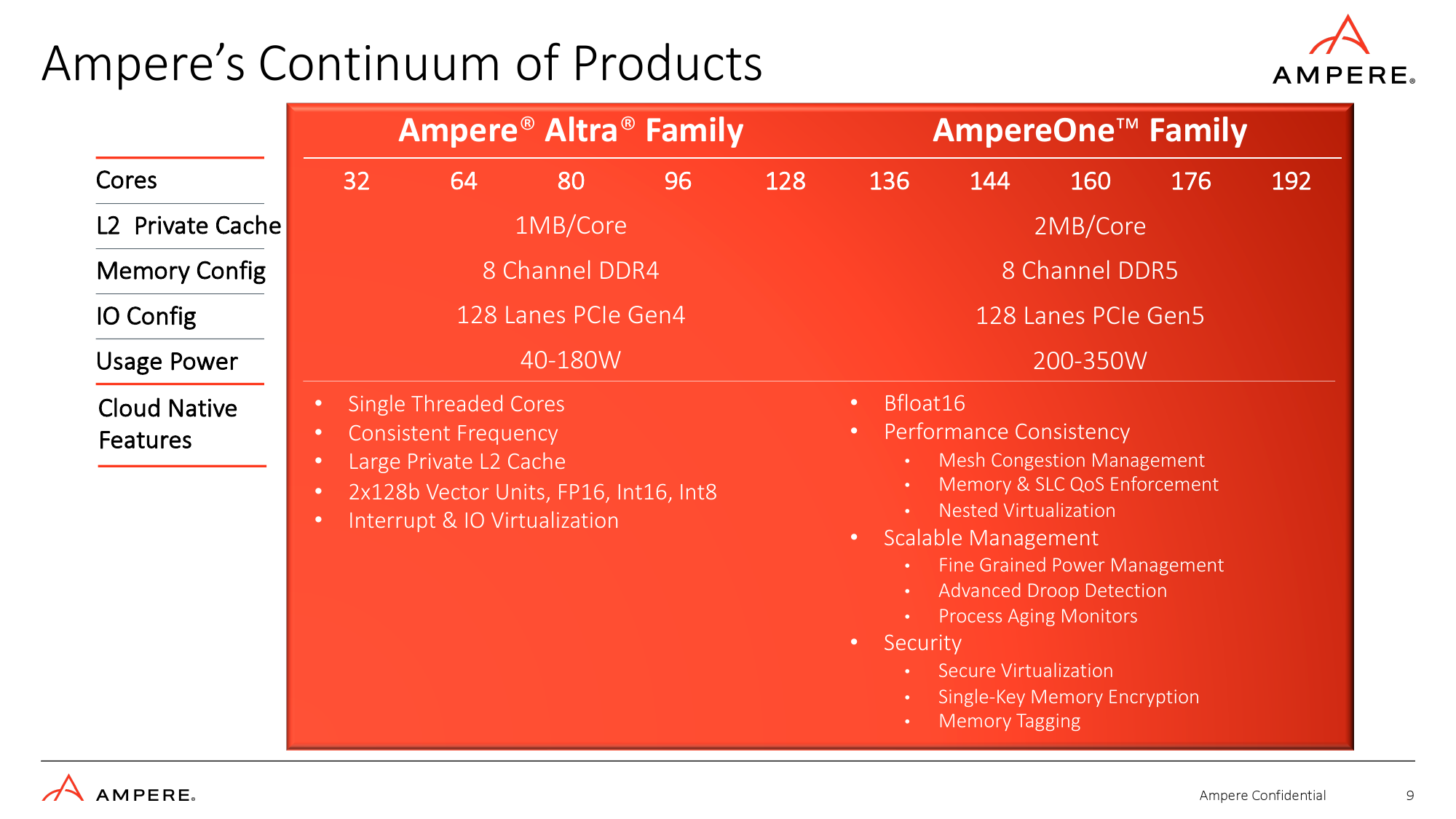

Ampere this week introduced its AmpereOne processors for cloud datacenters, which happen to be the industry's first general-purpose CPUs with up to 192 cores and can also be used for AI inference.

The new chips consume more power than their predecessors, the Ampere Altra family (which will remain in Ampere's lineup for at least a while), but the company claims that despite the higher power consumption, its processors with up to 192 cores provide higher computational density than CPUs from AMD and Intel. Some of those performance claims are open to debate.

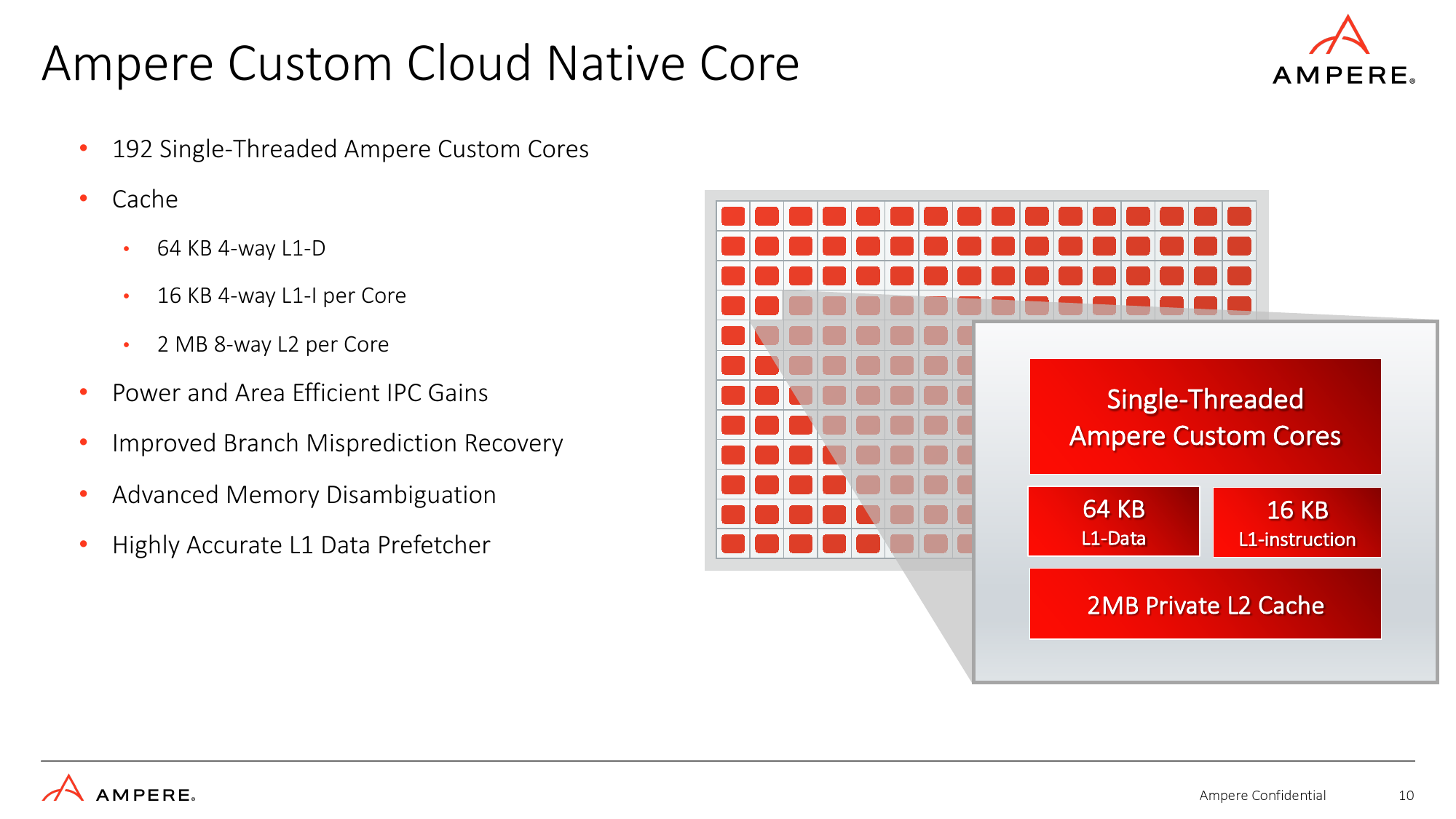

192 Custom Cloud Native Cores

Ampere's AmpereOne processors feature 136 to 192 cores (as opposed to 32 to 128 cores for Ampere Altra) running at up to 3.0 GHz. The cores are based on the company's proprietary implementation of the Armv8.6+ instruction set architecture, feature two 128-bit vector units that support FP16, BF16, INT16, and INT8 formats, and are each equipped with 2MB of 8-way set-associative L2 cache (up from 1MB). They are interconnected using a mesh network with 64 home nodes and a directory-based snoop filter. In addition to the L1 and L2 caches, the SoC has a 64MB system level cache. The new CPUs are rated for 200W to 350W depending on the exact SKU, up from 40W to 180W for the Ampere Altra.
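To put the core and cache figures in perspective, here is a back-of-the-envelope Python sketch. It derives the total L2 plus system level cache for the smallest and largest SKUs, and how many data elements fit side by side in the two 128-bit vector units of one core for each supported format. It assumes every core has its own 2MB L2 and that all cores share a single 64MB SLC. The helper names are illustrative, and the numbers say nothing about real throughput, since Ampere has not disclosed issue rates or latencies.

```python
# Back-of-the-envelope figures derived from the AmpereOne specs quoted above.
# Assumption: each core has a private 2MB L2; all cores share one 64MB SLC.

L2_PER_CORE_MB = 2
SLC_MB = 64
VECTOR_WIDTH_BITS = 128
VECTOR_UNITS_PER_CORE = 2


def total_on_chip_cache_mb(cores: int) -> int:
    """L2 across all cores plus the shared system level cache (L1 ignored)."""
    return cores * L2_PER_CORE_MB + SLC_MB


def elements_per_core(bits_per_element: int) -> int:
    """Elements held across both 128-bit vector units (one register each)."""
    return VECTOR_UNITS_PER_CORE * (VECTOR_WIDTH_BITS // bits_per_element)


for cores in (136, 192):
    print(f"{cores} cores: {total_on_chip_cache_mb(cores)} MB of L2 + SLC")

for fmt, bits in {"FP16": 16, "BF16": 16, "INT16": 16, "INT8": 8}.items():
    print(f"{fmt}: {elements_per_core(bits)} elements per core across both units")
```

For the 192-core part this works out to 448MB of L2 plus SLC, and 32 INT8 elements versus 16 FP16 elements per core, which is why narrower formats matter so much for CPU-based inference.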

The company claims that its new cores are further optimized for cloud and AI workloads and deliver 'power and area efficient' instructions per clock (IPC) gains, which probably means higher IPC (compared to Arm's Neoverse N1 used in Altra) without a tangible increase in power consumption or die area. Speaking of die area, Ampere does not disclose it, but says that AmpereOne is made on one of TSMC's 5nm-class process technologies.

Although Ampere does not reveal all the details about its AmpereOne core, it says that the core features a highly accurate L1 data prefetcher (which reduces latency, ensures that the CPU spends less time waiting for data, and reduces system power consumption by minimizing memory accesses), refined branch misprediction recovery (the sooner the CPU detects a branch misprediction and recovers, the lower the latency and the less power it wastes), and sophisticated memory disambiguation (which increases IPC, minimizes pipeline stalls, maximizes out-of-order execution, lowers latency, and improves handling of multiple read/write requests in virtualized environments).

While the list of AmpereOne core architecture improvements does not look long on paper, these changes can improve performance significantly, and they required a lot of research (e.g., which factors slow down a cloud datacenter CPU the most?) and a lot of work to implement efficiently.

Advanced Security and I/O

Since the AmpereOne SoC is aimed at cloud datacenters, it is equipped with appropriate I/O: eight DDR5 channels for up to 16 modules supporting up to 8TB of memory per socket, and 128 lanes of PCIe Gen5 with 32 controllers and x4 bifurcation.
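The PCIe numbers are internally consistent: 128 lanes split into x4 links gives exactly 32 links, one per controller (our reading of the spec, not something Ampere states). The Python sketch below checks that and estimates raw per-direction bandwidth using the PCIe 5.0 signaling rate of 32 GT/s per lane and 128b/130b encoding. It ignores packet and protocol overhead, so real-world throughput will be lower; the helper name is illustrative.

```python
# Raw PCIe Gen5 bandwidth per direction, before TLP/DLLP protocol overhead.
GT_PER_S_PER_LANE = 32            # PCIe 5.0 signaling rate per lane
ENCODING_EFFICIENCY = 128 / 130   # 128b/130b line coding


def pcie5_gb_per_s(lanes: int) -> float:
    """Per-direction bandwidth in GB/s (1 transfer = 1 bit per lane)."""
    return lanes * GT_PER_S_PER_LANE * ENCODING_EFFICIENCY / 8


TOTAL_LANES = 128
LANES_PER_LINK = 4  # the x4 bifurcation mentioned in the spec

links = TOTAL_LANES // LANES_PER_LINK
print(f"x4 links: {links}")  # 32, matching the 32 controllers
print(f"per x4 link: {pcie5_gb_per_s(LANES_PER_LINK):.2f} GB/s")
print(f"all {TOTAL_LANES} lanes: {pcie5_gb_per_s(TOTAL_LANES):.1f} GB/s")
```

That is roughly 15.75 GB/s per x4 device and about 504 GB/s across all 128 lanes in each direction.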

Datacenters also require certain reliability, availability, and serviceability (RAS) features as well as security features. To that end, the SoC fully supports ECC memory, single-key memory encryption, memory tagging, secure virtualization, and nested virtualization, to name a few. In addition, AmpereOne has numerous security capabilities such as crypto and entropy accelerators, speculative side-channel attack mitigation, ROP/JOP attack mitigation, and so on.

Curious Benchmark Results

Without a doubt, Ampere's AmpereOne SoC is an impressive piece of silicon designed to handle cloud workloads, featuring 192 general-purpose cores, an industry first. Yet, to prove its points, Ampere uses rather curious benchmark results.

Ampere sees the compute density of AmpereOne as its main advantage. The company claims that one 42U 16.5kW rack filled with 192-core AmpereOne SoC-based 1S machines can support up to 7926 virtual machines, whereas a rack based on AMD's 96-core EPYC 9654 'Genoa' can handle 2496 VMs and a rack powered by Intel's 56-core Xeon Scalable 8480+ 'Sapphire Rapids' CPUs can handle 1680 VMs. This comparison makes a lot of sense within a fixed 16.5kW power budget.
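Since the whole comparison assumes a fixed 16.5kW budget, it helps to translate Ampere's claimed VM counts into ratios and into rack watts per VM. The Python sketch below does only that arithmetic on the vendor's figures. "Watts per VM" here is simply the rack budget divided by the VM count, not a measured per-VM draw.

```python
# Ampere's claimed VMs per 42U, 16.5kW rack (vendor figures, not measurements).
RACK_POWER_W = 16_500
vms_per_rack = {
    "AmpereOne (192-core, 1S)": 7926,
    "EPYC 9654 (96-core)": 2496,
    "Xeon 8480+ (56-core)": 1680,
}

baseline = vms_per_rack["AmpereOne (192-core, 1S)"]
for system, vms in vms_per_rack.items():
    watts_per_vm = RACK_POWER_W / vms   # rack budget split evenly across VMs
    advantage = baseline / vms          # how many times more VMs AmpereOne claims
    print(f"{system}: {watts_per_vm:.2f} W/VM, AmpereOne advantage {advantage:.2f}x")
```

By Ampere's own numbers, that is about 3.18x the VM count of the Genoa rack and 4.72x that of the Sapphire Rapids rack, or roughly 2.08W of rack budget per VM versus 6.61W and 9.82W. The advantage holds only as long as rack power, rather than rack space, is the binding constraint.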

But 42U rack power density is rising, and hyperscalers like AWS, Google, and Microsoft are ready for this, particularly for their performance-demanding workloads. According to a 2020 survey by the Uptime Institute, 16% of companies had deployed typical 42U racks with power densities from 20kW to over 50kW. By now, the number of typical deployments with 20kW racks has increased, not decreased, as AMD's latest and previous-generation CPUs have higher TDPs than their predecessors.

When it comes to performance, Ampere demonstrates the advantages of its 160-core AmpereOne-based system with 512GB of memory running generative AI (Stable Diffusion) and AI recommender (DLRM) workloads against systems based on AMD's 96-core EPYC 9654 CPU with 256GB of memory (which suggests that the AMD system ran in eight-channel mode rather than the 12-channel mode supported by Genoa). The Ampere-based machines produced 2.3x more frames/s for generative AI and over 2x more queries/s for AI recommendations.
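To gauge what running Genoa with eight of its 12 memory channels populated could cost, here is a rough Python estimate of theoretical peak DRAM bandwidth. The article does not state memory speeds, so the sketch assumes DDR5-4800 (Genoa's officially supported speed) and one DIMM per channel, with 64 data bits (8 bytes) per channel transfer. These are peak figures only; sustained bandwidth is always lower.

```python
# Theoretical peak DRAM bandwidth = channels * MT/s * bytes per transfer.
# Assumption: DDR5-4800 and one DIMM per channel; memory speed is not stated.
BYTES_PER_TRANSFER = 8  # 64-bit data path per channel (ECC bits excluded)


def peak_gb_per_s(channels: int, mt_per_s: int = 4800) -> float:
    """Peak bandwidth in GB/s for the given number of populated channels."""
    return channels * mt_per_s * BYTES_PER_TRANSFER / 1000


populated = peak_gb_per_s(8)  # eight channels, as the 256GB configuration suggests
full = peak_gb_per_s(12)      # all 12 Genoa channels populated
print(f"8 channels:  {populated:.1f} GB/s")
print(f"12 channels: {full:.1f} GB/s")
print(f"share of full-platform peak: {populated / full:.0%}")
```

Under these assumptions the AMD system would have had about 307 GB/s instead of about 461 GB/s, or two thirds of the platform's peak, which matters for memory-bound workloads such as DLRM.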

In this case, Ampere compared the performance of its systems crunching data at FP16 precision, whereas the AMD-based machines computed at FP32 precision, which is not an apples-to-apples comparison. Furthermore, many FP16 workloads now run on GPUs rather than CPUs, and massively parallel GPUs tend to deliver spectacular results in generative AI and AI recommendation workloads.
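The gap between FP16 and FP32 is easy to quantify with Python's standard `struct` module, whose `'e'` format code is IEEE 754 half precision and `'f'` is single precision. The sketch shows that FP16 needs half the bytes per value, so twice as many values fit in one 128-bit vector register and half the memory traffic is needed. It also shows that FP16 cannot represent every integer above 2048. The helpers are illustrative and do not model either vendor's actual throughput.

```python
import struct

VECTOR_BITS = 128  # width of one AmpereOne vector unit, per the spec above


def bytes_per_value(fmt: str) -> int:
    """'e' = IEEE 754 half precision (FP16), 'f' = single precision (FP32)."""
    return struct.calcsize(fmt)


def round_trip(value: float, fmt: str) -> float:
    """Store a value in the given format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


for name, fmt in (("FP16", "e"), ("FP32", "f")):
    size = bytes_per_value(fmt)
    lanes = VECTOR_BITS // (size * 8)  # values per 128-bit vector register
    print(f"{name}: {size} bytes/value, {lanes} values per 128-bit vector, "
          f"4097.0 stored as {round_trip(4097.0, fmt)}")
```

FP16 packs eight values per 128-bit vector versus four for FP32, and 4097.0 comes back as 4096.0 in FP16 but unchanged in FP32. A CPU running FP16 therefore does less work per result at lower precision, which is why the two sets of numbers are not directly comparable.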

Summary

Ampere's AmpereOne processors are the industry's first general-purpose CPUs with up to 192 cores, which certainly deserves a lot of respect. These CPUs also feature robust I/O capabilities and advanced security features, and promise instructions per clock (IPC) gains. They can also run AI workloads with FP16, BF16, INT16, and INT8 precision.

But the company chose rather controversial methods to prove its points when it comes to benchmark results, which casts a shadow over its achievements. That is why it will be particularly interesting to see independent test results of AmpereOne-based servers.