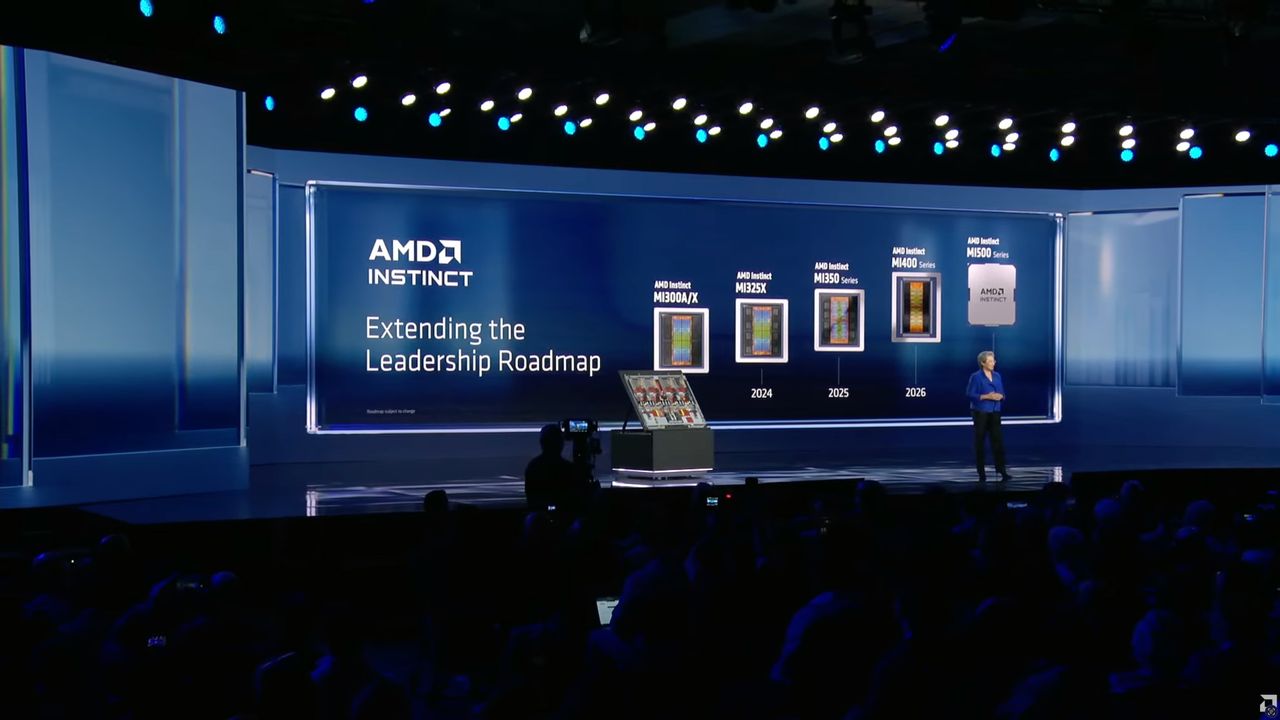

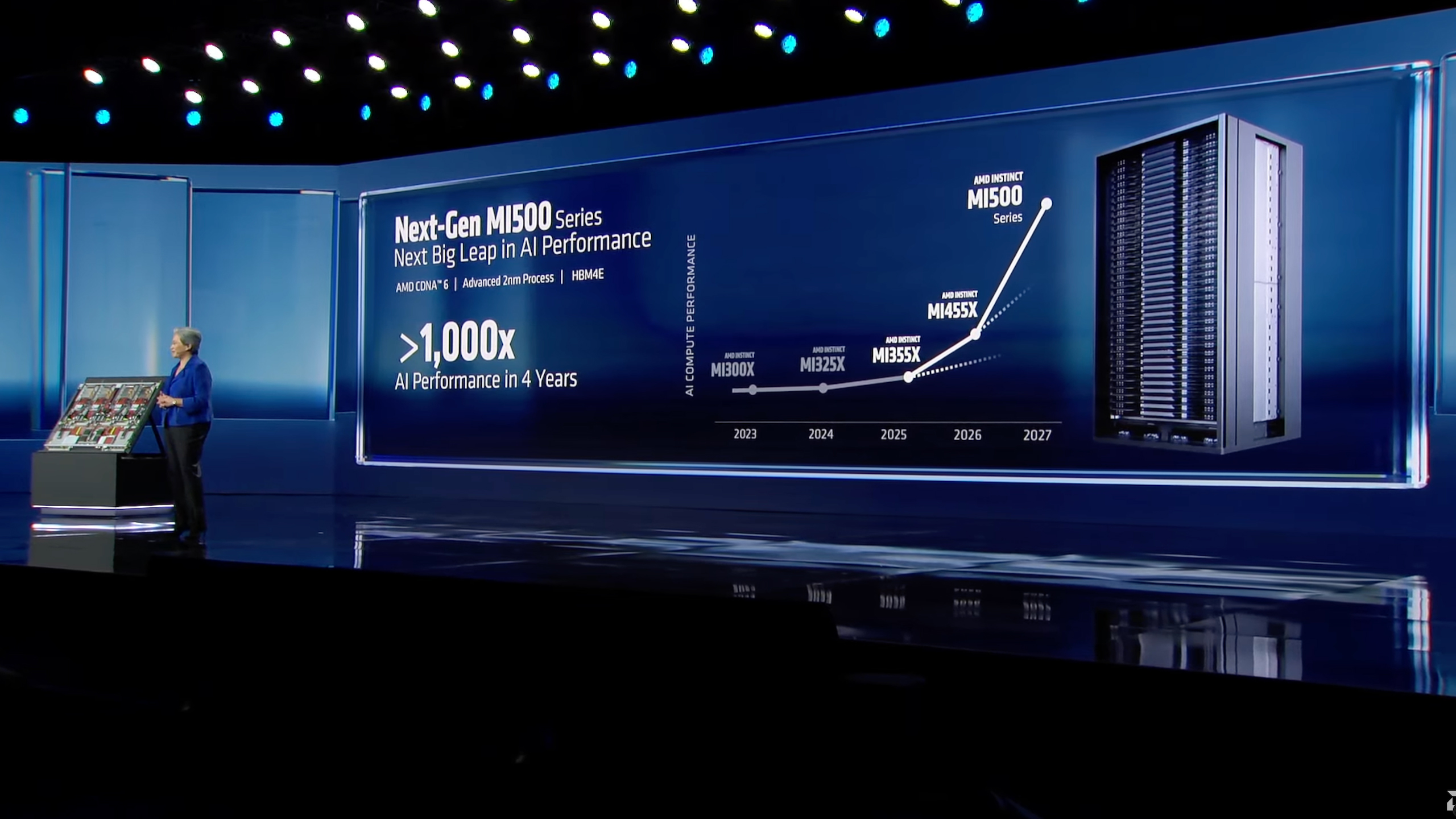

The compute capability demanded by AI data centers is set to increase dramatically, from around 100 ZettaFLOPS today to more than 10 YottaFLOPS* over the next five years (roughly a 100-fold increase), according to AMD. To stay relevant, hardware makers must therefore increase the performance of their products across the full stack every year. AMD is no exception: during the company's CES keynote, chief executive Lisa Su announced the Instinct MI500X-series AI and HPC GPUs, due in 2027.

"Demand for compute is growing faster than ever," said Lisa Su, chief executive of AMD. "Meeting that demand means continuing to push the envelope on performance far beyond where we are today. MI400 was the major inflection point in terms of delivering leadership training across all workloads, inference, and scientific computing. We are not stopping there. Development of our next-generation MI500-series is well underway. With MI500, we take another major leap on performance. It is built on our next gen CDNA 6 architecture [and] manufactured on 2nm process technology and uses higher speed HBM4E memory."

AMD's Instinct MI500X-series accelerators are set to be based on the CDNA 6 architecture (no UDNA yet?), with their compute chiplets made on one of TSMC's N2-series (2nm-class) fabrication processes. AMD says that its Instinct MI500X GPUs will offer up to 1,000 times higher AI performance than the Instinct MI300X accelerator from late 2023, but it does not define the metrics behind the comparison.

"With the launch of MI500 in 2027, we are on track to deliver 1000 times increase in AI performance over the last four years, making more powerful AI accessible to all," added Su.

A 1,000X performance increase in four years is a major achievement, though we should keep in mind that between the Instinct MI300X and the Instinct MI500 there is a three-generation instruction set architecture (ISA) gap (CDNA 3 => CDNA 6), a three-generation memory gap (HBM3 => HBM4E), the addition of FP4 and other low-precision formats, faster scale-up interconnects, and possibly a PCIe 6.0 connection to the host CPU.
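For a sense of scale, the 1,000X-in-four-years figure can be converted into an equivalent compound yearly gain. The sketch below is our own back-of-the-envelope arithmetic, not an AMD metric; the function name `annual_growth_factor` is hypothetical, and it assumes the gain compounds evenly across the four years, which real product generations do not.

```python
def annual_growth_factor(total_gain: float, years: float) -> float:
    """Return the constant yearly multiplier that compounds to total_gain.

    Assumption: growth is spread evenly over the period, which is a
    simplification (actual gains arrive in generational steps).
    """
    return total_gain ** (1.0 / years)


# 1,000X over four years, the figure quoted in the keynote.
factor = annual_growth_factor(1000.0, 4.0)
print(f"Equivalent compound gain: about {factor:.2f}x per year")
```

Under that even-compounding assumption, the claim works out to a bit more than a fivefold gain each year, which helps explain why low-precision formats and faster memory likely account for much of the headline number.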

Nonetheless, the Instinct MI500 will be an all-new generation of AMD's AI and HPC GPUs with major architectural improvements, which probably include substantially higher tensor/matrix compute density, tighter integration between compute and memory, and significantly improved performance per watt, perhaps achieved through a combination of ISA changes and TSMC's N2P fabrication process.

*One YottaFLOPS equals 1,000 ZettaFLOPS, or one million ExaFLOPS.
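The unit relationships in the footnote, and the roughly 100-fold growth AMD projects, can be checked with a few lines of integer arithmetic. This is only an illustrative sketch: the helper `to_flops` and the `PREFIX_EXPONENT` table are names we made up, and the inputs are the rounded figures quoted by AMD (100 ZettaFLOPS and 10 YottaFLOPS).

```python
# SI prefixes expressed as powers of ten (exa = 10^18, zetta = 10^21, yotta = 10^24).
PREFIX_EXPONENT = {"exa": 18, "zetta": 21, "yotta": 24}


def to_flops(value: int, prefix: str) -> int:
    """Convert a prefixed FLOPS figure into plain FLOPS (hypothetical helper)."""
    return value * 10 ** PREFIX_EXPONENT[prefix]


today = to_flops(100, "zetta")      # about 100 ZettaFLOPS, per AMD
projected = to_flops(10, "yotta")   # 10 YottaFLOPS, the lower bound of "10+"

growth = projected // today
yotta_in_zetta = to_flops(1, "yotta") // to_flops(1, "zetta")
yotta_in_exa = to_flops(1, "yotta") // to_flops(1, "exa")

print(f"Projected growth: {growth}x")
print(f"1 YottaFLOPS = {yotta_in_zetta} ZettaFLOPS = {yotta_in_exa} ExaFLOPS")
```

Because AMD quotes "10+" YottaFLOPS, the 100-fold figure is a floor for the projection rather than an exact value.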
