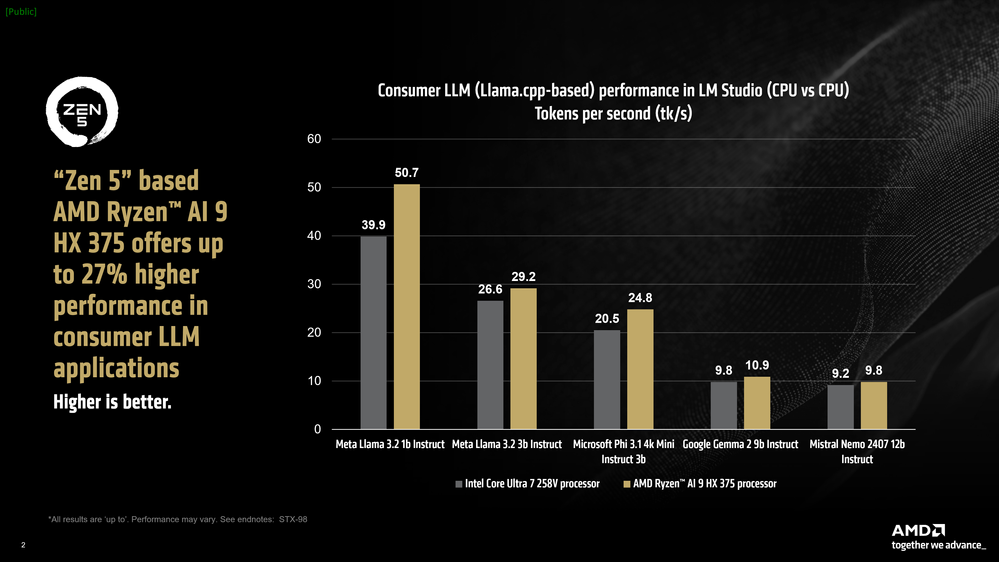

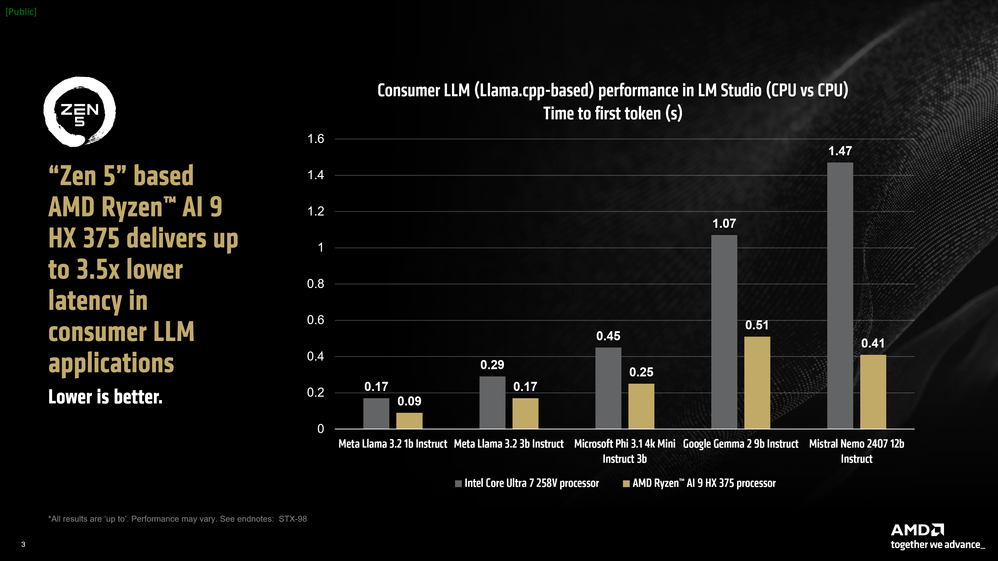

AMD claims that its Ryzen AI 300 (codenamed Strix Point) offerings can easily beat Intel's latest Core Ultra 200V (codenamed Lunar Lake) CPUs in consumer LLM workloads. Team Red has showcased several charts comparing processors from both lineups, with AMD in front by up to 27%.

Since the AI boom, tech giants on both the hardware and software fronts have entered an arms race to outpace each other in the AI landscape. While much of this is driven purely by "AI hype," benefits for mainstream customers are starting to materialize. Almost every CPU manufacturer now reserves valuable silicon space for a Neural Processing Unit (NPU), and Microsoft demands at least 40 TOPS of NPU performance for any system to be considered a "Copilot+ PC."

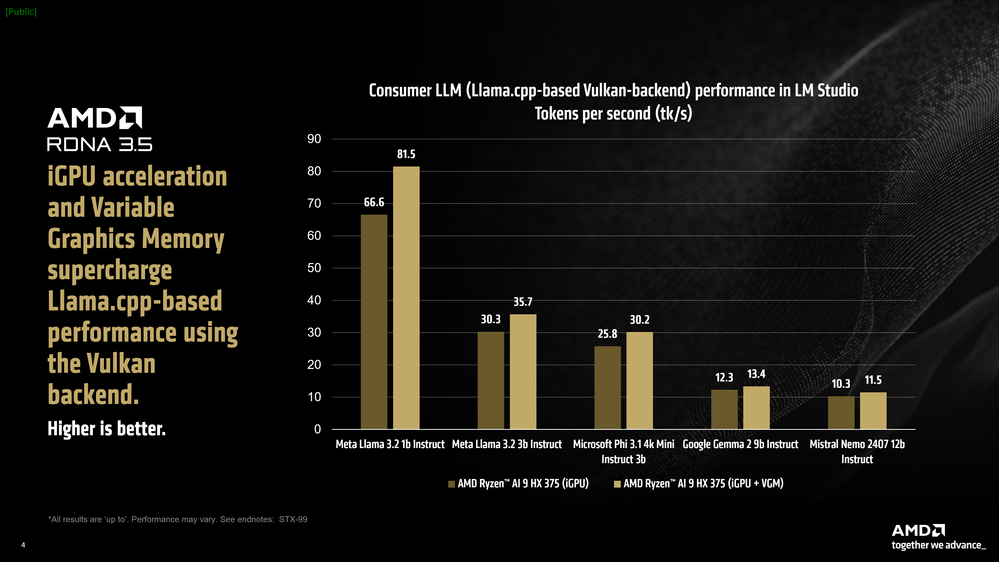

On that note, AMD says that its Strix Point APUs outperform Intel's Lunar Lake when running LLMs locally. The tests were run in LM Studio, a consumer LLM application based on the llama.cpp framework. According to AMD, the Ryzen AI 9 HX 375 leads the Core Ultra 7 258V by up to 27% in the CPU department. The latency benchmarks are an absolute bloodbath for Intel, with AMD reportedly delivering 3.5X lower latency in the Mistral Nemo 2407 12b Instruct model. When switching to the integrated graphics solution, the Ryzen AI 9 HX 375's Radeon 890M iGPU is, at best, 23% faster than Intel's Arc 140V.
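For readers who want to sanity-check headline figures like these, here is a minimal Python sketch of the arithmetic behind an "X% faster" claim and an "N times lower latency" claim. The function names and the input numbers are hypothetical, picked only to reproduce the 27% and 3.5X ratios; they are not AMD's measured results, and AMD's exact methodology may differ.

```python
def percent_lead(a_rate: float, b_rate: float) -> float:
    """Percent by which A beats B on a higher-is-better metric (e.g. throughput)."""
    return (a_rate / b_rate - 1.0) * 100.0


def latency_ratio(a_seconds: float, b_seconds: float) -> float:
    """How many times lower A's latency is than B's (lower is better)."""
    return b_seconds / a_seconds


# Hypothetical inputs, chosen only so the ratios match the headline claims.
print(round(percent_lead(12.7, 10.0), 1))  # 27.0
print(latency_ratio(2.0, 7.0))             # 3.5
```

Note that the two claims are not interchangeable: a 3.5X lower latency means the slower chip takes 3.5 times as long, which is a far larger gap than a 27% lead.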

Interestingly, while the HX 375 is the fastest Strix Point APU, the Core Ultra 7 258V is not the fastest Lunar Lake chip. The flagship Core Ultra 9 288V offers a full 30W TDP, which brings it closer to Strix Point, since the latter can be configured as high as 54W.

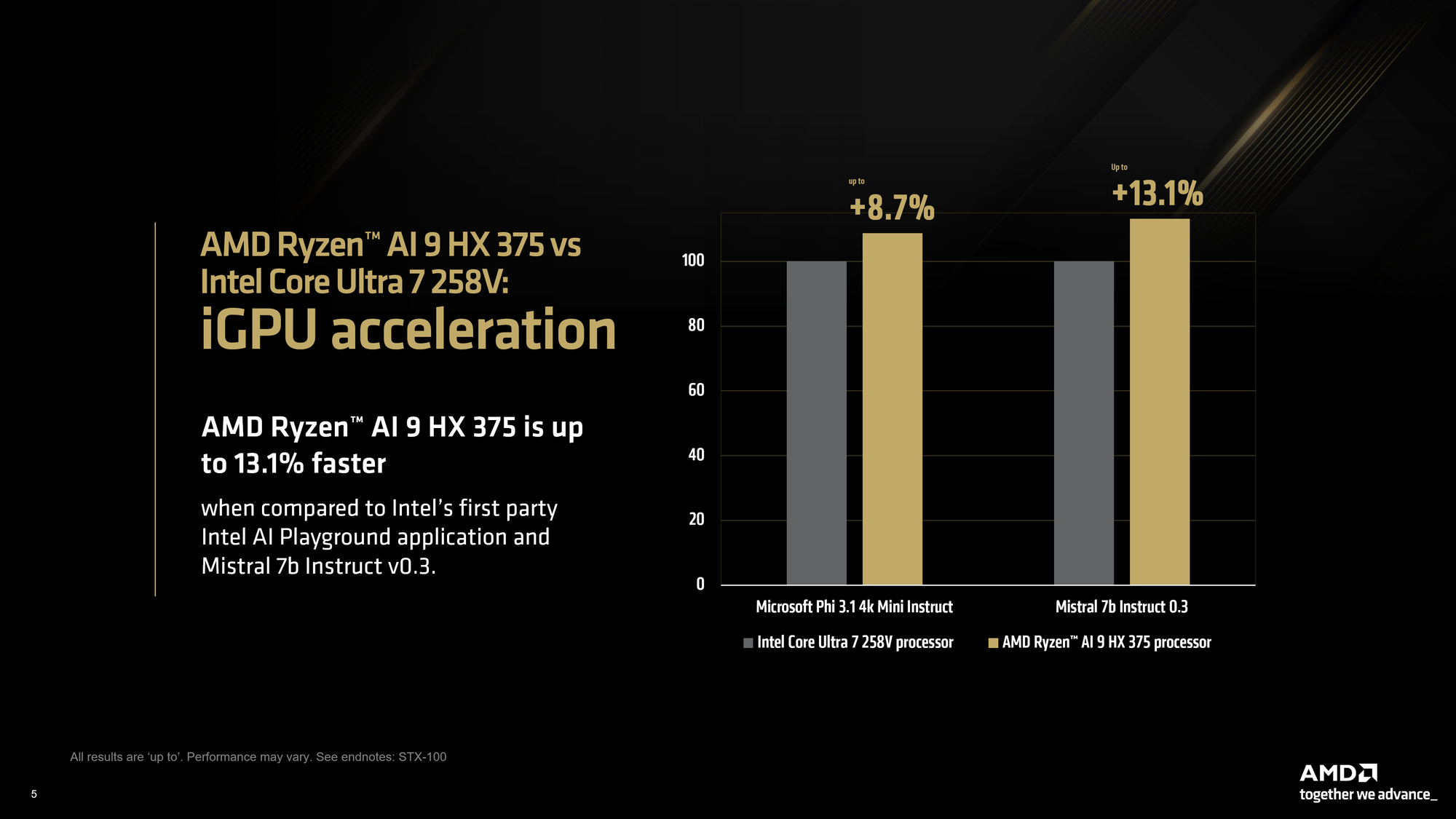

That aside, AMD then tested both laptops in Intel's own first-party AI Playground, where AMD is in front by 13.1% using the Mistral 7b Instruct v0.3 model. The Microsoft Phi 3.1 Mini Instruct test is slightly less exciting, as the lead drops to 8.7%.

All in all, it is not every day that you fire up your laptop to run a local LLM, but who knows what the future holds? It would've been more exciting to see the kingpin Core Ultra 9 288V in action, but don't expect drastically different results. AMD says that it is actively working to make LLMs, currently gated behind many technical barriers, more accessible to everyone, which highlights the importance of projects like LM Studio.