AMD provided more details on its upcoming Instinct MI350 CDNA4 AI accelerator and data center GPU today, formally announcing the Instinct MI355X. It also provided additional details on the now-shipping MI325X, whose memory capacity has apparently been trimmed slightly since the last time AMD discussed it.

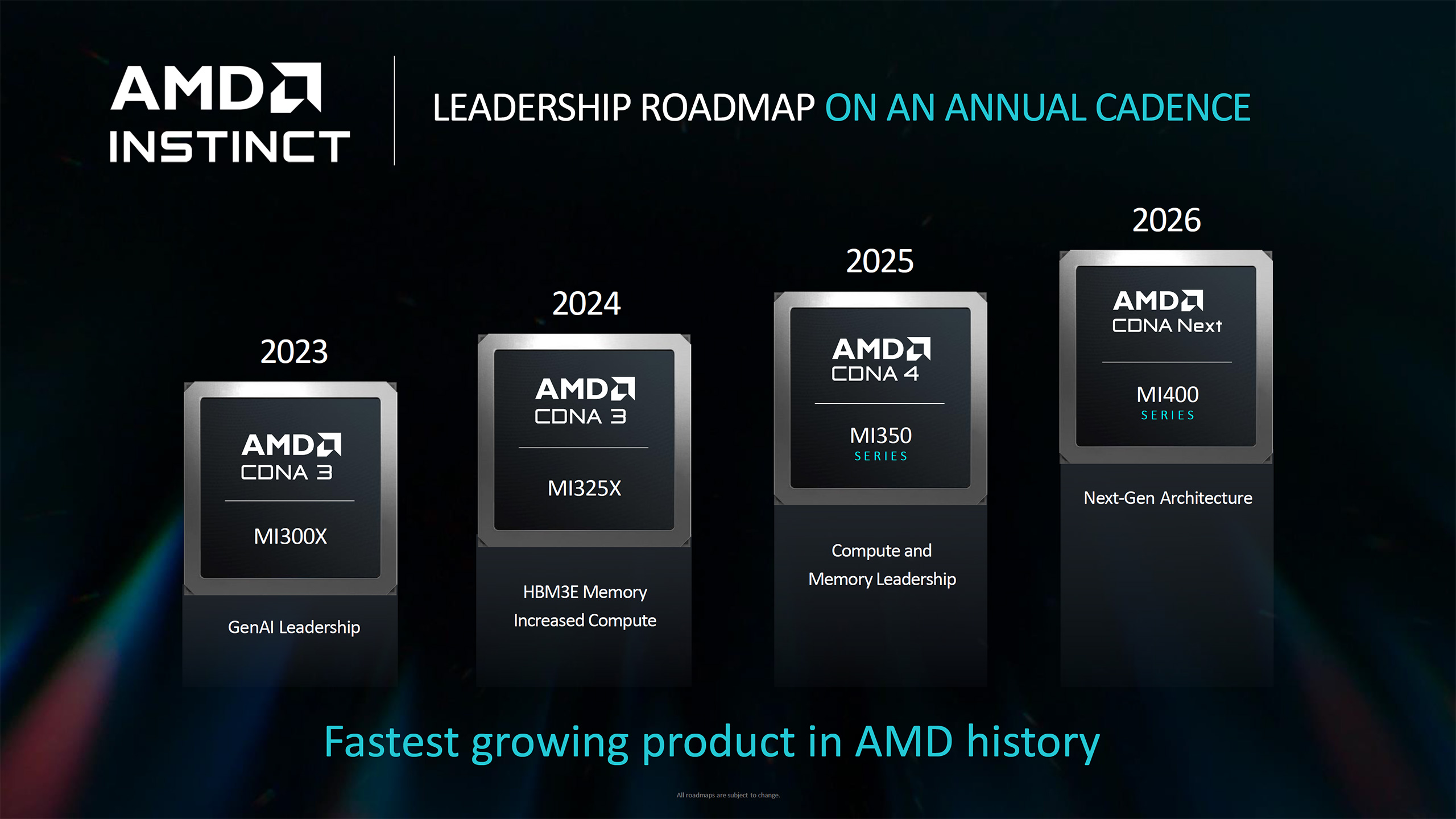

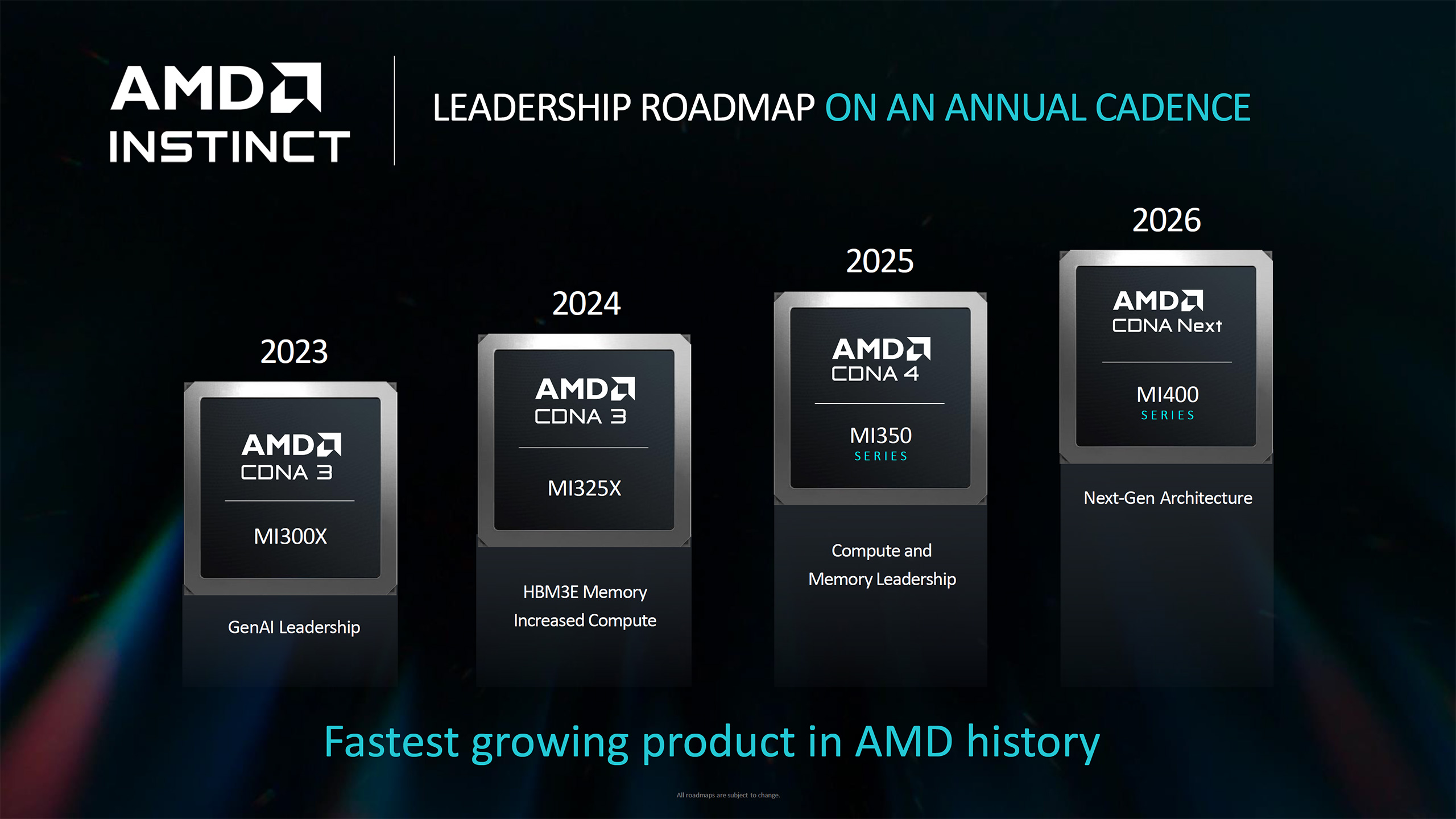

MI355X is slated to begin shipping in the second half of 2025, so it's still a ways off. Even so, AMD has seen massive adoption of its AI accelerators in recent years, with the MI300 series being the fastest product ramp in AMD's history, so, like Nvidia, it is now on a yearly cadence for product launches.

Let's start with the new Instinct MI355X. The whole MI350 series feels a bit odd in terms of branding, considering CDNA was used with the MI100, then CDNA2 in the MI200 series, and CDNA3 has powered the MI300 series for the past year or so. And now, we have CDNA4 powering ... MI350. Why? We've asked and we'll see if there's a good answer. There is an MI400 series in development already, currently slated for a 2026 launch, and maybe that was already in progress before AMD pivoted and decided to add more products.

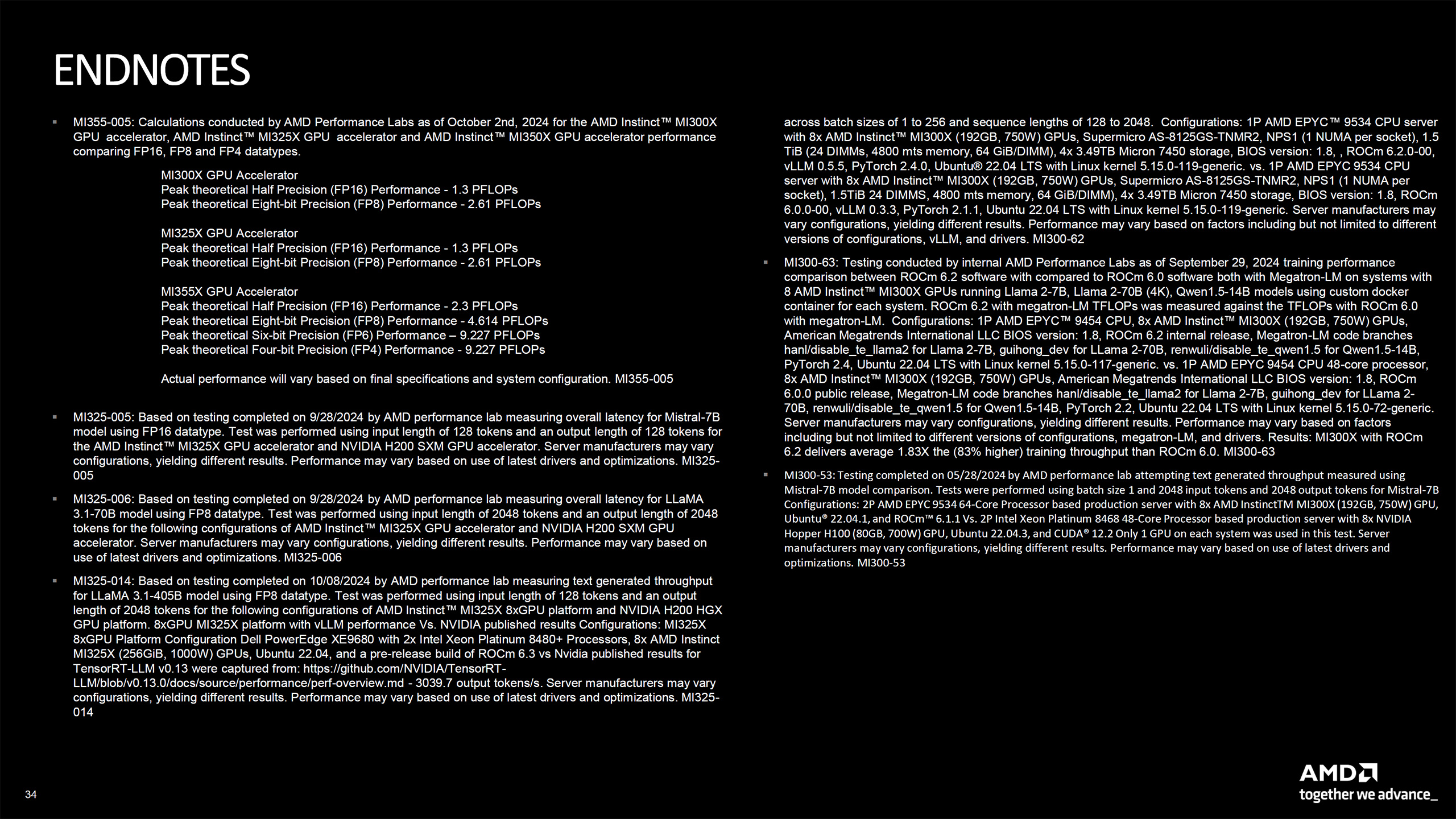

Regardless of the product name, CDNA4 does represent a new architecture. AMD said it was a "from the ground up redesign" in our briefing, though that's perhaps a bit of an exaggeration. MI355X will use TSMC's latest N3 process node, which does require a fundamental reworking compared to N5, but the core design likely remains quite similar to CDNA3. What is new is support for the FP4 and FP6 data types.

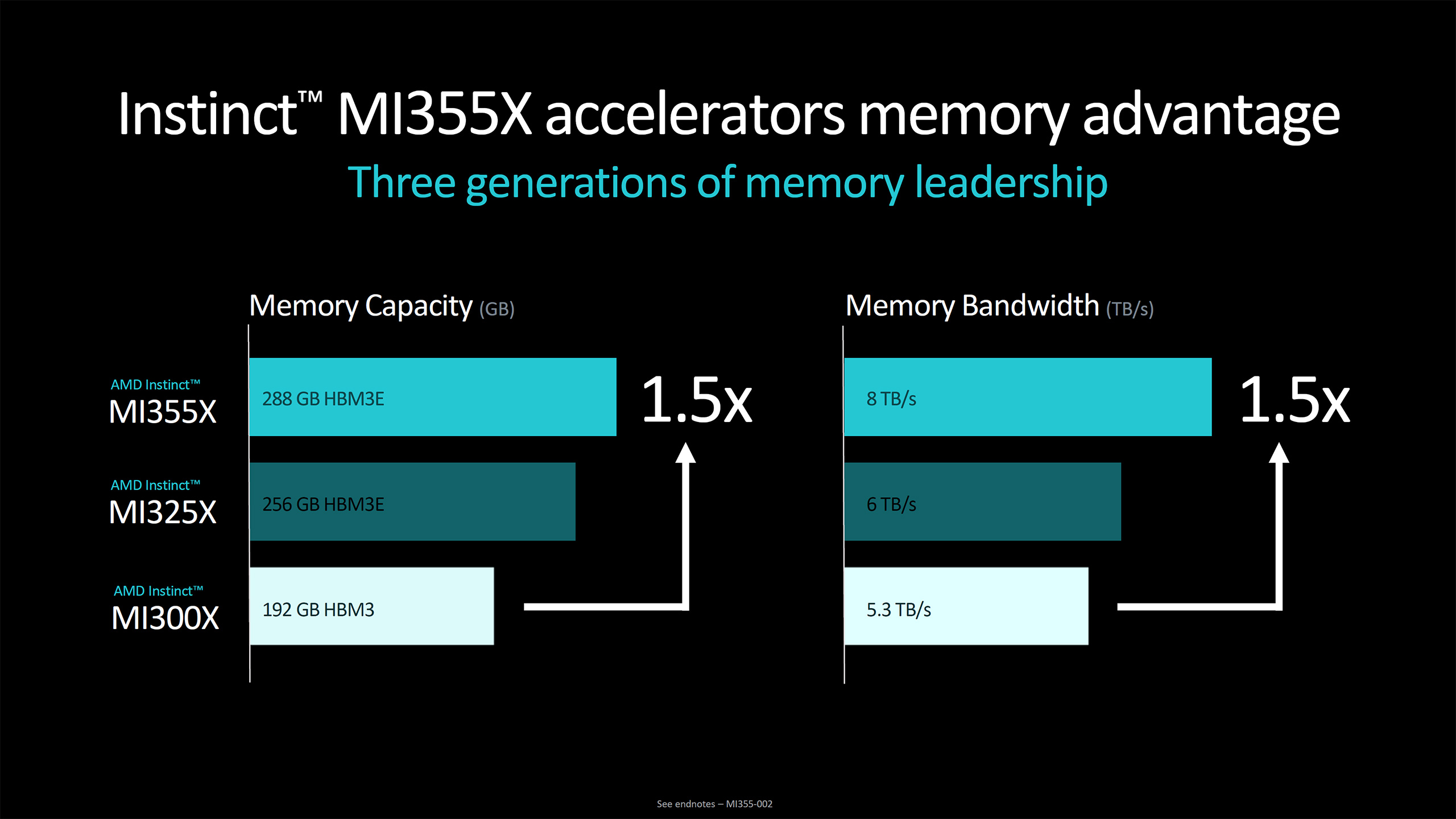

AMD is presenting the MI355X as a "preview" of what will come, and as we'll discuss below, that means some of the final specifications could change. It will support up to 288GB of HBM3E memory, presumably across eight stacks. AMD said it will feature 10 "compute elements" per GPU, which really doesn't tell us much about the potential on its own, but AMD did provide some other initial specifications.
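As a quick sanity check on the "presumably across eight stacks" estimate, the sketch below divides the advertised capacity by an assumed stack count. The eight-stack figure is our inference rather than an AMD-confirmed spec, and the helper `gb_per_stack` is purely illustrative.

```python
# Hypothetical helper: per-stack HBM capacity, assuming the total is split
# evenly across stacks. The eight-stack count below is an assumption.
def gb_per_stack(total_gb: float, stacks: int) -> float:
    """Return capacity per stack in GB for an even split."""
    if stacks <= 0:
        raise ValueError("stacks must be a positive integer")
    return total_gb / stacks


# MI355X: up to 288GB of HBM3E; eight stacks is presumed, not confirmed.
print(f"{gb_per_stack(288, 8):.0f} GB per stack")  # 36 GB per stack
```

A 36GB stack would line up with 12-high HBM3E built from 24Gb DRAM dies, but until AMD publishes the configuration, that remains an educated guess.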

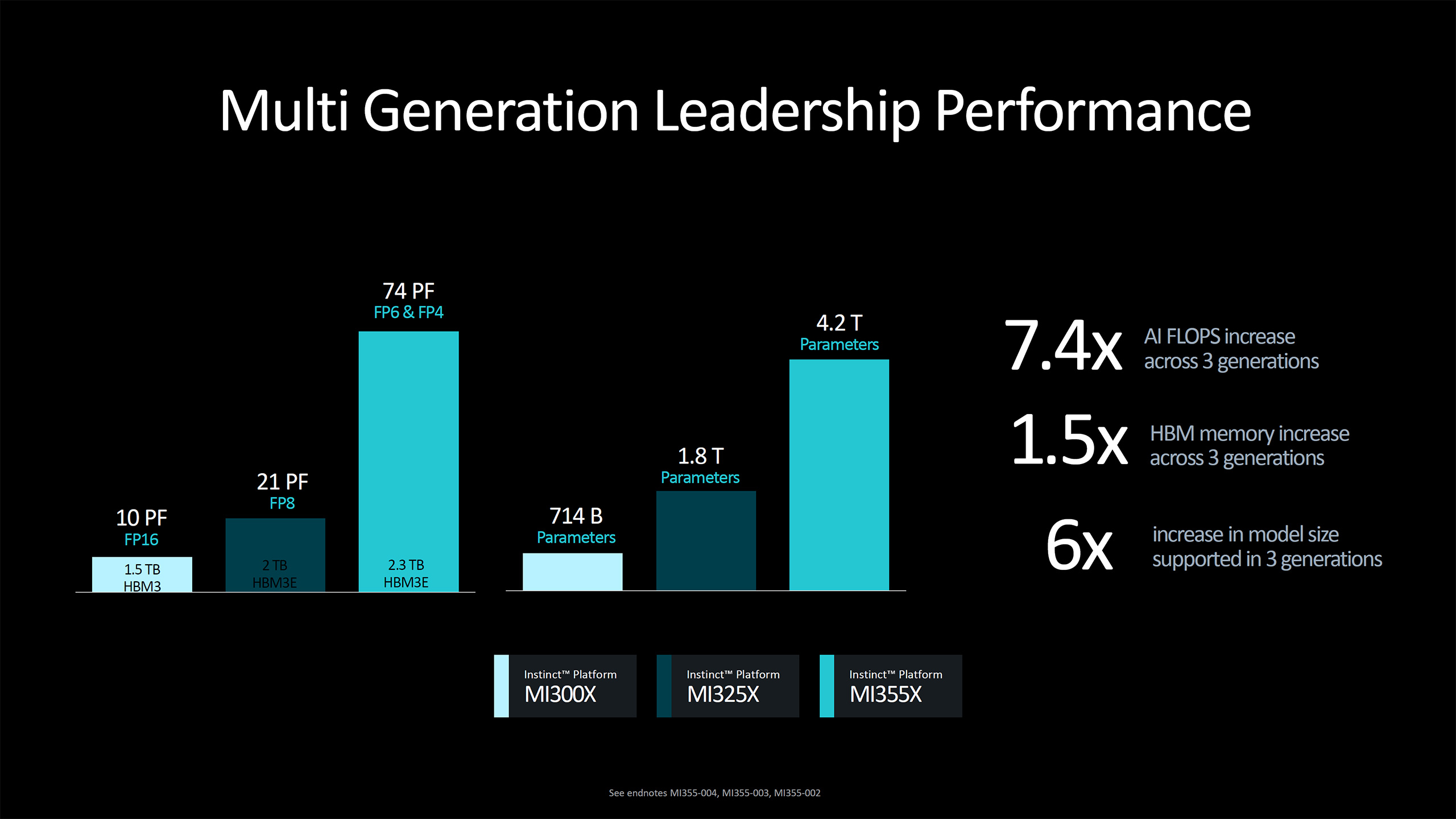

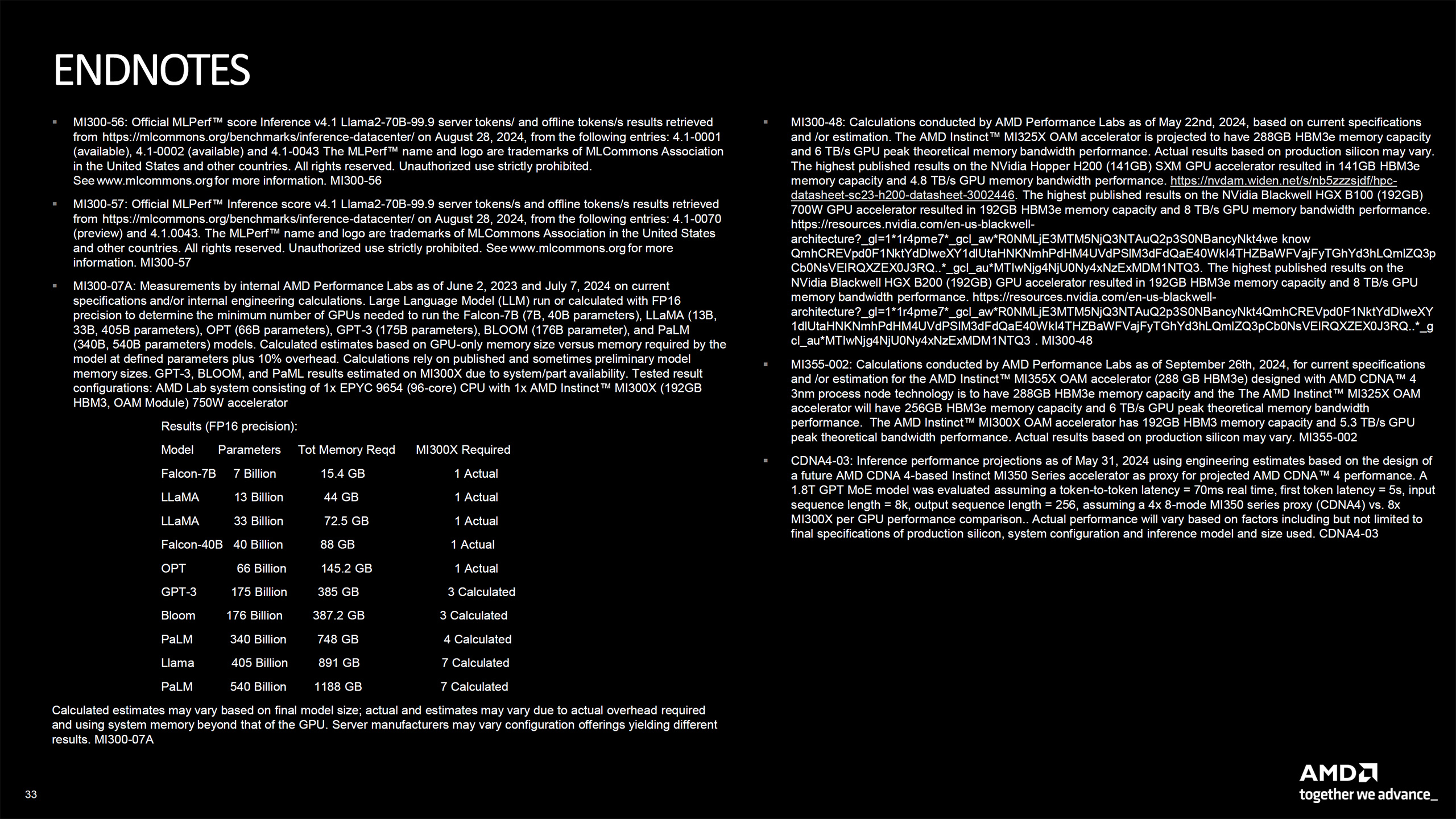

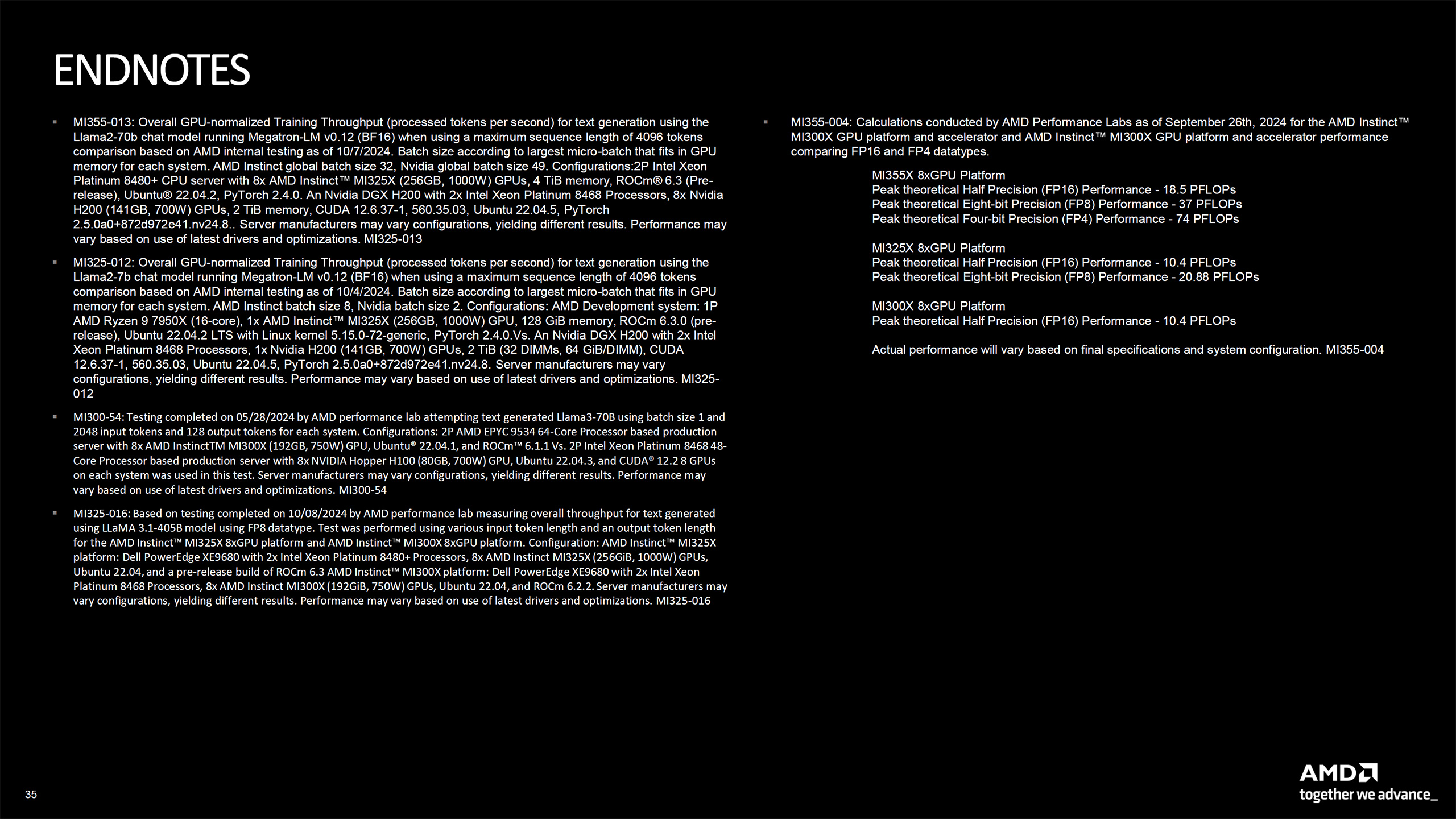

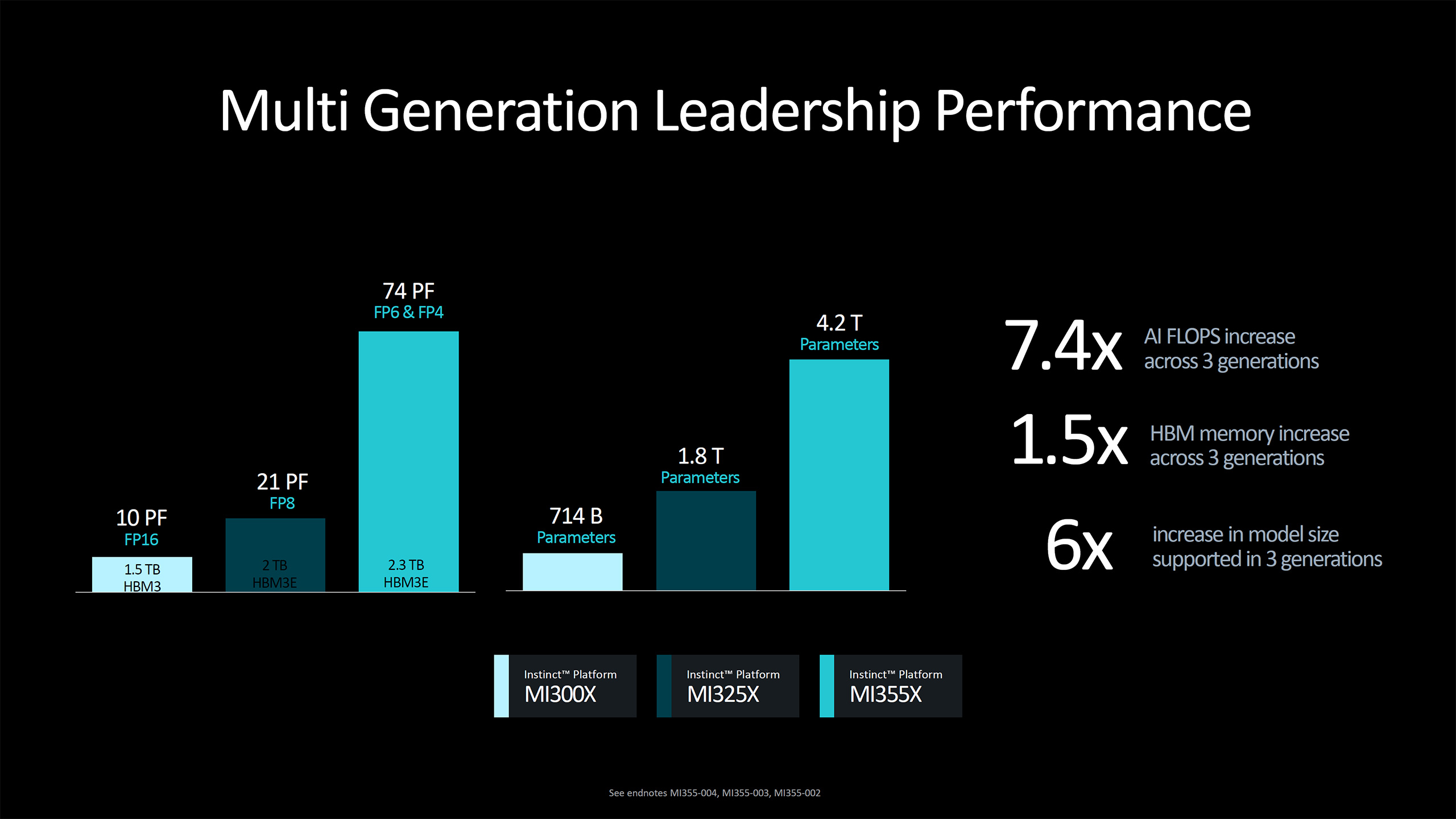

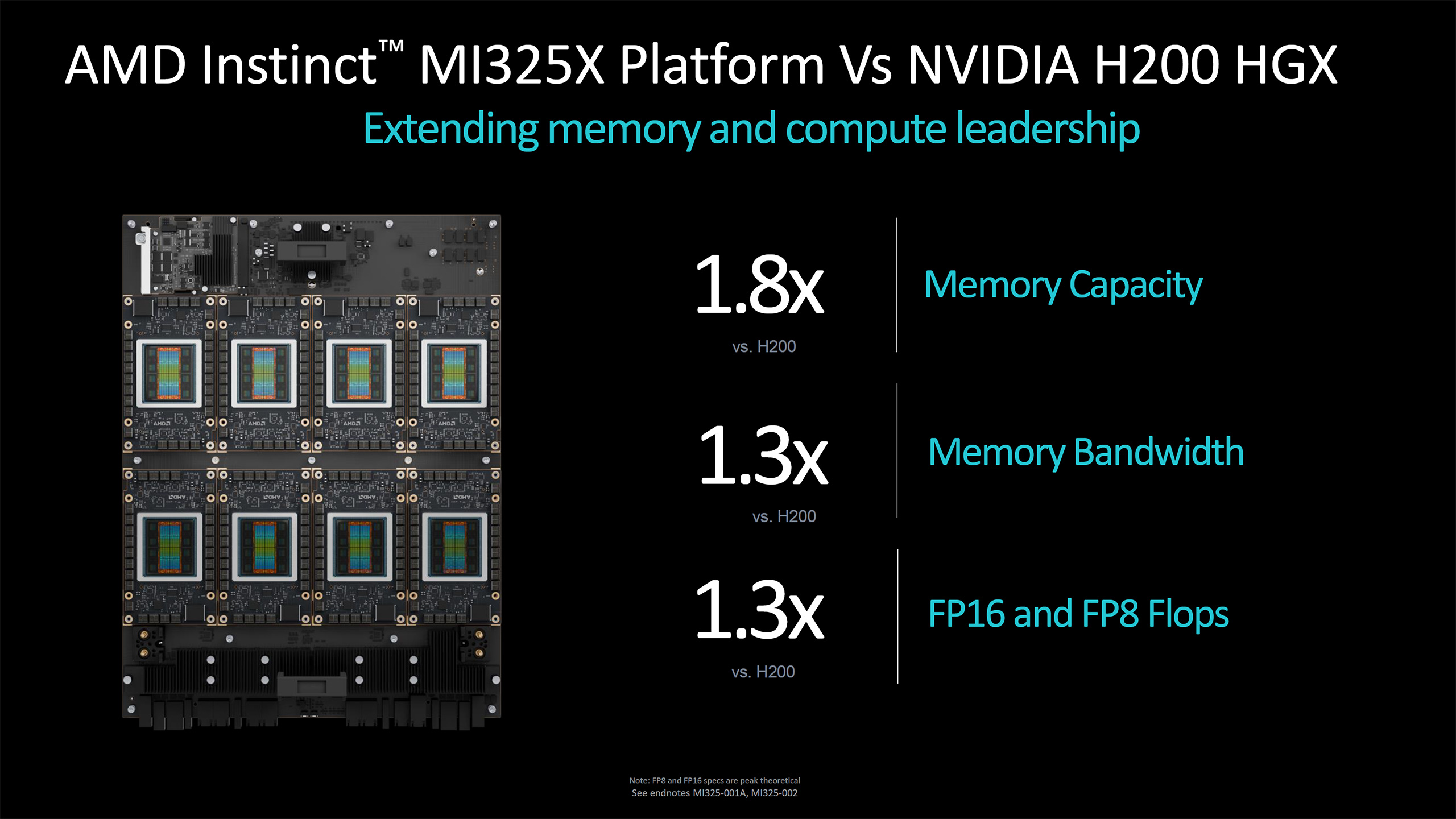

The MI300X currently offers 1.3 petaflops of FP16 compute and 2.61 petaflops of FP8. The MI355X, by comparison, will boost those to 2.3 and 4.6 petaflops for FP16 and FP8, respectively. That's roughly a 77% improvement relative to the previous generation. Note also that the MI325X has the same compute as the MI300X, just with 33% more memory (now HBM3E rather than HBM3) and a higher TDP.
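The generational uplift is simple arithmetic on AMD's quoted peak figures. This minimal sketch computes it for both formats; the dictionary layout and the `pct_increase` helper are our own illustrative choices.

```python
# Peak dense throughput in petaflops, as quoted by AMD.
MI300X = {"FP16": 1.3, "FP8": 2.61}
MI355X = {"FP16": 2.3, "FP8": 4.6}


def pct_increase(old: float, new: float) -> float:
    """Percentage increase going from old to new."""
    return (new / old - 1.0) * 100.0


for fmt in ("FP16", "FP8"):
    print(f"{fmt}: {pct_increase(MI300X[fmt], MI355X[fmt]):.1f}%")
# FP16: 76.9%
# FP8: 76.2%
```

Both formats land at roughly 76 to 77%, which is where the rounded "77%" figure comes from.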

MI355X doesn't just have more raw compute, however. The introduction of the FP4 and FP6 numerical formats doubles the potential compute yet again relative to FP8, so that a single MI355X offers up to 9.2 petaflops of FP4 compute. That's an interesting number, as the Nvidia Blackwell B200 also offers 9 petaflops of dense FP4 compute — with the higher power GB200 implementation offering 10 petaflops of FP4 per GPU.
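The 9.2 petaflops FP4 figure follows from doubling the rate at each step down in precision. The sketch below encodes the rate multipliers as described here (FP8 at twice the FP16 rate, FP6 and FP4 at twice the FP8 rate) and compares the result with the Nvidia per-GPU FP4 figures quoted above. The multiplier table is our reading of AMD's claims, not an official spec sheet.

```python
# Rate multipliers relative to FP16, per the description in the text:
# FP8 doubles FP16, and FP6/FP4 double FP8 again. Final specs may differ.
RATE_VS_FP16 = {"FP16": 1, "FP8": 2, "FP6": 4, "FP4": 4}

MI355X_FP16_PFLOPS = 2.3

# Dense FP4 petaflops per GPU quoted for Nvidia's Blackwell parts.
NVIDIA_FP4_PFLOPS = {"B200": 9.0, "GB200": 10.0}


def peak_pflops(fp16_pflops: float, fmt: str) -> float:
    """Scale an FP16 peak figure to another format using the multipliers."""
    return fp16_pflops * RATE_VS_FP16[fmt]


mi355x_fp4 = peak_pflops(MI355X_FP16_PFLOPS, "FP4")
print(f"MI355X FP4: {mi355x_fp4:.1f} PF")
for name, pf in NVIDIA_FP4_PFLOPS.items():
    print(f"MI355X vs {name}: {mi355x_fp4 / pf:.2f}x")
# MI355X FP4: 9.2 PF
# MI355X vs B200: 1.02x
# MI355X vs GB200: 0.92x
```

On paper, that puts MI355X within about 10% of either Blackwell configuration, though peak numbers say little about delivered performance.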

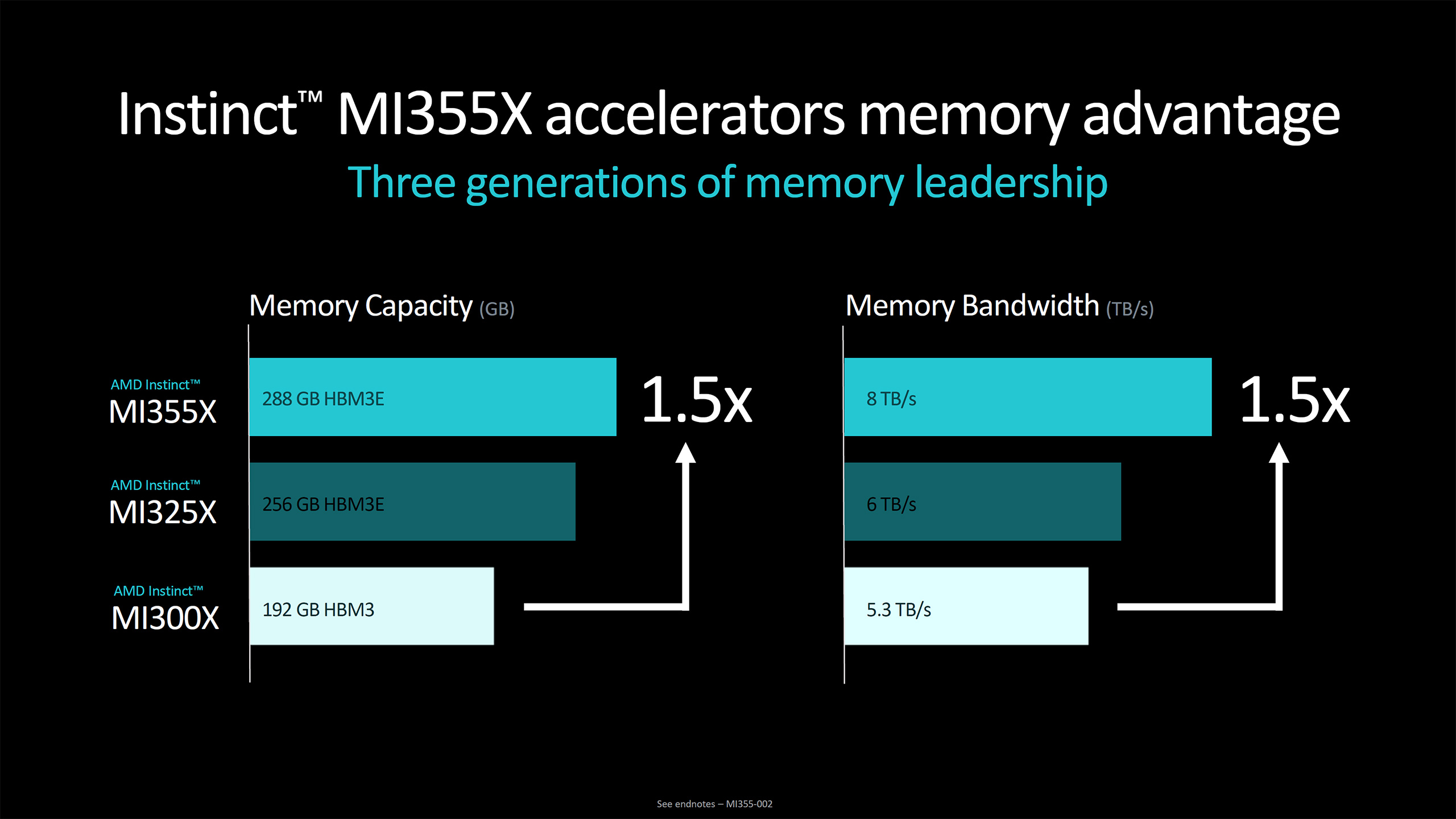

Based on that spec alone, AMD could deliver roughly the same AI computational horsepower with MI355X as Nvidia will have with Blackwell. AMD will also offer up to 288GB of HBM3E memory, 50% more than the 192GB Nvidia currently offers with Blackwell. Both Blackwell and MI355X will have 8 TB/s of bandwidth per GPU.
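With bandwidth equal, the capacity gap also changes how long it takes to stream the whole memory pool. This minimal sketch computes the capacity advantage and a rough lower bound on the time to read every byte once at peak bandwidth. It assumes decimal units (1 TB = 1000 GB) and ignores real-world efficiency; the helper names are illustrative.

```python
MI355X_HBM_GB = 288
BLACKWELL_HBM_GB = 192   # Blackwell capacity today, per the 50% comparison
PEAK_BW_TB_S = 8.0       # per GPU, same for both parts


def capacity_advantage_pct(ours_gb: float, theirs_gb: float) -> float:
    """How much larger one memory pool is than another, in percent."""
    return (ours_gb / theirs_gb - 1.0) * 100.0


def full_sweep_ms(capacity_gb: float, bw_tb_s: float) -> float:
    """Lower bound on time to read all memory once at peak bandwidth.

    GB divided by TB/s yields milliseconds when 1 TB = 1000 GB.
    """
    return capacity_gb / bw_tb_s


print(f"Capacity advantage: {capacity_advantage_pct(MI355X_HBM_GB, BLACKWELL_HBM_GB):.0f}%")
for name, gb in (("MI355X", MI355X_HBM_GB), ("Blackwell", BLACKWELL_HBM_GB)):
    print(f"{name}: {full_sweep_ms(gb, PEAK_BW_TB_S):.0f} ms per full sweep")
# Capacity advantage: 50%
# MI355X: 36 ms per full sweep
# Blackwell: 24 ms per full sweep
```

More capacity lets larger models or batches fit on one GPU, but with identical bandwidth, the larger pool takes proportionally longer to read in full.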

Of course, there's more to AI than just compute, memory capacity, and bandwidth. Beyond a certain point, scaling up to higher numbers of GPUs often becomes the limiting factor, and AMD hasn't said whether it is making any changes to the interconnects between GPUs. Nvidia talked about that quite a bit with its Blackwell announcement, so it will be something to pay attention to when the products start shipping.

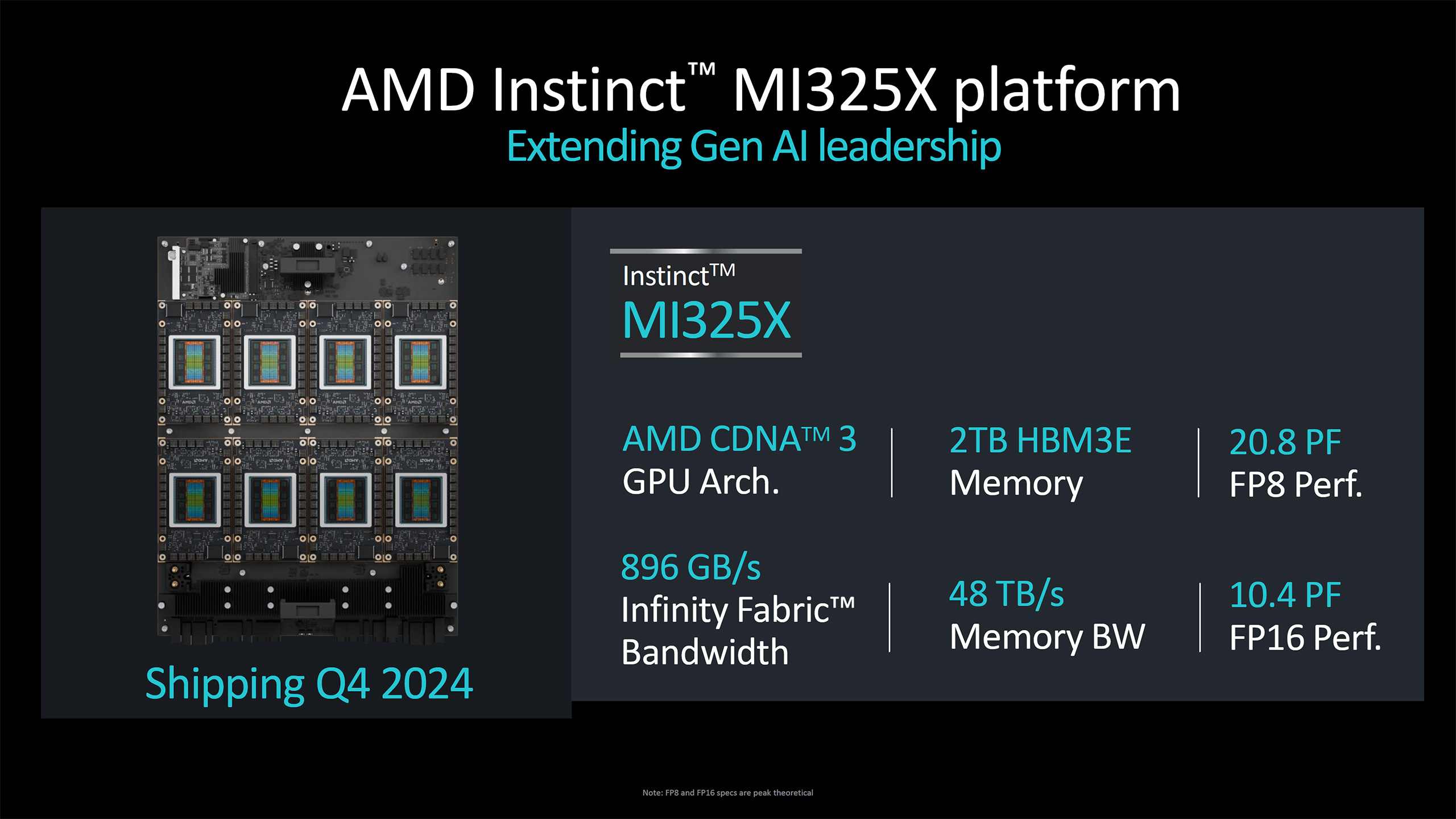

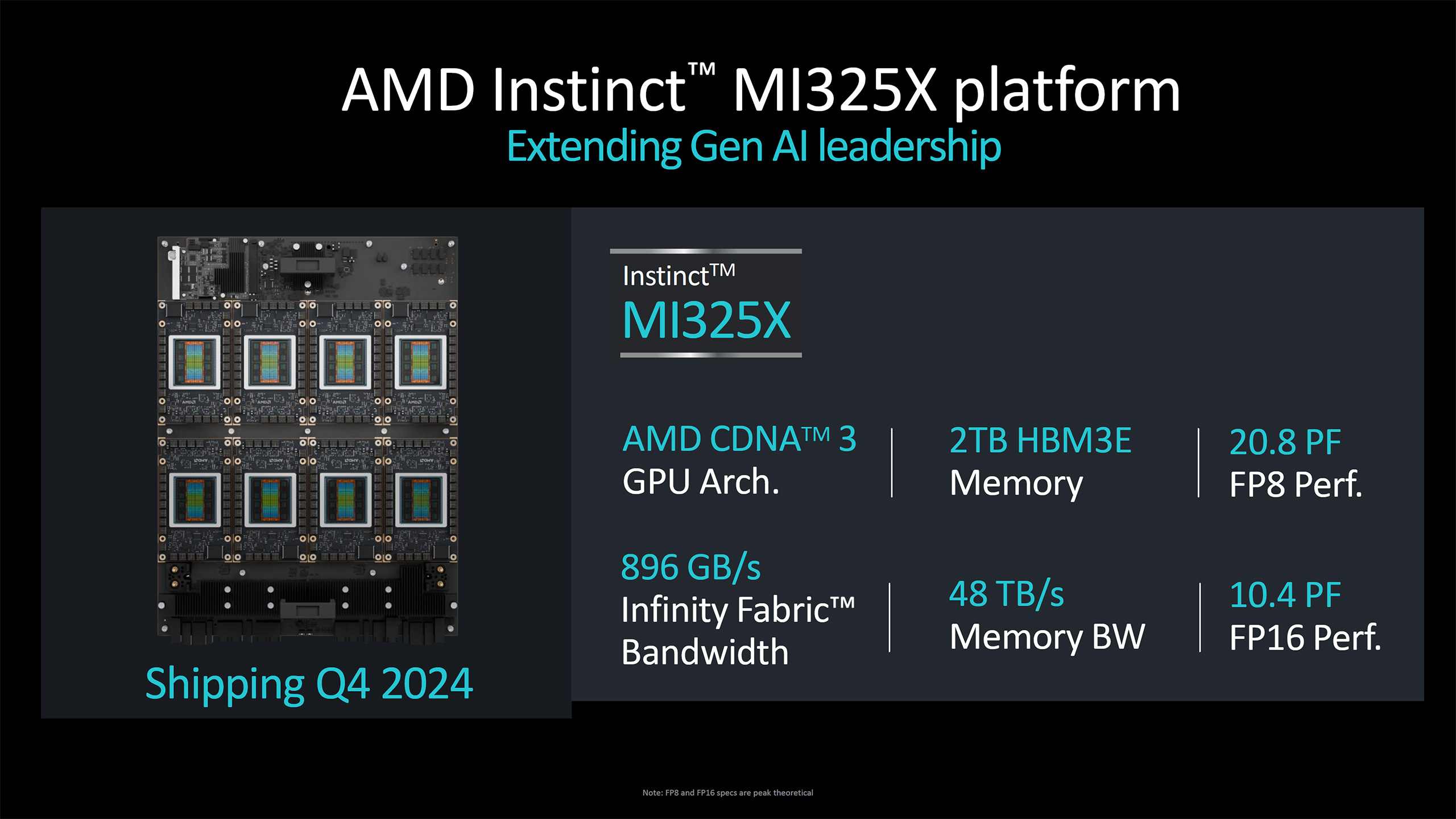

The other part of today's AMD Instinct announcement is that the MI325X has officially launched and is entering full production this quarter. Along with the announcement, however, comes an interesting nugget: AMD cut the maximum supported memory per GPU from the previously stated 288GB to 256GB.

The main change from MI300X to MI325X was the amount of memory per GPU, with MI300X offering up to 192GB. So initially, AMD was looking at a 50% increase with MI325X, but now it has cut that to a 33% increase.
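The revised capacity changes the headline uplift. This short sketch compares the originally announced and shipping MI325X capacities against the 192GB MI300X baseline; the variable names are illustrative.

```python
MI300X_GB = 192
MI325X_PLANNED_GB = 288   # capacity AMD stated earlier
MI325X_SHIPPING_GB = 256  # capacity at launch


def pct_change(old: float, new: float) -> float:
    """Signed percentage change from old to new."""
    return (new / old - 1.0) * 100.0


print(f"Planned uplift over MI300X: {pct_change(MI300X_GB, MI325X_PLANNED_GB):.0f}%")
print(f"Shipping uplift over MI300X: {pct_change(MI300X_GB, MI325X_SHIPPING_GB):.0f}%")
print(f"Shipping vs. planned: {pct_change(MI325X_PLANNED_GB, MI325X_SHIPPING_GB):.1f}%")
# Planned uplift over MI300X: 50%
# Shipping uplift over MI300X: 33%
# Shipping vs. planned: -11.1%
```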

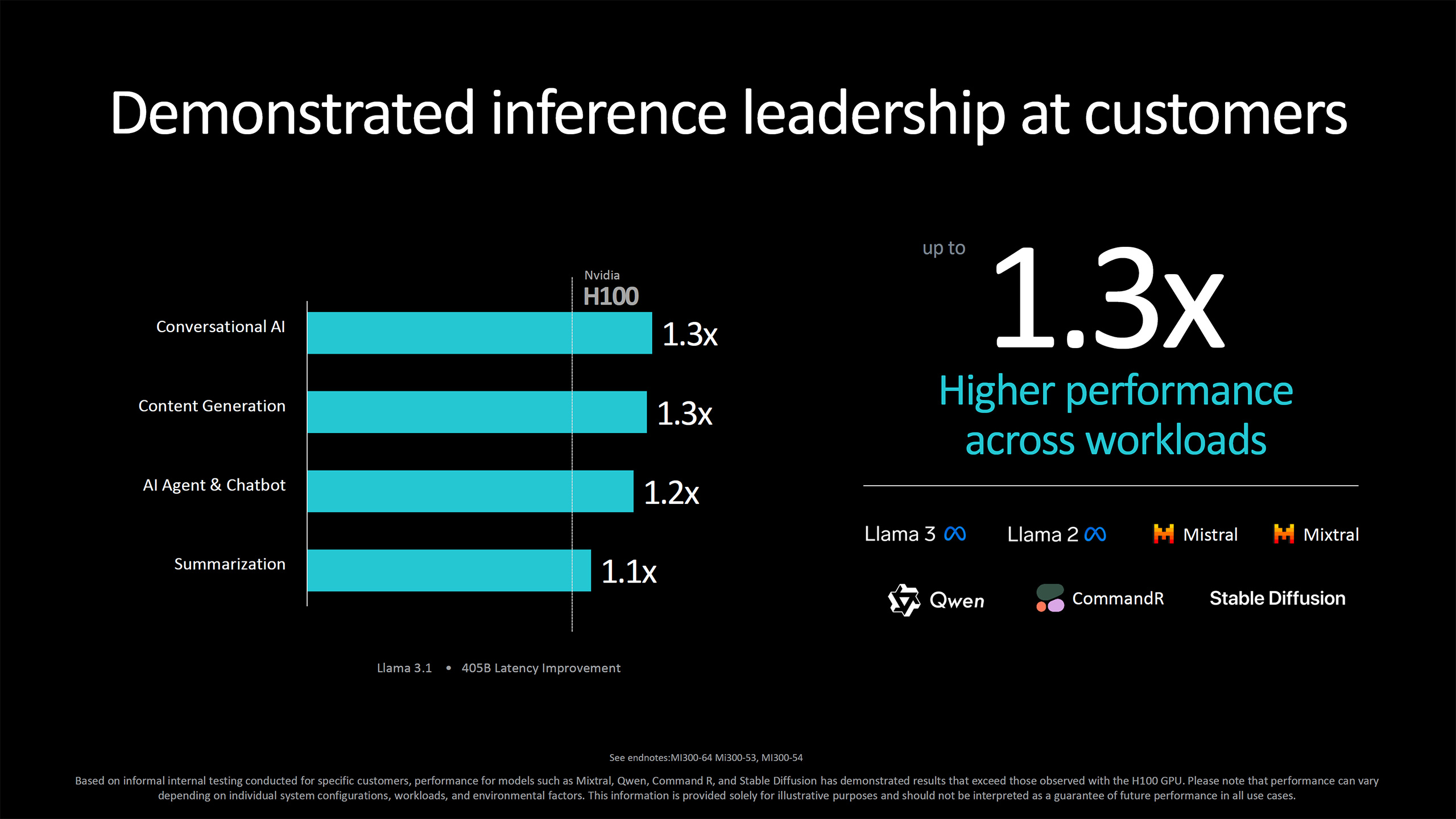

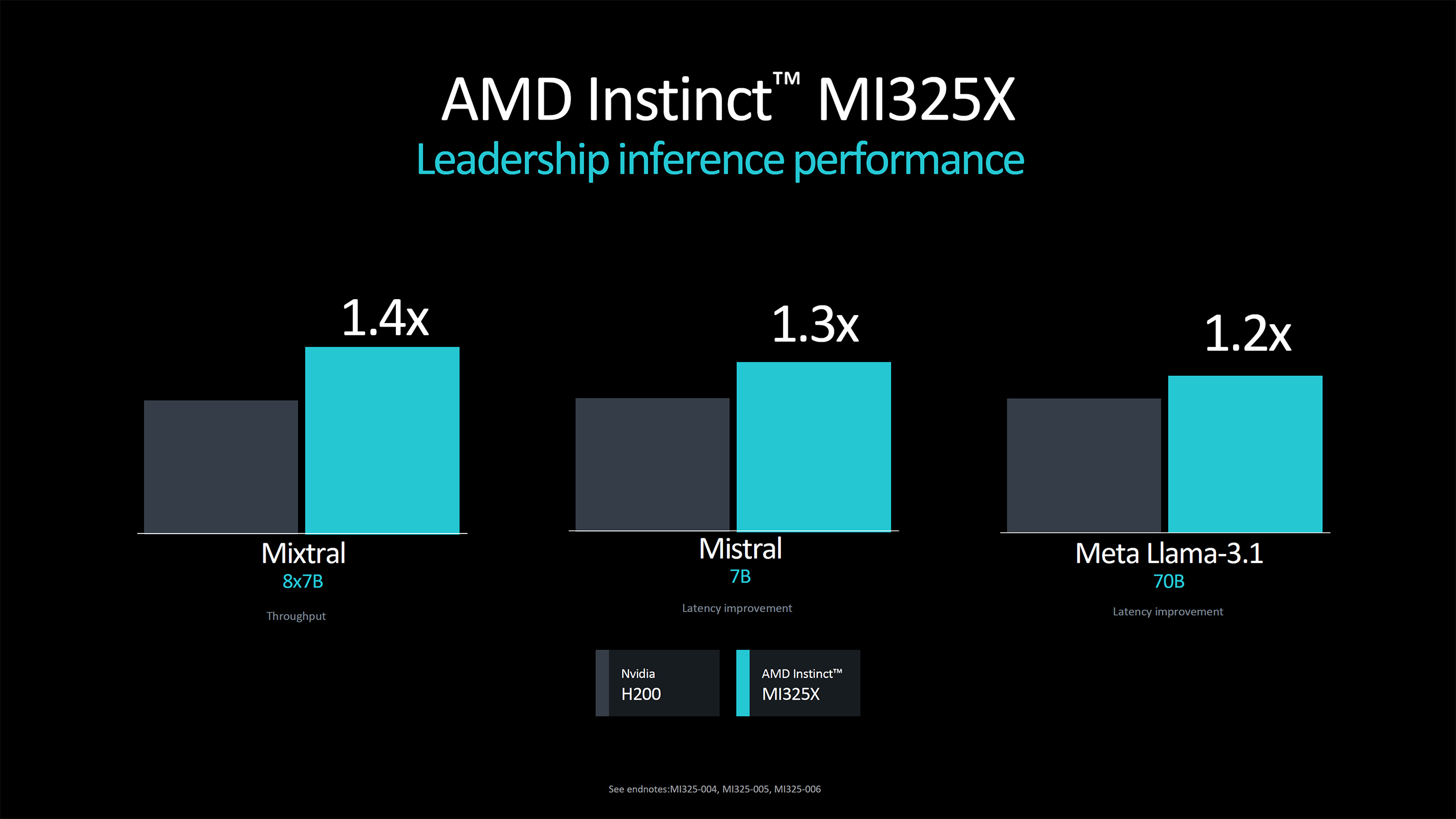

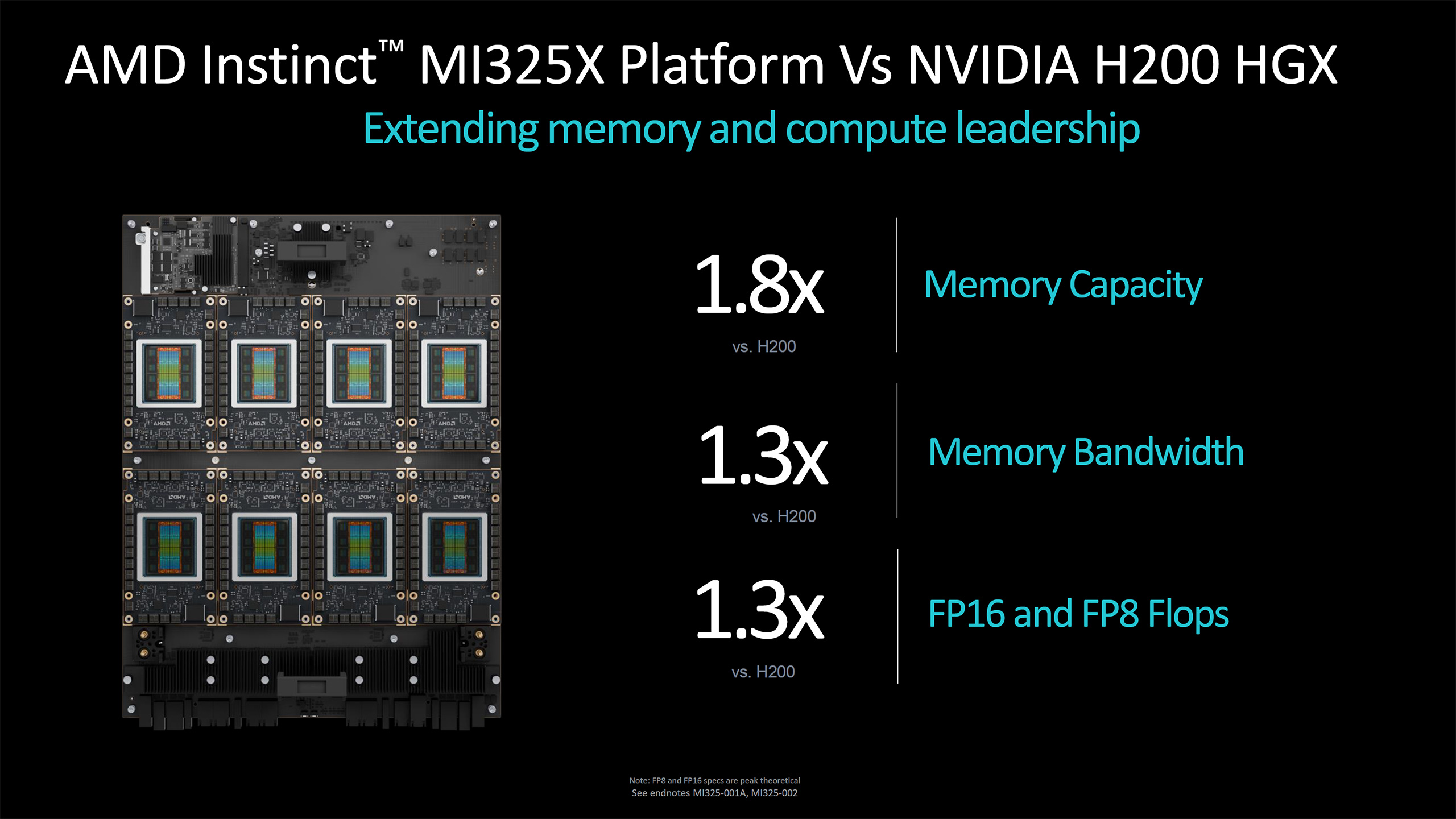

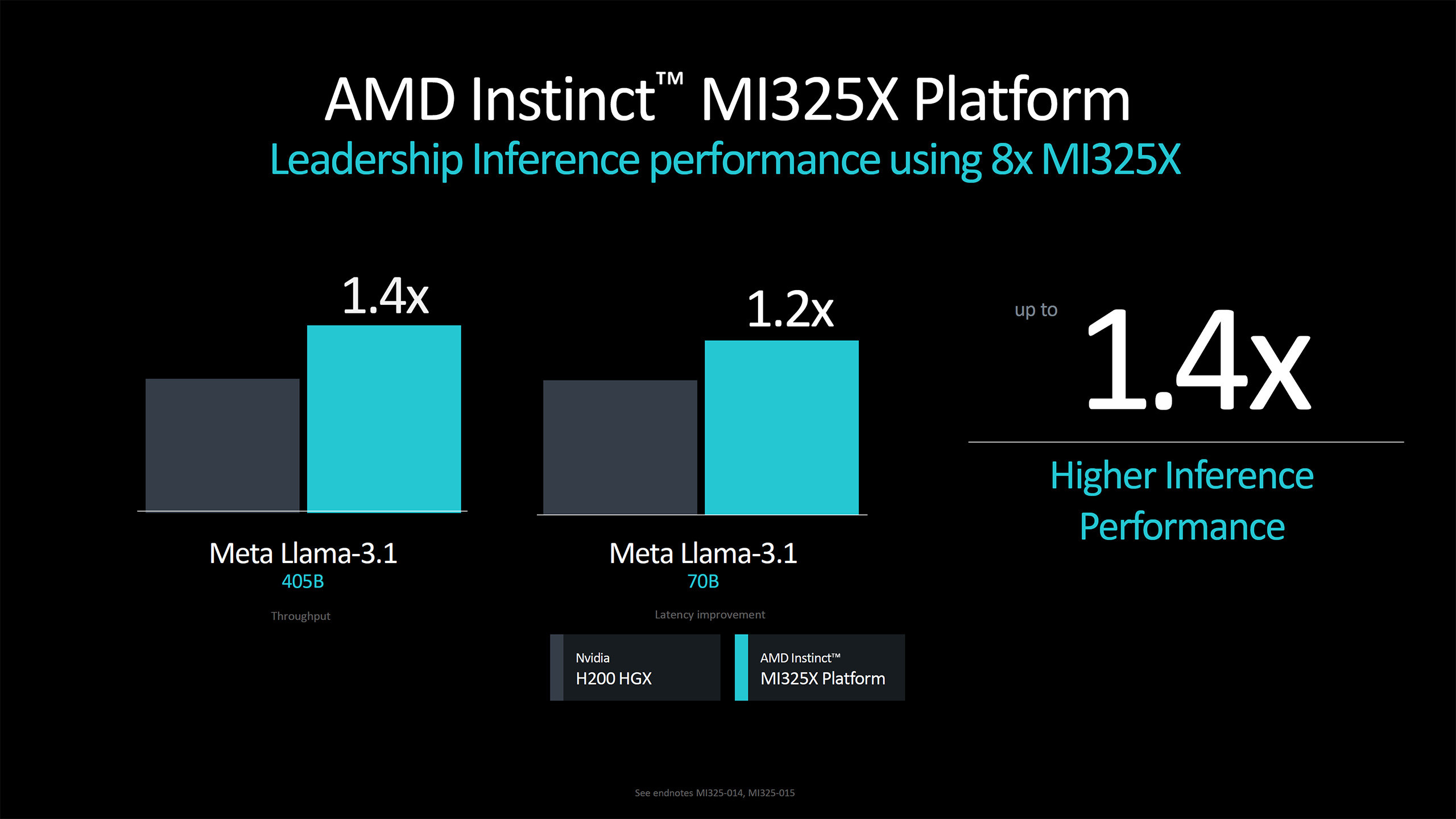

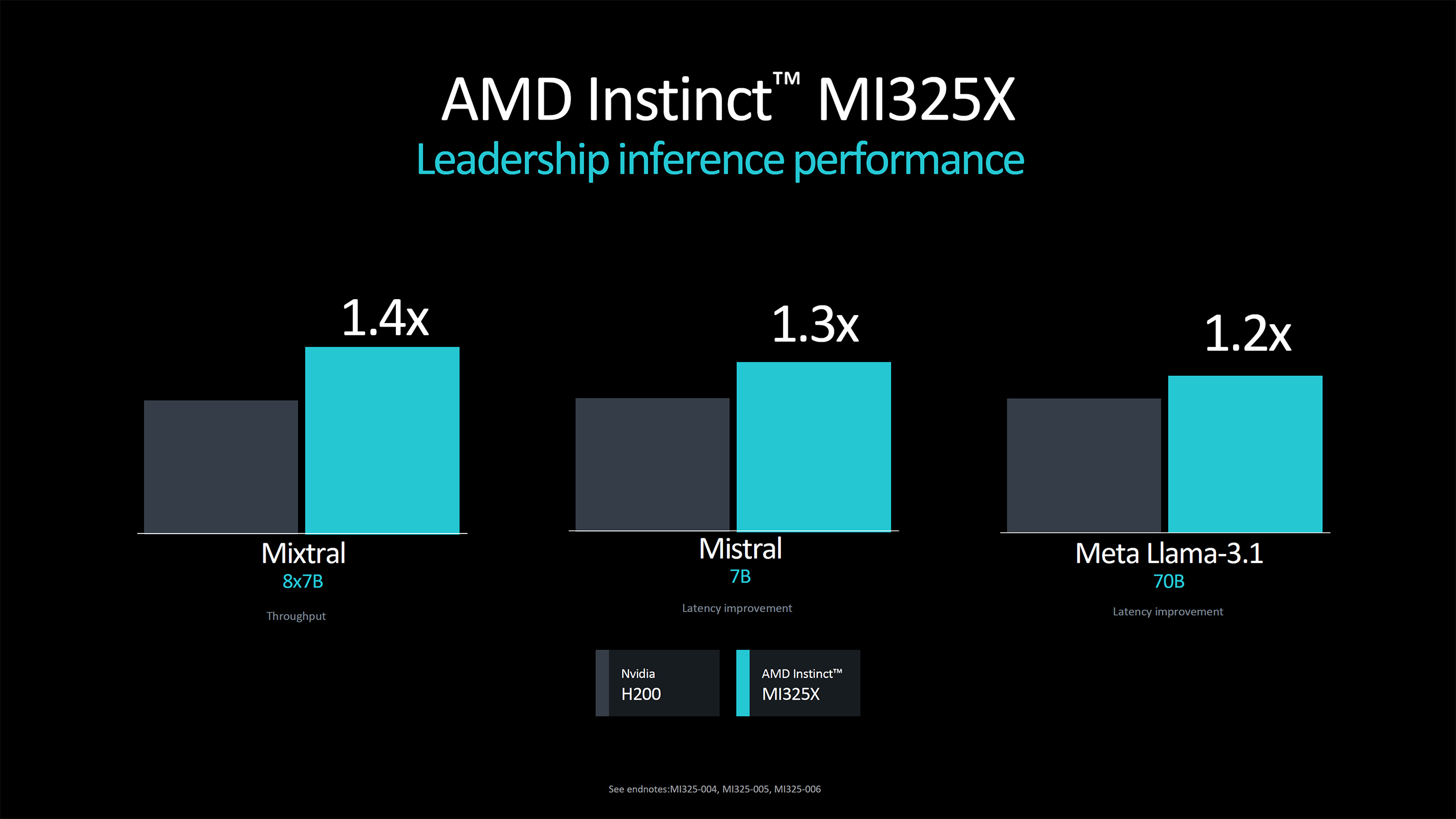

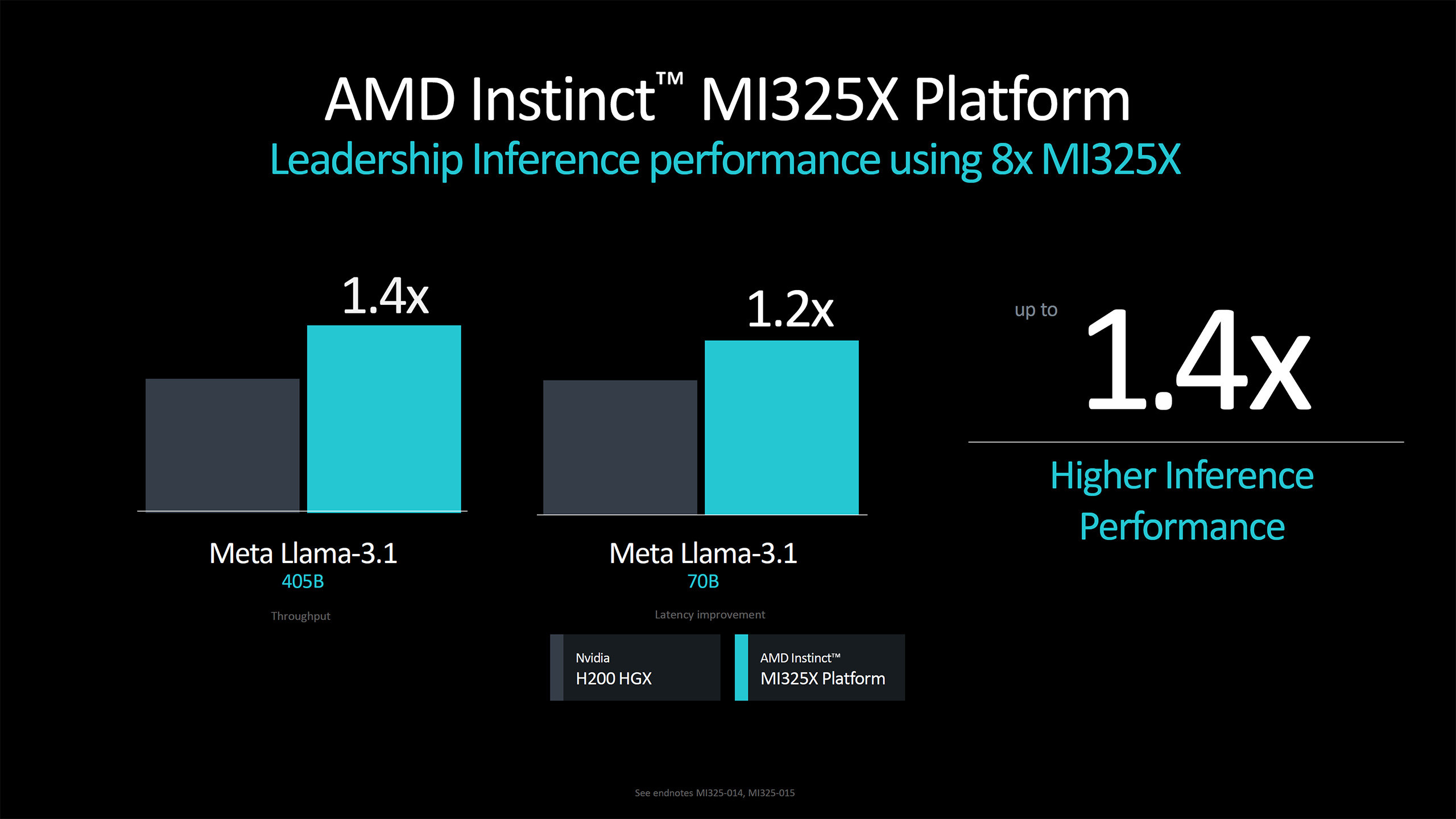

AMD showed a few performance numbers comparing MI325X with Nvidia H200, with MI325X holding a slight lead in single-GPU performance and reaching parity on an eight-GPU platform. As we mentioned earlier, scaling can be a critical factor for AI platforms, and this indicates Nvidia still has some advantages in that area.

AMD didn't get into the pricing of its AI accelerators, but when questioned, it said the goal is to provide a TCO (Total Cost of Ownership) advantage. That can come from offering better performance for the same price, a lower price for the same performance, or anywhere along that spectrum. Or, as AMD put it when asked about pricing: "We are responsible business people and we will make responsible decisions."
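One way to picture that spectrum is as relative performance per dollar. The sketch below is a deliberate simplification that treats purchase price as the entire cost, ignoring power, cooling, facilities, and software, all of which feed into real TCO. The scenarios are hypothetical illustrations, not AMD or Nvidia figures.

```python
def value_ratio(perf_ratio: float, price_ratio: float) -> float:
    """Relative performance per dollar of product A versus product B.

    perf_ratio:  A's performance divided by B's.
    price_ratio: A's price divided by B's.
    A result above 1.0 means A delivers more performance per dollar.
    """
    if price_ratio <= 0:
        raise ValueError("price_ratio must be positive")
    return perf_ratio / price_ratio


# Hypothetical points along the price/performance spectrum.
scenarios = {
    "10% faster, same price": (1.10, 1.00),
    "same speed, 10% cheaper": (1.00, 0.90),
    "5% faster, 5% cheaper": (1.05, 0.95),
}
for label, (perf, price) in scenarios.items():
    print(f"{label}: {value_ratio(perf, price):.3f}x perf per dollar")
```

Each scenario lands at roughly the same value advantage, which is the point: a vendor can hit a given performance-per-dollar target with many combinations of speed and price.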

It remains to be seen how AMD's latest parts stack up against Nvidia's H100 and H200 in a variety of workloads, not to mention the upcoming Blackwell B200 family. It's clear that AI has become a major financial boon for Nvidia and AMD lately, and until that changes, we can expect to see a rapid pace of development and improvement for the data center.

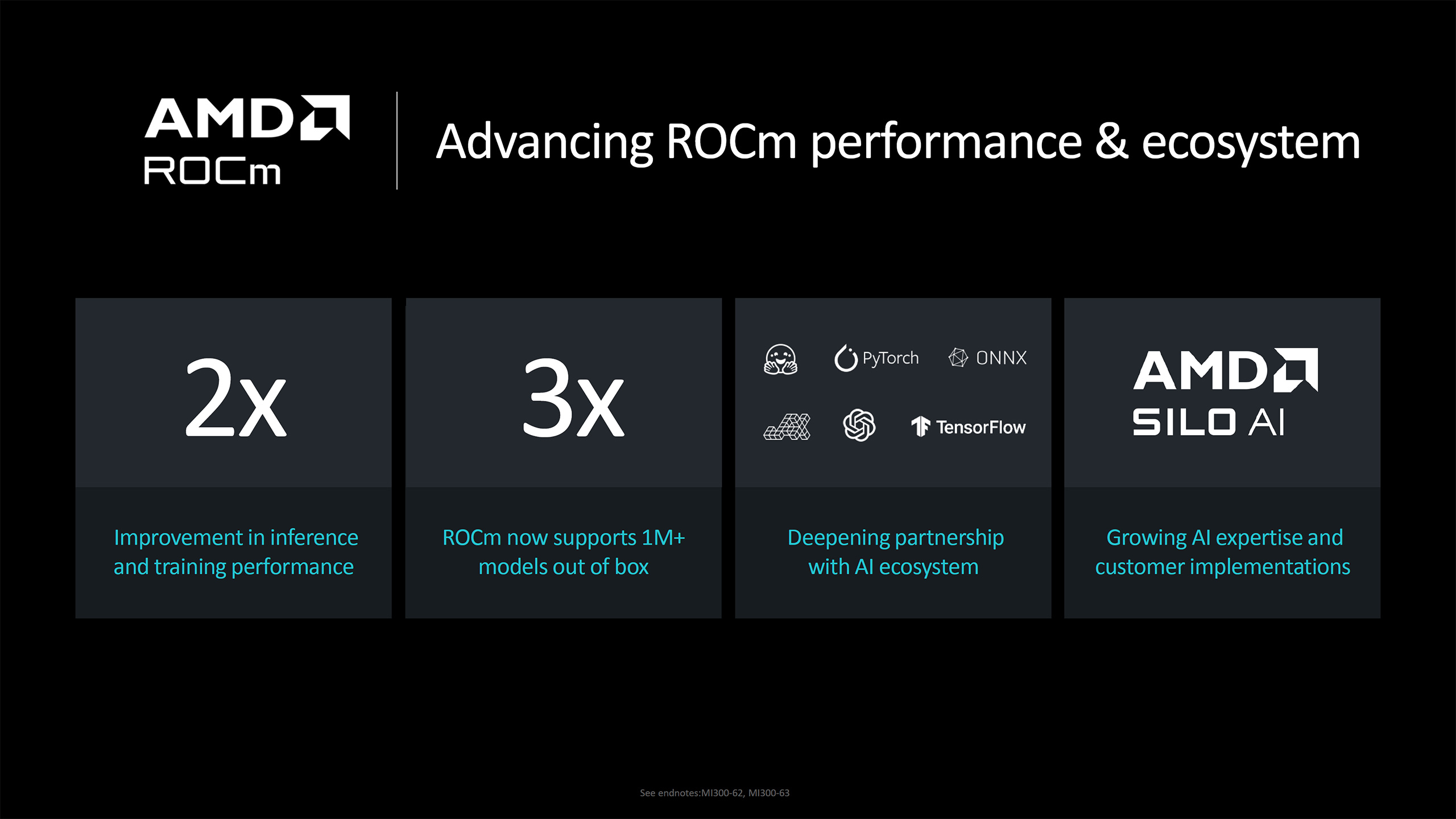

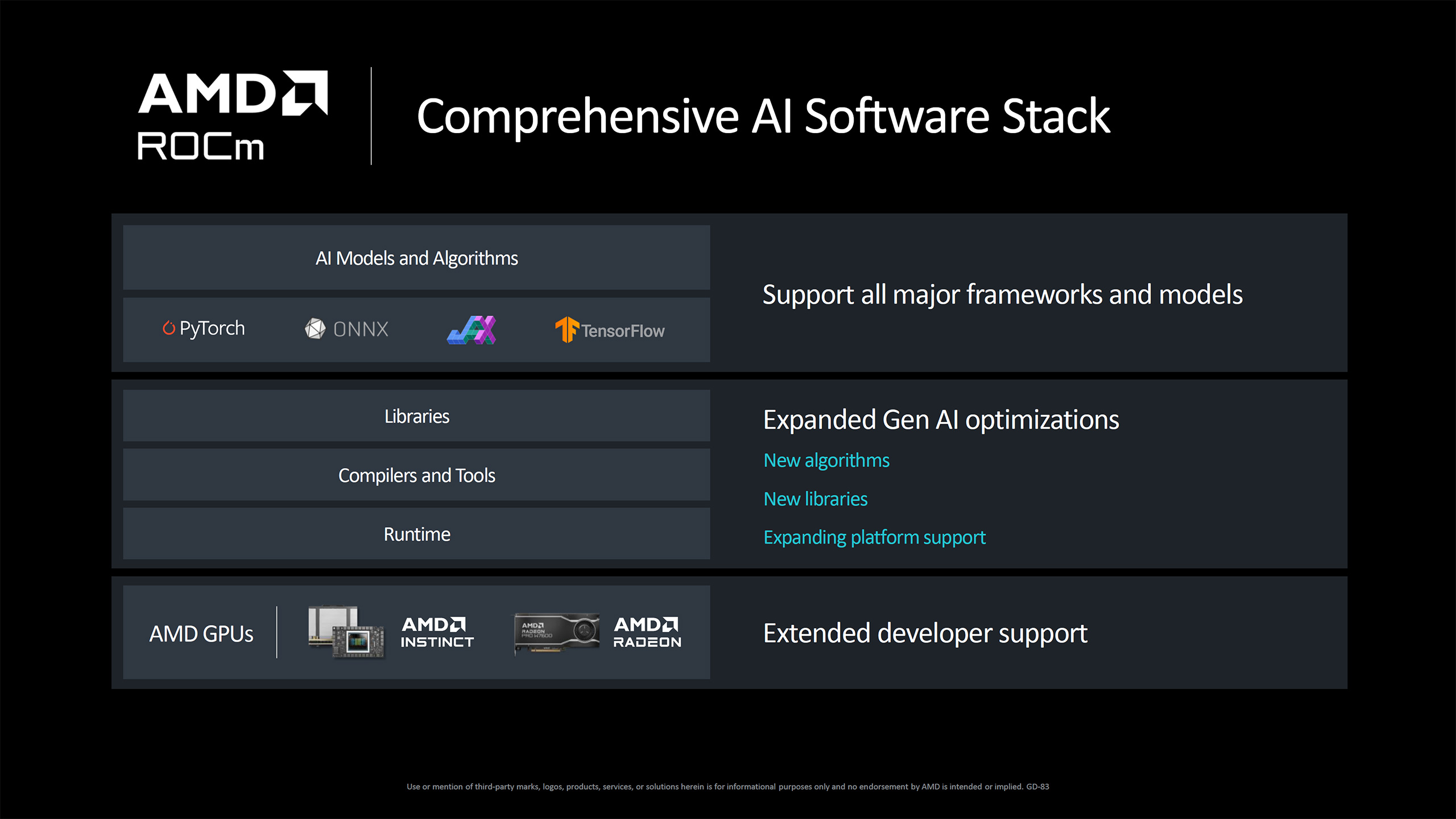

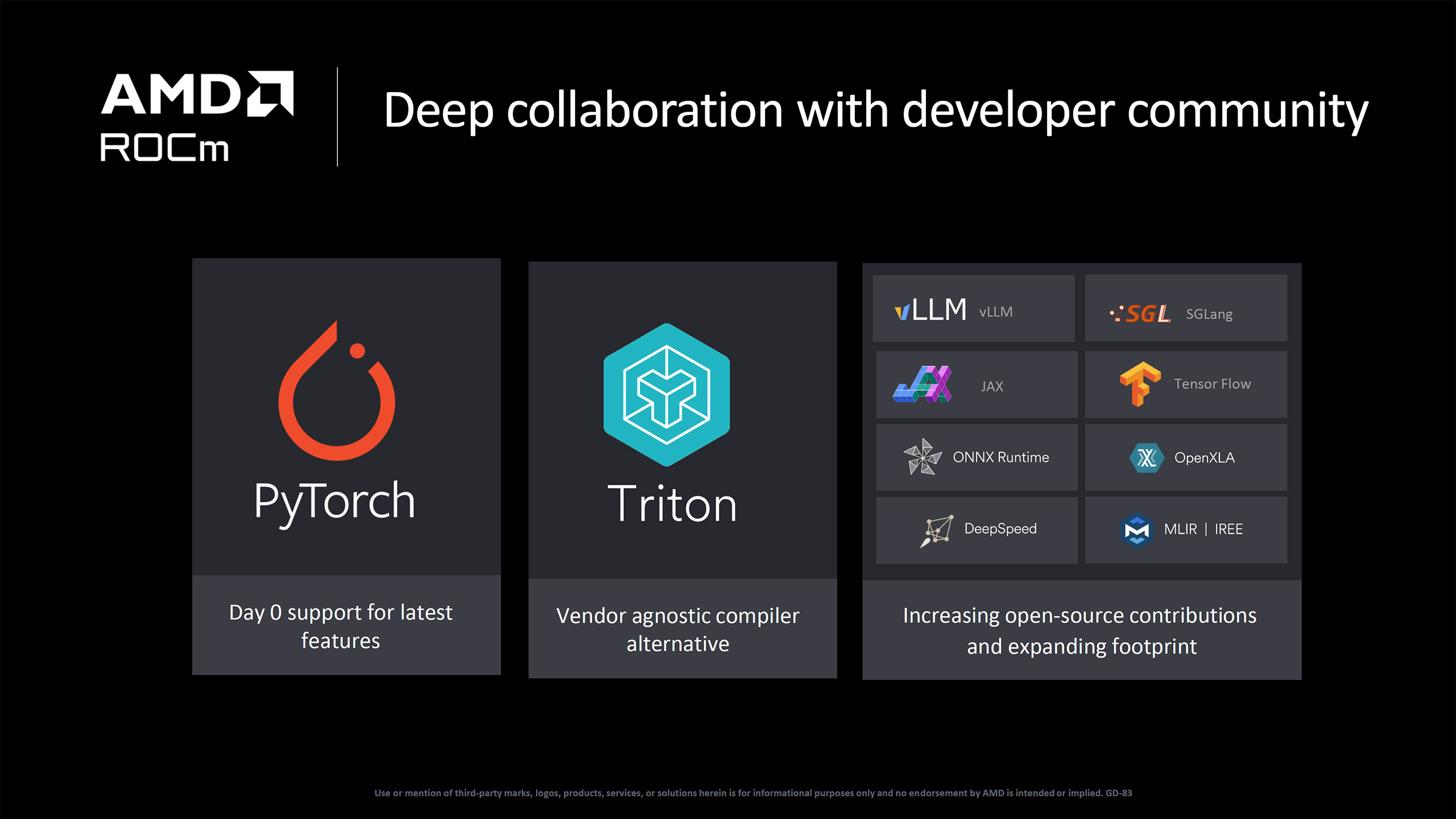

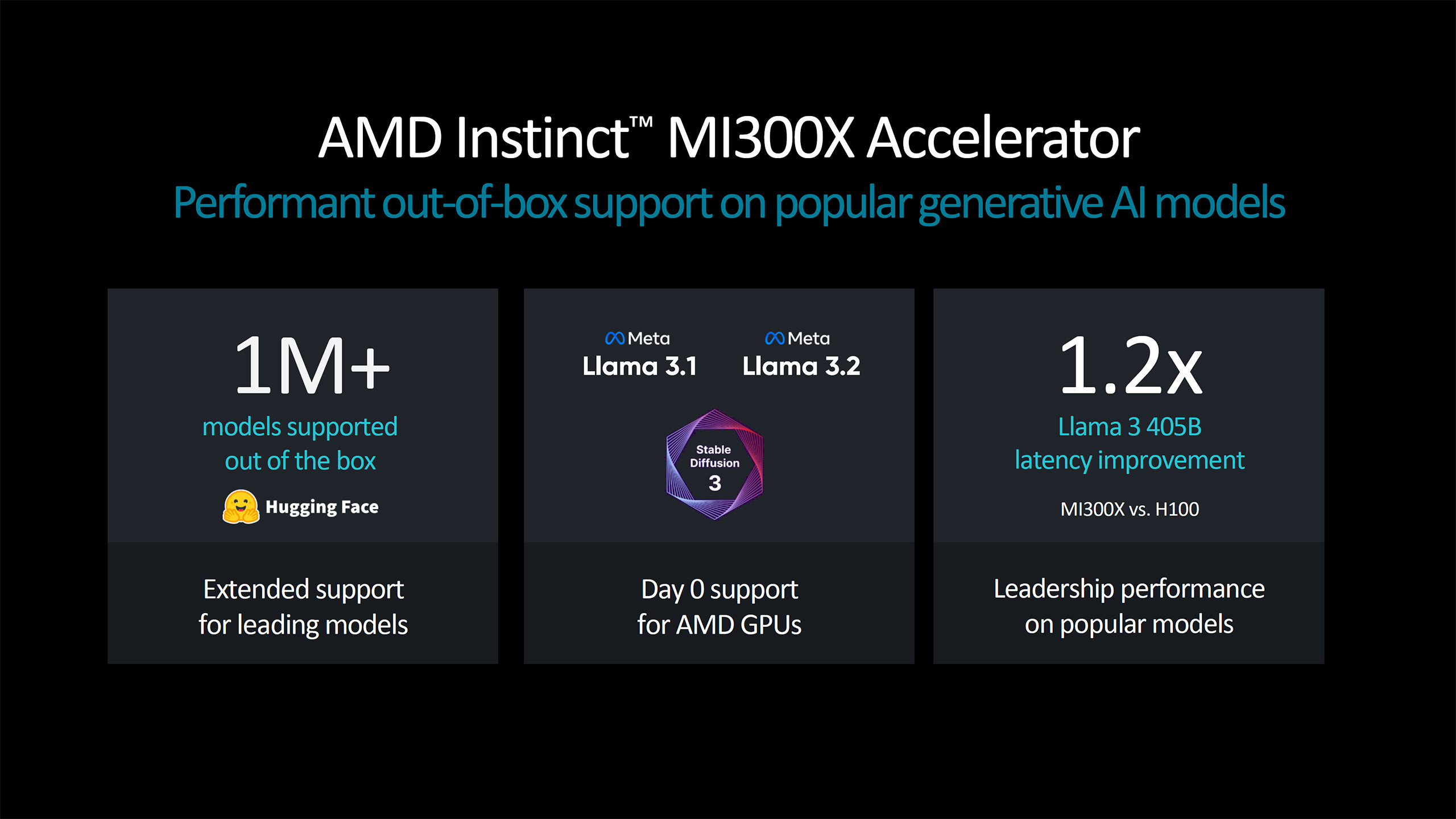

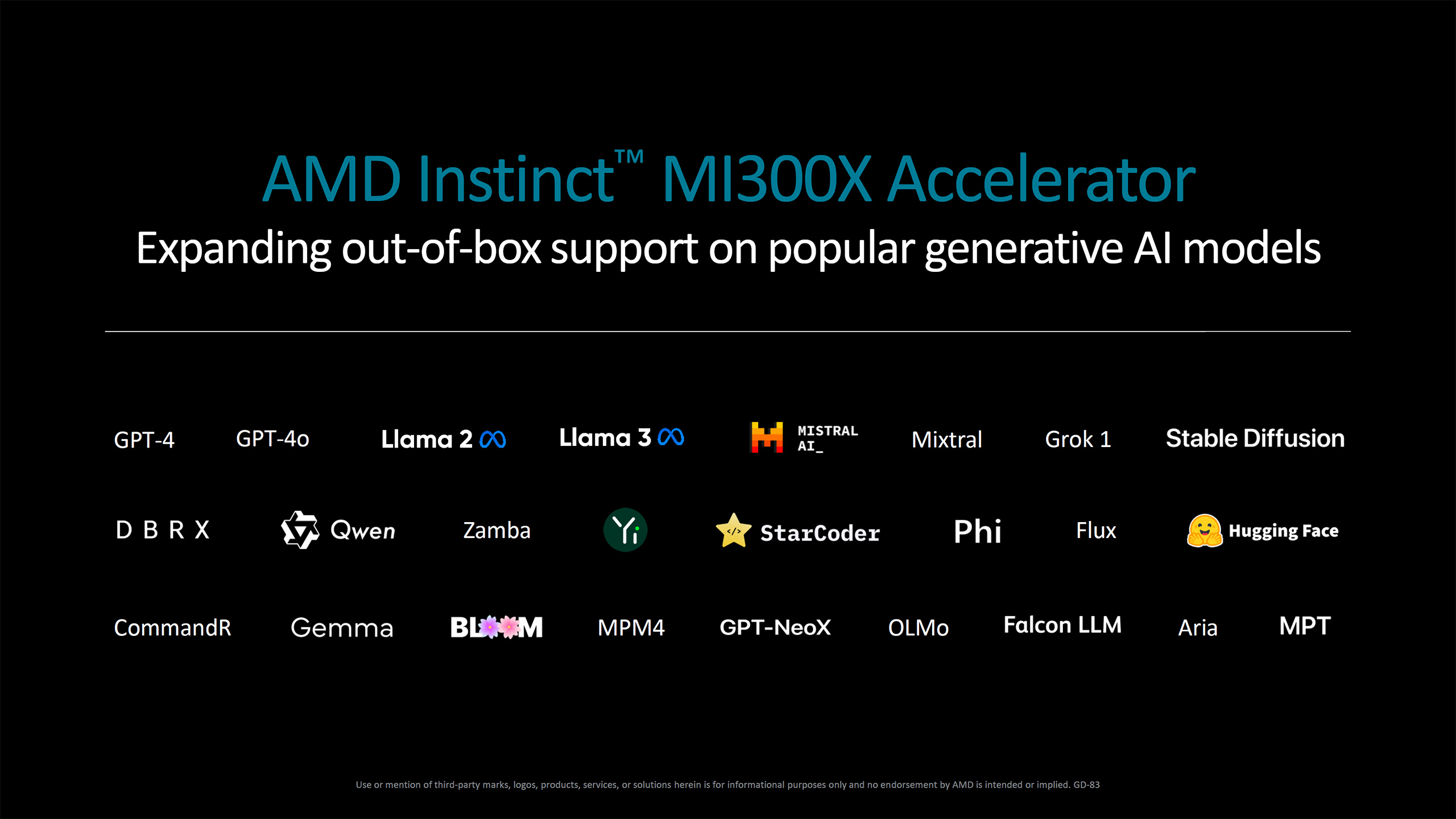

The full slide deck from the presentation can be found below, with most of the remaining slides providing background information on the AI accelerator market and AMD's partners.