While Nvidia's GeForce RTX 4070 hasn't launched yet, there have been plenty of leaks about it, including the likelihood that it will come with 12GB of GDDR6X VRAM. But with some of the newest games chewing through memory (especially at 4K), AMD is taking the opportunity ahead of the 4070's release to poke at Nvidia and highlight the more generous amounts of VRAM it offers.

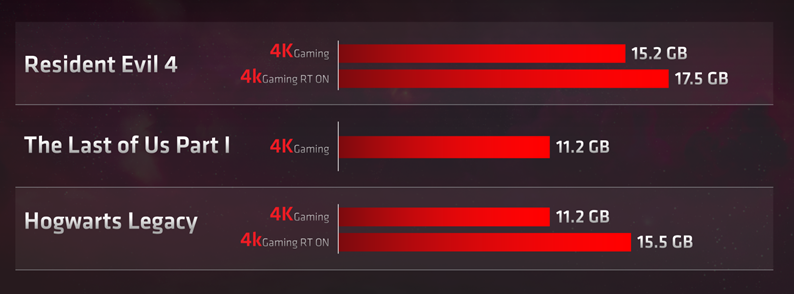

In a blog post titled "Are YOU an Enthusiast?" (caps theirs), AMD product marketing manager Matthew Hummel highlights the extreme amounts of VRAM used at maximum settings at 4K by games like the Resident Evil 4 remake, the recent (terrible) port of The Last of Us Part I, and Hogwarts Legacy. The short version: to play each of these games at 1080p, AMD recommends its RX 6600-series with 8GB of VRAM; at 1440p, its RX 6700-series with 12GB; and at 4K, its 16GB RX 6800/69xx-series. (That leaves out its RX 7900 XT with 20GB of VRAM and the RX 7900 XTX with 24GB.)

The not-so-subtle subtext? You need more than 12GB of VRAM to really game at 4K, especially with ray tracing. Perhaps even less subtle? The number of times AMD mentions that it offers GPUs with 16GB of VRAM starting at $499 (three, if you weren't counting).

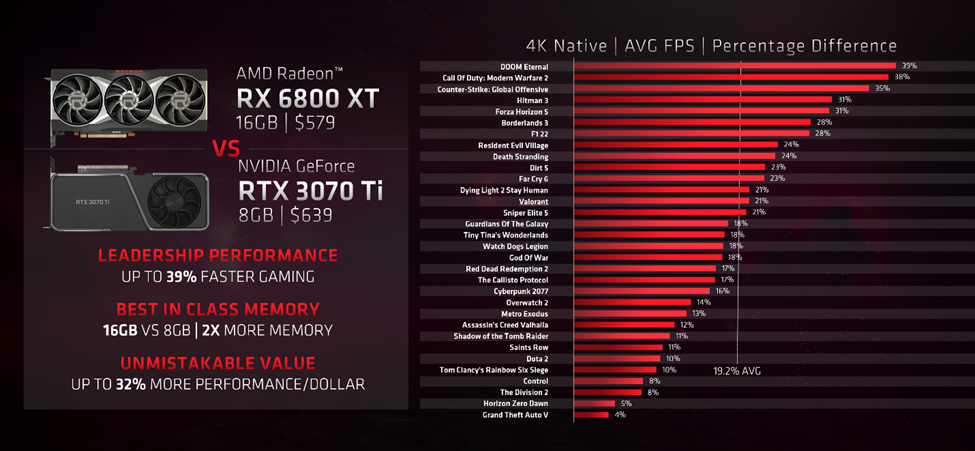

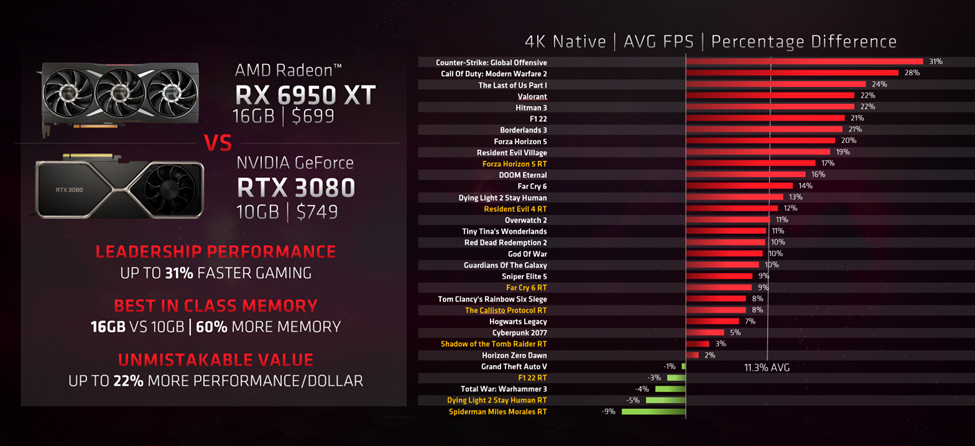

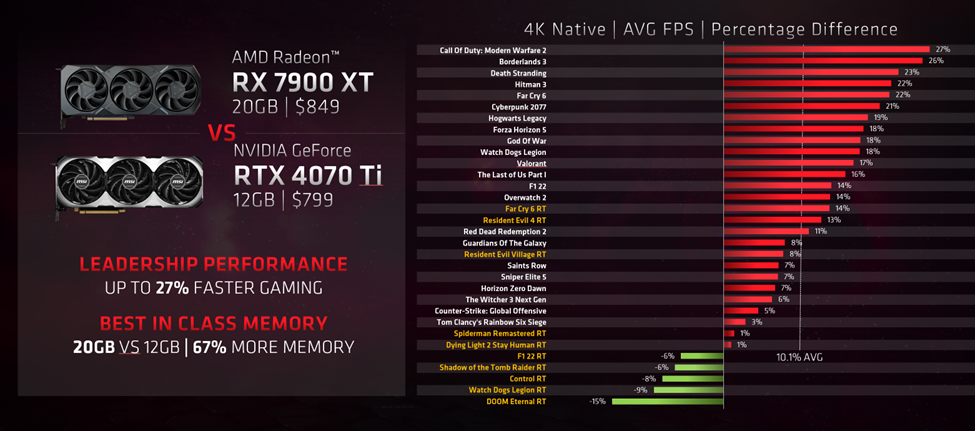

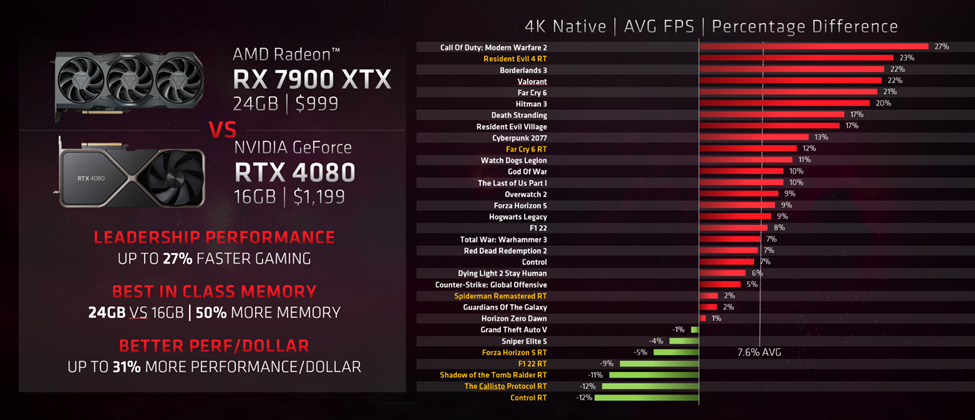

In an attempt to hammer its point home, AMD supplied some of its own benchmark comparisons against Nvidia cards, pitting its RX 6800 XT against Nvidia's RTX 3070 Ti, the RX 6950 XT against the RTX 3080, the RX 7900 XT versus the RTX 4070 Ti, and the RX 7900 XTX versus the RTX 4080. Each matchup covered 32 "select games" at 4K resolution. Unsurprisingly for AMD-published benchmarks, the Radeon GPUs won most of the time. You can see these in the gallery below:

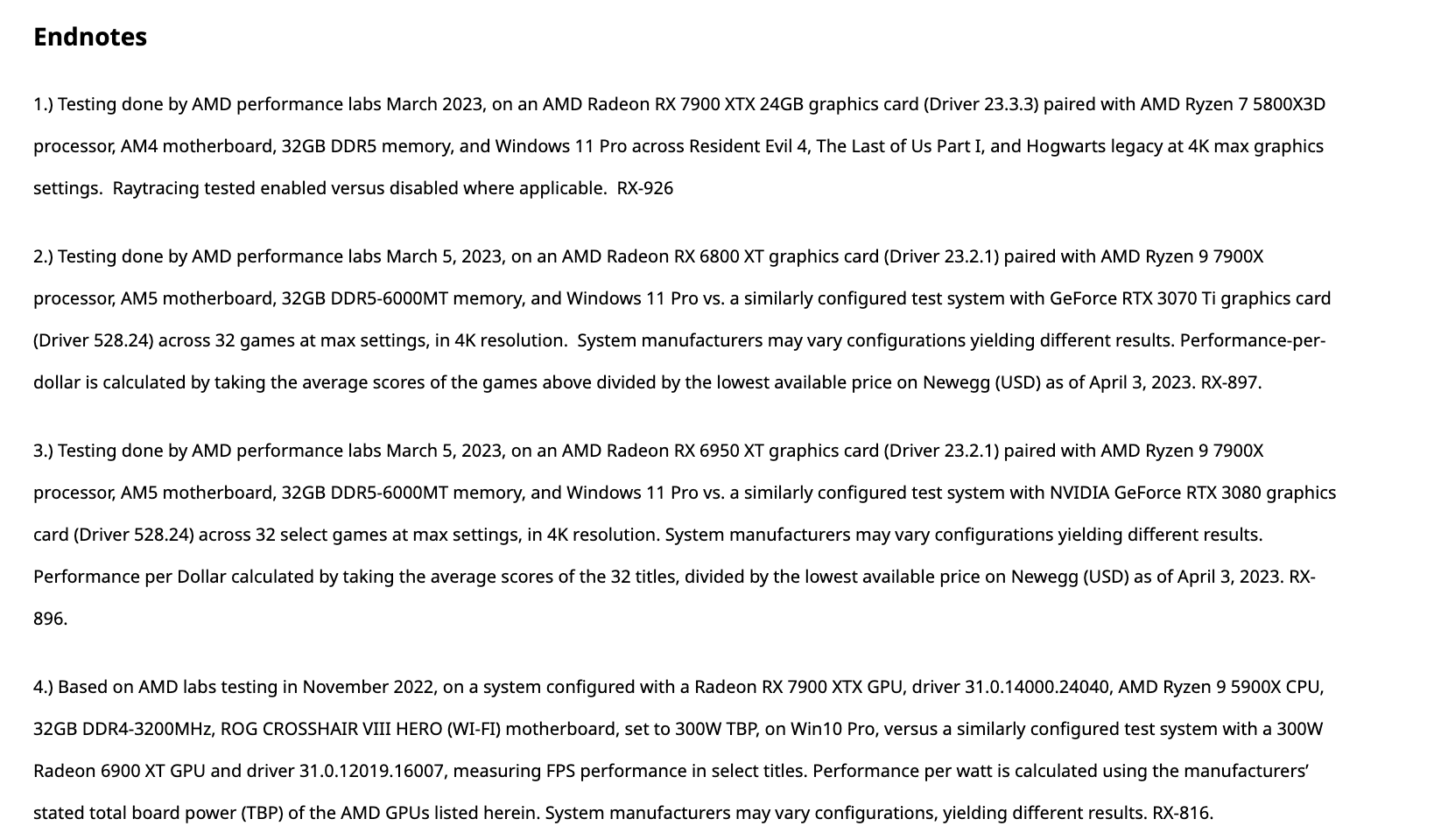

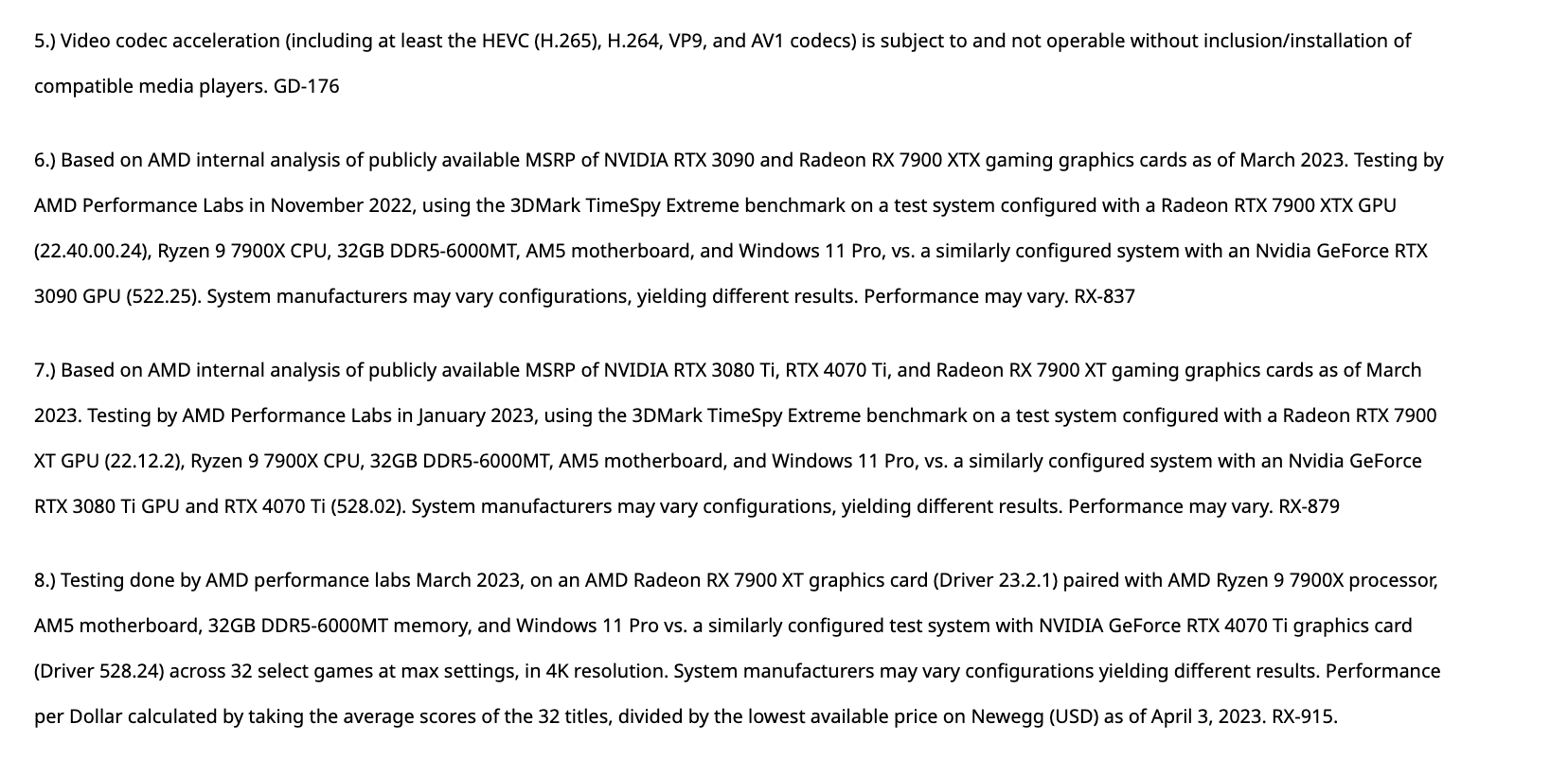

Like any benchmark-heavy piece of marketing, this one comes with a ton of testing notes. We've reproduced those in the gallery below. Notable details include differing CPUs, motherboards (including some with total board power adjusted), changes in RAM speeds, and more, depending on the claim. Most of the gaming tests use the same specs, but other claims vary and some rely on older test data. The game lists also differ from one GPU matchup to the next, and it doesn't appear that these tests used FSR or DLSS, for those interested in those upscaling technologies.

We won't know for sure how Nvidia's RTX 4070 performs against any of these GPUs until reviews go live, but AMD is making sure you know it's more generous with VRAM (though it doesn't seem to distinguish between the GDDR6 it uses and Nvidia's GDDR6X).

It's also worth pointing out that the available VRAM capacities are directly tied to the width of the memory bus (or the number of memory channels, if you prefer). Each GDDR6 or GDDR6X chip connects over a 32-bit interface, so a 192-bit bus hosts six chips. Much like AMD's Navi 22, which maxes out at a 192-bit, six-channel interface (see the RX 6700 XT and RX 6750 XT), Nvidia's AD104 (RTX 4070 Ti and the upcoming RTX 4070) supports up to a 192-bit bus. With 2GB chips, that means Nvidia can only go up to 12GB. Doubling that to 24GB is also possible by running the memory in "clamshell" mode, with chips on both sides of the PCB, but that's an expensive approach generally reserved for halo products (e.g., the RTX 3090) and professional GPUs (e.g., the RTX 6000 Ada Generation).
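The capacity math is simple enough to sketch in a few lines. The `max_vram_gb` function below is a hypothetical helper of our own, not anything AMD or Nvidia publishes, and it assumes one memory chip per 32-bit channel (two per channel in clamshell mode), which matches the cards discussed here but isn't a universal rule.

```python
# Assumption: one GDDR6/GDDR6X chip per 32-bit channel in a normal layout.
# In clamshell mode each chip runs at half width, so two chips share a channel.
CHANNEL_WIDTH_BITS = 32


def max_vram_gb(bus_width_bits: int, chip_density_gb: int = 2, clamshell: bool = False) -> int:
    """Hypothetical helper: VRAM capacity implied by bus width and chip density.

    bus_width_bits:  total memory interface width, e.g. 192 for AD104 or Navi 22
    chip_density_gb: capacity of each memory chip in GB (2GB chips by default)
    clamshell:       True if chips sit on both sides of the PCB, two per channel
    """
    if bus_width_bits % CHANNEL_WIDTH_BITS != 0:
        raise ValueError("bus width must be a multiple of 32 bits")
    channels = bus_width_bits // CHANNEL_WIDTH_BITS
    chips_per_channel = 2 if clamshell else 1
    return channels * chips_per_channel * chip_density_gb


if __name__ == "__main__":
    print(max_vram_gb(192))                  # 6 channels x 2GB -> 12
    print(max_vram_gb(192, clamshell=True))  # 12 chips x 2GB   -> 24
    print(max_vram_gb(256))                  # 8 channels x 2GB -> 16
    print(max_vram_gb(128))                  # 4 channels x 2GB -> 8
```

The same arithmetic explains why a 256-bit card lands at 16GB and why a 128-bit card tops out at 8GB with 2GB chips unless the vendor pays for a clamshell layout.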

AMD's taunts also raise an important question: What exactly does AMD plan to do with its lower-tier RX 7000-series GPUs? It's at 20GB for the $800–$900 RX 7900 XT. 16GB on a hypothetical RX 7800 XT sounds about right... which would then put AMD at 12GB on a hypothetical RX 7700 XT. Of course, we're still worried about where Nvidia might go with the future RTX 4060 and RTX 4050, which may end up with a 128-bit memory bus. With 2GB chips, that would mean 8GB unless Nvidia goes clamshell.

There's also a lot more going on than just memory capacity, like cache sizes and other architectural features. AMD's previous-generation RX 6000-series offered 16GB on the top four SKUs (RX 6950 XT, RX 6900 XT, RX 6800 XT, and RX 6800), true, but those aren't latest-generation GPUs, and Nvidia's competing RTX 30-series cards still generally kept pace in rasterized games while offering superior ray tracing hardware, enhancements like DLSS, and stronger AI performance in workloads like Stable Diffusion.

Ultimately, memory capacity can be important, but it's only one facet of modern graphics cards. For our up-to-date thoughts on GPUs, check out our GPU hierarchy and our list of the best graphics cards for gaming.