I visited AMD's office here in Taipei, Taiwan, during Computex 2023 to have a conversation with David McAfee, the company's Corporate VP and General Manager of the Client Channel Business. I also had a chance to see AMD's Ryzen XDNA AI engine at work in a laptop demo, and McAfee discussed the steps AMD is taking to prepare the operating system and software ecosystem for the burgeoning AI use cases that will run locally on the PC, which we'll dive into further below.

After following the AMD codename-inspired hallway map you see above, I found my way to the demo room to see AMD's latest tech in action.

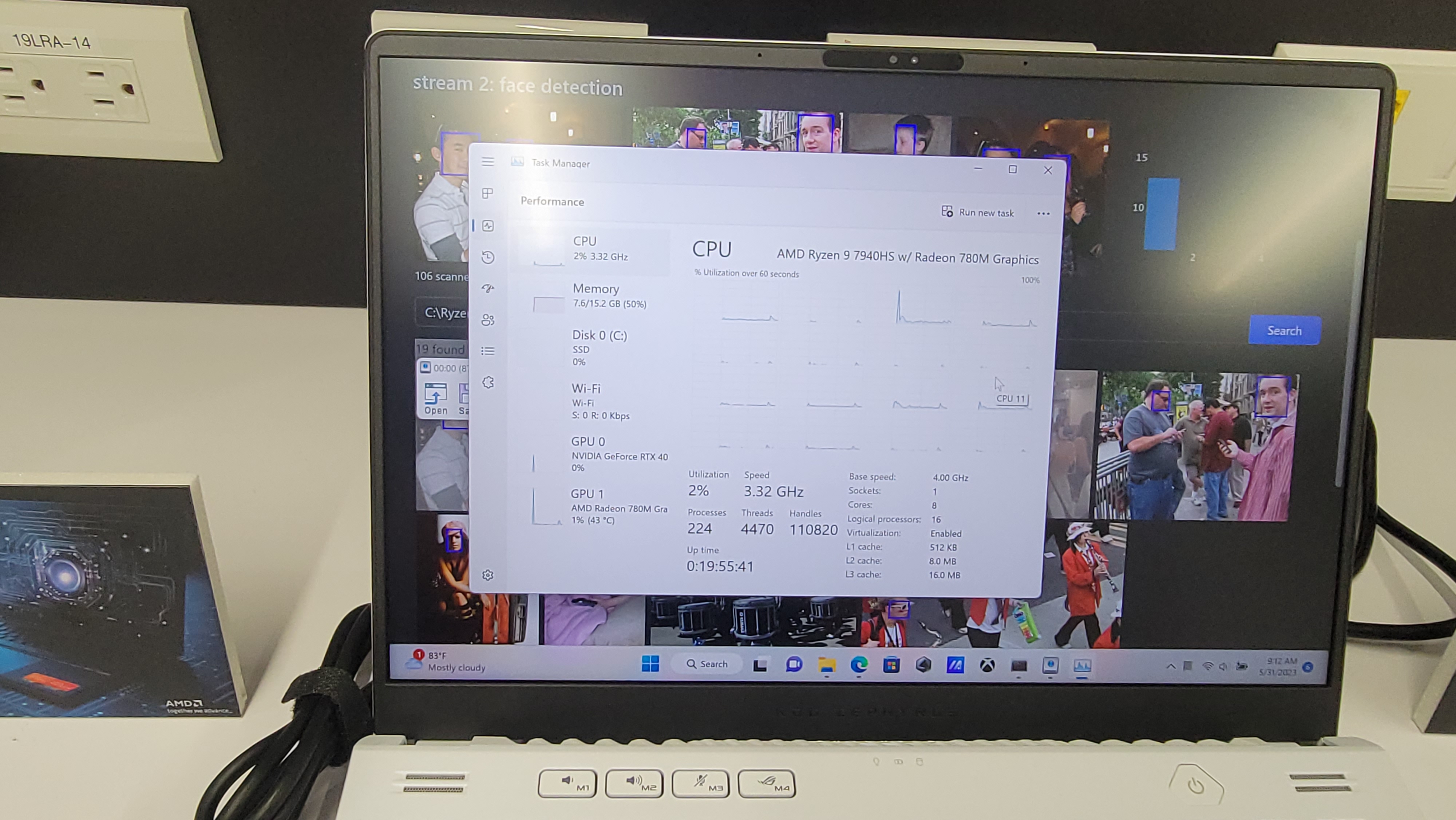

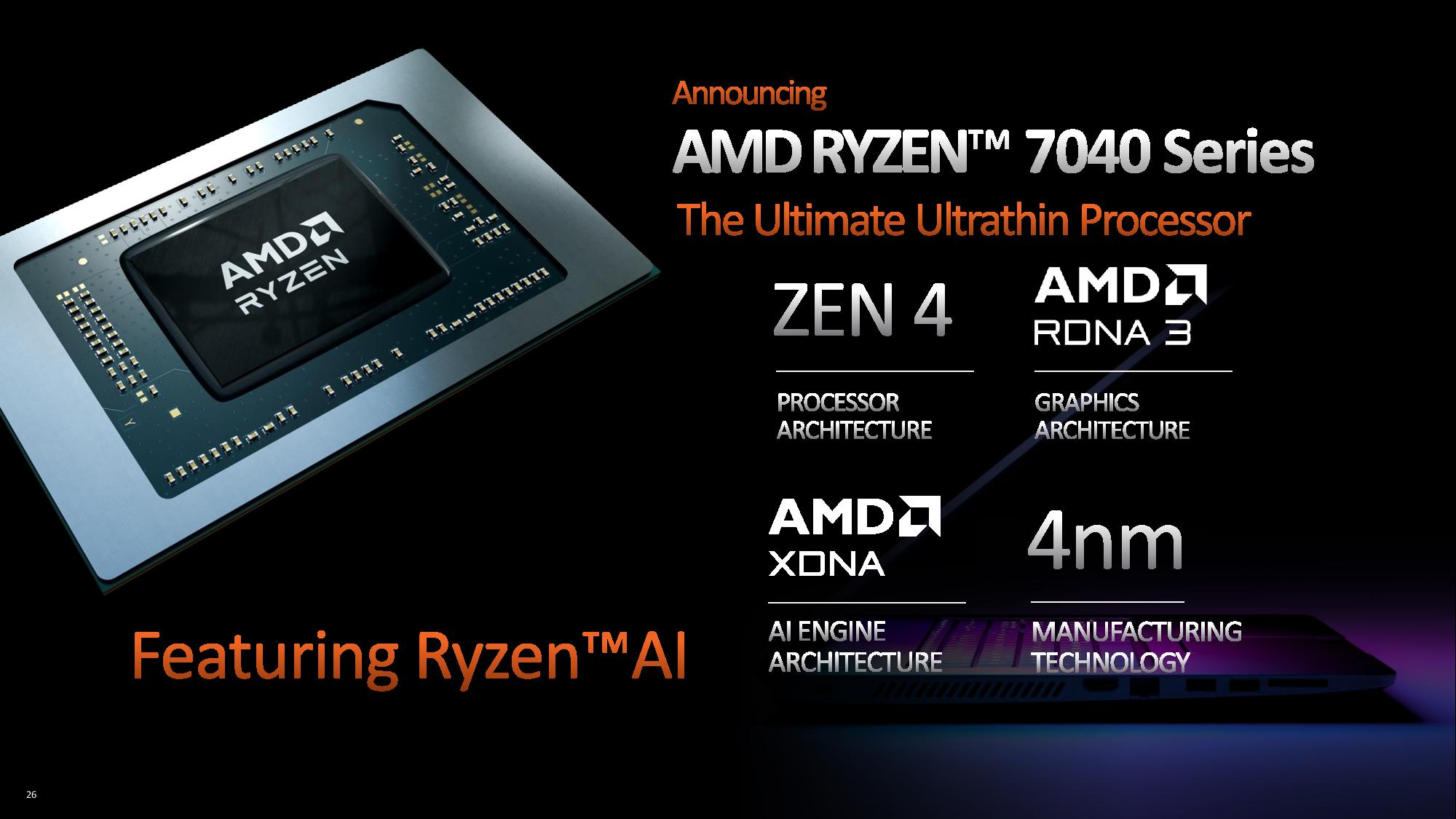

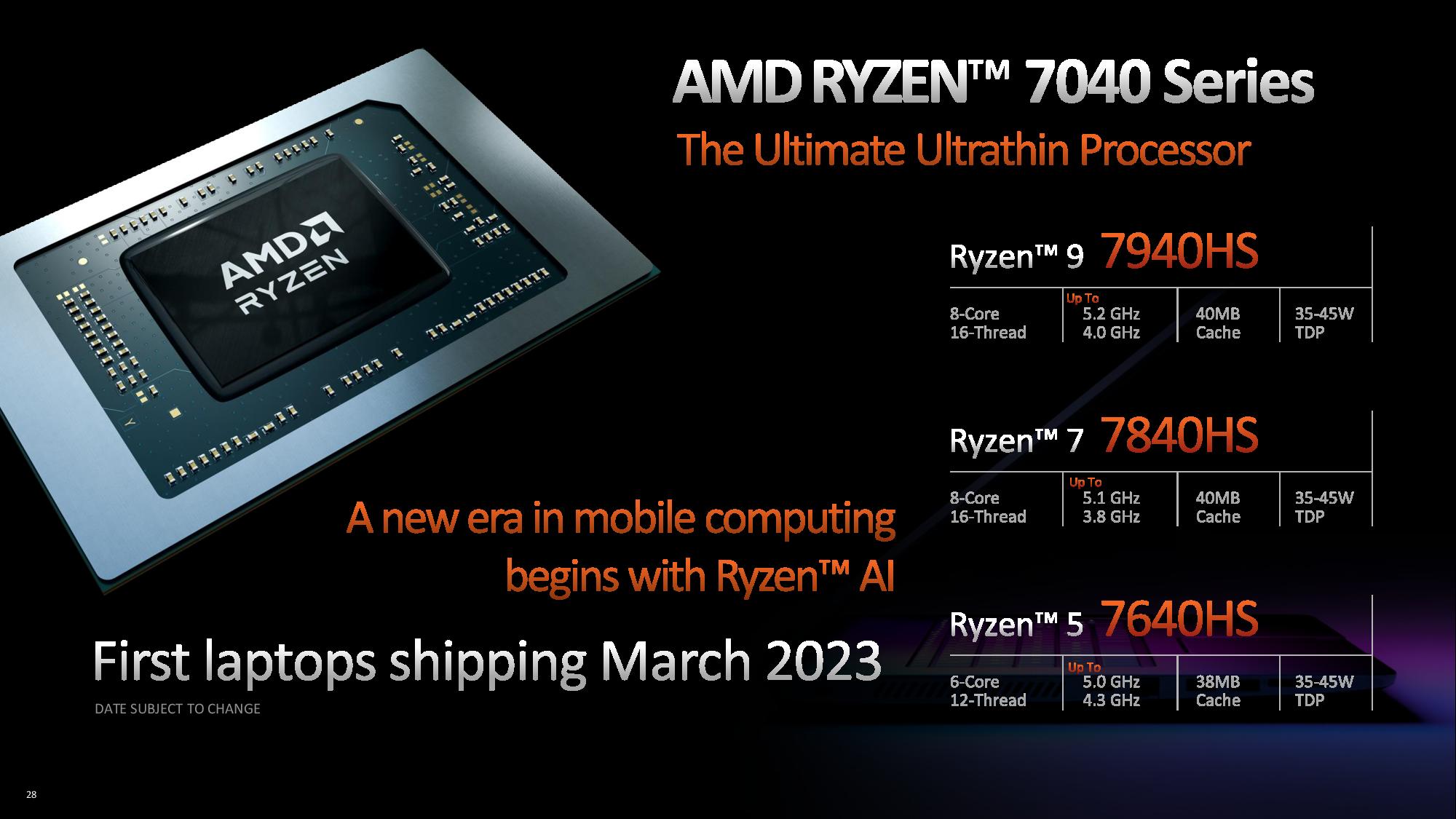

AMD's demo laptop was an Asus Strix Scar 17 that comes powered by AMD's 4nm 'Phoenix' Ryzen 9 7940HS processor paired with Radeon 780M graphics. These 35-45W chips come with the Zen 4 architecture and RDNA 3 graphics. AMD also had an Asus ROG Zephyrus G14 running the same demo.

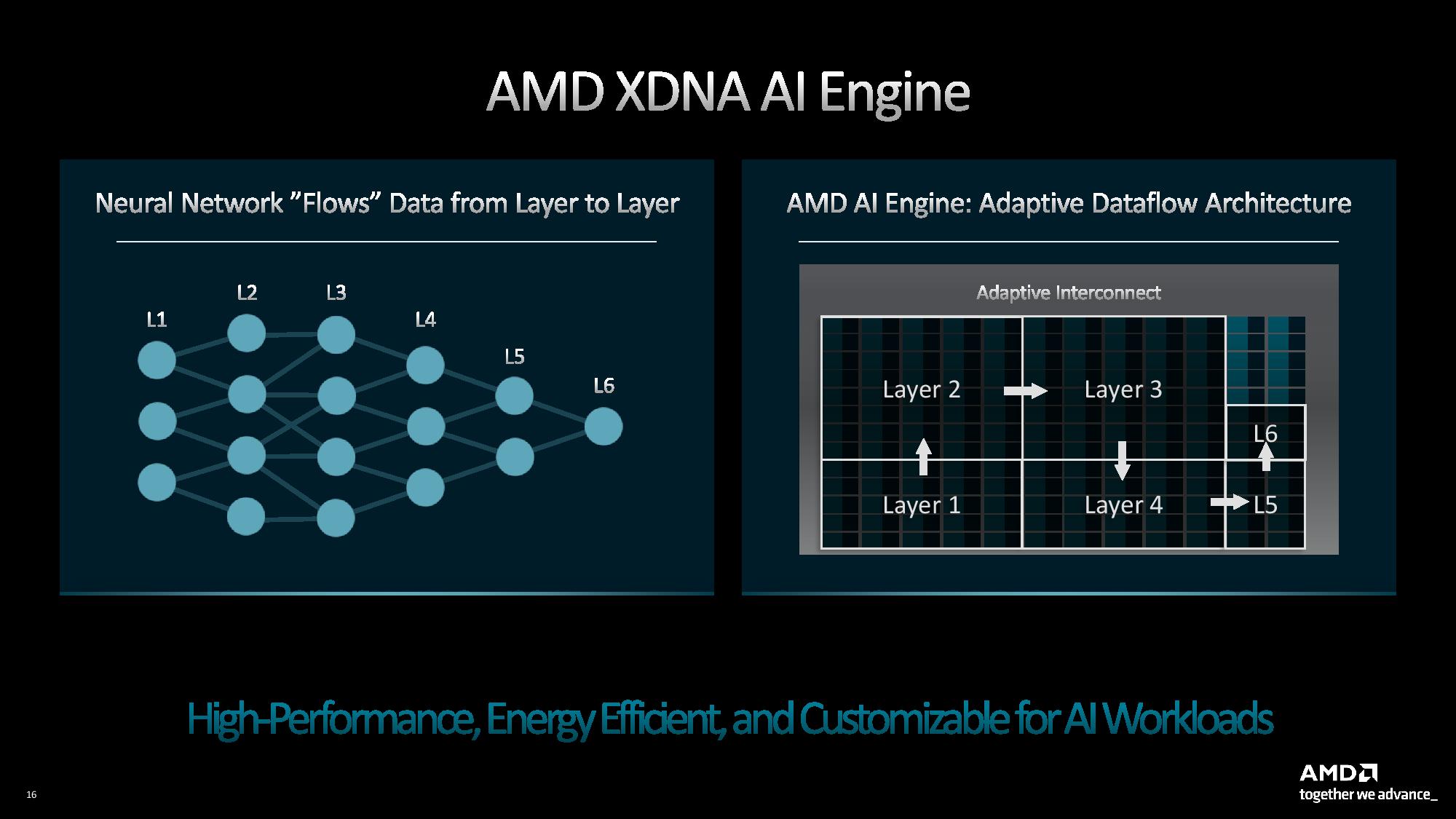

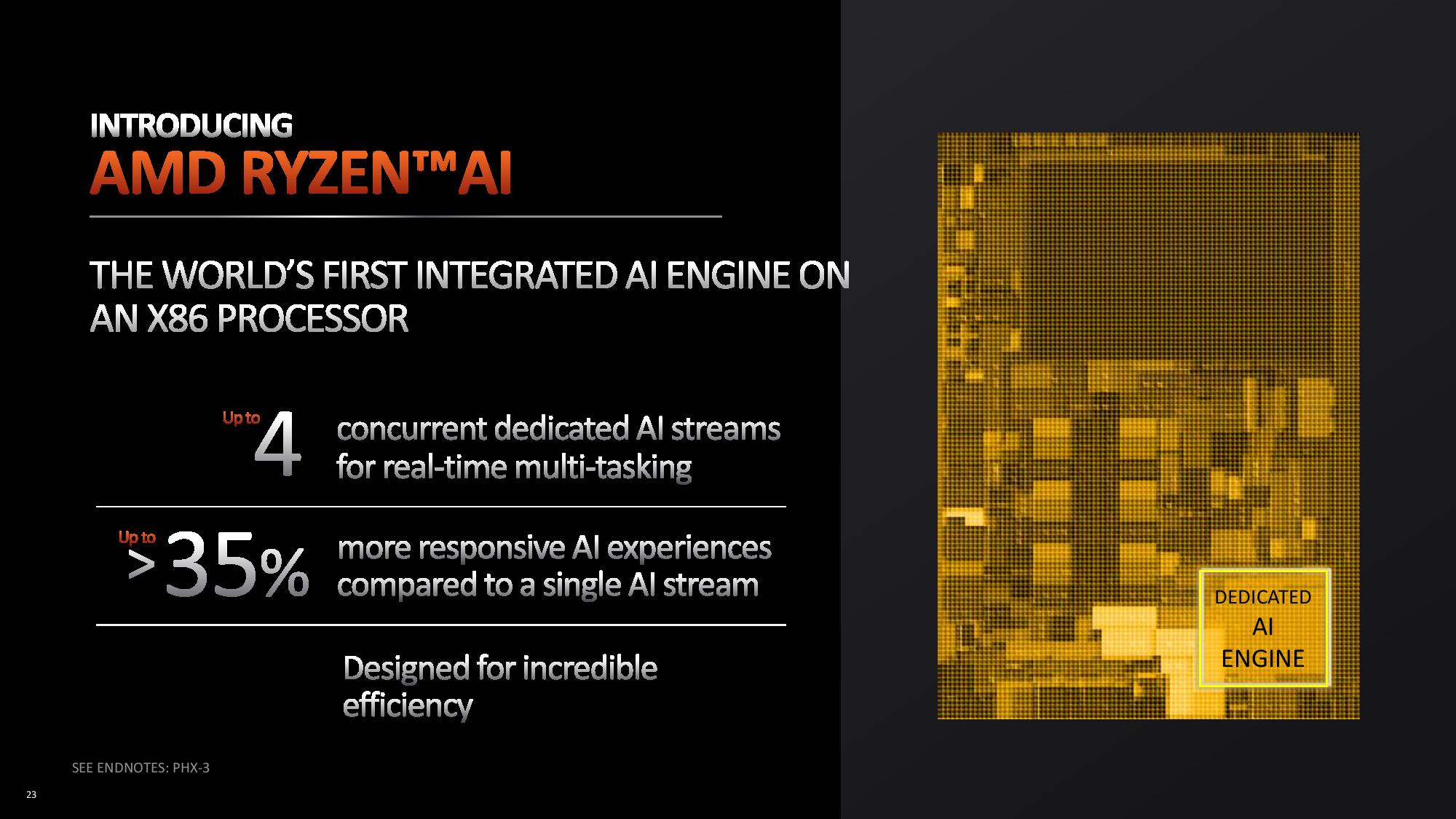

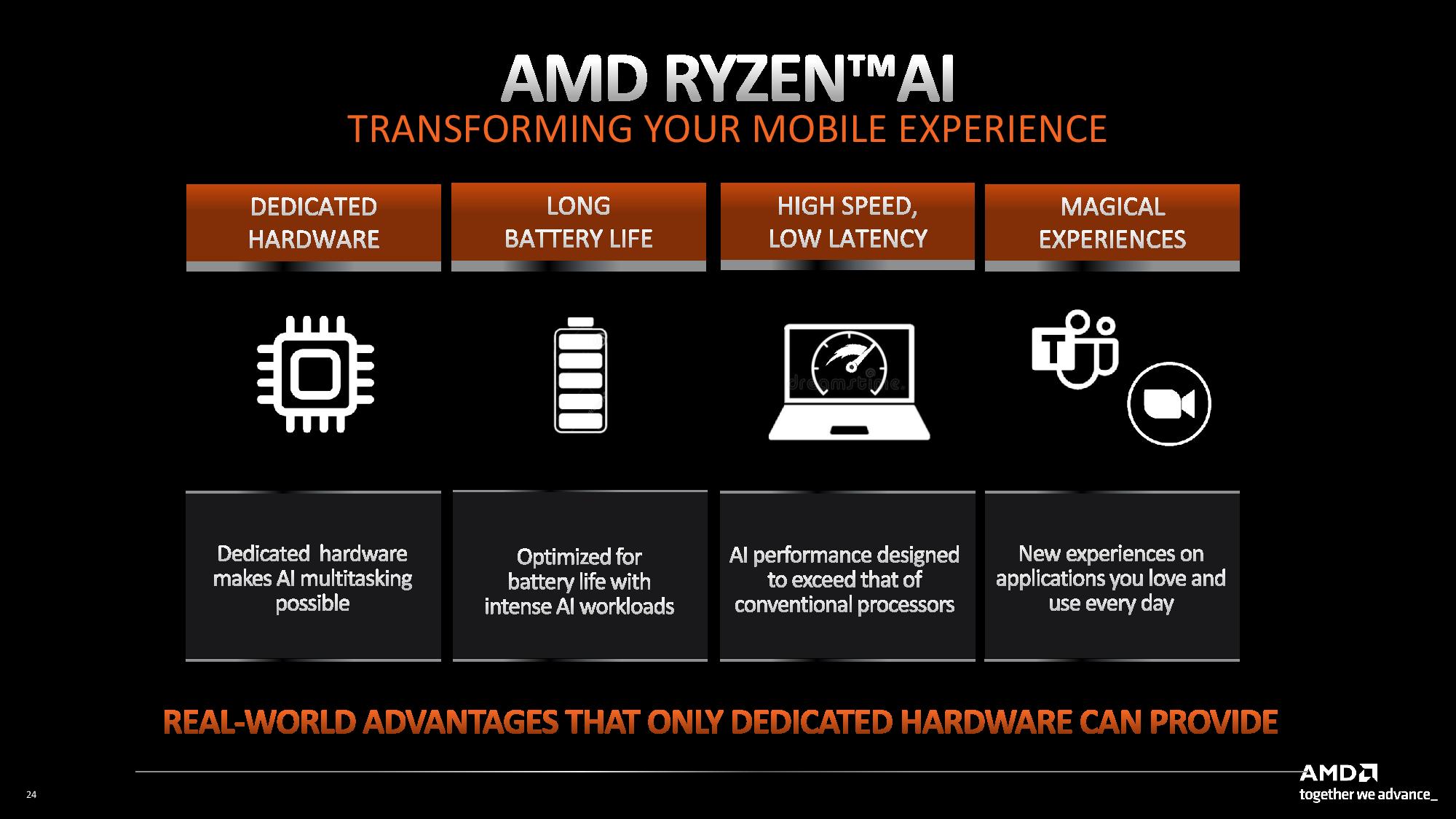

The XDNA AI engine is a dedicated accelerator that resides on-die with the CPU cores. The goal for the XDNA AI engine is to execute lower-intensity AI inference workloads, like audio, photo, and video processing, at lower power than you could achieve on a CPU or GPU while delivering faster response times than online services, thus boosting performance and saving battery power.

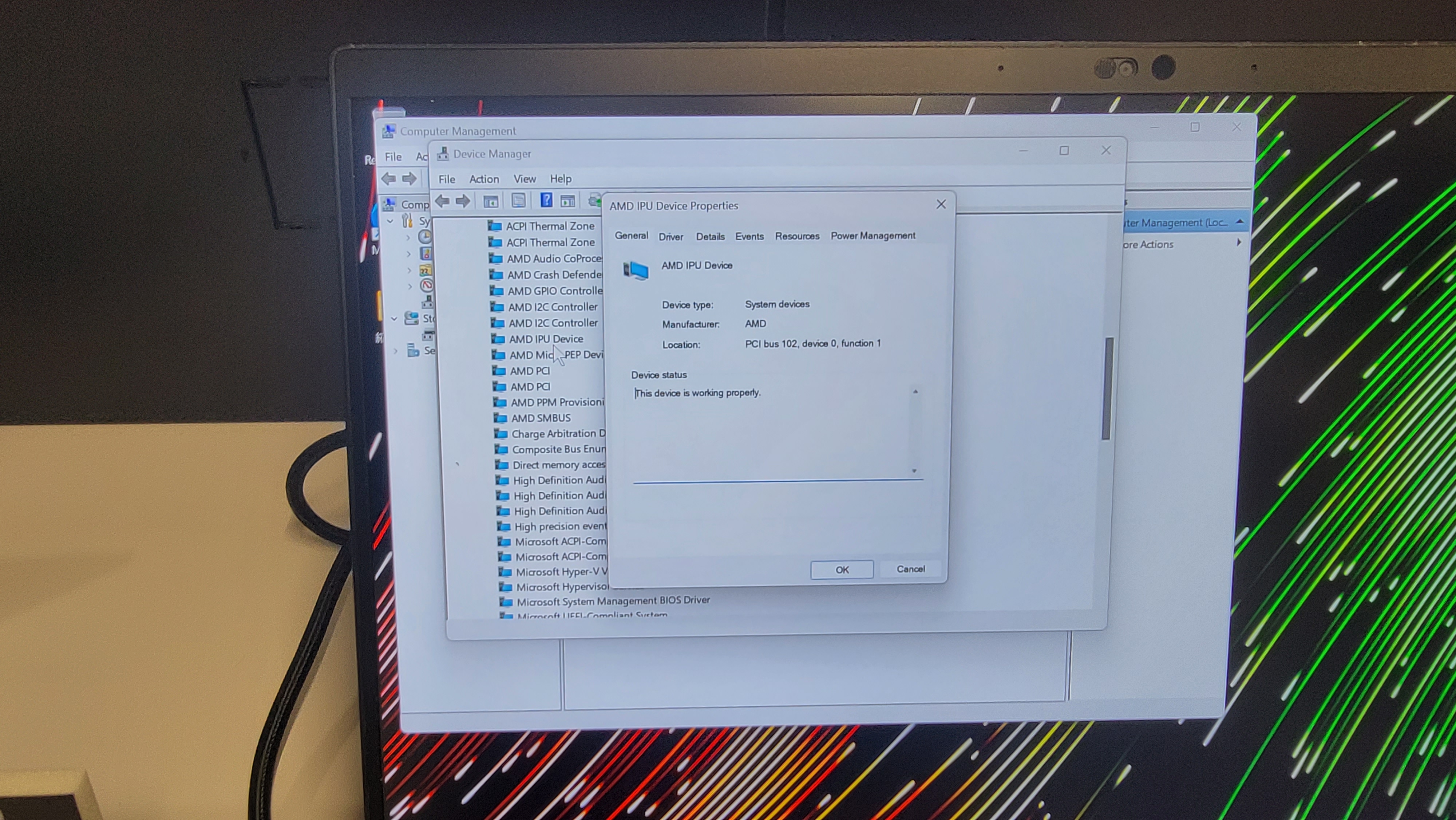

First, I popped open the Task Manager to see whether the AI engine would enumerate as visible cores with utilization metrics, but the XDNA AI engine doesn't show up there as a device. As you can see in the above album, I found the AI engine listed as the 'AMD IPU Device' in the Device Manager. However, we couldn't observe the load or other telemetry from the cores during the tests.

Here we can see the XDNA AI engine crunching away at a facial recognition workload. On the right side of the screen, we can see the latency measured for each step of the workload. The bars are impressively low, and the engine moved quickly through a series of images, but we have no context for how those figures compare to other types of solutions.

AMD's demo did have a button to test its onboard AI engine against the online Azure ONNX EP service, but the demo team told us they had encountered issues with the software, so it wasn't working. Naturally, we would expect the built-in Ryzen AI engine to have lower latency than the Azure service, and logically, that is what AMD was trying to demonstrate. Unfortunately, we were left without a substantive comparison point for the benchmark results.

However, the benchmark does show that AI is alive and breathing on AMD's Ryzen 7040 processors, and the company is also well underway in bolstering the number of applications that can leverage its AI engine.

This engine can handle up to four concurrent AI streams, though it can be rapidly reconfigured to handle varying numbers of streams. It also crunches INT8 and bfloat16 data, with these lower-precision data types offering much higher power efficiency than higher-precision formats -- at least for workloads, like AI inference, that can take advantage of them. AMD claims this engine, a progeny of its Xilinx IP, is faster than the neural engine present on Apple's M2 processors. The engine is plumbed directly into the chips' memory subsystem, so it shares a pool of coherent memory with the CPU and integrated GPU, thus eliminating costly data transfers to, again, boost power efficiency and performance.
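To give a feel for why a data type like INT8 is cheaper to work with, here is a minimal sketch of symmetric per-tensor quantization, one generic way to map floating-point values onto 8-bit integers. AMD didn't describe how models are quantized for the XDNA engine, so the scheme and the function names `quantize_int8` and `dequantize` are assumptions for illustration only.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization onto the signed range [-127, 127].

    Illustrative only: this is a generic scheme, not AMD's documented method.
    """
    max_abs = max((abs(v) for v in values), default=0.0)
    # One scale factor for the whole tensor; fall back to 1.0 for all-zero input.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the representable range.
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Map the 8-bit integers back to approximate floating-point values."""
    return [q * scale for q in quantized]
```

Each value then occupies one byte instead of four, and the worst-case rounding error is half of one scale step, which is the kind of precision loss that inference workloads can often absorb.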

AMD announced last week at Microsoft's Build conference that it had created a new set of developer tools that leverage the open-source Vitis AI Execution Provider (EP), which is upstreamed in ONNX Runtime, to ease the work required to add software support for the XDNA AI engine. McAfee explained that the Vitis AI EP serves as a sort of bare metal translation layer that allows developers to run models without having to alter the base model. That simplifies integration, and AMD's implementation will currently work with the same applications that Intel uses with its VPU inside Meteor Lake, such as those from Adobe. Also, much like Intel's approach, AMD will steer different AI inference workloads to the correct type of compute, be it the CPU, GPU, or XDNA engine, based upon the needs of the workload.
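For readers who haven't used ONNX Runtime, an application typically hands the runtime an ordered list of execution providers and lets it fall back down the list. The sketch below shows that pattern under some assumptions: the helper `pick_providers` and the preference order are hypothetical, and this is not AMD's workload-steering logic, which McAfee didn't detail. The provider names are the identifiers ONNX Runtime uses for the Vitis AI, DirectML, and CPU providers.

```python
from typing import List, Sequence

# Preference order is an assumption for illustration: AI engine first,
# then the GPU via DirectML, then the CPU as the universal fallback.
PREFERRED_PROVIDERS = (
    "VitisAIExecutionProvider",
    "DmlExecutionProvider",
    "CPUExecutionProvider",
)


def pick_providers(
    available: Sequence[str],
    preferred: Sequence[str] = PREFERRED_PROVIDERS,
) -> List[str]:
    """Return the preferred providers that are actually available, in order.

    Hypothetical helper: 'available' would normally come from
    onnxruntime.get_available_providers() on the target machine.
    """
    chosen = [name for name in preferred if name in available]
    if not chosen:
        raise RuntimeError("none of the preferred execution providers are available")
    return chosen
```

On a machine with ONNX Runtime installed, the result could be passed along as `onnxruntime.InferenceSession("model.onnx", providers=pick_providers(onnxruntime.get_available_providers()))`, where `model.onnx` is a placeholder path. The model file itself stays unchanged, which matches the integration benefit McAfee described.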

AMD isn't providing performance metrics for its AI engine yet, but McAfee noted that it's hard to quantify the advantages of an onboard AI engine with just one performance metric, like TOPS, as higher power efficiency and lower latency are both part of the multi-faceted advantages of having an AI engine. AMD will share figures in the future, though.

McAfee reiterated AMD's plans to continue to execute its XDNA AI roadmap, eventually adding the engine to other Ryzen processors. However, the software ecosystem for AI on the PC is still in its early days, and AMD will continue to explore the tradeoffs versus the real-world advantages.

Much of the advantage of having a built-in AI engine lies in power efficiency, a must in power-constrained devices like laptops. That might not be as meaningful in an unconstrained desktop PC, which can use a more powerful dedicated GPU or CPU for inference workloads without the battery life concerns.

I asked McAfee if those factors could impact AMD's decision on whether or not it would bring XDNA to desktop PCs, and he responded that it will boil down to whether or not the feature delivers enough value that it would make sense to dedicate valuable die area to the engine. AMD is still evaluating the impact, particularly as Ryzen 7040 works its way into the market.

For now, AMD isn't confirming any of its future plans, but McAfee said that while AMD is committed to the AI engine being a part of its future roadmaps, it might not come to all products. On that note, he said there could conceivably be other options for different types of chips, like those for desktop PCs, that leverage AMD's chiplet strategy. Other options, like add-in cards, are also possible solutions.

One thing is for sure: We'll continue to see the scalable integrated XDNA AI engine make an appearance in many of AMD's products in the future. Hopefully, next time we'll see a better demo, too.