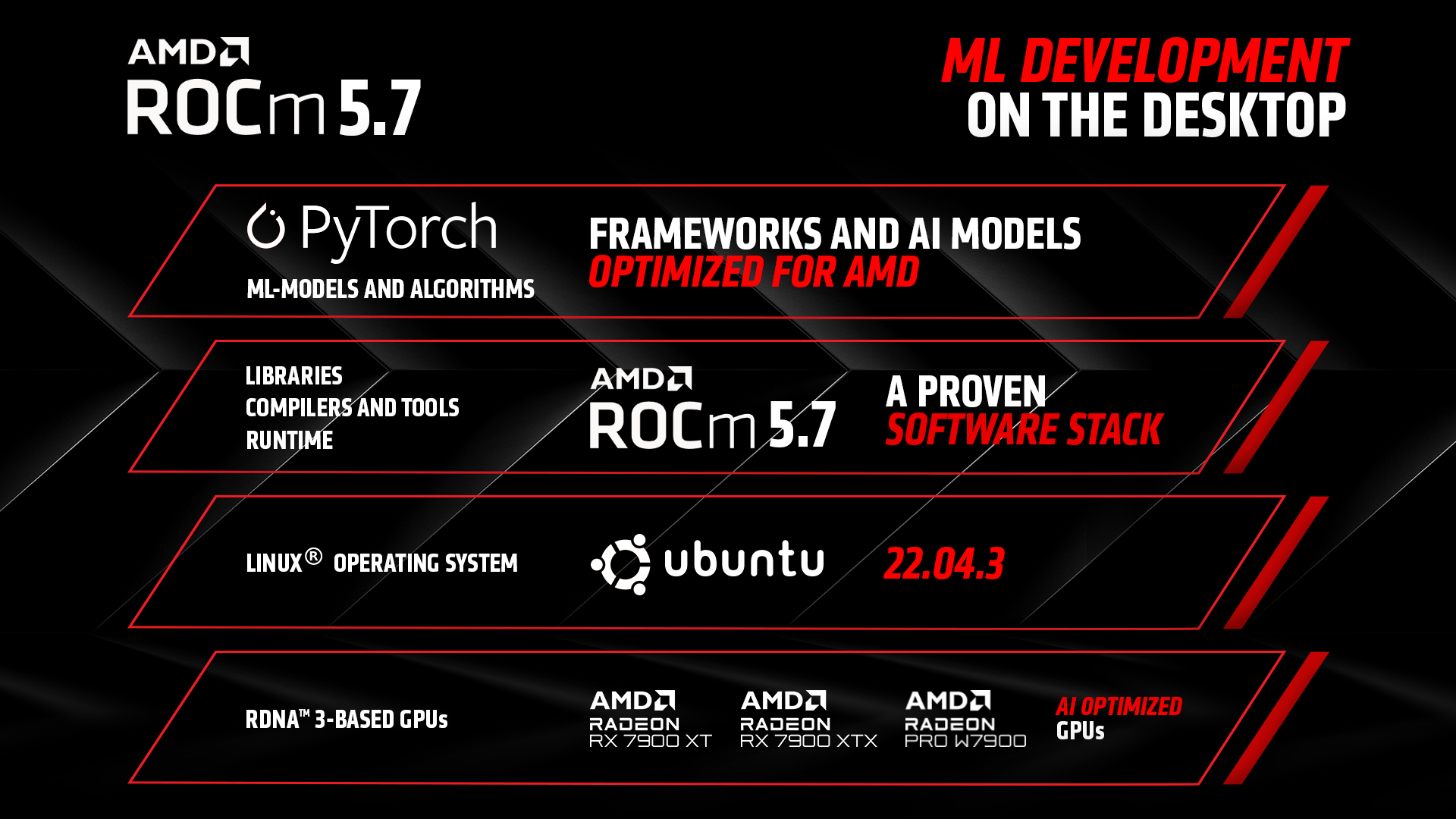

AMD announced that three of its RDNA 3 desktop graphics cards, the Radeon RX 7900 XT, the Radeon RX 7900 XTX, and the Radeon Pro W7900, now support machine learning development with PyTorch through its ROCm software. PyTorch was originally developed by Meta AI and is now part of the Linux Foundation. It is an open-source framework for building deep learning models, a type of machine learning used in image recognition and language processing apps.

The three RDNA 3 Radeon cards rely on AMD ROCm 5.7, and this support is limited to Ubuntu 22.04. AMD provides detailed instructions in a handy guide.

AMD ROCm Memory and Hardware Requirements

AMD has a list of prerequisites for ROCm support, one of which requires the user to disable the iGPU on two specific AMD X670 motherboards: Gigabyte's X670 Aorus Elite AX and the Asus Prime X670-P WIFI.

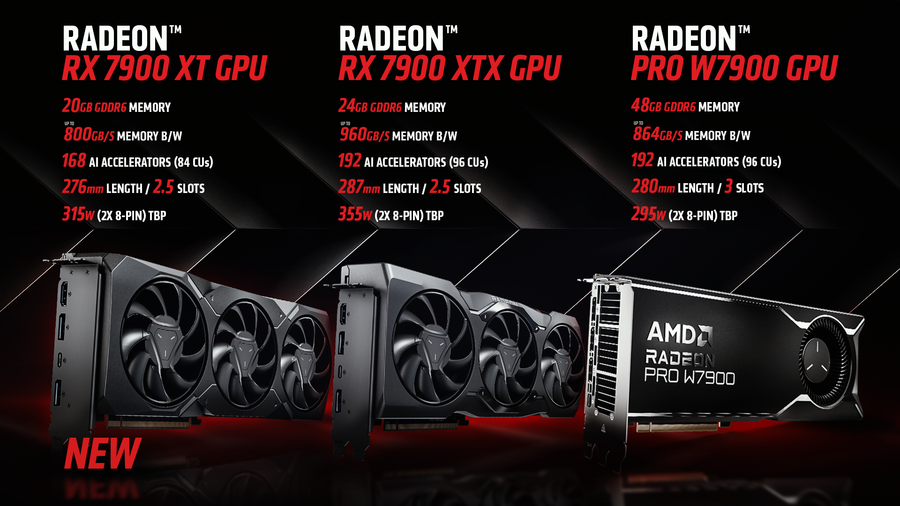

AMD gives two sets of memory figures. It recommends a system with 64GB of RAM and a GPU with 24GB of VRAM, while the minimum requirement is 16GB of system memory and 8GB of video memory. The RX 7900 XT has 20GB of GDDR6 memory, which clears the minimum but falls short of the recommended figure, while the Radeon RX 7900 XTX meets the recommended VRAM requirement. The Pro W7900 workstation graphics card exceeds that requirement with 48GB of GDDR6 memory. The Radeon RX 7900 XT has 168 AI accelerators and is based on the TSMC-made 5nm Navi 31 GPU.
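To make the two tiers concrete, here is a minimal Python sketch that classifies a system against the memory figures quoted above. The function name `memory_tier` and the dictionary layout are illustrative assumptions, not part of any AMD tool, and the 24GB figure for the RX 7900 XTX is inferred from the statement that it meets the recommended VRAM requirement.

```python
# Memory figures quoted in the article (in GB).
MINIMUM = {"ram_gb": 16, "vram_gb": 8}
RECOMMENDED = {"ram_gb": 64, "vram_gb": 24}

# VRAM per card; the XTX value is inferred from "meets the recommended requirement".
CARD_VRAM_GB = {
    "Radeon RX 7900 XT": 20,
    "Radeon RX 7900 XTX": 24,
    "Radeon Pro W7900": 48,
}


def memory_tier(ram_gb, vram_gb):
    """Return which of the quoted memory tiers a system satisfies.

    Hypothetical helper for illustration only; it checks memory sizes
    and nothing else (no OS, motherboard, or driver checks).
    """
    if ram_gb >= RECOMMENDED["ram_gb"] and vram_gb >= RECOMMENDED["vram_gb"]:
        return "recommended"
    if ram_gb >= MINIMUM["ram_gb"] and vram_gb >= MINIMUM["vram_gb"]:
        return "minimum"
    return "below minimum"


# Example: the three supported cards in a system with 64GB of RAM.
for card, vram in CARD_VRAM_GB.items():
    print(f"{card}: {memory_tier(64, vram)}")
```

With 64GB of system RAM, the RX 7900 XT lands in the "minimum" tier because of its 20GB of VRAM, while the other two cards reach "recommended".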

A few months ago, AMD listed several unannounced graphics cards in its ROCm 5.6 update: the Radeon RX 7950 XTX, 7950 XT, 7800 XT, 7700 XT, 7600 XT, and 7500 XT for desktops and the Radeon RX 7600M XT, 7600M, 7700S, and 7600S for notebooks. So, if and when these GPUs are announced and released, they will likely arrive with day-one ROCm support.

The Curious Tale of RX 7900 GRE

On paper, the Radeon RX 7900 GRE has 160 AI accelerators and 16GB of video memory, which clears the minimum VRAM requirement. However, the Radeon RX 7900 GRE (Golden Rabbit Edition, since 2023 is the Year of the Rabbit) is a China-only GPU and can be bought only through system integrators in the domestic market.

The US has banned the export of certain AI accelerators to China. It's unknown whether the ROCm driver will work on this 'Golden Rabbit' graphics card. We'll have to wait and see.

On the Other Side of the Pond

Nvidia also supports PyTorch, and just like AMD, it requires the Docker Engine. It also requires Nvidia's GPU drivers and its Container Toolkit. PyTorch takes advantage of both Nvidia Tensor cores and AMD's AI accelerators. Intel also supports PyTorch and has extensive documentation to enable this on compatible systems.
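For readers who want to see which backend a given PyTorch install is using, the following is a minimal sketch. It assumes that ROCm builds of PyTorch expose AMD GPUs through the same `torch.cuda` interface used for Nvidia cards and report a HIP version in `torch.version.hip`. The function name `describe_torch_backend` is a hypothetical helper, and the import is guarded so the snippet also runs on a machine without PyTorch.

```python
def describe_torch_backend():
    """Report which GPU backend the local PyTorch build uses, if any.

    Hypothetical helper for illustration; it only inspects PyTorch and
    does not verify driver, Docker, or container toolkit setup.
    """
    try:
        import torch  # imported lazily so the sketch runs without PyTorch
    except ImportError:
        return "PyTorch is not installed"

    # ROCm builds reuse the torch.cuda namespace for AMD GPUs.
    if not torch.cuda.is_available():
        return "PyTorch found no supported GPU; using CPU"

    # torch.version.hip is set on ROCm builds and is None on CUDA builds.
    hip_version = getattr(torch.version, "hip", None)
    if hip_version:
        backend = f"ROCm/HIP {hip_version}"
    else:
        backend = f"CUDA {torch.version.cuda}"

    return f"{torch.cuda.get_device_name(0)} via {backend}"


print(describe_torch_backend())
```

The same script can be run unchanged on an AMD or an Nvidia system, since both backends are reached through the same PyTorch calls.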

The expanded support from all three graphics chip manufacturers will help many developers accelerate their machine learning and AI development. One might wonder whether enabling high-end GPUs for this work will push up the prices of these graphics cards. There are no signs of that happening yet, but it wouldn't be without precedent: we've seen similar trends when desktop-grade graphics cards were used for other purposes, such as GPU cryptomining.