Amazon on Tuesday introduced two new processors that will be used exclusively by its Amazon Web Services (AWS) division. The two new systems-in-package are the Graviton4 processor for general-purpose workloads and the Trainium2 processor for artificial intelligence training. The new devices will power AWS instances that are meant to provide supercomputer-class performance to Amazon clients.

Graviton4

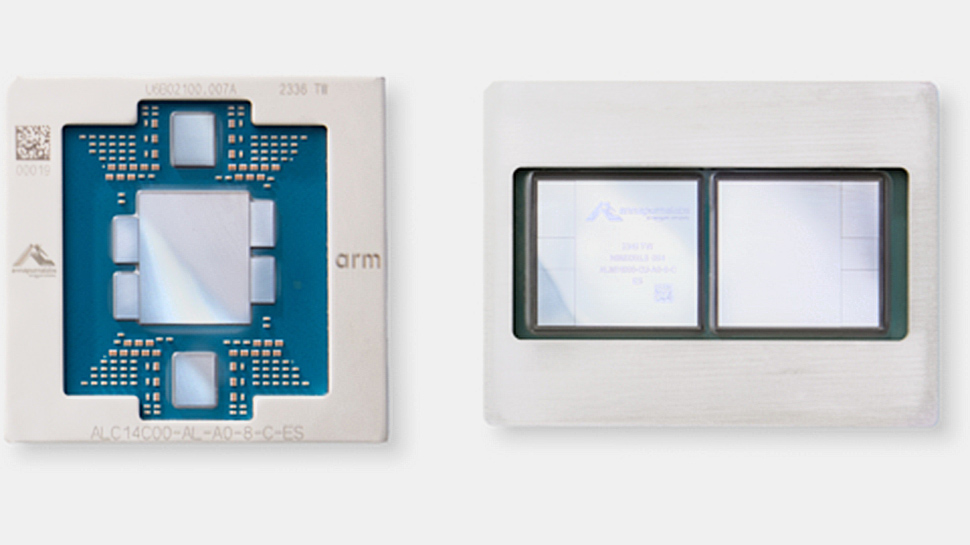

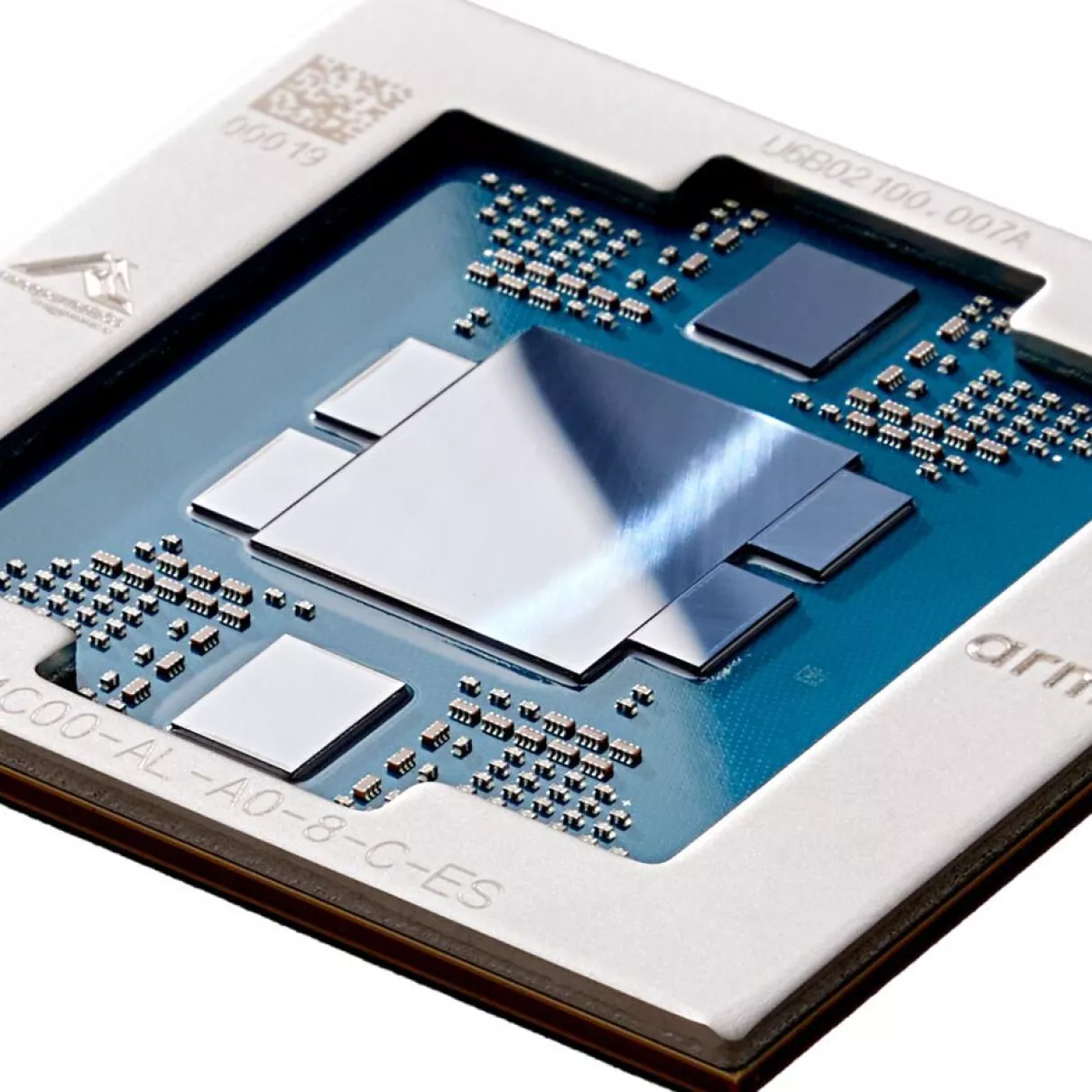

Amazon's Graviton4, the company's fourth-generation general-purpose processor that competes against AMD EPYC and Intel Xeon CPUs, continues to use Arm's 64-bit architecture and packs 96 cores. The processor is said to deliver up to 30% higher compute performance and 75% more memory bandwidth than its predecessor, Graviton3. AWS did not disclose whether Graviton4 relies on Arm's Neoverse V2 cores, but this is a likely scenario.

Like the AWS Graviton3, the AWS Graviton4 relies on a multi-chiplet design composed of seven tiles. This is expected, given that AWS has worked with Intel Foundry Services on packaging. It is not clear which foundry produces the silicon for the Graviton4.

Graviton4 will be included in memory-optimized EC2 R8g instances that are aimed at demanding databases, in-memory caches, and large-scale data analytics. These new R8g instances will provide up to three times the virtual CPUs (vCPUs) and three times the memory of the existing R7g instances. As a result, AWS clients will be able to handle more data, scale their projects more efficiently, and speed up processing. Graviton4-equipped R8g instances are currently accessible for testing, and they are expected to be commercially available in the next few months.

"As part of the migration process of SAP HANA Cloud to AWS Graviton-based Amazon EC2 instances, we have already seen up to 35% better price performance for analytical workloads," said Juergen Mueller, CTO and member of the Executive Board of SAP SE. "In the coming months, we look forward to validating Graviton4, and the benefits it can bring to our joint customers."

Trainium2

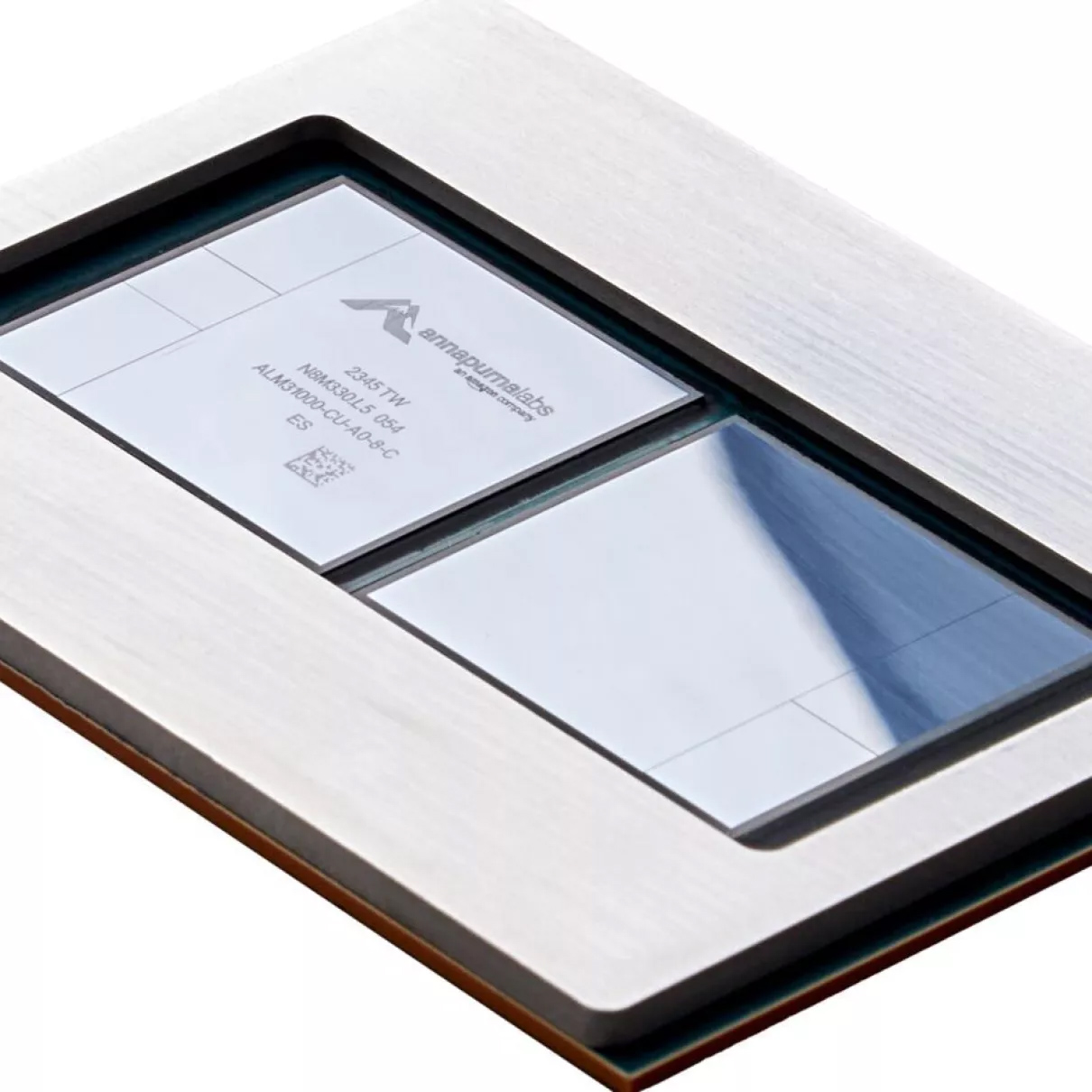

In addition to the AWS Graviton4 processor for general-purpose workloads, Amazon also introduced its new Trainium2 system-in-package for AI training, which will compete against Nvidia's H100, H200, and B100 AI GPUs. Trainium2 is designed to offer up to four times faster training and three times the memory capacity of the original Trainium chips. Additionally, Trainium2 improves energy efficiency, delivering up to twice the performance per watt. Machines based on Trainium2 will be connected using the AWS Elastic Fabric Adapter (EFA), which offers petabit-scale performance.

What is particularly impressive about Trainium2 is its scaling: it will be available in EC2 Trn2 instances that can scale up to 100,000 Trainium2 chips, which together are set to provide up to 65 AI ExaFLOPS of compute, giving users on-demand access to supercomputer-class performance. At that scale, training a large language model with 300 billion parameters, which previously took months, can be accomplished in weeks.
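For a rough sense of what those headline numbers imply per chip, the short Python sketch below divides the quoted aggregate figure by the quoted chip count. It assumes perfectly linear scaling and treats the 65 AI ExaFLOPS as a peak aggregate number. AWS has not stated the numeric precision behind that figure, and the function name `implied_per_chip_tflops` is purely illustrative, so the result is a back-of-the-envelope estimate rather than an official specification.

```python
def implied_per_chip_tflops(cluster_exaflops: int, chip_count: int) -> float:
    """Average per-chip throughput in TFLOPS, assuming perfectly linear scaling.

    This is an illustrative estimate only: real clusters lose some throughput
    to interconnect and software overhead, and the precision behind the
    quoted cluster figure is not disclosed.
    """
    if chip_count <= 0:
        raise ValueError("chip_count must be positive")
    total_flops = cluster_exaflops * 10**18   # 1 ExaFLOPS = 10^18 FLOPS
    return total_flops / chip_count / 10**12  # 1 TFLOPS = 10^12 FLOPS


# Figures quoted in the article: up to 65 AI ExaFLOPS across up to 100,000 chips.
per_chip = implied_per_chip_tflops(65, 100_000)
print(f"{per_chip:.0f} TFLOPS per chip")  # prints "650 TFLOPS per chip"
```

Under those assumptions, each Trainium2 chip would account for roughly 650 TFLOPS of the quoted peak.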

From a design point of view, the AWS Trainium2 SiP also uses a multi-tile design featuring two compute chiplets, four HBM memory stacks, and two unknown chiplets (which likely enable high-speed connectivity).

"We are working closely with AWS to develop our future foundation models using Trainium chips," said Tom Brown, co-founder of Anthropic. "Trainium2 will help us build and train models at a very large scale, and we expect it to be at least 4x faster than first generation Trainium chips for some of our key workloads. Our collaboration with AWS will help organizations of all sizes unlock new possibilities, as they use Anthropic's state-of-the-art AI systems together with AWS’s secure, reliable cloud technology."