The language you're using for a Large Language Model (LLM) can have a huge effect on its cost and create an AI divide between English speakers and the rest of the world. A recent study shows that, due to the way services like OpenAI, measure and bill for server costs, English-language inputs and outputs are much cheaper than those in other languages with Simplified Chinese costing about twice as much, Spanishing costing 1.5x the price and Shan language going for 15 times more.

Analyst Dylan Patel (@dylan522p) shared a photograph that leads to research conducted by the University of Oxford which found that asking an LLM to process a Burmese-written sentence cost 198 tokens, while that same sentence in English cost just 17 tokens. Tokens representing the computing power cost of accessing an LLM through an API (such as OpenAI's ChatGPT or Anthropic's Claude 2), this means the Burmese sentence cost 11 times more through the service than the one written in English.

The cost of LLM inference varies hugely based on the language for GPT-4 and most other common LLMs.English is the cheapest.Chinese is 2x English.Languages like Shan + Burmese are 15x more expensive.This is mostly because of how tokenizers work so need to output more tokens pic.twitter.com/Y7De09pb4wJuly 28, 2023

The tokenization model (which sees AI companies convert user input into computational cost) means that in a less-than-ideal world, models accessed outside the window of English language are much more expensive to access and train on. That's because languages such as Chinese have a different, more complex structure (either grammatically or by number of characters) than English, which results in their higher rate of tokenization.

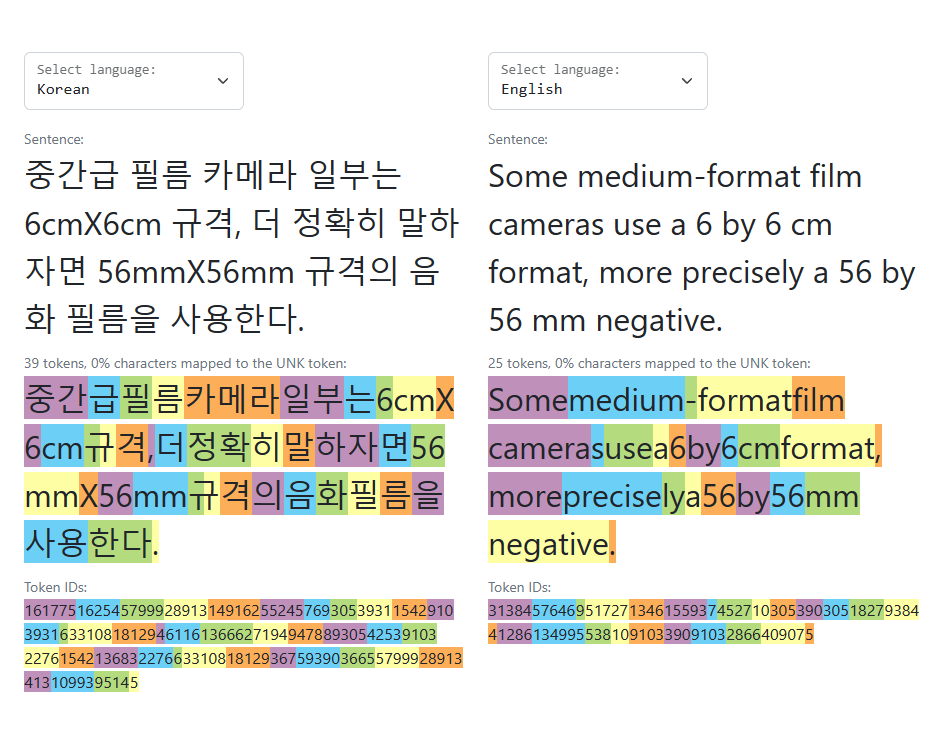

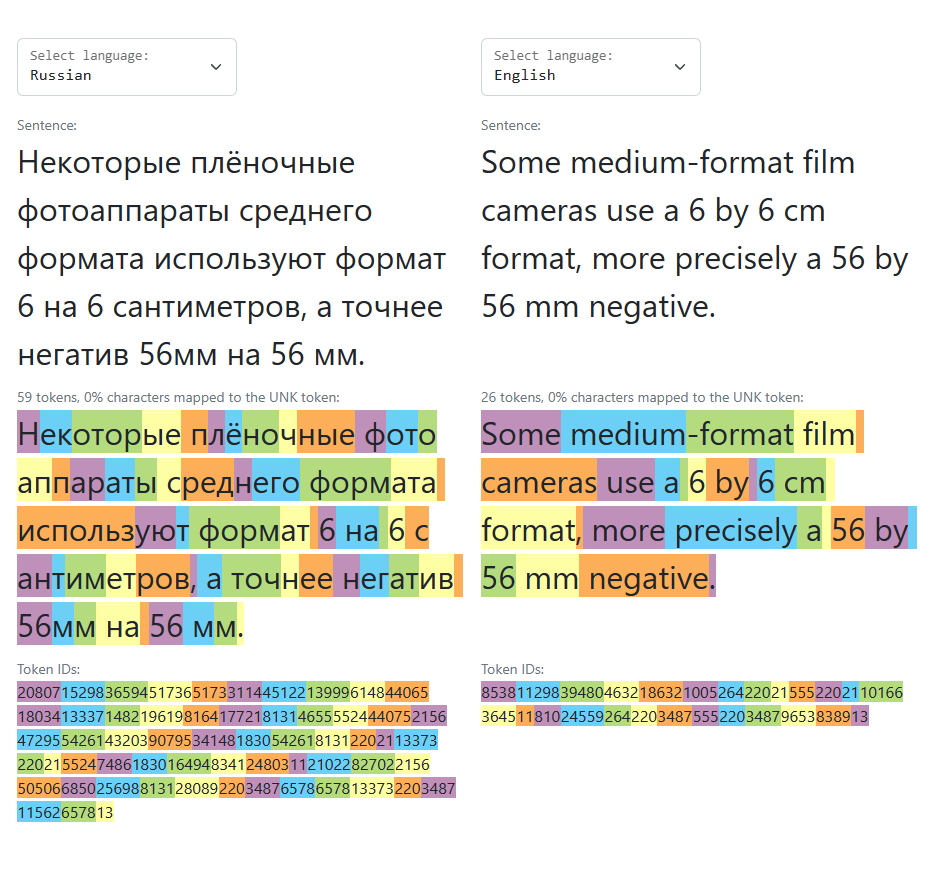

For example, according to OpenAI's GPT3 tokenizer, giving someone a token of "your affection" would be just two tokens in English but eight tokens in Simplified Chinese. This is true even though the Simplified Chinese text is just 4 characters (你的爱意) and the English one is 14 characters. Aleksandar Petrov et al's Tokenization Fairness page has a number of charts and tools you can use to see the disparity between languages.

OpenAI itself has a pretty helpful page explaining how it monetizes API access and usage of its ChatGPT model, which even includes access to a tokenizer tool that you can use to test the token cost per prompt. There, we see that 1 token is around 4 characters in English, and that 100 tokens would be around 75 English words. But that math just can't be applied to any other language, as OpenAI clearly puts it.

How words are split into tokens is also language-dependent. For example ‘Cómo estás’ (‘How are you’ in Spanish) contains 5 tokens (for 10 chars). The higher token-to-char ratio can make it more expensive to implement the API for languages other than English.

OpenAI

There's really no competition against the cost-effectiveness of English in AI-related costs; Chinese, for example, costs twice as much as English in terms of required tokens per output. But it's simply a reflection of the available training data that AI companies have (until now) used to train their models on. If there's one thing the AI explosion has done for the world is show just how valuable high-quality emergent data (that which is created as a record of life) really is.

This issue directly links with AI companies' desire to achieve recursive training, or the ability to train AI models on their own outputs. If that's achieved, then future models will still show the same cost-effectiveness of English compared to other languages whose complexity and more limited availability of base training data. And when that happens, it's not just this vicious cycle of algorithmic bigotry we have to contend with: it's also that for now, research points to AI networks going MAD when trained over five times on their own outputs (synthetic data).

To further complicate the issue, it seems that other ways of quantifying costs (other than tokenization) would end up running into the same problems. Either via bit or character-counting, apparently no language can beat the pragmatic practicality of English - it would still present lower costs due to its inherently higher "compressibility" into a lower token count.

That means the issue isn't in the way models have been monetized; it's an actual limitation of the technology and base models considered for training. And it should be no surprise to know the issue impacts multiple language models across their versions. They're all built mostly the same, after all.

This issue looks like a predictable one when we consider that the companies actually introducing Large Language Models (such as ChatGPT) or Generative Image networks (such as Midjourney) are mostly based in America. Lower usage costs and higher availability of quality data comes with the territory, in a way.

This cost difference has already led to a number of countries launching their own initiatives to train and deploy a native-language LLM. Both China and India have done so, and both claimed the same thing: that their plans were needed to accompany the pace of innovation allowed by English-based AI networks. And that rate is mostly limited by access and training costs.

It's only natural that everyone is looking to pay as little as possible for as much as possible; and these dynamics directly affect the cost of LLM training and deployment according to the base language. It's almost like this AI business is so complex, and its consequences so far-reaching, we have to be very careful with every little step we take.