We've already established that AI is capable of generating impressive optical illusions, but how do large language models fare when shown illusions designed to trick the human eye? Much like we do, recent studies suggest.

Tools including GPT-4V and Google Gemini have been tasked with examining various types of visual illusions, with the results suggesting that AI "seems to fall for many of the same visual deceptions that fool people". For more AI content, take a look at our recent AI Week coverage.

> ChatGPT correctly identifying the relative and not actual RGB color. Check these examples. These images are not part of the training data, unless openai has access to my photos. Thanks to @AkiyoshiKitaoka for sharing his histogram compression tool https://t.co/8prxCpbXqW pic.twitter.com/atxOXgYF76 (April 27, 2024)

We've seen plenty of examples of illusions in which, at first glance, objects or photographs appear to be a different colour from what they actually are (or, in some cases, appear to be in colour when they're actually black-and-white). And as reported by Scientific American, when asked to describe such images, AI will often give "surprisingly humanlike responses".

Computer engineering professor Dimitris Papailiopoulos ran a series of casual experiments, feeding ChatGPT optical illusions, including some he had created himself (such as the example in the tweet above). Because these images were new, the tool was seeing them for the first time, and they were presumably not part of its training data. Instead of reporting the actual colour of the pixels, the AI interpreted them as a human would.

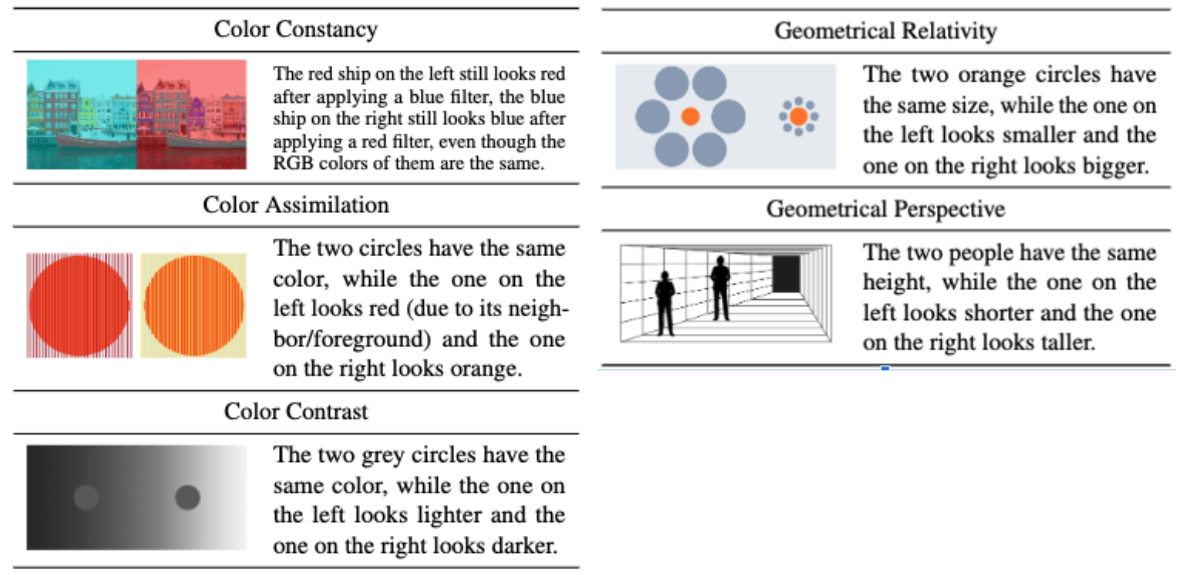

Similarly, a recent study found that larger AI models in particular offer interpretations closely aligned with those of humans. Curiously, of the five types of visual illusion tested (pictured above), geometrical perspective illusions were the most likely to trick the tools, which gave "up to 75 percent humanlike" interpretations.

As Scientific American suggests, perhaps the most interesting aspect of all this is that such experiments could "offer a glimpse of what’s inside" AI tools. Indeed, as AI becomes more ubiquitous, so do concerns around transparency – just ask Adobe.