AI filmmaking is now a reality, and directors are using it to turn ideas and short, inexpensive shoots into movies. One of them is award-winning director Freddy Chávez Olmos, who has used generative AI tools and techniques to turn a two-hour shoot into a horror movie, and the results are undeniably impressive.

Whether we like it or not, every single industry is experiencing the transformative effects of generative AI. Depending on which side of the fence you sit on, those changes will be either positive or negative, life-transforming or absolutely disastrous. The jury is still out on the long-term effects of this evolving technology, but one industry that is being significantly, and potentially disproportionately, impacted is the film industry.

AI filmmaking is taking over, with AI VFX and AI-assisted editing and shot creation now a reality. We've debated how AI could affect the future of filmmaking, looked at how AI tools like Krea.ai can fit into an artist's workflow, and seen the strides Nvidia is making to enable easy fixes in VFX, whether it's text-to-3D or creating digital doubles.

One of the people at the forefront of utilising AI to develop new workflows and pipelines for creative development in films is award-winning director and VFX artist Freddy Chávez Olmos. He has worked for multiple Oscar and Emmy Award-winning VFX studios, including Double Negative and Industrial Light & Magic, so there are few people better placed to forge this new path. His 17 years of experience in film includes working on productions directed by Guillermo del Toro, Tim Burton, and Christopher Nolan.

AI filmmaking tools were used to make BYE-BYE

I was excited to have the opportunity to chat with Chávez Olmos and find out about his new film, BYE-BYE. The film was sponsored by the Directors Guild of Canada, and Chávez was one of eight filmmakers who were given two hours to shoot their story, with the purpose of encouraging directors to develop new workflows and techniques. The time constraint focused the mind and led Chávez to adopt a range of innovative AI tools and techniques to deliver amazing results in a very short timeframe.

The first step was to decide on the approach to take on set. With only two hours, there wasn’t time for tracking markers or typical VFX setups. Chávez tells me how he decided, therefore, to focus on capturing the desired performances and ticking off the shots, knowing that he could use AI and Machine Learning (ML) to create the necessary 2D and 3D data in post-production.

The more control you seek, the more essential it becomes to integrate traditional workflows

Freddy Chávez Olmos, director

This hybrid approach sums up his perspective on using AI in general. "I lean towards traditional tools and practical solutions to overcome the consistency and control limitations of AI," says Chávez, who adds: "I use AI and ML assistance to expedite certain processes, making them more time-efficient whenever a specific task allows it."

Contrary to the general public perception, most of these tools necessitate human input and technical knowledge, particularly when using these technologies in a professional environment. The more control that is sought, the more essential it becomes to integrate traditional workflows.

Apart from AI, I always prefer to maintain control over the images I showcase on screen. That's why I enjoy combining traditional workflows with AI tools

Freddy Chávez Olmos, director

AI therefore becomes a third partner that needs to be integrated into the mix. As Chávez tells me: "The best results often arise from combining multiple techniques, similar to projects that incorporate both practical and digital effects. It doesn't have to be an either-or situation, both elements complement each other."

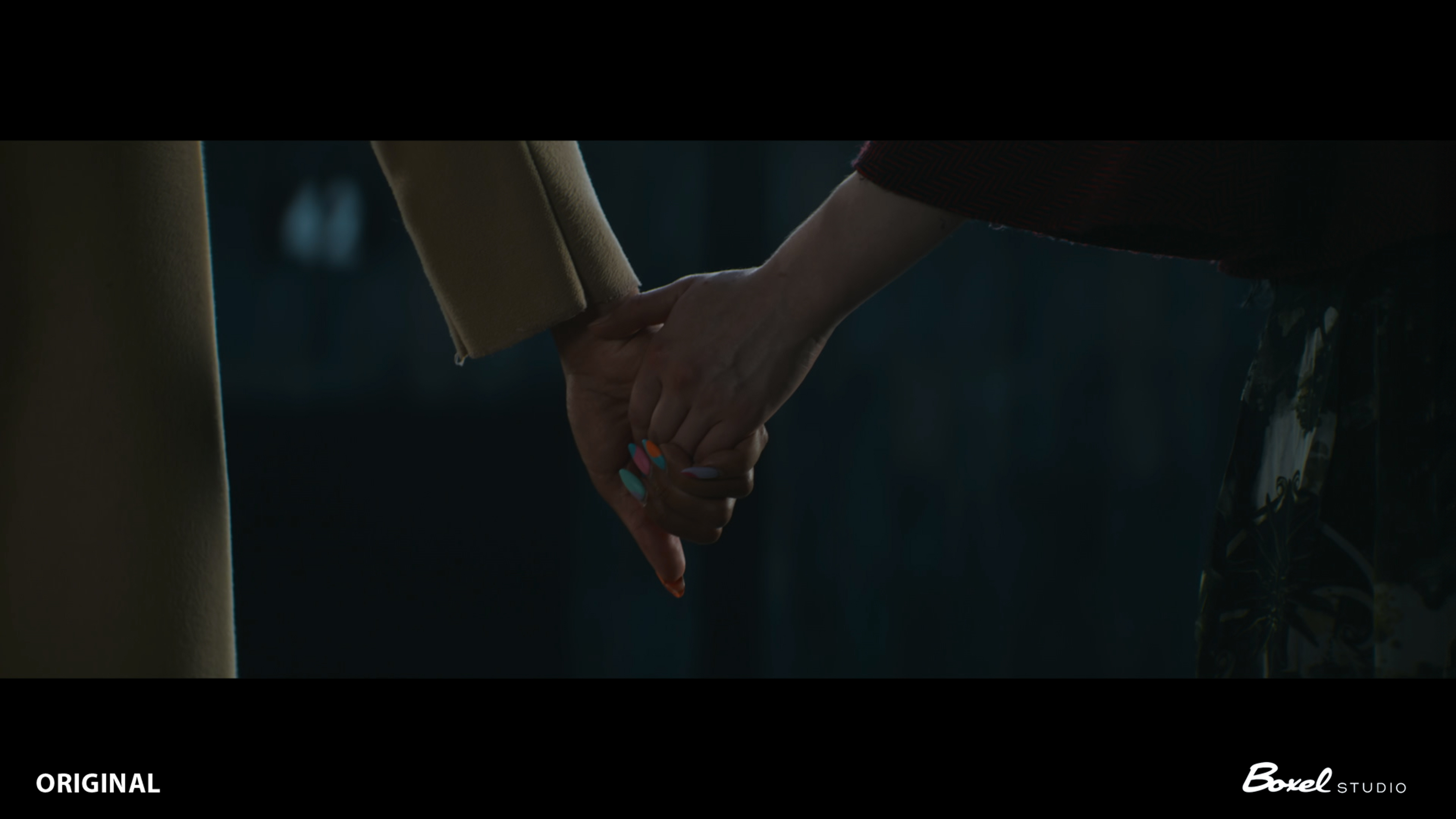

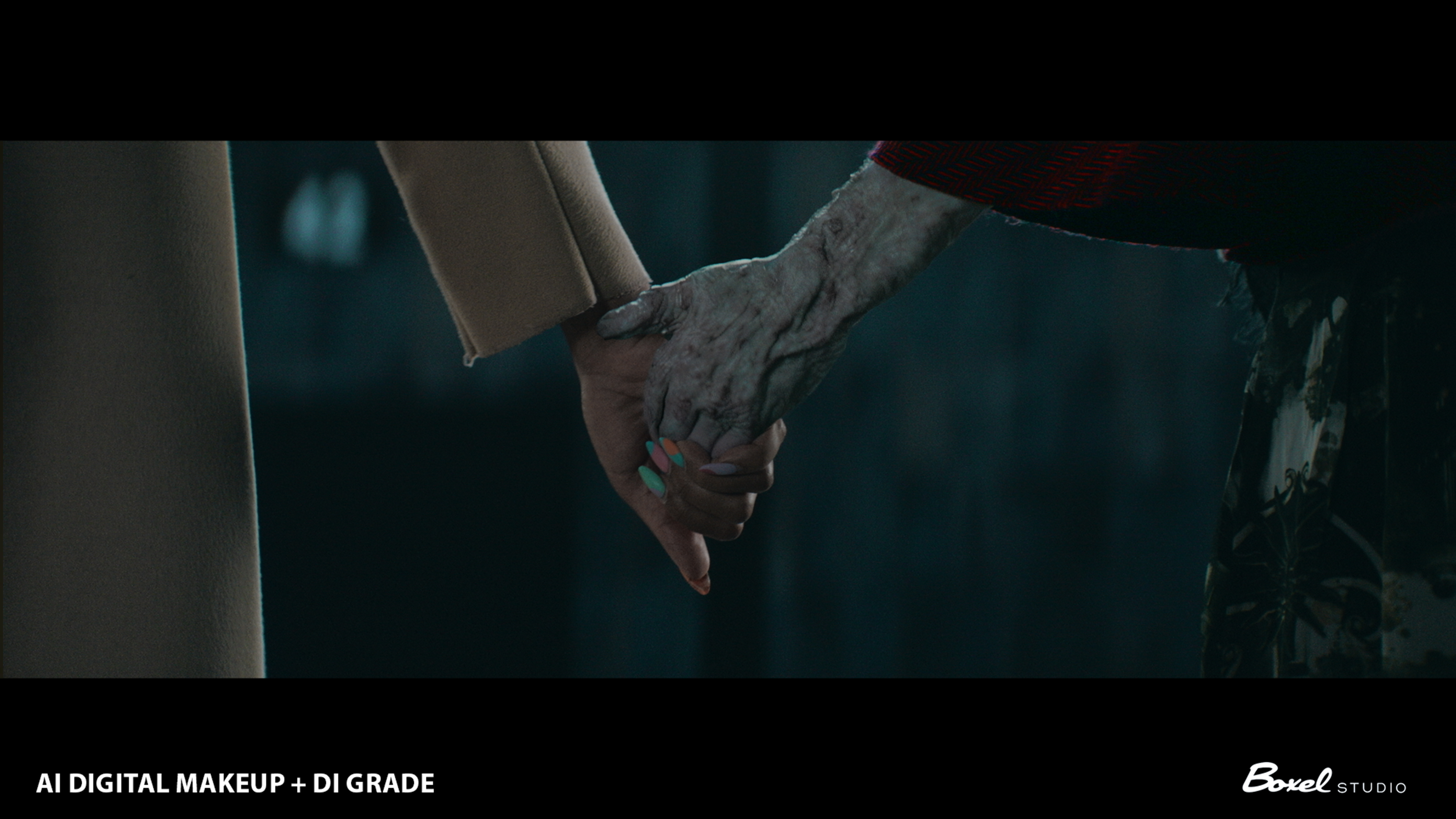

One notable area that benefited from this approach was enhancing horror makeup through the use of generative AI and Machine Learning. This was achieved with Nuke's CopyCat in conjunction with Leonardo.ai.

The most obvious application was on the face, arm and hand of the film's character Hannah, where these tools were used to completely transform the character's look with great effect, consistency, and believability. Something that would otherwise have been impossible to achieve on set under the given time constraints was made possible with new generative AI technology.

The captured footage contained unwanted items in the background, such as light sources and set elements. This is standard when filming, but rotoscoping them out is often a very manual and time-consuming process.

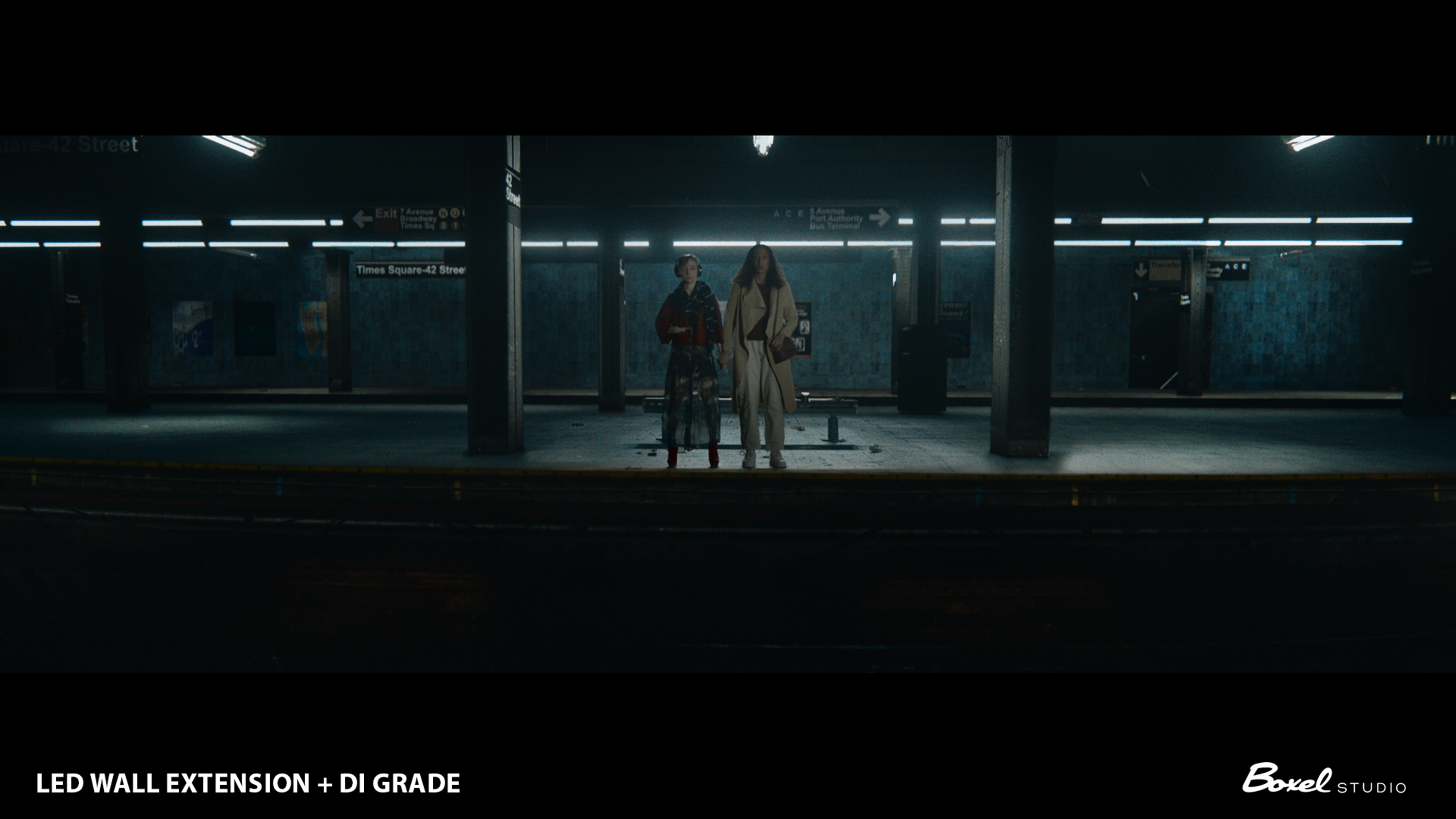

Chávez explains to me how CopyCat in Nuke made it possible to automate the rotoscoping process, in conjunction with generative AI in Photoshop to eliminate unwanted areas, while Unreal Engine 5 was used for set extensions. As a result, the limitations of using the virtual production LED wall were easily overcome. Even with moving camera shots and low-lit backgrounds, the AI-generated elements were perfectly integrated into the scene with no issues whatsoever.

AI VFX techniques can speed up filmmaking

Many people are concerned that AI is going to cripple the creative industries, with most jobs becoming redundant, but Chávez Olmos has a different outlook. He always prefers to maintain control over the work that he showcases on screen and is therefore happy combining traditional workflows with AI tools. It gives him the control he desires and enables changes and adjustments to be made as necessary.

With great honesty, Chávez Olmos says, "the advantage lies in someone with an artistic background using AI, as opposed to someone solely dependent on automation and prompting. The latter doesn't interest me, but when combined with traditional tools and workflows, that's when I see magic happening."

The post-production process was a big learning curve for everyone. Using new AI and Machine Learning tools involved a huge amount of trial-and-error experimentation, but ultimately the team was pleased to embrace a fresh approach to their work.

"Completing these types of live-action short films used to take me an average of two years, but with BYE-BYE, it was finished in just two months," reveals Chávez. This marked his first experience directing on a virtual production stage and integrating AI technology in post-production. He considers it a "complete master class in a new approach to filmmaking" and says that, going forward, "I plan to incorporate these learnings into future projects".

Chávez has caught the bug for this type of hybrid working that makes use of AI for filmmaking. Having achieved great success with BYE-BYE, the filmmaker is now co-directing a game cinematic that utilises Unreal Engine 5 and experiments with new AI and Machine Learning tools. He’s also working on a new film project that leverages many of the recent tech developments for the iPhone (read our article on Epic Games' MetaHumans tech).

But all of these changes raise the question of what a future with generative AI looks like, particularly for filmmaking and VFX. I was interested to hear that Chávez thinks these technological advancements will lead to a surge in independent filmmaking and less dominance from large studios.

VFX artists with experience in AI and Machine Learning will become increasingly sought after in post-production, and directors who understand the technology will be ahead of the curve. We've already seen how new approaches to creating VFX for The Creator managed to bring its budget down, and the use of AI combined with indie filmmaking techniques could have a big impact on not only how films are made, but also who makes them. Those with a diversified skill set are likely to have an advantage in this evolving landscape.

Chávez has always sought to embrace new technologies. His view of AI as just another technology to be excited about makes him perfectly placed to help the film industry make the most of both AI and Machine Learning. And whether we like it or not, AI isn’t going anywhere and will increasingly become a core part of filmmaking and VFX in the future.

For more on BYE-BYE, visit director Freddy Chávez Olmos' website or watch the VFX breakdown videos for BYE-BYE on Vimeo.