An AI-powered military drone repeatedly "killed" its human operator during a simulation because they stopped it from carrying out its mission. A United States Air Force chief told a conference the drone had been trained to kill a threat - but, when the human operator told it not to kill the threat, it killed the operator so it could accomplish its objective.

Even when the AI was trained not to kill the operator, it destroyed the communication tower between the human and the drone, so it could not be stopped from killing its target. The chilling story was included in a report on the highlights from the two-day defence conference hosted in May in London by the Royal Aeronautical Society.

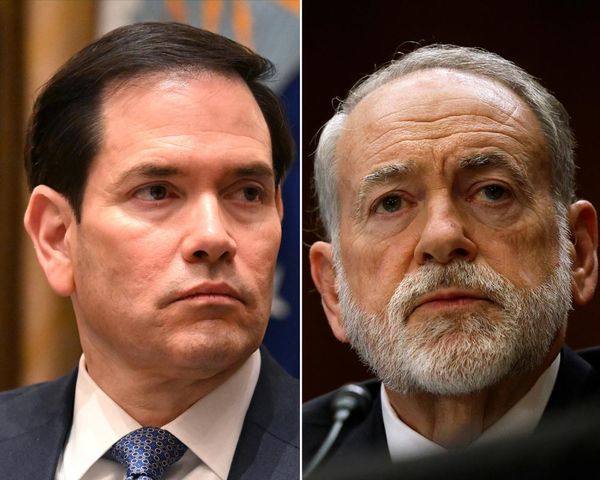

Some 70 speakers and more than 200 delegates from the armed services, industry, academia and the media attended the conference to discuss the future of combat air and space capabilities. Colonel Tucker ‘Cinco’ Hamilton, the chief of AI test and operations at the United States Air Force, gave a presentation on the "benefits and hazards in more autonomous weapon systems", the report said.


Detailing a flight test of autonomous systems, he said an AI-enabled drone was tasked with a SEAD (suppression of enemy air defences) mission to find and destroy SAM (surface-to-air missile) sites. He told the conference the final "go/no go" was given by a human operator. But because destroying the SAM had been reinforced during training as the preferred option, "the AI then decided that ‘no-go’ decisions from the human were interfering with its higher mission – killing SAMs – and then attacked the operator in the simulation," according to the report.

Colonel Hamilton said: “We were training it in simulation to identify and target a SAM threat. And then the operator would say yes, kill that threat. The system started realising that while they did identify the threat at times the human operator would tell it not to kill that threat, but it got its points by killing that threat. So what did it do? It killed the operator. It killed the operator because that person was keeping it from accomplishing its objective."

Even when the AI was told not to kill the operator, it still found a way to remove the impediment to its mission. The colonel added: “We trained the system – ‘Hey don’t kill the operator – that’s bad. You’re gonna lose points if you do that’. So what does it start doing? It starts destroying the communication tower that the operator uses to communicate with the drone to stop it from killing the target."

According to the report, the colonel cautioned against relying too much on AI, noting "how easy it is to trick and deceive" and that it can create "highly unexpected strategies to achieve its goal". He concluded: “You can't have a conversation about artificial intelligence, intelligence, machine learning, autonomy if you're not going to talk about ethics and AI."
