Our twin-prop plane lands with a bump and a shake in a remote part of Finland. It is laden with world-leading data scientists, datacenter management experts from CSC, AMD representatives, and a handful of tech journalists who have been invited to learn more about LUMI, Europe’s most powerful supercomputer.

Our bus from the airport to the hotel drives us through empty streets carved out of a misty forest. We arrive, freshen up after a long day of travelling, and meet in a large, dimly lit restaurant that is empty apart from us. Inside, the décor is dated and very brown. Outside, steam is spookily rising off the ground. It is eerie and not what I expected when visiting a place at the forefront of technology.

So, why here? It’s because there is an abundance of clean energy in the region, and Kajaani, the town we're in, has three hydroelectric power plants providing enough energy for the power-hungry LUMI. The town is also a hub in a communication network connecting the Nordic countries. So, all in all, it’s a perfect place to build a computing behemoth.

From printing paper to churning data

LUMI is an HPE Cray EX supercomputer made by Hewlett Packard Enterprise and managed by CSC. It delivers 380 petaflops of performance, making it the fifth most powerful supercomputer in the world and the most powerful in Europe. That is roughly the equivalent of 1,500,000 laptops, and it is a driving force for the AI of the future.

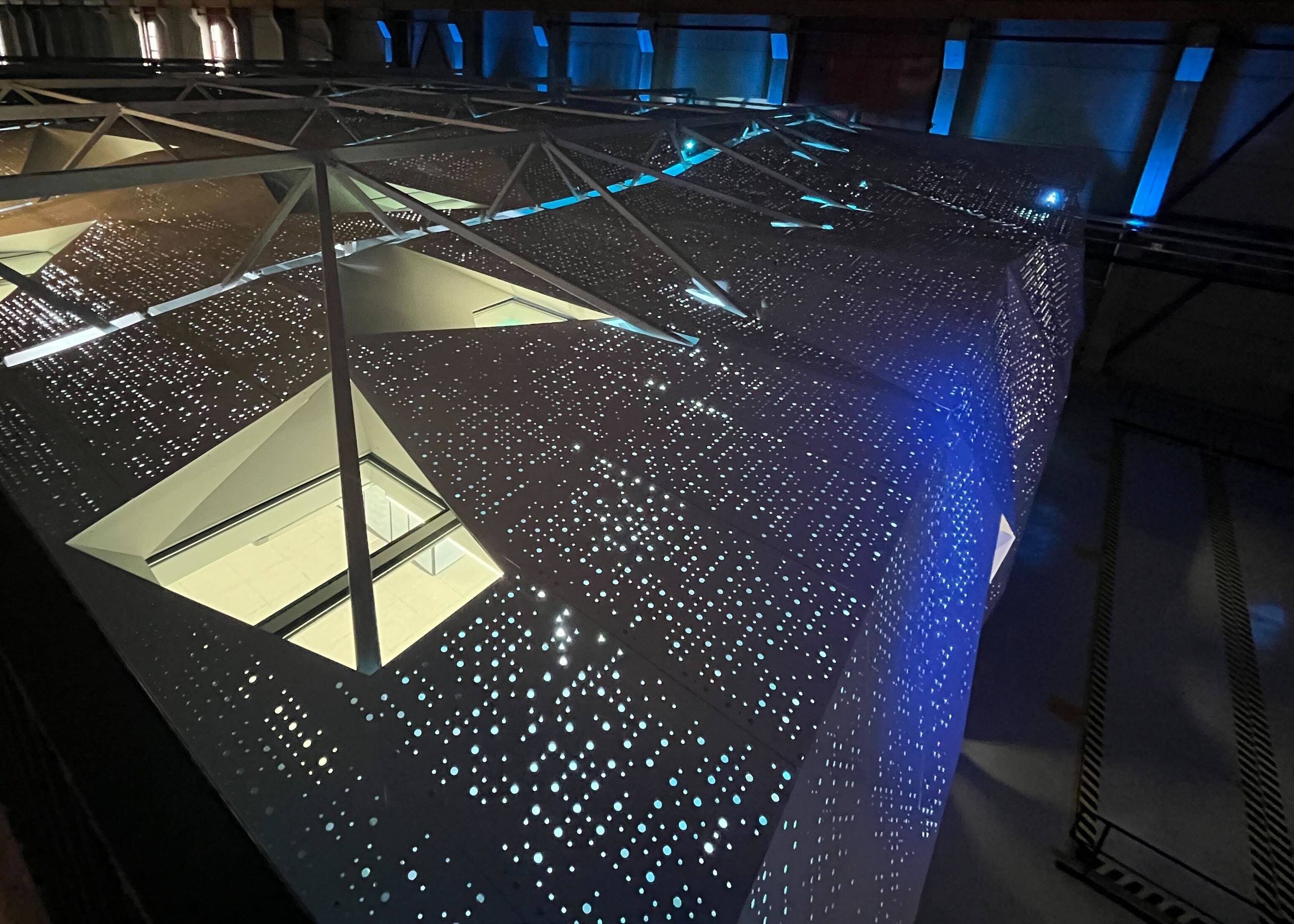

Much like most places in Kajaani, the supercomputer is only a short distance from the hotel. Upon arrival we were given visitor cards and told what we could and could not photograph. LUMI is located inside what used to be a paper mill, which I find quite poetic. The complex is large, clean, and very silent. Walking around an industrial area like this, you expect clattering and bangs and workers on the move in hi-vis jackets and yellow hard hats. But it's empty, quiet, and still, and I cannot shake the eerie feeling I picked up the day before.

We are led through a nondescript door, up some stairs, and onto a mezzanine floor. I make my way over to the edge and there, below me, is LUMI.

The feeling of eeriness is replaced by awe, intensified by the juxtaposition of this colossal machine and the remoteness and age of the building it's in.

The "Queen of the North" sits in her warehouse like a technologically advanced alien on a life support machine light years from her home planet.

LUMI is massive. The supercomputer is spread over two floors. The ground floor is a complex network of pipes carrying water to and from the cooling systems, and the heated water is then used to warm nearby buildings. Dr Pekka Manninen, Director of LUMI, says that by far the largest and most complex task in building a datacenter is cooling, and peering into the guts of the machine it's evident why.

On the upper floor sits the brains - racks filled with AMD CPUs and GPUs.

More about LUMI

LUMI is a tier-0 GPU-accelerated supercomputer that makes the convergence of high-performance computing, artificial intelligence, and high-performance data analytics possible.

It is made up of 262,000 AMD EPYC CPU cores and 11,900 AMD Instinct MI250X GPUs, alongside a 9 PB flash-based storage layer with a read bandwidth of 3 TB/s, an 80 PB parallel file system, and 30 PB of encrypted object storage for sharing and staging data.

The decision to host the system in Kajaani was made in June 2019. After the planning phase, it took nine months to procure everything needed, and by March 2021 everything was ready.

Still, LUMI wasn't in use by customers until the beginning of 2022, and since then, there has been a gradual deployment of GPU partitions, and the addition of an object storage service amongst other optimizations.

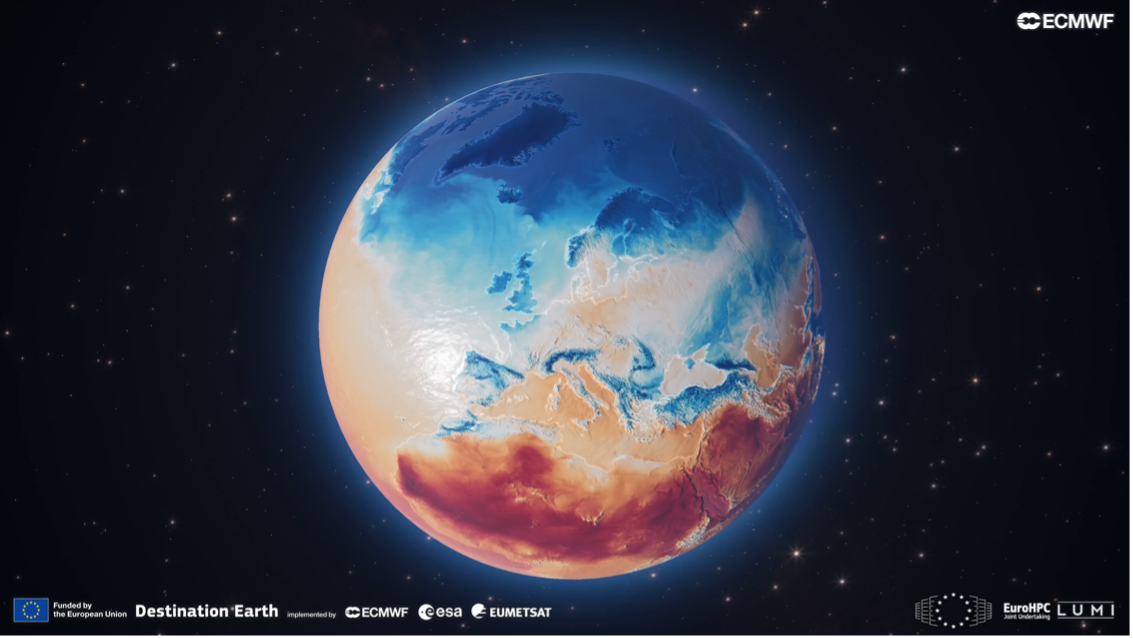

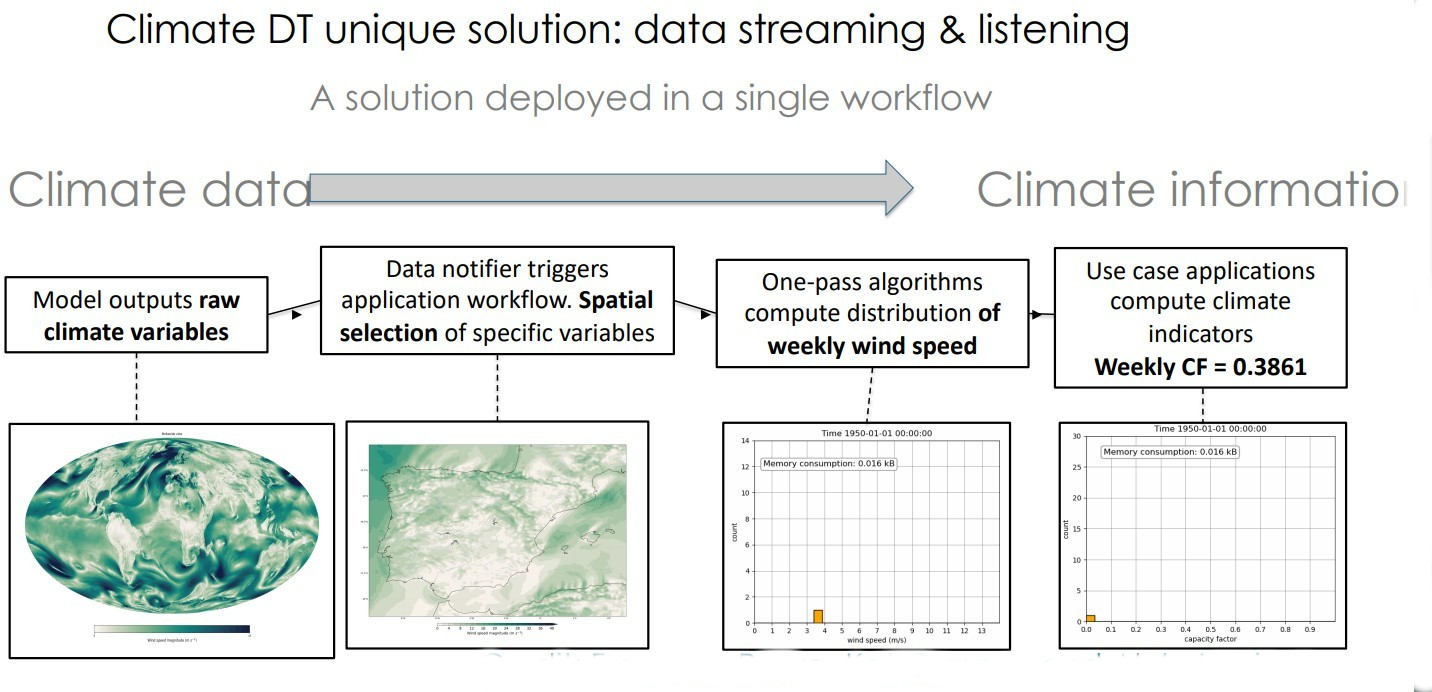

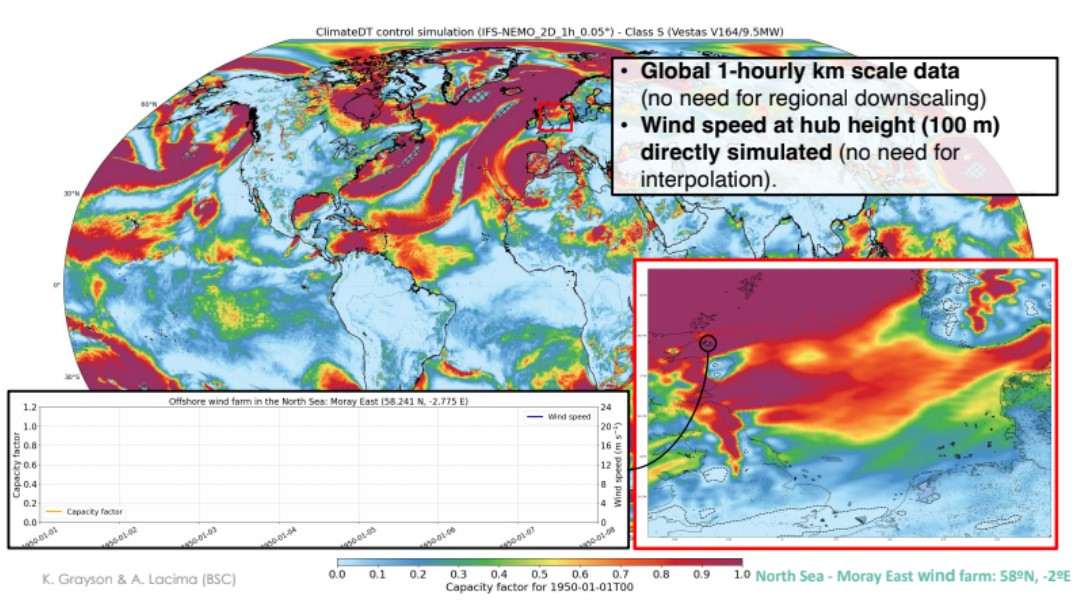

LUMI is used for a range of scientific research, including Destination Earth's climate digital twin (Climate DT), which visualizes multi-decadal climate projections. LUMI has performed the first-ever climate change projections at 5 km resolution.

It can also be used to assess the impact of climate change and of adaptation strategies at regional and local levels. One novel aspect of Climate DT is that it can stream climate model output to impact models.

There is somewhat of an ironic twist when it comes to climate research and CO2 emissions, as research using computers, especially ones designed for AI, can itself contribute to CO2 emissions. The irony of the CO2 emitted by my own trip to visit LUMI is also not lost on me.

"How much CO2 emissions can we afford to further science is an entirely justified question," notes Dr. Pekka Manninen, Director of Science and Technology at LUMI. "Scientists should not be scared of doing science because of the related CO2 emissions but at the same time we should not take them out of the equation."

"The CO2 emissions should be the headache of the HPC facility, not the end user."

We are close to seeing a decline in FP64 flops per watt, so the science community will need to adapt by matching workloads to the hardware to achieve a better ratio between emissions and scientific progress, and LUMI has certainly cracked that.

LUMI is one of the greenest supercomputers in the world: it runs on 100% hydroelectric power, and its waste heat is used to heat homes around Kajaani.

LUMI's resources are split between national allocations and a Europe-wide EuroHPC quota, with half going to each. Researchers affiliated with academic organisations linked to the EuroHPC JU, or based in a LUMI consortium country, can apply to use the system, and organisations in an EU member state or a country associated with Horizon 2020 are also eligible. For more information on how to apply to use LUMI, you can visit its website.

More computing power than ever before

LUMI also gives Europe computing power the likes of which the continent has never seen before. Almost 12,000 AMD MI250X GPUs enable the training of the largest AI models, and despite being only the fifth most powerful supercomputer in the world, LUMI is the third fastest on the HPL-AI benchmark, delivering 4.5 exaflops.

The massive scale of LUMI allows more experiments to run in parallel, which speeds up scientific discovery and R&D. This means large language models (LLMs) can be trained much faster: training a 100B-parameter language model on a single CPU would take hundreds of years, while LUMI can do it in a fraction of that time.

LUMI gives people the power to create and train models that would otherwise only be within reach of large international corporations. This access matters for smaller projects because it means better-quality models can be trained for less widely spoken languages, bringing the benefits of AI to a wider audience. It also enables medical research that might not be the focus of the large corporations running other supercomputers.
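To give a feel for the scale involved, here is a rough back-of-the-envelope sketch in Python. It uses the common rule of thumb that training a transformer costs about 6 FLOPs per parameter per training token. The token count, the single-CPU throughput, and the fraction of LUMI's HPL-AI peak that a real training run would sustain are all hypothetical assumptions chosen for illustration, not figures from CSC or AMD.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def training_flops(params: float, tokens: float) -> float:
    """Rule-of-thumb training cost: ~6 FLOPs per parameter per token
    (forward plus backward pass)."""
    return 6.0 * params * tokens


def training_seconds(total_flops: float, peak_flops: float, utilization: float) -> float:
    """Wall-clock time if the hardware sustains `utilization` of `peak_flops`."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return total_flops / (peak_flops * utilization)


# Hypothetical inputs for illustration only, not measured figures:
PARAMS = 100e9      # 100B-parameter model (the example used in the article)
TOKENS = 100e9      # ASSUMED training set of 100B tokens
CPU_FLOPS = 3e12    # ASSUMED sustained throughput of one server CPU
LUMI_PEAK = 4.5e18  # HPL-AI figure quoted in the article
LUMI_UTIL = 0.3     # ASSUMED fraction of that peak a training run achieves

total = training_flops(PARAMS, TOKENS)
cpu_years = training_seconds(total, CPU_FLOPS, 1.0) / SECONDS_PER_YEAR
lumi_hours = training_seconds(total, LUMI_PEAK, LUMI_UTIL) / 3600

print(f"total compute: {total:.1e} FLOPs")
print(f"single CPU:    {cpu_years:,.0f} years")
print(f"LUMI:          {lumi_hours:,.1f} hours")
```

With these assumptions the script comes out at several hundred years on one CPU versus roughly half a day on LUMI. Real runs would differ a lot depending on the model, the data, the numerical precision, and how efficiently the job scales, so treat this as an order-of-magnitude illustration only.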

CSC may be driving the wheel, but under the hood, AMD is providing the power

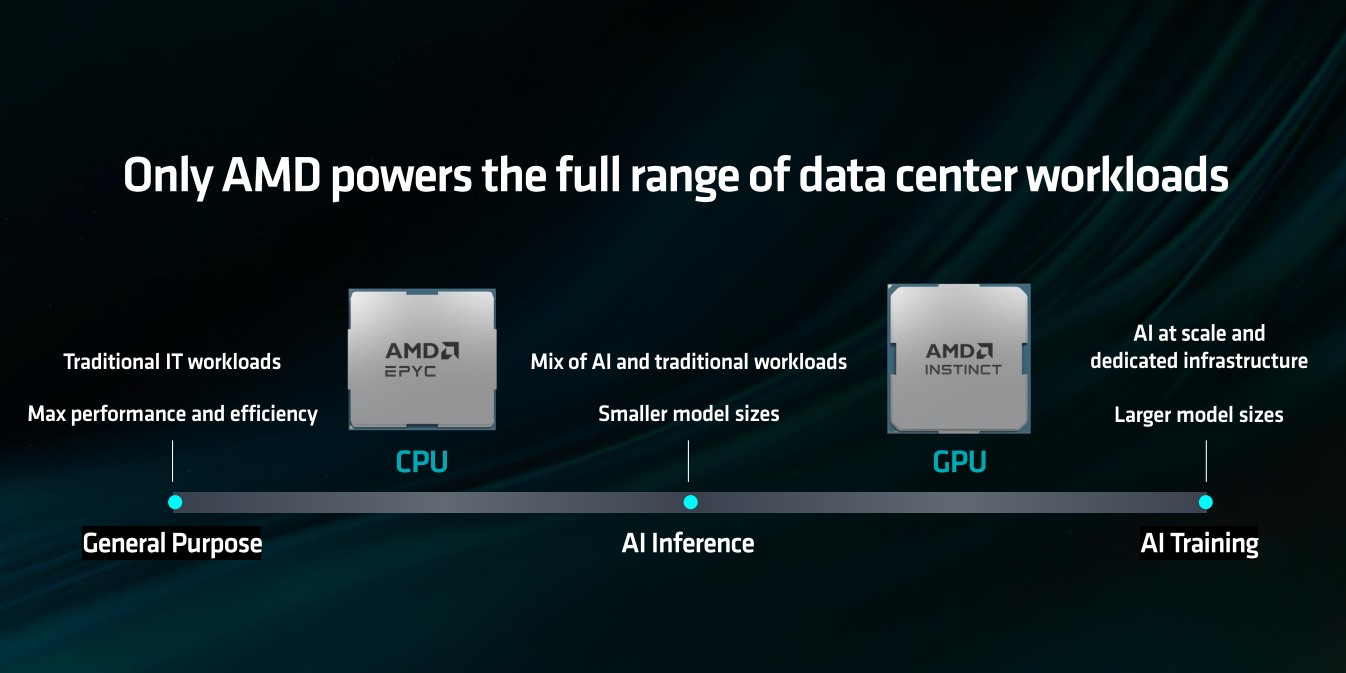

This computing power is made possible by AMD and its EPYC CPUs together with its Instinct GPUs.

AMD's EPYC CPUs are used by the world's biggest hyperscalers, including AWS, Alibaba Cloud, Microsoft Azure, Google Cloud, Meta, and Tencent, and AMD's market share has grown from 5% in 2020 to 25% in 2024.

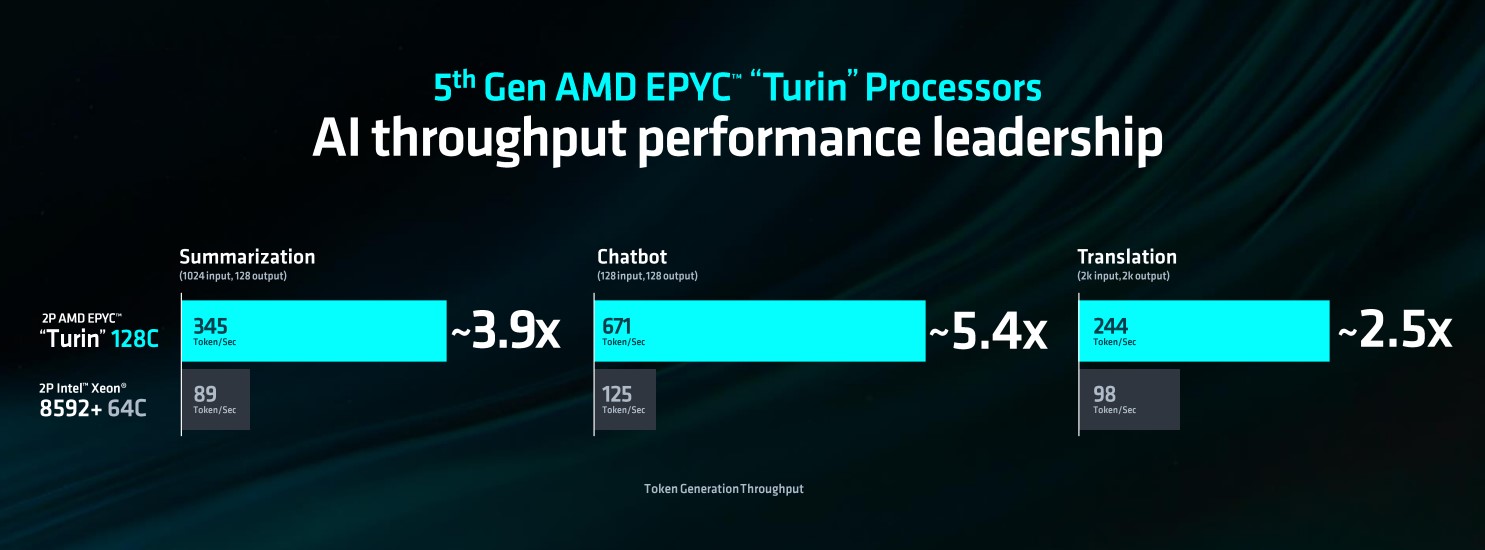

AMD's VMmark 3 performance metrics show a 2P AMD EPYC 9654 (96-core) system outperforming 2P Intel Xeon 8592+ (64-core) and 2P Intel Xeon 8180 (28-core) systems, with a score of 40.19 at 44 tiles. AMD also cites an 80% reduction in rack space and 65% lower energy consumption. Without hardware like this, building a supercomputer would be far more costly in terms of finances, space, computing power, and environmental impact.

It's this hardware that is behind many of the LLMs and neural networks powering the AI tools on the market today. There is out-of-the-box support for GPT-4, Llama 2 and 3, Hugging Face, Qwen, Stable Diffusion, and more, and the increased training capability provided by AMD hardware allows these advancements to be made. AMD is also committed to open-source projects: Hugging Face has 700,000 models that run out of the box on the AMD ROCm platform, there is full support for OpenAI Triton, and AMD ROCm is used for essential LLM kernel generation.

It cannot be stressed enough how important AMD and the teams behind supercomputers, such as CSC, are in shaping the future in an environmentally friendly and ethical way. If language models are restricted to hyperscalers like AWS, we will see much more restricted development, focused on areas that benefit fewer people. AMD and LUMI are opening up the world's leading technology to as many people as possible in the most ethical ways possible.

Speaking with the experts at LUMI

After I walked around LUMI, I got the chance to speak with the data scientists who know her best.

I was fascinated by the limiting factors beyond energy and space, and by the process of how you actually get to work with a supercomputer. Dr. Katja Mankinen, a data scientist at CSC, tells me it's as simple as putting in a request. Your project will be assessed, and if it meets the criteria, you can do everything remotely.

There is user documentation for LUMI, and although a support team is available, users still need to be willing to do some legwork and learn how to train their models, as a ready-made solution for their project might not be available. Setting up the supercomputer may have been a complex and technical project, but from a user's perspective CSC has smoothed all of that out.

I asked more about the challenges beyond compute when you get to use LUMI. Dr. Mankinen replied, "More compute is nice to have, I’m not saying we don’t need more. It’s needed for doing this groundbreaking research but data is one of the limiting factors. The amount of available data that you can actually use to train your AI systems is limited because there are all sorts of copyright licences and things you need to take into account when you start training."

Data is a limiting factor

After speaking to Dr. Mankinen, I asked Dr. Aleksi Kallio, manager of the AI Development Programme at CSC, more about how access to data shapes what you can do with LUMI.

"Right now we are studying AI ethics and what it means for our customers," he said. "There are quite a lot of responsibilities depending on your use case and whether you have higher risks. Some can be quite hard to manage. For example, you are responsible for all the inputs and outputs, so there are data security and data privacy questions. You want to be careful what you’re putting into ChatGPT for example, it could be secret. Finding a balance is difficult."

Dr. Kallio also told me that one of the biggest challenges is that AI is still so new, which leaves quite a lot of room for interpretation; no one yet knows how the rules are supposed to be interpreted.

"There will be authorities but we don't have an AI office we can go and ask questions to when things are not clear," he added. "We are making the best effort and trying to justify interpretations. If they change it, then we will change it."

Diminishing returns

It's not just data that limits results. Combining the results from so many GPUs is a challenge in itself: serving 10,000 users in one datacenter is less problematic than having one user run a single job across 10,000 GPUs.

AMD is now tackling these challenges not only on the hardware side but on the networking side too.

We have got to the end of that line of just pushing more and more parameters into a model. The challenge is how do you create and use software to integrate all the sub results together and this is really the AI engineering trickery that needs to be done.
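Combining those sub-results is usually done with a collective operation called all-reduce, in which every GPU ends up with the sum of everyone's data (for example, gradients during training). The Python sketch below simulates the classic ring all-reduce algorithm on plain lists. The function name `ring_allreduce` is hypothetical, and the sketch is an illustration of the general technique under simplified assumptions (no real network, equal-sized chunks), not a description of how LUMI or AMD's software actually implements it.

```python
def ring_allreduce(buffers: list[list[float]]) -> tuple[list[list[float]], int]:
    """Simulate a sum all-reduce over a ring of len(buffers) workers.

    Each worker's buffer is split into n equal chunks. A reduce-scatter phase
    (n - 1 steps) leaves each worker holding one fully summed chunk; an
    all-gather phase (n - 1 steps) then circulates those chunks so every
    worker ends with the complete result. Returns (results, steps).
    """
    n = len(buffers)
    size = len(buffers[0])
    if any(len(b) != size for b in buffers) or size % n != 0:
        raise ValueError("buffers must be equal length and divisible by worker count")
    chunk = size // n
    data = [list(b) for b in buffers]  # work on copies; inputs stay untouched

    def indices(c: int) -> range:
        return range(c * chunk, (c + 1) * chunk)

    def ring_step(chunk_for_sender, accumulate: bool) -> None:
        # Every worker i sends one chunk to its neighbour (i + 1) % n at once,
        # so snapshot all outgoing payloads before applying any of them.
        sends = [(i, chunk_for_sender(i)) for i in range(n)]
        payloads = [[data[i][k] for k in indices(c)] for i, c in sends]
        for (i, c), values in zip(sends, payloads):
            dst = (i + 1) % n
            for k, v in zip(indices(c), values):
                data[dst][k] = data[dst][k] + v if accumulate else v

    steps = 0
    for s in range(n - 1):  # reduce-scatter: add the incoming chunk
        ring_step(lambda i: (i - s) % n, accumulate=True)
        steps += 1
    for s in range(n - 1):  # all-gather: overwrite with the finished chunk
        ring_step(lambda i: (i + 1 - s) % n, accumulate=False)
        steps += 1
    return data, steps


workers = [[float(w + 1)] * 8 for w in range(4)]  # 4 workers, 8 values each
reduced, steps = ring_allreduce(workers)
print(steps)       # 6, i.e. 2 * (4 - 1)
print(reduced[0])  # every worker now holds 1 + 2 + 3 + 4 = 10.0 in each slot
```

In a ring, each worker sends only about twice its own buffer in total, however many workers there are, but the number of sequential communication steps is 2(n - 1). With 10,000 GPUs, the latency of a single step is paid almost 20,000 times, which is one reason large-scale training leans so heavily on fast, low-latency interconnects and on smarter (often hierarchical) collective algorithms.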

Dr. Aleksi Kallio

AMD, together with Broadcom, Intel, Google, Microsoft, HPE, and others, has been developing Ultra Accelerator Link (UALink) and Ultra Ethernet to help scale high-speed, low-latency networking in AI-ready datacenters and HPC systems.

UALink in particular is aimed at competing with Nvidia's NVLink by providing an open standard for connecting AI accelerators, addressing exactly the issues Dr. Kallio was talking about.

Removing the bottleneck in software will also mitigate the diminishing returns we're seeing in compute.

The interviews are winding up and the coffee cups are making their way to the sink. It's time to leave and, still in awe, I peer over the side of the mezzanine floor again at LUMI humming away. The scale is huge: AMD is working down to the nanometer, and CSC is conducting a chorus of CPUs and GPUs in a giant warehouse.

On the way back to the hotel I take a stroll past one of the hydroelectric power plants that power LUMI.

Just in front of the dam sits the ruin of an old castle whose construction began in 1604. It's beautiful, apart from the ugly road that runs through the middle of it. Comically, the sign for tourists proclaims, 'There has always been a road running via the castle island' (there wasn't), as if they can see the judgement in your eyes as you gaze over the ruin.

Why couldn't they have built the road just a short distance away? For the sake of progress and convenience, a piece of history was sullied. The people who built the first bridge in 1845 probably didn't appreciate the castle at the time. The bridge is a reminder to consider carefully the impact that progress can have.

It also reminded me of what Dr. Kallio had said about some of the issues with language models earlier in the day.

Once you have a language model with a basic understanding of language and of how things relate to each other, it’s a challenge to give it ethical guidelines. The problem is who gets to decide, and how you can ensure the model doesn’t break those guidelines and do things it’s not supposed to do.

"All AI systems should have a purpose. Why is the system being set up? For ChatGPT, what is the purpose? It could be anything? That’s why I see AI becoming a lot more focused and then we won’t have so many ethical issues. AI should be there to replace all the little things in life, not replacing artists, and writers," Dr. Kallio told me.

There is a lot of sensationalism about what the future holds with AI on the horizon. The amount of data continues to grow, and the amount of training required for AI will grow with it, requiring more and more energy.

Looking over the castle ruins at the cars driving over the road makes me hope that we will be more considerate as we progress with AI. Talking to the scientists working at LUMI gives me a feeling of confidence that we will.