If you ran into a robot on the street, a number of crucial giveaways would help you pick it out from a human crowd: silicone skin or unblinking eyes, to name two.

Yet, when people cannot see the robots they interact with, this distinction between what is and isn’t human becomes much hazier. That’s the premise of the Turing test, which helps determine whether or not a robot or A.I. can pass as human. In a new paper, a team of researchers has implemented a variation of this test that looks at behavior variability found in humans and animals to see whether or not mimicking this trait can make robots seem more alive.

By introducing variability in reaction time to robots’ otherwise rigidly programmed behavior, the team of researchers found that humans can be fooled into believing a robot is flesh and blood.

In the findings, published Wednesday in the journal Science Robotics, researchers report that their robot test subjects have passed the new, nonverbal Turing test. To put it simply, the robots were able to perform a task alongside a human partner in a manner so convincingly human that their partners couldn’t tell whether they were actually human or not.

“We created a situation in which users were not able to distinguish whether they were interacting with a robot controlled by a human or by a computer program,” lead author Agnieszka Wykowska, a professor of human-robot interaction at the Italian Institute of Technology, tells Inverse.

Here’s the background — Famously proposed by computer scientist Alan Turing in the 1950s, the classic Turing test — also called the Imitation Game — works by having human participants interact blindly with either another human or a robot via text conversation. Through these conversations, the main human participant must decide whether they’re chatting with another person or a machine.

If the machine is able to fool the participants into believing it’s human, then it has passed the test and, in turn, theoretically possesses some level of human intelligence. However, the team decided to deviate from this definition for a number of reasons, says Wykowska.

“In our study, we avoid talking about intelligence [because] it is complicated to determine what makes a human intelligent [and] is even less clear what machine intelligence would be,” she says. “This variation is important because it moves from the idea that humanness is associated with language to the focus on subtle human-like behavior of the robot.”

Why it matters — This version of the Turing test says less about the capabilities of the robots themselves than about how humans perceive them. Learning more about how those perceptions can change is crucial to developing better methods for human-machine interaction, Wykowska says. This could be especially important in collaborative work environments, like warehouses or operating rooms.

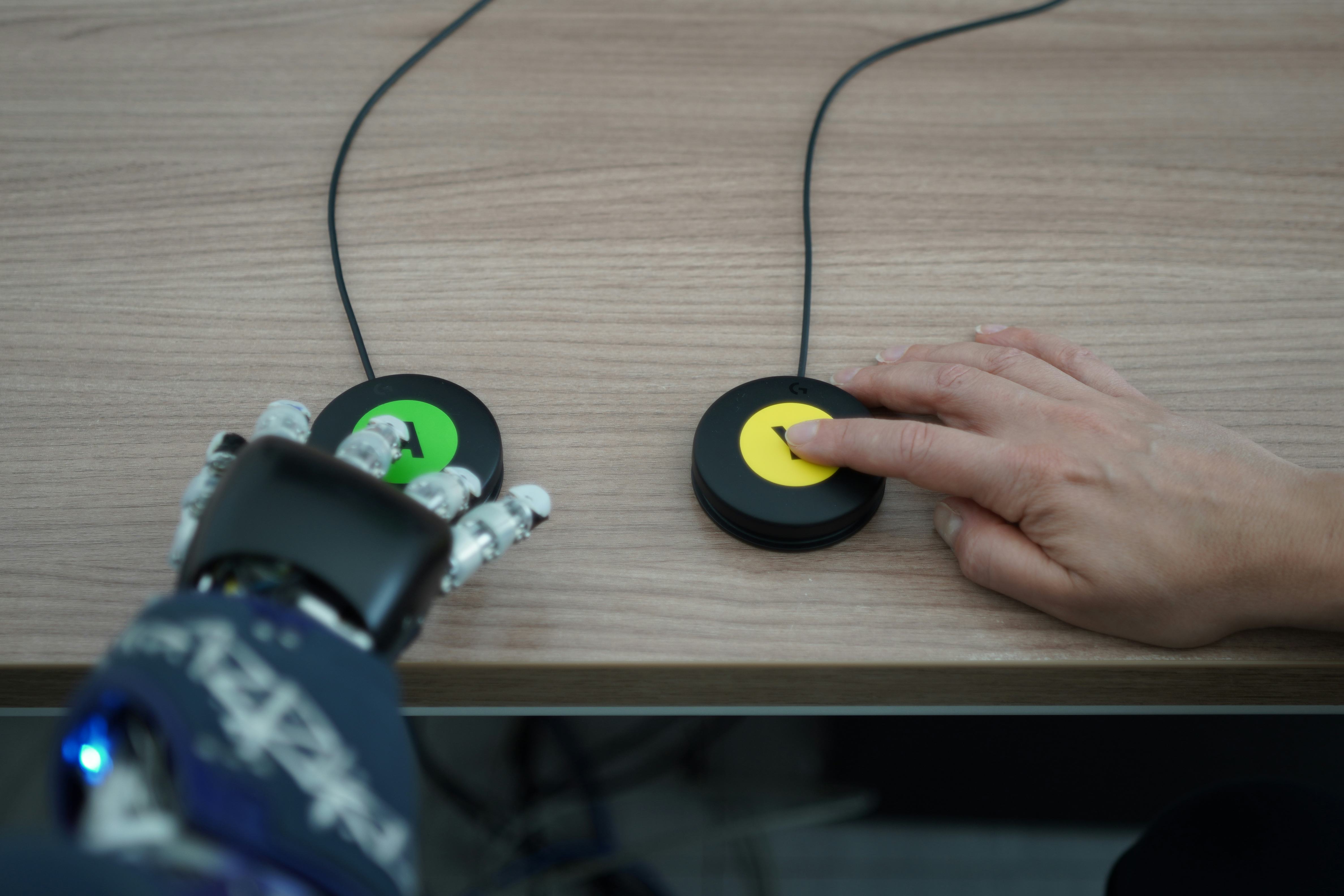

What they did — To test how behavior variability affected the perceived “humanness” of the robots, Wykowska devised an experiment similar to Simon Says in which human and robot pairs worked together to press buttons in a shape- and color-matching game. For example, a color or shape change would prompt the human participant to press a button, and the robot participant would respond by clicking the opposite shape or color.

In some cases, the robots were controlled via a preprogrammed algorithm embedded with human-like variability derived from human data sets. Other times, the robots were remotely controlled by another human outside the room. After 100 trials, the human participants were tasked with guessing whether their partner was controlled by a human or by a program.
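To make the idea of "human-like variability" concrete, here is a minimal sketch in Python. It is not the researchers' algorithm: the reaction times and the function names are made up for illustration. It only contrasts a rigid robot that always waits the same amount of time with one that draws each delay from a pool of recorded human reaction times.

```python
import random

# Hypothetical human reaction times in seconds. These values are invented
# for illustration and are NOT data from the study.
HUMAN_REACTION_TIMES = [0.42, 0.51, 0.38, 0.65, 0.47, 0.58, 0.44, 0.71]


def fixed_delay():
    """Rigid robot: always responds after the same delay."""
    return 0.5


def humanlike_delay(rng, samples=HUMAN_REACTION_TIMES):
    """Variable robot: draws its delay from recorded human reaction times."""
    return rng.choice(samples)


# Simulate 100 trials with a seeded generator so the run is repeatable.
rng = random.Random(0)
delays = [humanlike_delay(rng) for _ in range(100)]
```

Across the 100 simulated trials, the second robot's response times vary the way the human sample does, while the first robot's never change.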

The human users could not reliably tell when a computer program was in control: they guessed correctly 50 percent of the time, no better than chance. Wykowska says this is sufficient evidence that the pre-programmed robots passed this Turing test.

“We can say that the robot passed our nonverbal version of the test because human participants, our independent observers, were not able to distinguish whether the behavior of the [robot] was generated by another human being or a computer program,” she says.

On the other hand, the participants were able to guess when a human was in control more than 50 percent of the time, which Wykowska says is evidence that humans are sensitive to subtle changes in human-like behavioral variability.
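Why does 50 percent matter? With only two possible answers, a coin flip is right half the time. The sketch below is one common way to check whether a guessing score differs from chance. It uses an exact binomial test; the article does not say which statistical analysis the team ran, so treat this as an assumption. The counts in the usage lines are hypothetical, not the study's results.

```python
from math import comb


def binomial_two_sided_p(correct, total, chance=0.5):
    """Exact two-sided binomial p-value against a chance level."""
    # Probability of every possible number of correct guesses under chance.
    probs = [
        comb(total, k) * chance**k * (1 - chance) ** (total - k)
        for k in range(total + 1)
    ]
    observed = probs[correct]
    # Add up all outcomes that are at least as unlikely as the observed one.
    p_value = sum(p for p in probs if p <= observed * (1 + 1e-9))
    return min(1.0, p_value)


# Hypothetical counts for illustration only.
p_at_chance = binomial_two_sided_p(10, 20)     # half right: looks like guessing
p_above_chance = binomial_two_sided_p(17, 20)  # mostly right: unlikely by luck
```

A large p-value means the score is consistent with coin-flipping, which is the sense in which the program-controlled robot "passed." A small one means the guessers were picking up on something real.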

What’s next — These human-like robots are far from convincing enough to masquerade as humans right now, but Wykowska says this work will help inform how human-machine collaborative tasks are designed to make them safer and more effective. For example, Wykowska says, it may be beneficial to program co-working robots with more human-like behavior to improve collaboration, but a robot in an operating room that’s used only as a tool is safer when it’s more predictable.