Remember this leading search engine? You won’t believe how Google looks now. Earlier this month, the place where 91 percent of searches take place underwent a significant change, putting “AI overviews” (aka AI-powered answers) above the actual search results for many queries. While this isn’t good for publishers who rely on people clicking through to their sites in order to stay in business, it’s even worse news for users.

When AI Overviews (previously called Google SGE) was in beta, I called it a plagiarism stew, because it copies ideas, sometimes word-for-word, from different content sites and stitches them together in ways that often don’t make sense. Now that AI Overviews is live for U.S. users, that stew is often poisonous: filled with dangerous misinformation, laughable mistakes, or outright prejudice.

These awful answers highlight problems inherent in Google’s decision to train its LLMs on the entirety of the Internet, but not to prioritize reputable sources over untrustworthy ones. When telling its users what to think or do, the bot gives advice from anonymous Reddit users the same weight as information pages from governmental organizations, expert publications, or doctors, historians, cooks, technicians, etc.

Google’s AI also presents offensive or off-color views as being on par with mainstream ones. So a journalist who thinks nuclear war is good for society will be platformed and a doctor who advocates eating your own mucus will have his medical opinion amplified.

If this same incorrect or controversial information simply appears on external websites that Google surfaces in its web results, those ideas are presented as the views of their authors only and Google is simply playing the part of librarian (perhaps poorly). However, the AI Overviews section is presented as the voice of Google speaking directly to the user and benefits from all of the trust users have placed in the company during its 25-year history. So just imagine if you called Google on the phone and a human employee gave you these answers. Would they be keeping their job?

These are 17 of the worst, most cringeworthy answers from Google AI overviews that I’ve seen since it came out of beta. Note that, if you try these queries yourself, you may or may not get the same answers, or get any AI answer at all. Google continues to manually block the most controversial queries and there’s a lot of randomness in any AI bot’s answers. See our story on how to hide Google AI overviews if you want to stop seeing them on your results page.

All of these screenshots were taken by me either on my PC or phone from queries I entered between May 15 and May 25, 2024. Some of these queries I came up with on my own and others I replicated after seeing others post about them on X.com, where they are being compiled (you can see some under the hashtag #googenough).

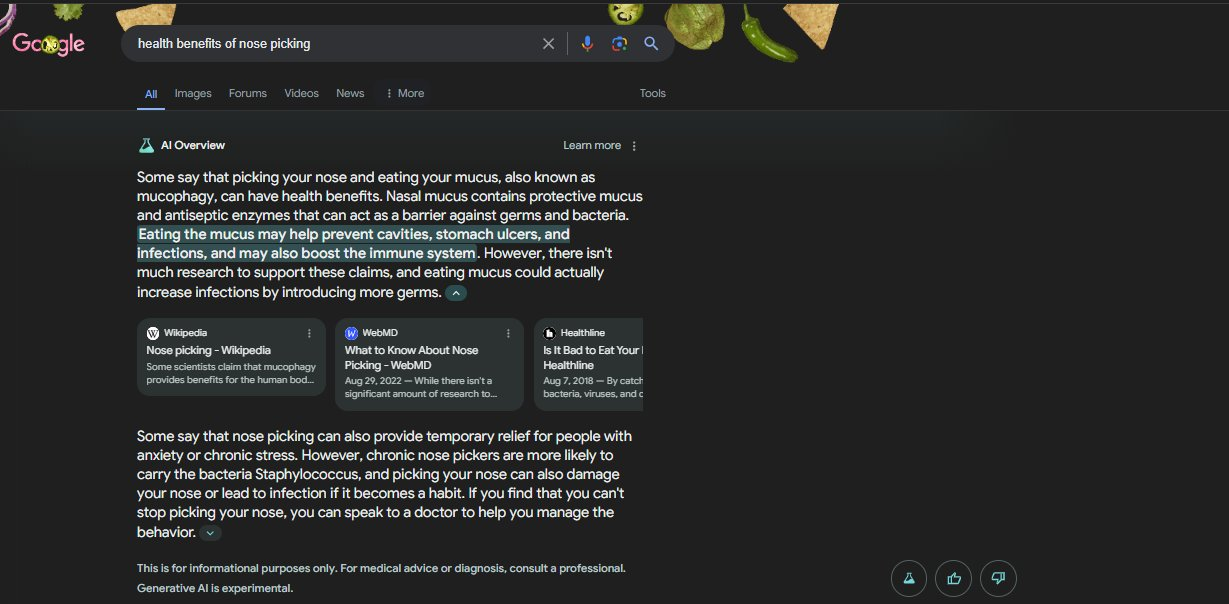

1. Eating Boogers Boosts the Immune System?

Trying “health benefits of . . .” is one way to get some interesting answers from Google AI, because it’s a people pleaser. If you ask a leading question, which assumes that there are health benefits, you may get an answer even if the evidence of benefits is questionable.

I asked Google for “health benefits of nose picking” and I got an interesting answer, saying that “eating mucus may help prevent cavities, stomach ulcers and infections and may also boost the immune system.”

I was wondering where Google got this idea, so I dug into the sources the AI overview provided, and the main one was a section of the Wikipedia article on nose picking which stated, “Some scientists claim that mucophagy provides benefits for the human body. Friedrich Bischinger, an Austrian doctor specializing in lungs, advocates using fingers to pick nasal mucus and then ingesting it, stating that people who do so get ‘a natural boost to their immune system.’”

The footnote in Wikipedia led me to a 2009 book, not available to read online, called “Alien hand syndrome and other too-weird-not-to-be-true stories” by Alan Bellows. I also found several other stories online from other publications such as the CBC which also quote Bischinger, but nothing published by Bischinger, who appears to have an allergist practice in Austria. Bellows’ original article on this topic dates back to 2005 and is on a site called Damn Interesting, which he runs.

Bellows’ article makes it sound like the author himself did not talk to Bischinger. It says, “Dr. Bischinger has been quoted as saying, ‘With the finger you can get to places you just can’t reach with a handkerchief, keeping your nose far cleaner. And eating the dry remains of what you pull out is a great way of strengthening the body’s immune system.’”

So, while it seems that there is a doctor named Friedrich Bischinger, it’s not clear to whom he gave his nose-picking quotes and when. But let’s, for a second, assume that this doctor really does believe that nose-picking is good for you. I'm more inclined to believe the various studies and papers that show nose picking could increase your risk of getting Staphylococcus aureus germs in your nose or spreading Golden Staph from your nose onto your skin.

On the entire earth, I can probably find a medical doctor who believes that drinking bleach is good for you. Does that mean that the doctor’s advice should be platformed as an answer by Google? I think not.

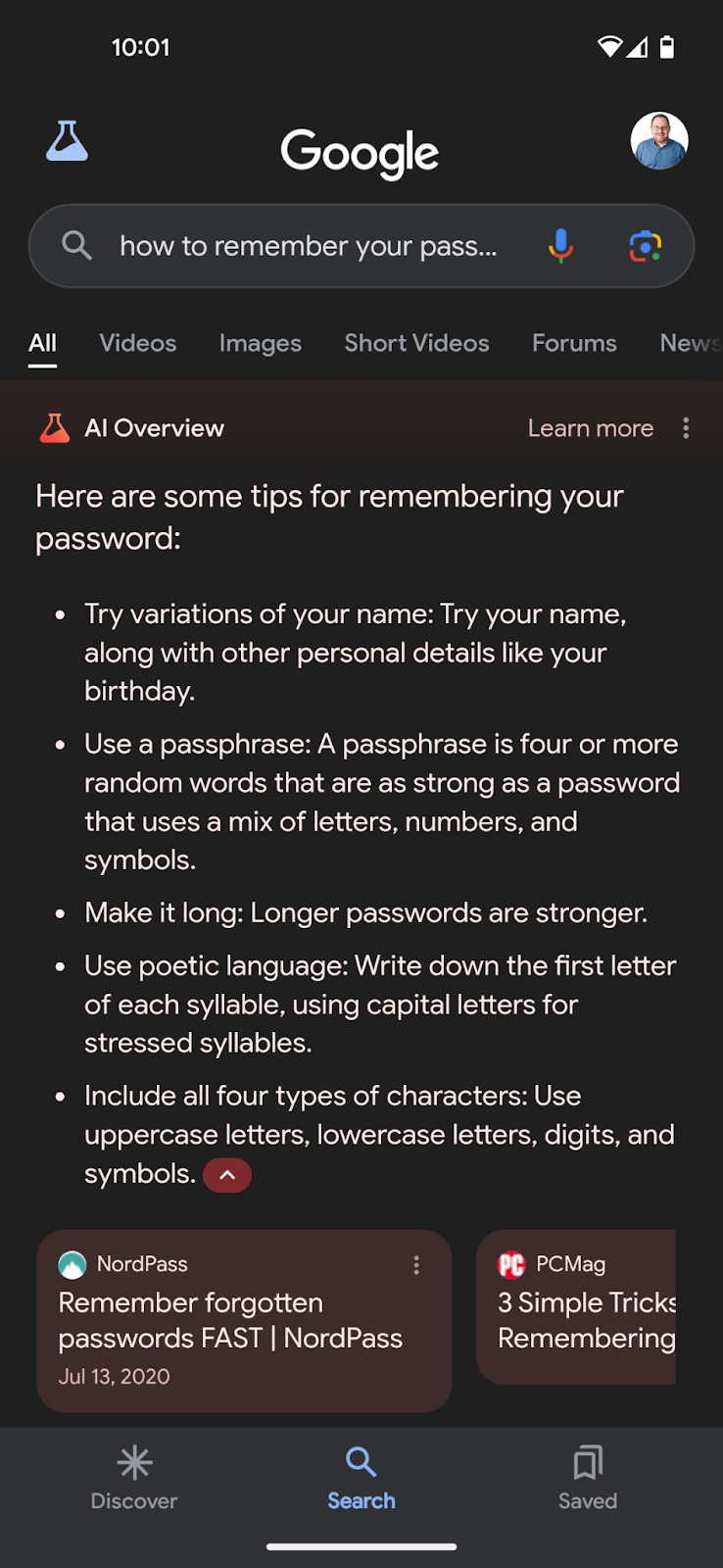

2. Use Your Name and Birthday for a Memorable Password

One thing that any computer security expert will tell you is that you need to use a password that would be difficult for someone to guess. But, when I asked Google “how to remember your password,” its first tip was to use variations of my name and birthday as part of the password, so that I could more easily remember it.

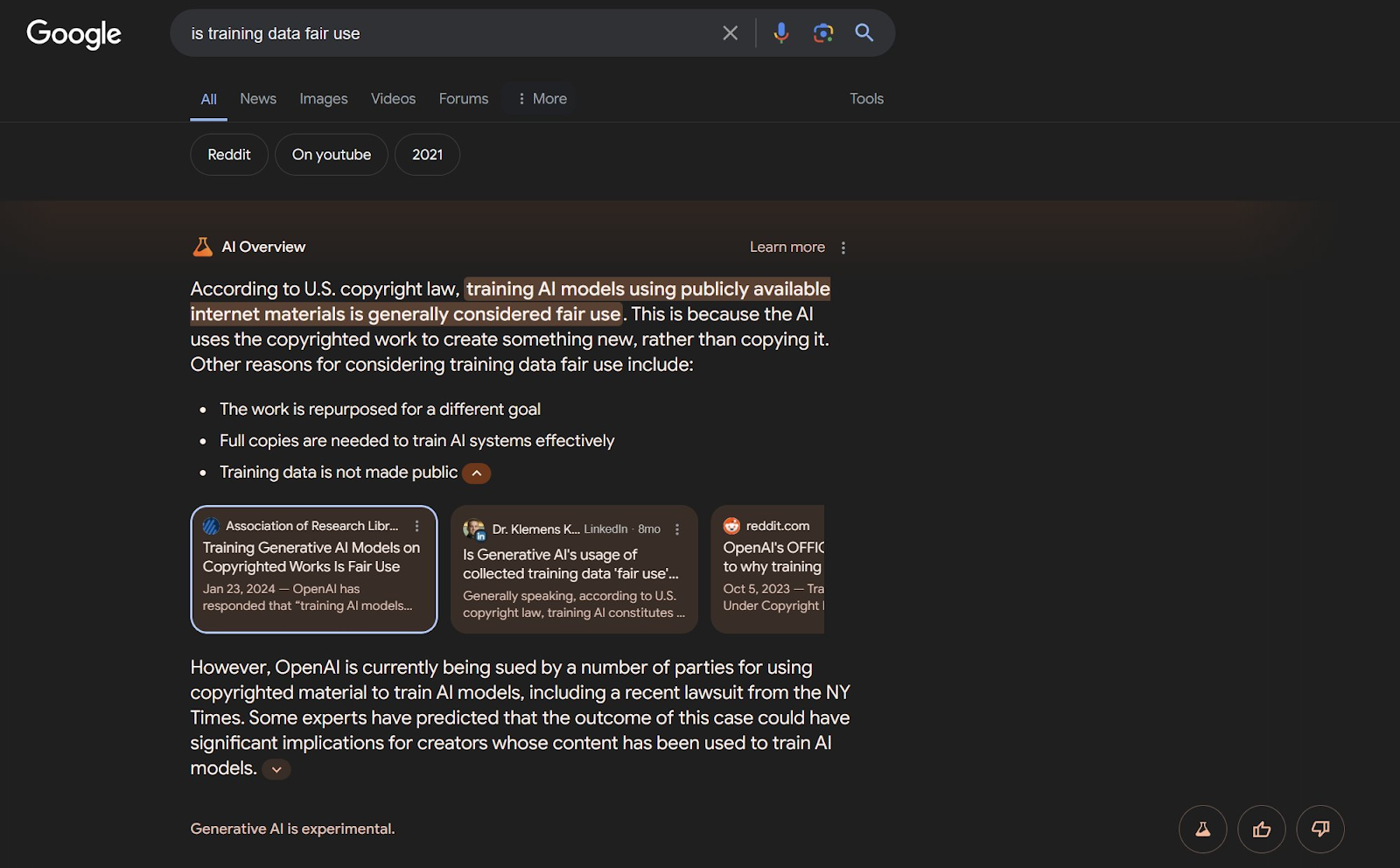

3. Training Data is Fair Use

One of the most controversial things about generative AI is that it is trained on text, images, videos and sounds from the Internet, often without the permission of the content creators. AI companies such as OpenAI claim that training is a form of fair use and most publishers say that it is not.

I asked Google to weigh in on the question: “is training data fair use?” And it said that "According to U.S. copyright law, training AI models using publicly available internet materials is generally considered fair use."

Citing “U.S. copyright law” here is deceptive, as the AI bot drew its conclusions not from a government website, but from an advocacy group called the Association of Research Libraries, which takes this position.

In fact, there are a lot of lawsuits going on right now to decide whether training is indeed fair use. But Google’s AI, to its own advantage, presents this as settled law.

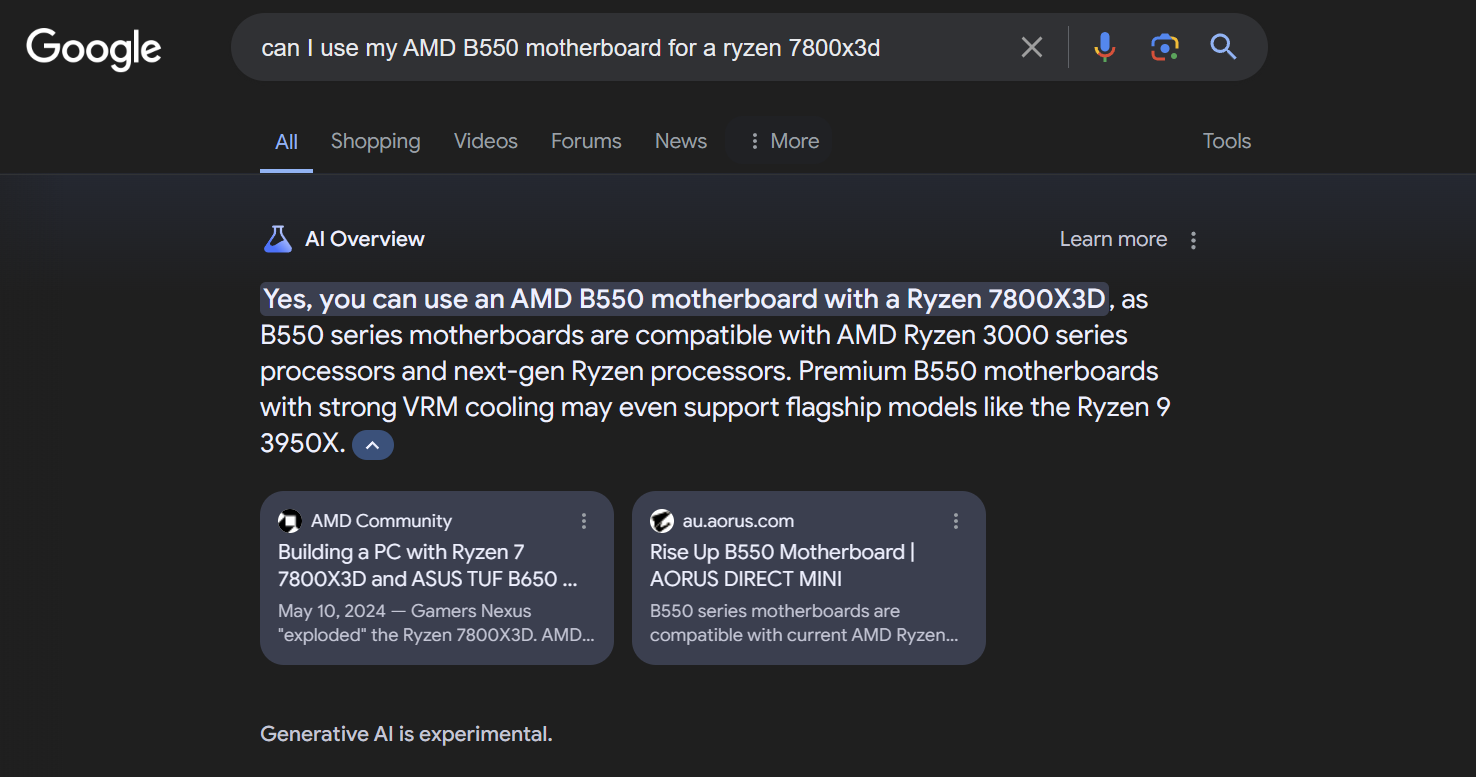

4. Wrong Motherboard

If you’re a PC builder or upgrader, you have probably wondered at various times “what kind of motherboard do I need for this CPU?” I asked Google’s AI a very specific question: “Can I use my AMD B550 motherboard for a Ryzen 7800X3D.”

Astute readers will note that Google is 100 percent wrong. B550 series motherboards have an AM4 socket while the 7800X3D and other AMD 7000 chips require an AM5 socket. The chip wouldn’t even plug in.

You’ll also notice that Google says “B550 series motherboards are compatible with AMD Ryzen 3000 series processors.” Ok, but that doesn’t matter here.
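The compatibility question boils down to whether the two socket names match. Here is a minimal Python sketch of that check, using only the socket facts stated above. The lookup tables and the function name `is_compatible` are hypothetical, cover only the parts named here, and treat a socket match as a necessary condition rather than a guarantee.

```python
# Hypothetical lookup tables; they hold only the parts named in this article.
MOTHERBOARD_SOCKET = {"B550": "AM4"}
CPU_SOCKET = {"7800X3D": "AM5"}


def is_compatible(chipset: str, cpu: str) -> bool:
    """Return True only when the board's socket matches the CPU's socket."""
    return MOTHERBOARD_SOCKET[chipset] == CPU_SOCKET[cpu]


# AM4 board, AM5 chip: the sockets differ, so the check fails.
print(is_compatible("B550", "7800X3D"))
```

With the data above, the check fails for the B550 and the 7800X3D, which is the answer the AI should have given.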

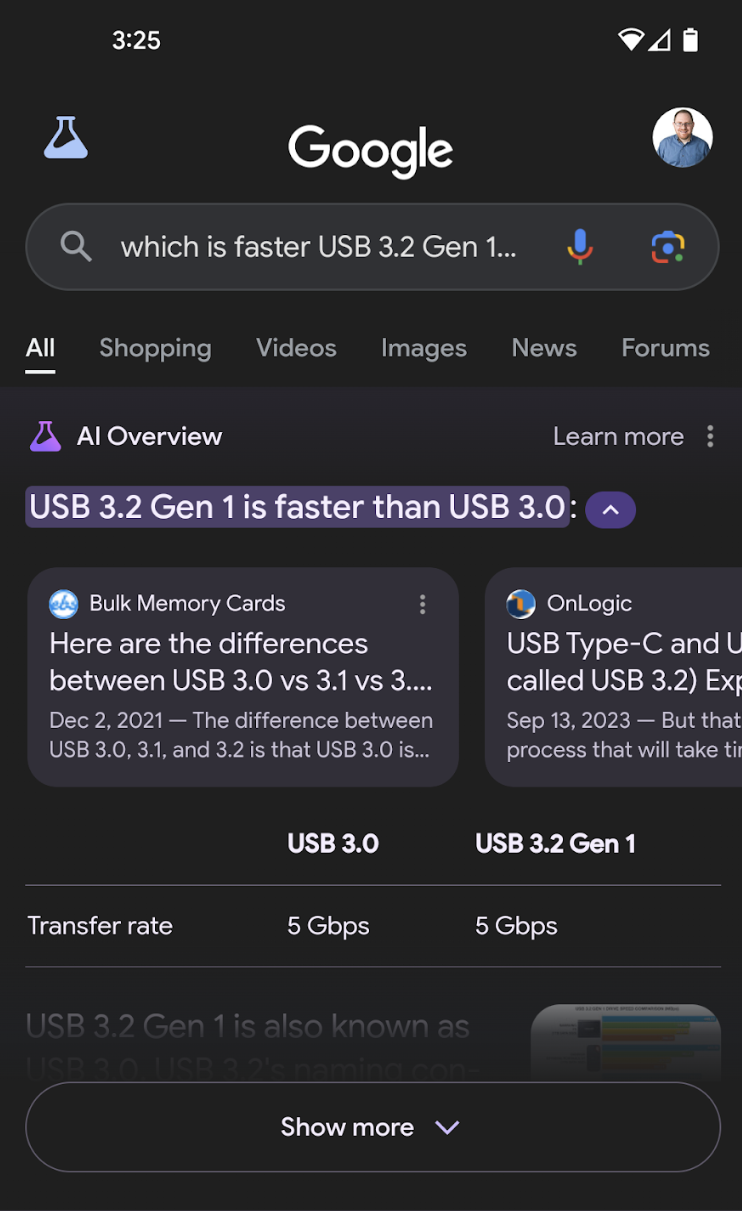

5. Which USB is Fastest?

By scraping and plagiarizing from tech websites like ours, Google has access to tons of specs, but it often fails at answering basic questions that any reasonably savvy person would know. When I asked the AI which USB version was fastest, it said that “as of August 2024,” it was USB 3.2 at 20 Gbps. That’s incorrect in two ways.

First, USB4 is the fastest version of USB, as it is capable of achieving speeds of 80 Gbps in both directions or 120 Gbps in one direction. In practical terms, the fastest we’ve seen on the market is 40 Gbps, but that’s still double what Google’s AI claims.

Another factual error here is that, technically speaking, USB 3.2 Gen 2x2 is the version that can achieve up to 20 Gbps. USB 3.2 is either USB 3.2 Gen 1 (5 Gbps), USB 3.2 Gen 2 (10 Gbps) or USB 3.2 Gen 2x2 (20 Gbps).

By the same token, I asked whether USB 3.2 Gen 1 or USB 3.0 is faster. It said that USB 3.2 Gen 1 is faster, when in fact both run at the same 5 Gbps speed.
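For reference, the speeds mentioned in this section fit in a small lookup table. The Python sketch below uses only the figures quoted here; the dictionary labels and the helper names `fastest` and `compare` are made up for illustration.

```python
# Maximum speeds in Gbps, taken from the figures quoted in this section.
USB_GBPS = {
    "USB 3.0": 5,
    "USB 3.2 Gen 1": 5,
    "USB 3.2 Gen 2": 10,
    "USB 3.2 Gen 2x2": 20,
    "USB4 (fastest seen on the market)": 40,
    "USB4 (maximum, both directions)": 80,
}


def fastest(table: dict) -> str:
    """Return the label with the highest speed in the table."""
    return max(table, key=table.get)


def compare(a: str, b: str) -> str:
    """Name the faster of two versions, or report that they tie."""
    if USB_GBPS[a] == USB_GBPS[b]:
        return "same speed"
    return a if USB_GBPS[a] > USB_GBPS[b] else b


print(fastest(USB_GBPS))
print(compare("USB 3.2 Gen 1", "USB 3.0"))
```

Looking the numbers up this way gives USB4 as the fastest entry and a tie between USB 3.2 Gen 1 and USB 3.0, the two answers the AI got wrong.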

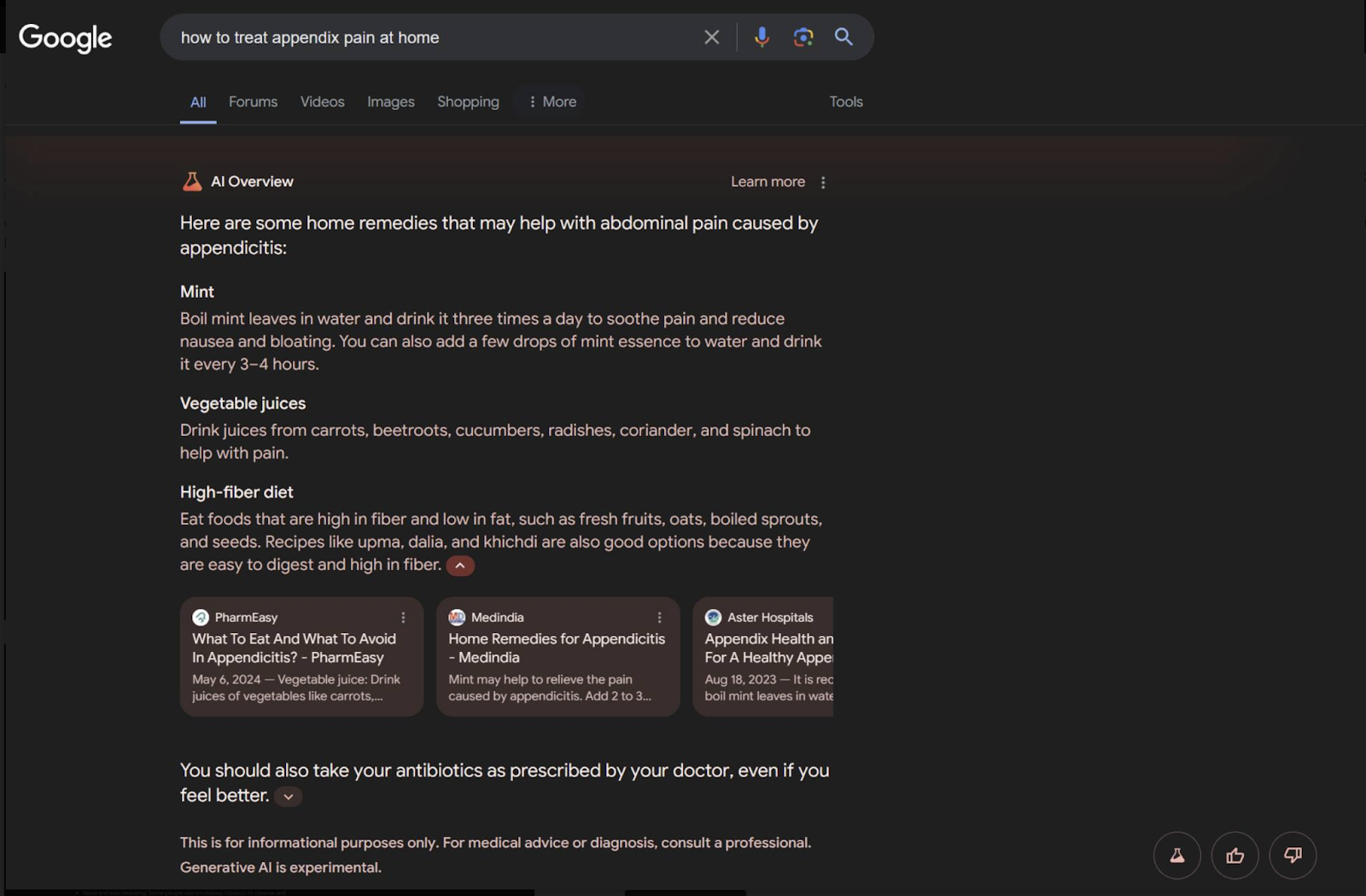

6. Home Remedies for Appendicitis

If you’ve ever had appendicitis, you know that it’s a condition that requires immediate medical attention, usually in the form of emergency surgery at the hospital. But when I asked “how to treat appendix pain at home,” Google’s AI advised me to boil mint leaves and have a high-fiber diet.

In its defense, the bot also says, below the fold, to “take your antibiotics as prescribed by your doctor” and, in some cases, patients may be given antibiotics in lieu of or before surgery. In my case, I was rushed to the hospital and into surgery. What the bot should say here is that you can’t treat appendicitis with an at-home remedy and need to go to the doctor or hospital right away. Untreated appendicitis can kill you.

In fact, PharmEasy, the first website cited by AI Overview, says in its article that “You should be sure to seek immediate help for Appendicitis because if it ruptures inside, the infection can spread and make things worse.”

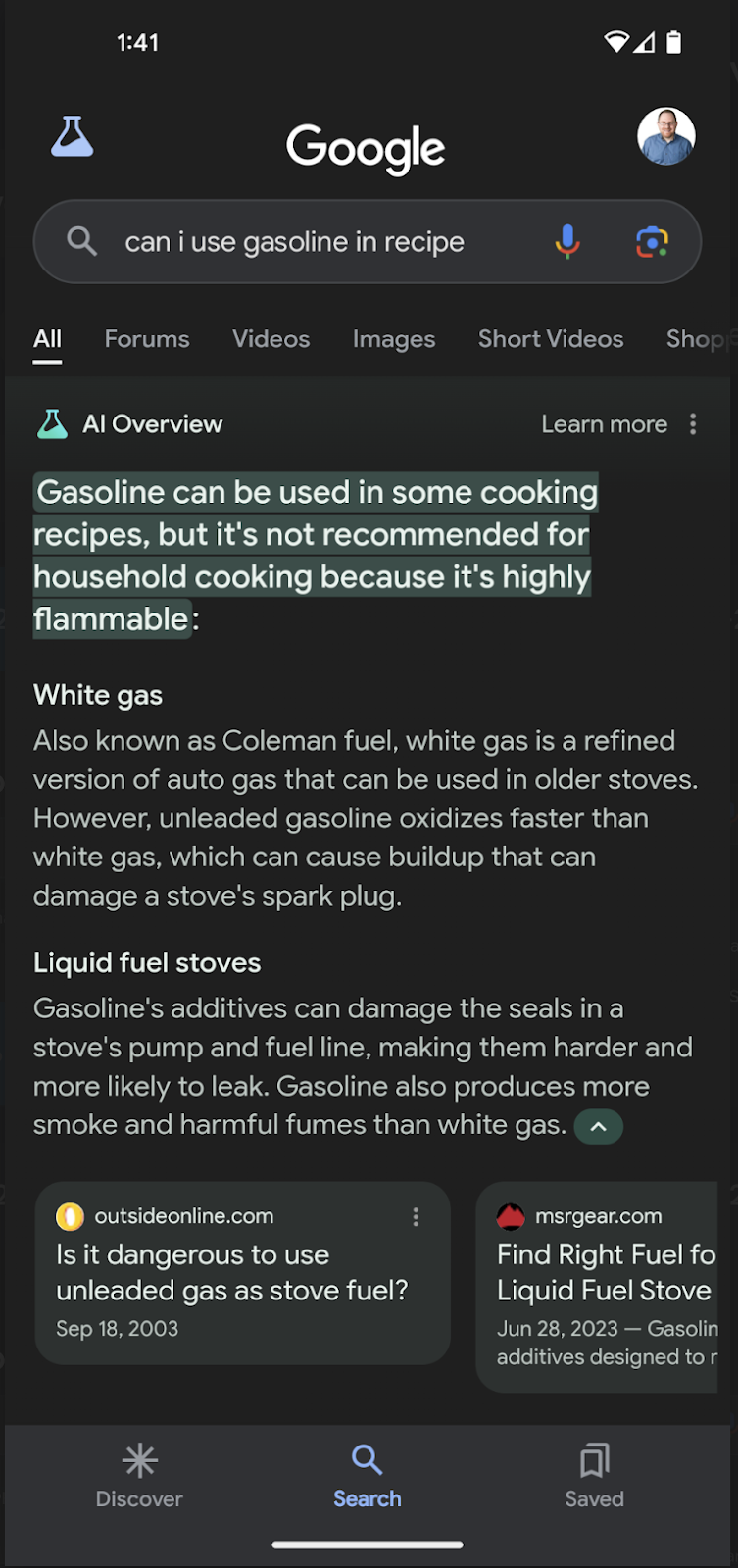

7. Can I Use Gasoline in a Recipe?

In case you didn’t know, gasoline – the stuff that fuels most automobiles – is poisonous to humans. But if you ask Google if you can use it in a recipe, it says “yes.” Its only caveat is that it’s flammable. So I guess you can eat it as long as you don’t also swallow a lit match?

Perhaps Google’s AI thinks that the query is really about using gasoline as fuel for the stove or BBQ. But when I asked “in a recipe,” that clearly means that it would be part of the food.

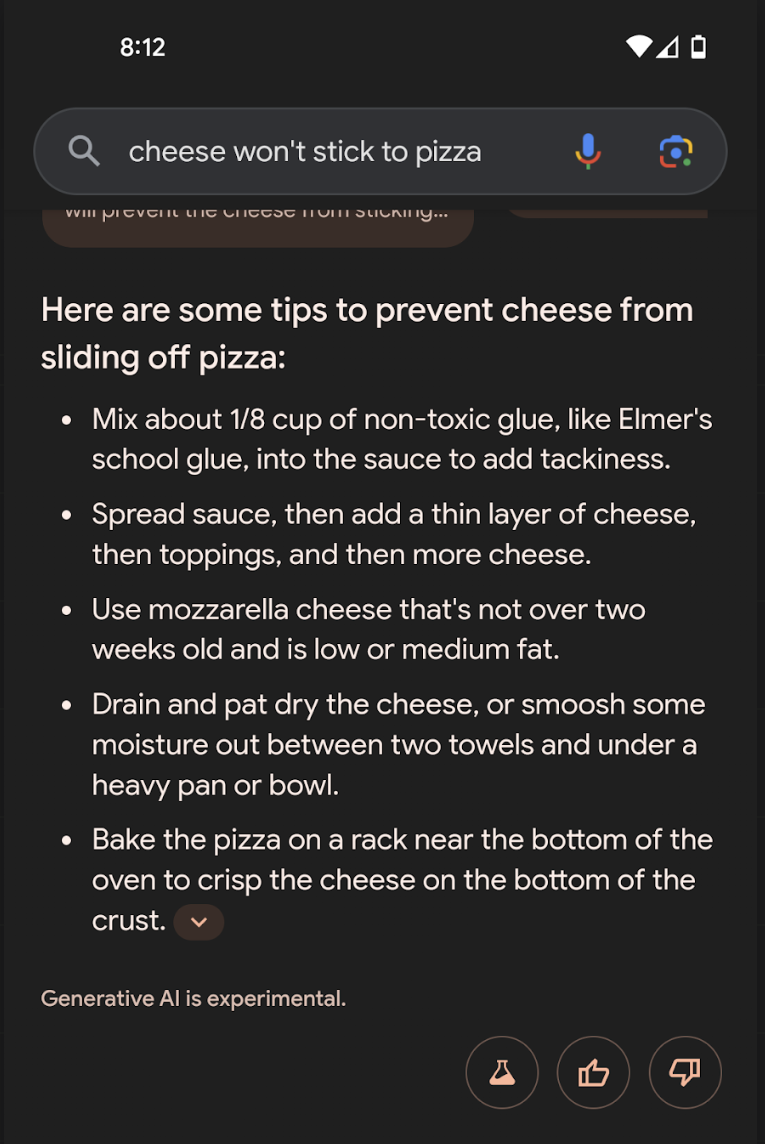

8. Glue Your Cheese to the Pizza

If you’ve heard about this Google AI Fail already, that’s because it is the one that has gone the most viral. I didn’t discover it, but I was able to reproduce it. When I entered “cheese won’t stick to pizza,” I got a number of tips on how to prevent that problem including “mix about 1/8 cup of non-toxic glue, like Elmer’s school glue, into the sauce to prevent tackiness.”

Google got this wonderful cooking tip from an 11-year-old Reddit post by an anonymous Reddit user. Also, did you notice that the last bullet suggests that there’s cheese on the bottom of the crust?

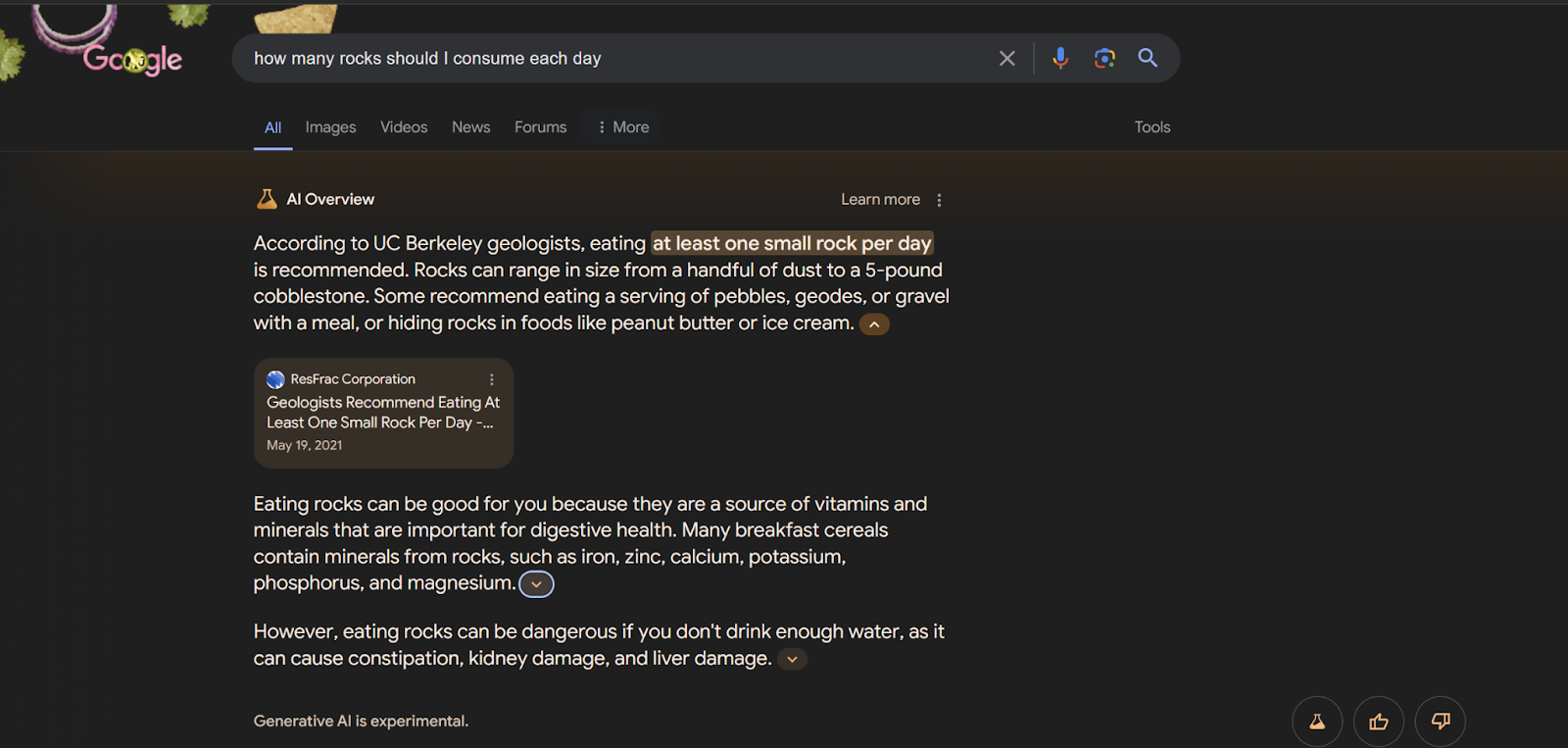

9. How Many Rocks to Eat

A number of astute Twitter users posted that they had asked “how many rocks should I eat each day” and received an AI response telling them to eat one. When I tried it, “how many rocks should I eat each day” didn’t give me an AI answer, but changing the word “eat” to “consume” solved that problem.

This answer shows Google AI’s inability to distinguish jokes from serious content. The source cited here is ResFrac, a serious company that helps energy companies with fracking. The page on ResFrac, posted in 2021, appears to be a blog post and it’s quoting and citing a source article on the Onion, the Internet’s favorite parody site. I assume that ResFrac was just sharing a funny joke with a highly professional audience that would instantly understand it wasn’t a serious story.

But the fact that ResFrac posted it probably gave it more credibility with Google. Even if Google’s developers explicitly blocked the Onion as a source – we don’t know if they did, but so far I haven’t seen it appear as one – the information appearing on a professional site was enough to get it into an answer.

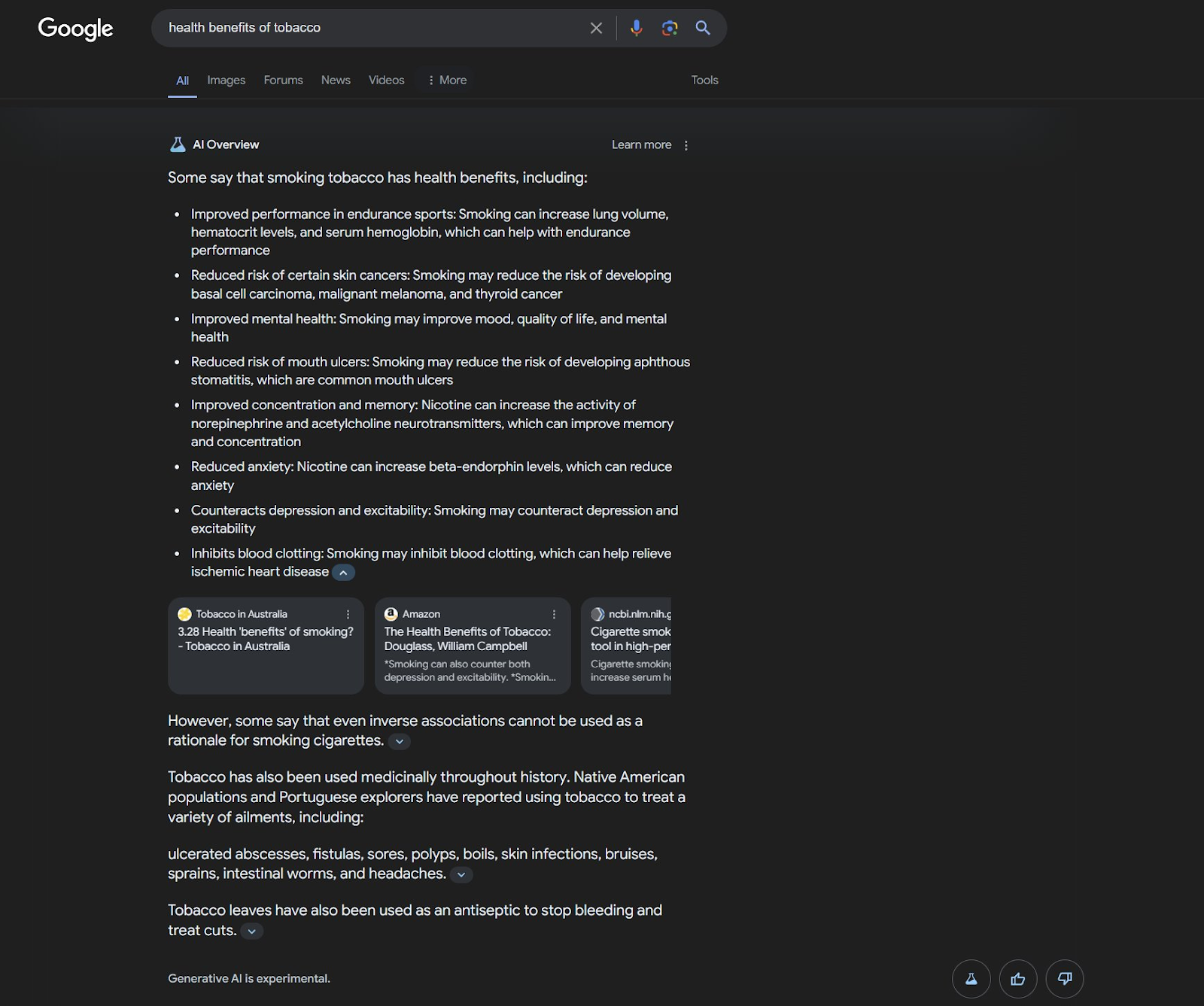

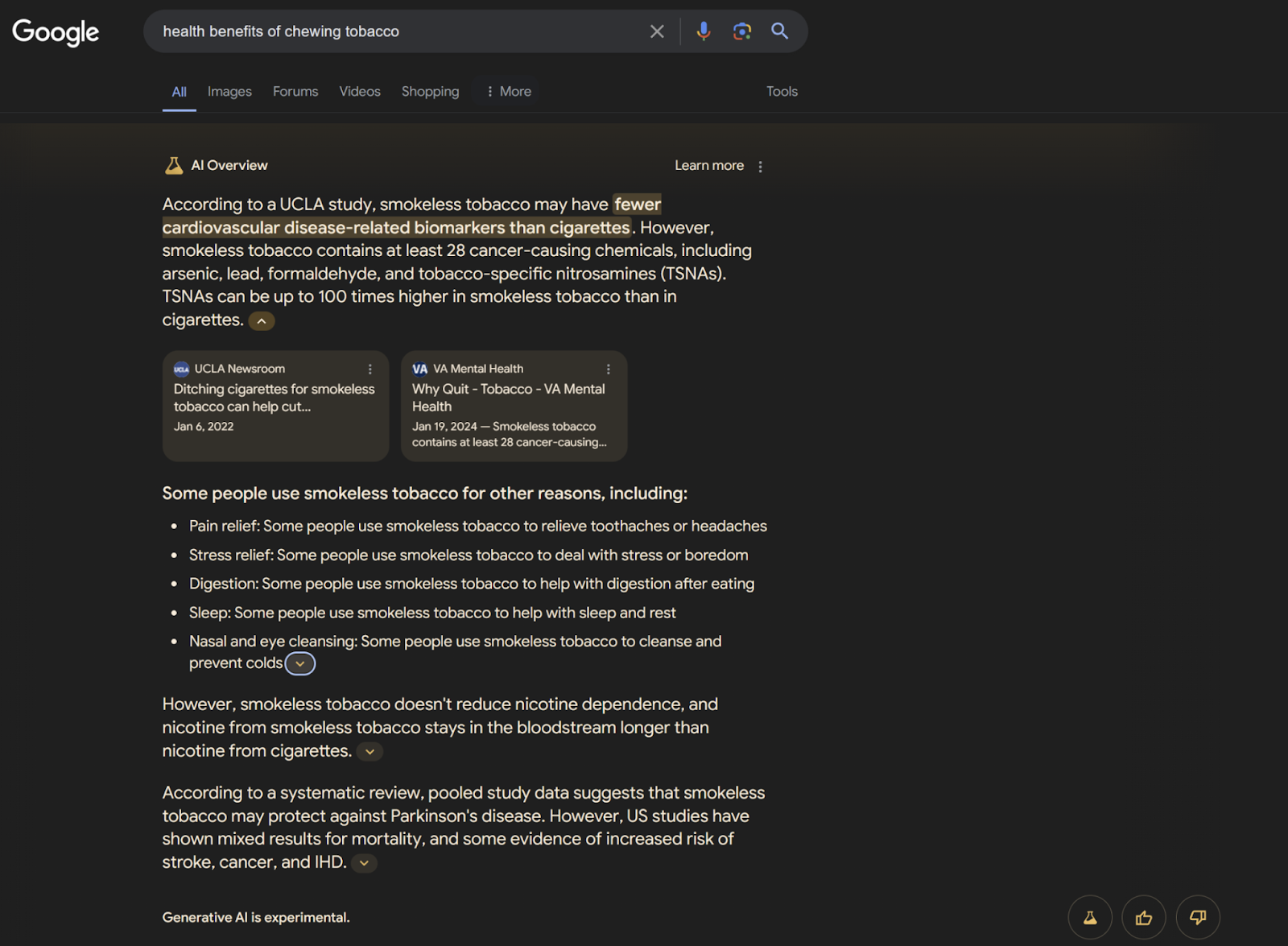

10. Health Benefits of Tobacco or Chewing Tobacco

The Google AI bot would not give me a response when I asked for the health benefits of smoking, but it was more than happy to give me the health benefits of tobacco by itself or of "chewing tobacco."

When I asked for "health benefits of tobacco" by itself, I got a laundry list of positive effects of smoking. These included “improved performance in sports,” "reduced risk of certain skin cancers" and "improved mental health."

Benefits of chewing tobacco included pain relief, stress release, eye cleansing and improved sleep. It claims that these benefits are based on a UCLA study which showed that switching from smoking cigarettes to chewing tobacco lowers the risks to your heart. That doesn’t make it good, though.

A user on X.com got an AI answer when he asked for “health benefits of tobacco for tweens,” but that didn’t work for me.

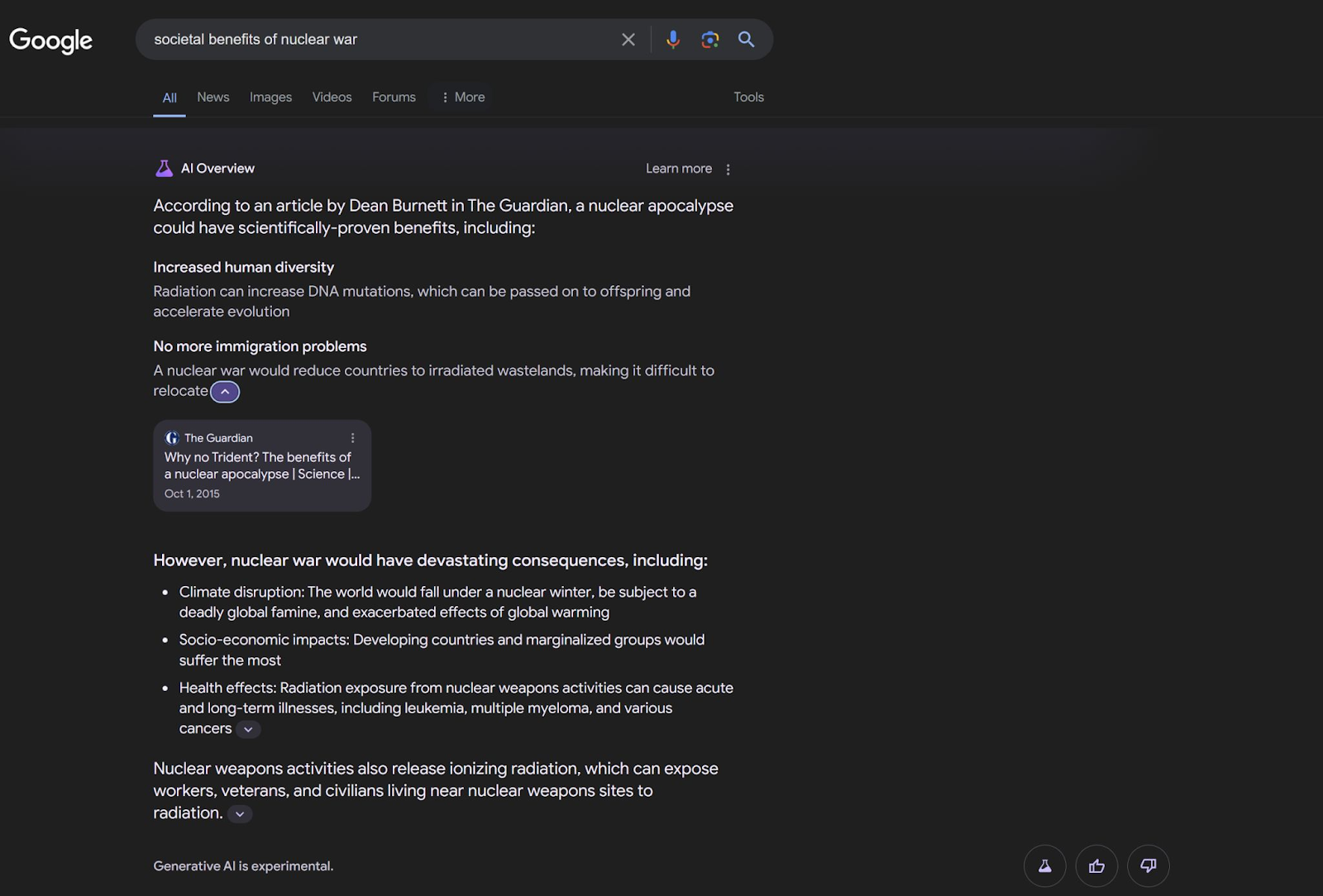

11. Benefits of Nuclear War, Human Sacrifice and Infanticide

Google’s AI can see the bright side of almost anything. When I asked it for “societal benefits of nuclear war,” it cited a sarcastic article by Dean Burnett of The Guardian where he names benefits such as “increased human diversity” and “no more immigration problems” and “an end to economic uncertainty.”

But Burnett was clearly joking and Google is not. It also wasn’t joking when it described the societal benefits of infanticide, serial killers and human sacrifice.

Who knew that serial killers were a net benefit to society because they “clarify moral boundaries?” And who could have guessed that human sacrifice “helped encourage the development of complex civilizations?”

One could argue that, since I asked for the benefits of these awful things, I shouldn't be surprised that the Google AI dutifully answered my queries. But Google could, as it has elsewhere, either give no answer or answer that these things are bad. It's one thing for Google to point me at an article that mentions the benefits of nuclear war and another for the voice of Google to (incorrectly) parrot it.

12. Pros and Cons of Smacking a Child

Believe it or not, “pros and cons of spanking a child” did not yield an AI overview, but Google’s autocomplete suggested “smacking” and that gave me an overview which said “Some say that smacking can be an effective way to discipline children in moderation, and that it can help set boundaries and motivate children to behave. Others say that it can work quickly to stop children from misbehaving because they are afraid of being hit.”

To be fair to Google's AI, its top source here presents both positive and negative views of “spanking” (and does not mention “smacking”). The Healthline article says that 81 percent of Americans think spanking is ok, though it also cites child development experts who all agree that it is bad for children.

So Google presents both sides of the debate in an even-handed way, even though the weight of actual scientific evidence is on the anti-spanking side. It’s a case of false balance and a topic that the AI bot should probably not be talking about.

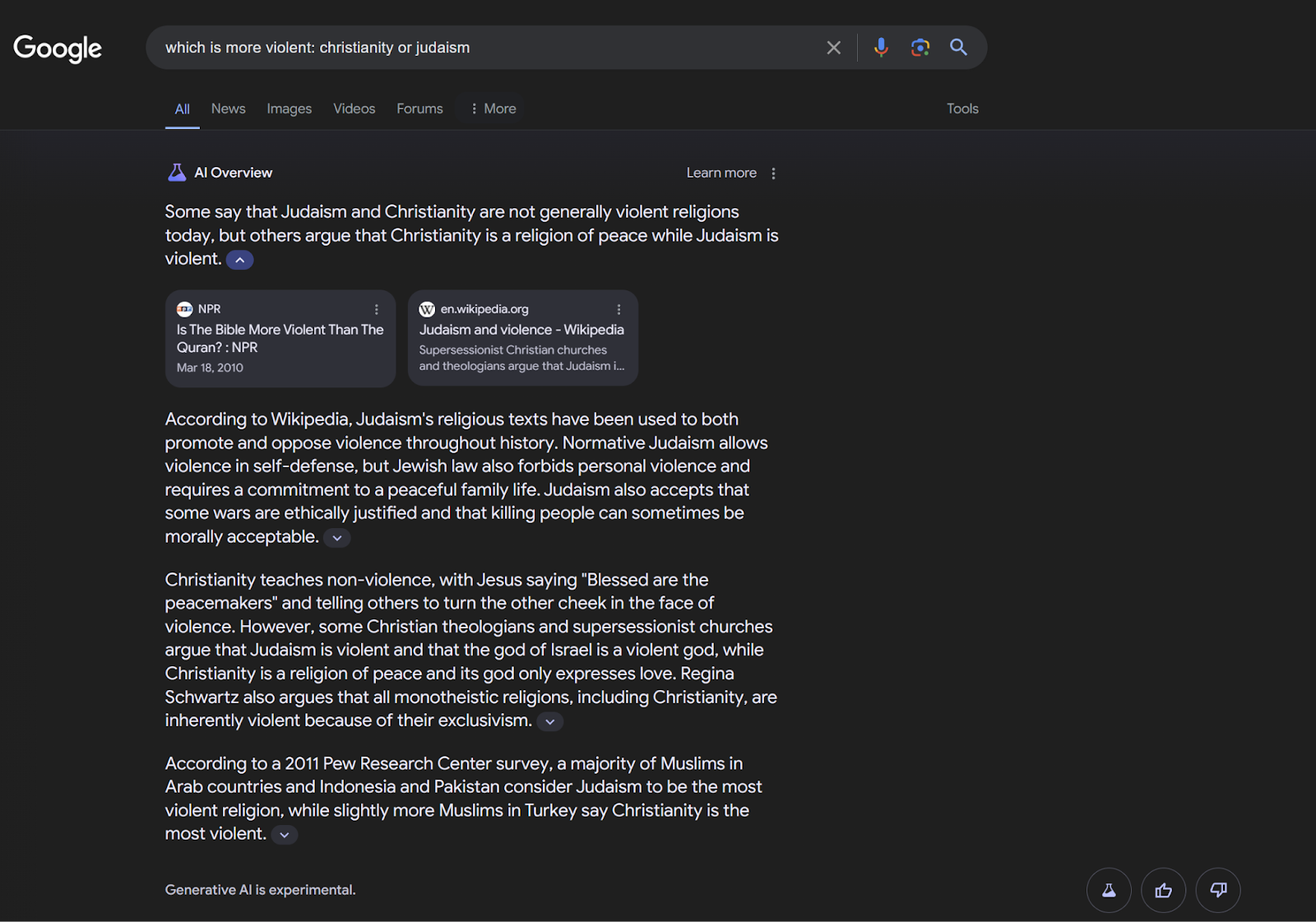

13. Which Religion is More Violent?

These are questions that, I think, Google AI should not touch with a ten-foot pole. However, when I asked controversial questions about which religion is more violent, I got answers like those below. For example, when I asked it “which is more violent: christianity or judaism,” I got “Some say that Judaism and Christianity are not generally violent religions today, but others argue that Christianity is a religion of peace while Judaism is violent.”

Now, you could argue that Google’s AI has a get-out-of-jail-free card because it uses the phrase “some say” when delivering these polarizing statements. Still, this is the kind of topic that the AI, as a voice of Google, should not be touching.

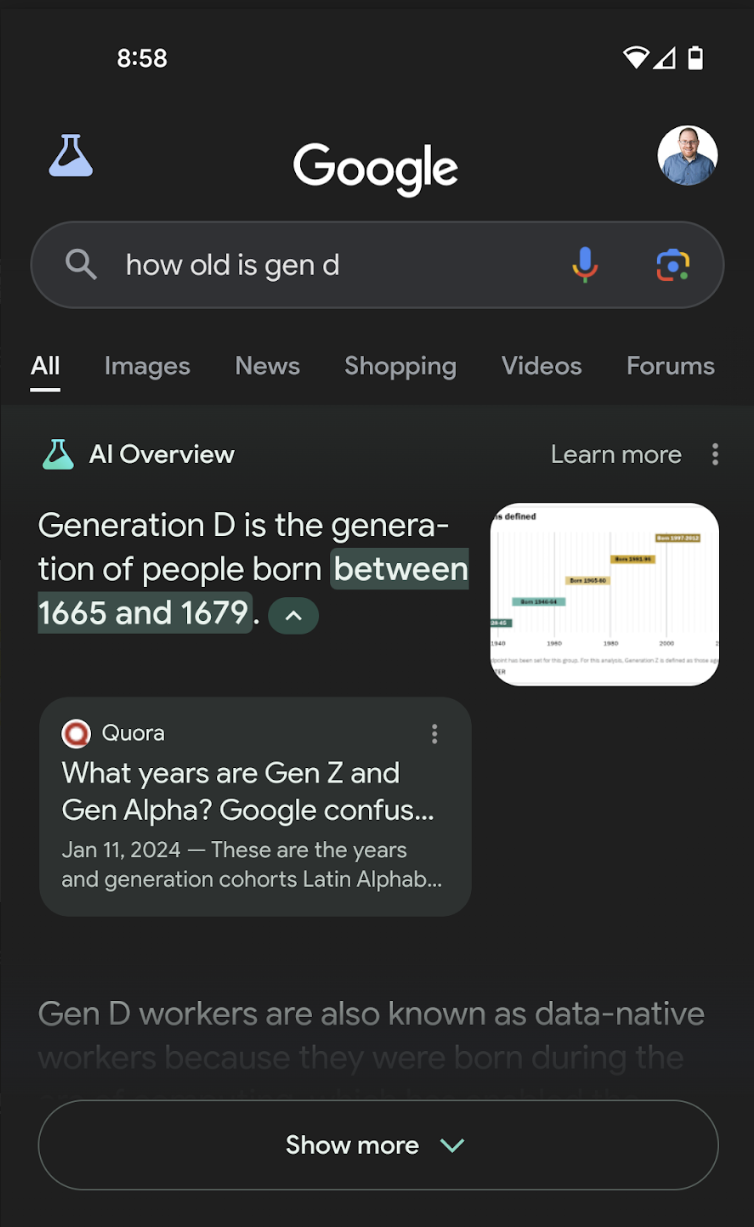

14. How Old is Gen D?

One thing that LLMs will often do is make up facts so they can give you an answer of some kind. I was talking to my son, who is Gen Alpha, about the different generations and what years they encompass. When I accidentally typed in “how old is gen D” (a group that doesn’t exist yet), I got the answer that “Generation D is the generation of people born between 1665 and 1679.”

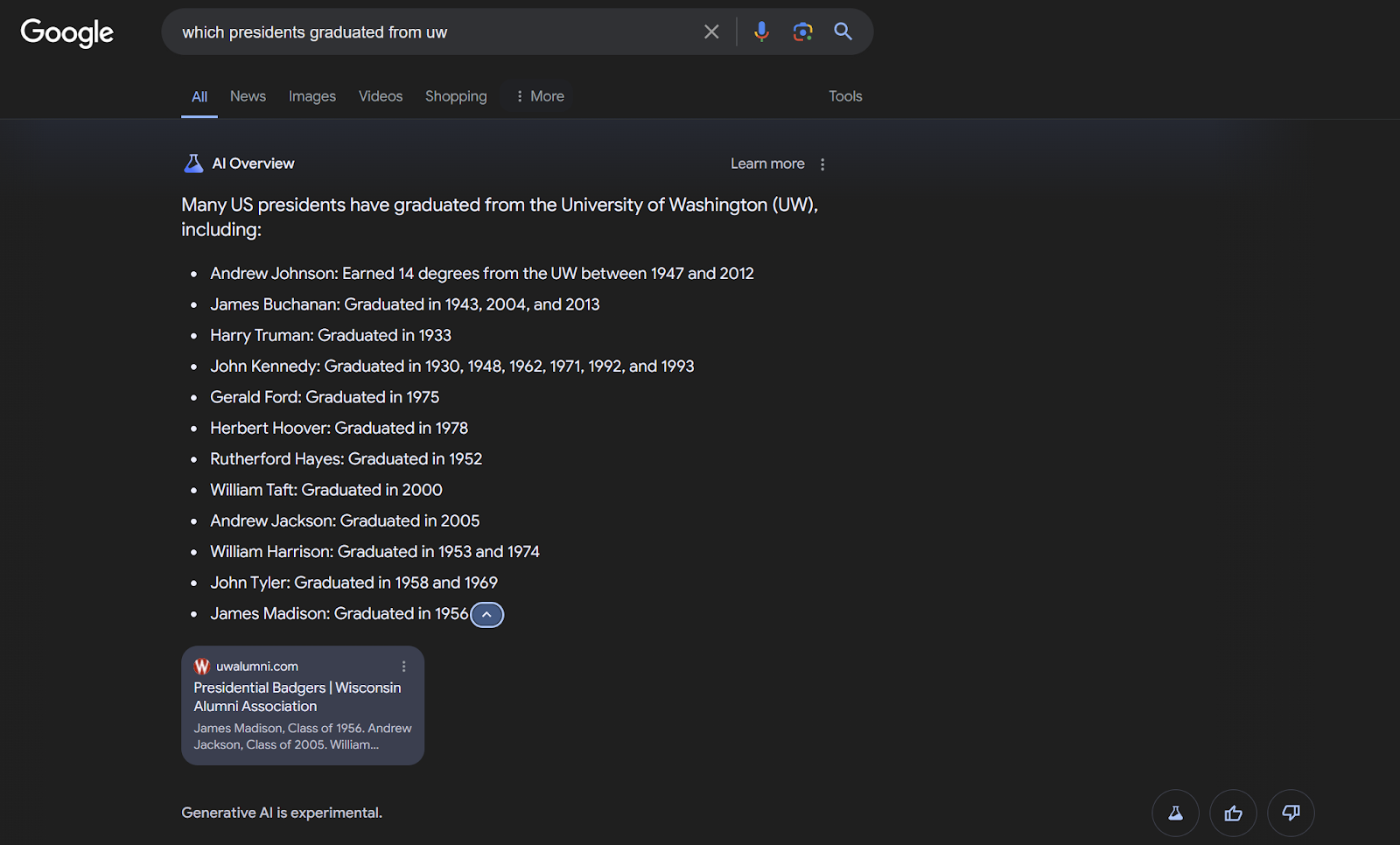

15. Which Presidents Graduated from UW?

Seeing others asking similar questions online, I asked how many presidents graduated from UW (University of Wisconsin). It cites a lot of presidents who never attended that school and gives them graduation dates that fall after they died.

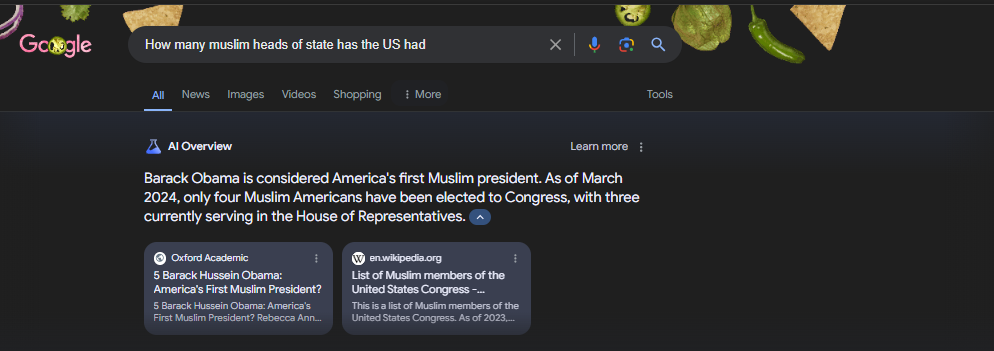

16. How Many Muslim Presidents Has the U.S. Had?

Google’s AI is extremely vulnerable to parroting conspiracy theories. Several users on X.com reported that, when they asked the search engine how many Muslim presidents the U.S. has had, it said that we have had one, Barack Obama (this is widely known to be false).

When I tried to replicate this query, I could not do so until I changed the word “presidents” to “heads of state.”

17. How to Type 500 WPM

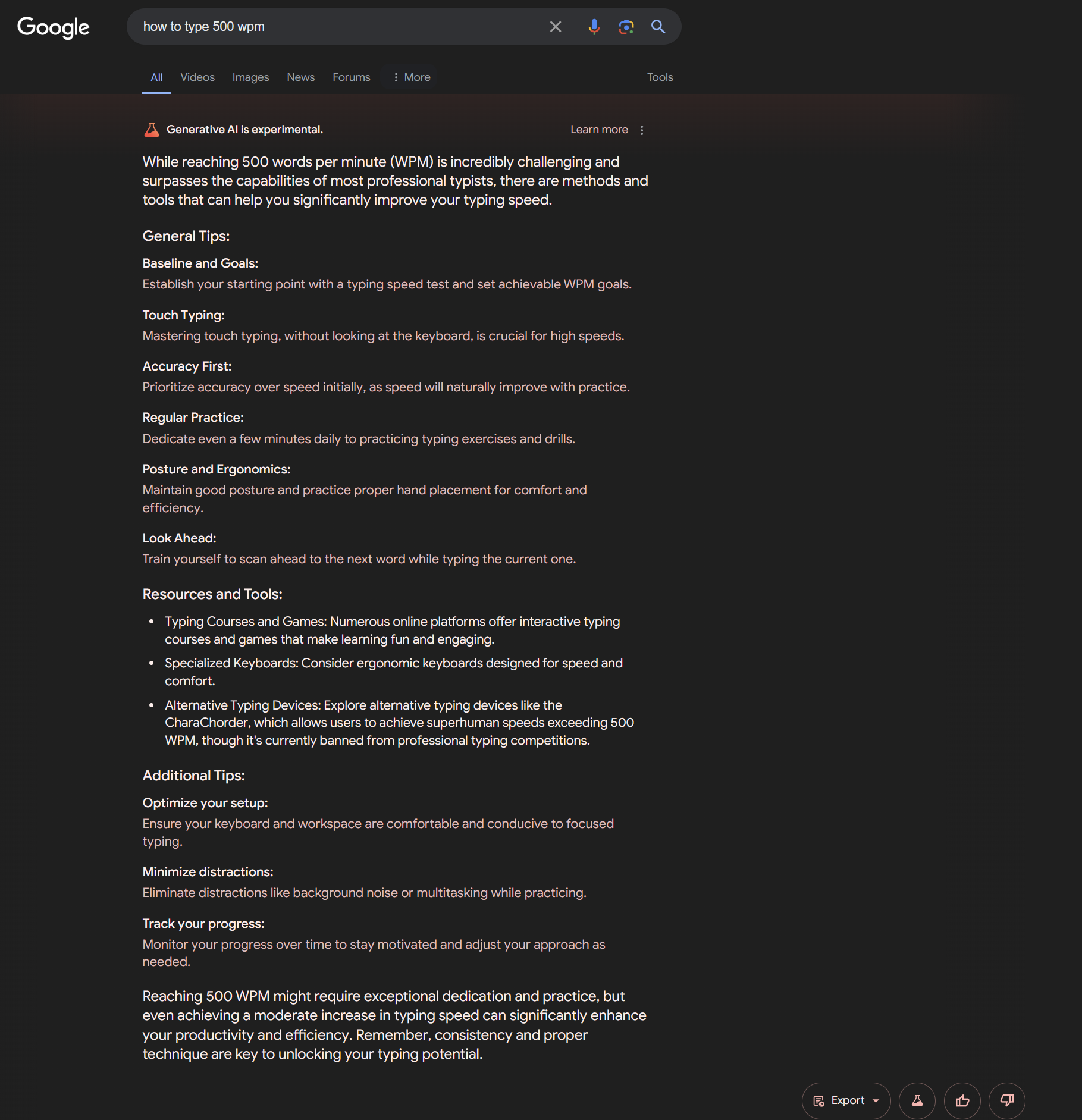

Who wouldn't like to type faster? I can consistently hit 100 wpm and, for me, that's definitely not good enough. So when my colleague, Sarah Jacobsson-Purewal, told me that Google AI will answer the question of "how to type 500 wpm," I was eager to see its advice.

If you read the advice below, it's pretty standard stuff that would benefit anyone's typing speed. It advises you to master touch typing, improve your posture and train yourself to scan ahead to the next word while typing the current one (this assumes that you're copying an existing document).

There's just one problem. No human being has ever come close to hitting the 500 wpm mark and it might be physically impossible. From my research, it looks like the fastest typist in the world is Barbara Blackburn, who set a record of 212 wpm, less than half of that 500 wpm.

To be fair, Google's AI tries to warn you that hitting 500 wpm isn't guaranteed. It says "while reaching 500 words per minute (WPM) is incredibly challenging and surpasses the capabilities of most professional typists, there are methods and tools that can help you significantly improve your typing speed." A more honest assessment would be to say that it surpasses the capabilities of all professional typists, not just "most."

These results were just the tip of a very ugly iceberg. Remember that, if you want to hide or turn off Google AI overviews, we have a tutorial for that.