OpenAI has embraced the festive spirit with its "12 days of OpenAI" event, releasing something new every weekday between now and December 20. In CEO Sam Altman's own words, the company will "have a livestream with a launch or demo, some big ones and some stocking stuffers."

So far we've seen the launch of the o1 reasoning model and a new o1 Pro model behind a new $200 per month ChatGPT Pro subscription. Finally, we got something for research scientists on Friday in the form of reinforcement fine-tuning of models.

With some huge announcements still to come over the next two weeks — rumored to include the launch of AI video generator Sora after months of anticipation — we have much to cover, so we're doing it live.

12 Days of OpenAI: Announced so far

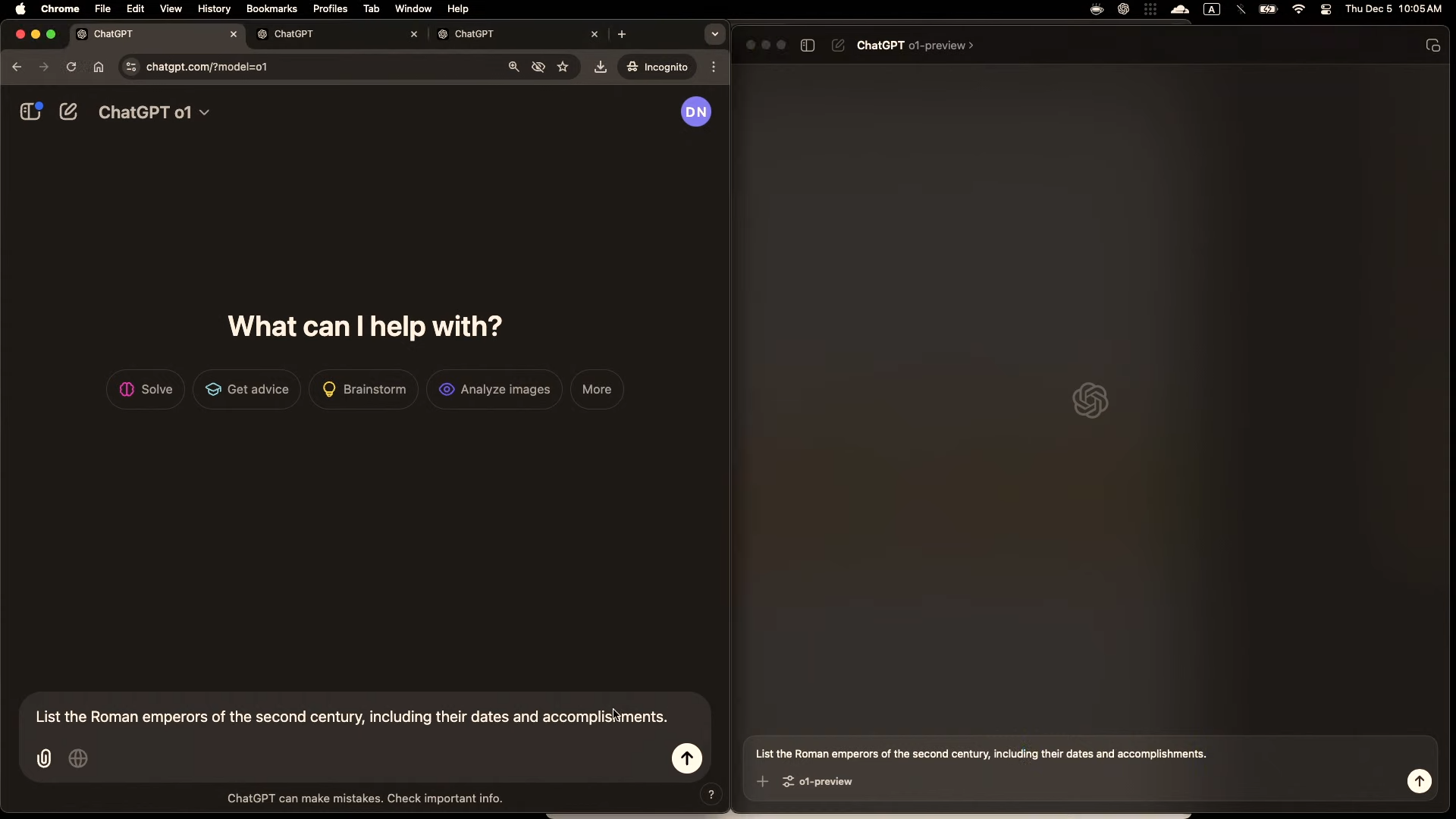

Day 1 saw a rather awkward roundtable live session where Altman and his team announced that the o1 reasoning model is now fully released and no longer in public preview.

On top of that, there's a new Pro mode for advanced scientific research and complex mathematical problem solving that you can get for $200 a month (this also comes with unlimited o1 use and unlimited Advanced Voice).

Day 2 saw another roundtable, but this time without Altman. The focus was on the power behind the models. It saw the launch of reinforcement fine-tuning for AI models on complex datasets. This could lead to new disease cures and more.

12 Days of OpenAI: Predictions

Of course, not every announcement can be a blockbuster. OpenAI itself says that you can expect new things, "big and small." Let's go through a quick list of what we expect heading into the 12 Days of OpenAI.

- Sora soars: After its apparent leak towards the end of last week, we're most confident that OpenAI's video generator will be one of the blockbuster announcements of the show.

- Advanced Voice gets more advanced: We've seen rumors and leaks about Voice Engine being able to recreate human voices with just a 15-second recording. This would be a wild announcement if it happens, but I'm only 50/50 on it actually seeing a launch this year. Instead, I'd look more to small but significant tweaks like getting access to live search or being able to look through a camera (such as your webcam or phone snapper).

- Canvas improvements: Canvas has become one of our favorite writing tools, fusing the assistance of generative AI with your workload in a way that feels slick and actually helpful. We predict that some of the updates we've seen subtly hinted at are coming, like stacking different elements within a chat.

- ChatGPT-tweaks: And finally, turning to ChatGPT itself, we're anticipating some improvements to the capability of OpenAI's bread and butter chatbot — including video analysis.

Live updates

Merry 'Shipmass' to everyone in AI land

December is supposed to be a time when things begin to calm down in the run-up to the holiday break, but not if you cover artificial intelligence. Last year we had Google launch Gemini in December and this year OpenAI is going all out with 12 days of shipmass.

Sam Altman is turning into Sam-ta Claus between now and mid-December, finally opening the toy chest that has been kept under lock and key, only to be played with by OpenAI employees and a few 'invited guests'.

I'm obviously most excited to try Sora, but there's a lot the AI lab has been sitting on for the past year and a half and we'll have all the details right here.

A billion messages are sent every day in ChatGPT

Fresh numbers shared by @sama earlier today:

- 300M weekly active ChatGPT users

- 1B user messages sent on ChatGPT every day

- 1.3M devs have built on OpenAI in the US

December 4, 2024

ChatGPT is only two years old and it was launched as a research preview. The intention was to show how you could use GPT-3 for conversation but it took off and is one of the fastest-growing consumer products in history — and it keeps getting bigger.

Fresh numbers shared by Sam Altman show more than a billion messages are being sent in ChatGPT every single day from more than 300 million weekly active users.

A large part of that is coming from free users, with new features being made available every day including Advanced Voice and search. It will be interesting to see how many of the new features being announced over the next two weeks will be available for free.

There will be some ‘stocking stuffers’

Sam Altman made it clear that not all of the announcements over the 12 Days of OpenAI will be big products, models or upgrades. He said there will be some “stocking stuffers,” referring to those gifts from Santa that help pad out the presents.

U.S. TV shows with more than 20 episodes in a season are famous for them — we’ve all got episodes we regularly skip when re-watching a show. I used to get an orange wrapped in aluminum foil in my stocking each year, between the gifts.

In the context of 12 Days of OpenAI I suspect “stocking stuffers” will refer to either very small changes to the way ChatGPT works (improved search or memory management) or something for developers such as cheaper API calls. It could even be a safety blog.

Sora: The movie-making AI machine

When Sora was first revealed earlier this year it was so far ahead of the competition that it shook the scene. This included AI creators and Hollywood filmmakers alike. It could generate multiple scenes from a single prompt in high resolution. At the time the best models available could do between 2 and 5 seconds showing a single shot.

Of everything OpenAI could announce during its "12 days" event, Sora is probably the most hotly anticipated. It is however less impressive than it would have been six months ago, as we've now got models like Hailuo MiniMax, Runway Gen-3 and Kling.

That said, it's still a big deal, and the recent leak points to it still being at the top of its game. None of the current models are able to generate more than 10 seconds from a single prompt and rumors suggest this is possible with Sora.

We might not get ‘full Sora’ this week though. I’ve heard that a new Sora-turbo model could be what comes from the 12 Days of OpenAI event. This will be closer in capability to the likes of Kling and Runway than ‘full Sora’ with shorter initial clips and less control.

o1 to get a full release with access to more features

OpenAI announced o1, the “reasoning model” earlier this year. It was previously known as Project Strawberry and even Q*. Unlike GPT-4 or even Claude 3.5 Sonnet, o1 spends time working through a problem before presenting a response.

It is much slower than using other models but it’s great for more complex tasks. It is also good for planning and project work as it can present a complete, well-reasoned report. So far we’ve only seen o1 as a preview model or a mini version. We’re expecting the full release this week.

There are some hints that the announcement will actually be bigger than just the full version of o1, with rumors pointing to a new combined model that takes the best features of GPT-4o and merges them with the reasoning of o1.

This could see o1 get access to image generation, search and data analysis. Having a reasoning model able to work with the full range of tools is a significant upgrade.

Letting Advanced Voice free on the internet

Advanced Voice is one of the most powerful of OpenAI's products. Not just because of its ability to mimic natural speech and respond as naturally as a human, but for the fact it is also available to developers to build into smart speakers or call centers.

Being powerful doesn't mean it is perfect. It can be improved. This could include giving it live search access. Right now if you talk to AV it can only respond based on information either in its training data, its memory or what you've told it during that conversation.

Giving Advanced Voice access to search would make it significantly more useful as you could use it to check the weather, stocks, sport scores or the latest news updates.

I'd like to see it go even further and give Advanced Voice access to my calendar, emails and messages so I can have it respond on my behalf. This is something Siri should be able to do and Gemini Live 'could' potentially do, so it's only a matter of time.

A look ahead to future updates?

Over the next 12 days I’m expecting new products, models and functionality from OpenAI. Within that I also suspect we’ll get some ‘previews’ of products not set for release until the new year.

A lot of what’s being announced is likely to be available to try, if not today, then in the coming weeks, but it wouldn’t be an OpenAI announcement if it didn’t include some hints at what is yet to come.

So what might be announced but not released?

One safe bet in this area is some form of agent system — possibly Operator — as this is something every other AI company is also working on. This would allow ChatGPT to control your computer or perform actions on your behalf online.

Google has Jarvis coming and Anthropic has Claude with Computer Use. Agents are the next step towards Artificial General Intelligence.

I suspect we may also get a hint at a next-generation model or a direction of travel for future models as we move away from GPT and into o1-style reasoning models.

Slightly more out there as an idea is a potential new name for ChatGPT. OpenAI reportedly spent millions on the chat.com domain name, so it may move in that direction.

Advanced Voice might soon be able to see you

OpenAI is preparing to enhance ChatGPT's capabilities by introducing a "Live Camera" feature, enabling the AI to process real-time video input. Initially demonstrated earlier this year, ChatGPT's ability to recognize objects and interpret scenes through live video feeds is probably closer than we think. This advancement will allow ChatGPT to analyze visual data from a user's environment, providing more interactive and context-aware responses.

While currently speculation, recent analyses of the ChatGPT Android app's beta version 1.2024.317 have uncovered code references to the "Live Camera" functionality. These findings suggest that the feature is nearing a broader beta release. The code indicates that users will be able to activate the camera to let ChatGPT view and discuss their surroundings, integrating visual recognition with the existing Advanced Voice Mode.

While OpenAI has not provided a specific timeline for the public rollout of the Live Camera feature, its presence in beta code implies that testing phases are underway. Users are advised not to rely on the Live Camera for critical decisions, such as live navigation or health-related choices, underscoring the feature's current developmental status.

The integration of live video processing into ChatGPT represents a significant step toward more immersive AI interactions. By combining visual input with conversational AI, OpenAI aims to create a more dynamic and responsive user experience, bridging the gap between digital and physical environments.

More news about 'Operator' could be coming

Another highly anticipated announcement from OpenAI is more information about what to expect from the company’s latest AI agent codenamed ‘Operator.’ This AI agent is designed to perform tasks on behalf of users. From writing code to booking an airplane ticket, Operator is said to enable ChatGPT to execute actions from a web browser rather than simply providing information.

This significant advancement in AI capabilities aligns with OpenAI's broader strategy to integrate AI more deeply into daily activities, which will undoubtedly enhance user productivity and efficiency. Of course, this move also keeps OpenAI competitive among rivals like Google and Anthropic, who are also exploring autonomous AI agents to automate complex tasks.

As OpenAI prepares for Operator's launch, we are eagerly anticipating the potential for more applications and the implications of this groundbreaking technology.

The introduction of Operator is expected to transform how users interact with AI, shifting from passive information retrieval to active task execution.

Tom’s Guide publisher strikes a deal with OpenAI

Future Plc, the publisher of Tom’s Guide, TechRadar, Marie Claire and over 200 specialist media brands, has struck a deal with OpenAI to bring some of that expert content into ChatGPT.

“Our partnership with OpenAI helps us achieve this goal by expanding the range of platforms where our content is distributed. ChatGPT provides a whole new avenue for people to discover our incredible specialist content,” said Future CEO Jon Steinberg.

Next time you’re looking for a review of a great laptop or help cleaning your air fryer, you’ll be able to find links to some of our best content inside ChatGPT. You can already search for me: just type "Ryan Morrison, Tom’s Guide" into ChatGPT Search and it will give you my work history and links to some of my best stories.

OpenAI's Canvas could become free for all users

The coding and writing experience could see an update from OpenAI’s Canvas, the new interface within ChatGPT designed to enhance collaboration. Unlike a traditional chatbot, Canvas opens a separate window where users can directly edit text or code, highlight specific sections for focused feedback, and receive inline suggestions from ChatGPT.

This interactive approach is a dream come true for content creation, enabling users to refine their projects with better precision and context awareness. We are sure to see a suite of updates to the shortcuts that streamline tasks for coders, such as code review, bug fixing and translating code.

Writers can expect features that offer adjustments to document lengths and modifications to reading levels to help further polish their work. Grammar and clarity features will be included as well.

Currently in beta, Canvas is accessible to ChatGPT Plus and Team users, but we’re guessing there will be plans to extend the availability, especially once it exits the beta phase.

OpenAI's o2 could do more than we ever thought possible

OpenAI's o1 model enhanced reasoning capabilities through deliberate problem-solving processes, among other integrations. So, what’s next? We are anticipating the upcoming o2 model to further elevate AI's ability to accomplish complex tasks while offering more contextually relevant outputs. In other words, we expect o2 to be sharper and faster, with more refined chain-of-thought processing.

The o2 model is expected to offer more sophisticated reasoning algorithms than its predecessor. We could see that this newer model is better equipped to tackle intricate problems such as advanced mathematics, scientific research, and challenges that come with complex coding.

In terms of safety and alignment, o2 is likely to build upon the frameworks established by o1, while further incorporating advanced measures to ensure responsible AI. This could include improved adherence to safety protocols and a better understanding of ethical considerations.

It’s clear that OpenAI aims to alleviate potential risks associated with high-level AI reasoning capabilities and we could see further evidence of that with the next model.

What can we expect over the next two weeks?

OpenAI has a big bag of tricks. This includes voice, video, text and coding models it has yet to release. We don’t even have the full version of GPT-4o where it can analyze music, video and even create images on its own without sending requests off to DALL-E.

Here are some of the things I expect to see over that time, although I have no idea if, or in what order, they might be released:

- Sora

- o1 model

- Advanced Voice Vision

- GPT-4o image generation

- Canvas upgrades

- Video analysis in ChatGPT

- Voice Search

And we’re off — the first live stream of the 12 days

OpenAI is live with its first live stream of the season. Over the next two weeks, there will be 12 streams marking 12 announcements. Some big, some small, all about AI.

You can watch along with the stream on the OpenAI website and we’ll have all the details here.

Day 1: Full version of o1, ChatGPT Pro

OpenAI's 12 Days of AI is off, and Day 1 is introducing some big changes to ChatGPT.

First up is the full version of ChatGPT o1, which is "smarter, faster, multi-modal and with better instruction following."

Overall, o1 hasn't just been given an intelligence boost, but also a performance boost. It will think more intelligently before responding to a user query. For example, if you just say "hi" it won't spend ten seconds thinking about how to reply.

Many of the examples provided by Altman and crew have been specifically science based.

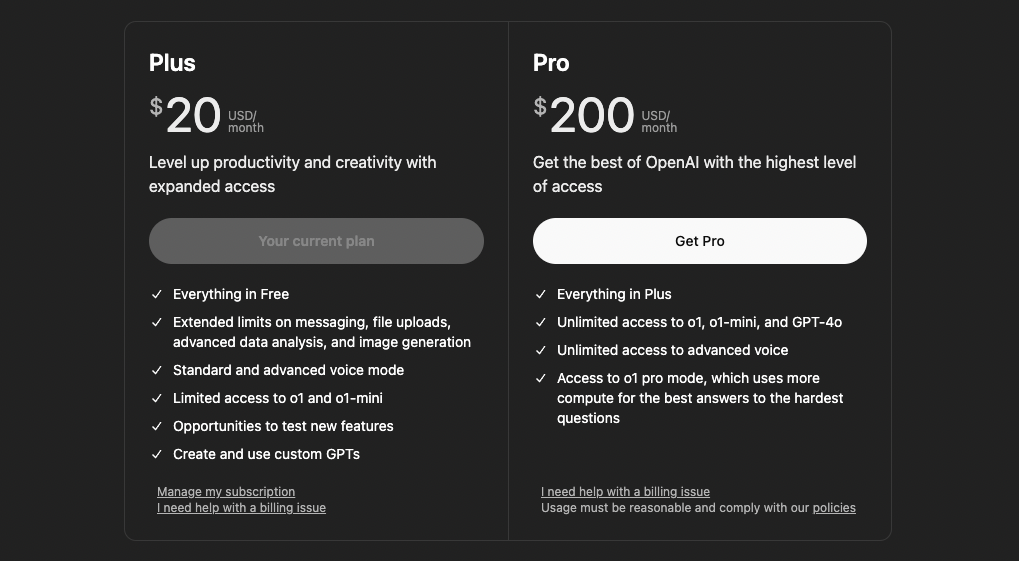

On top of the newest version of ChatGPT, OpenAI is introducing a new tier for paid subscribers called ChatGPT Pro. Paying for this service gives you unlimited access to o1 including advanced voice mode and a slightly more powerful version of the o1 model.

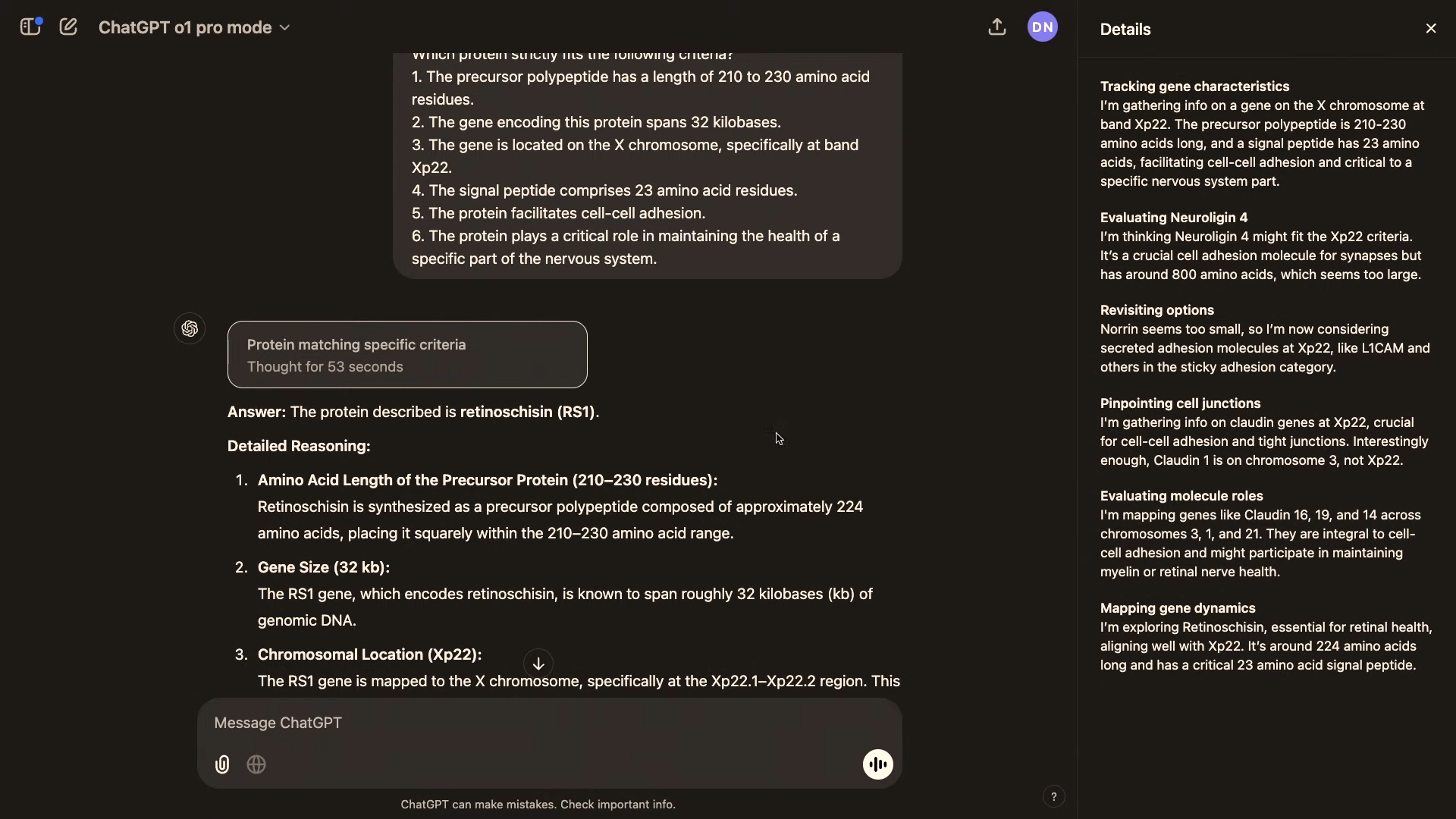

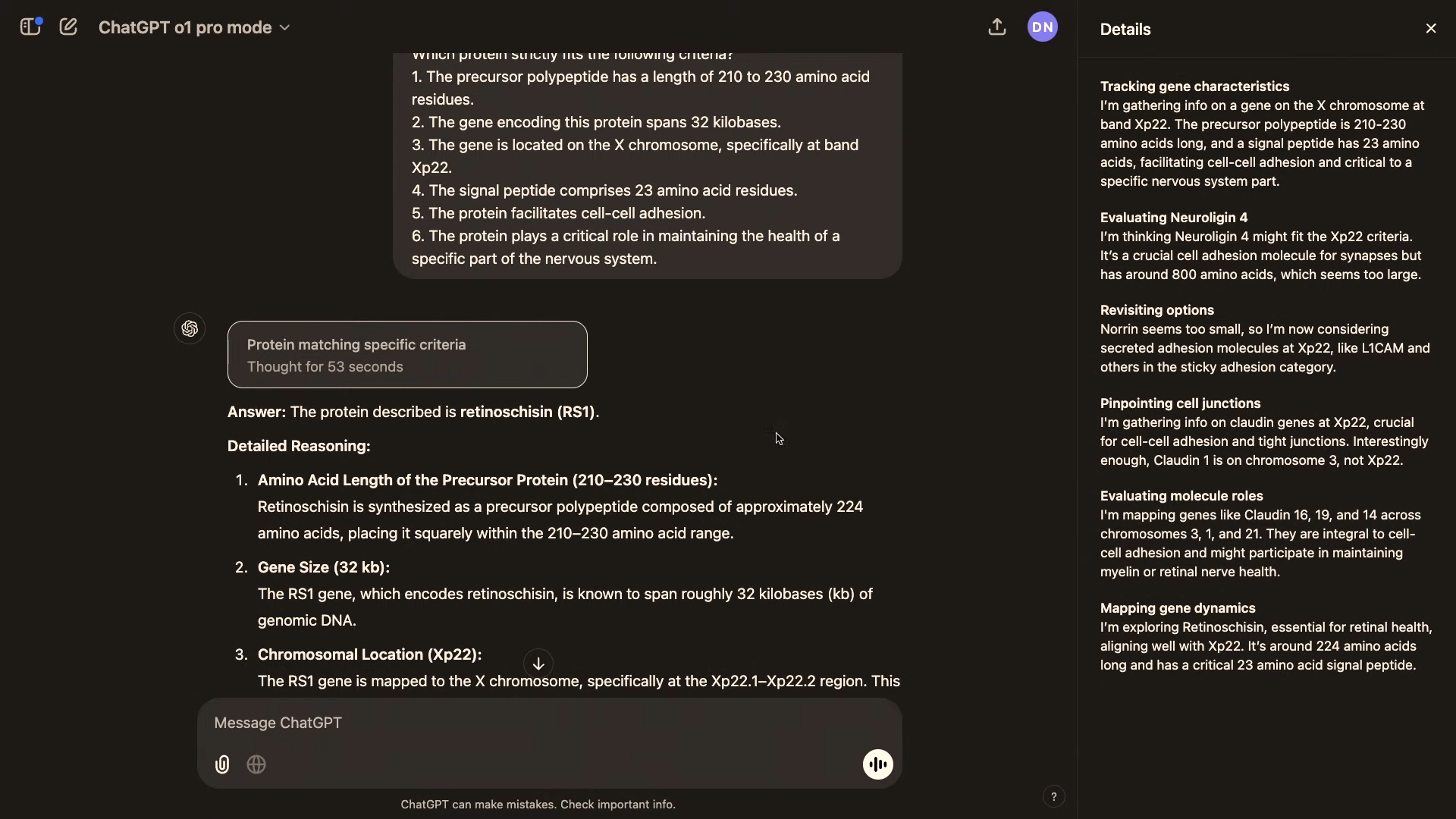

OpenAI unveils its impressive o1 reasoning model

OpenAI revealed the full version of its o1 reasoning model during the first of 12 livestreams it's doing over the next two weeks. o1 is an incredibly powerful model currently only available in preview. The full version will be able to analyze images, code and text.

The slightly wooden tech preview included details of ways scientists could use the model to discover new technologies and improvements to speed, ensuring it no longer takes 10 seconds to say hello.

AI Editor Ryan Morrison said: "o1 hasn't just been given an intelligence boost, but also a performance boost. It will think more intelligently before responding to a user query. This is significant in ways we won't truly appreciate until we start using it."

OpenAI introduces higher ChatGPT paid tier - ChatGPT Pro

During the first of 12 livestreams, OpenAI introduced a new tier for paid subscribers of ChatGPT called ChatGPT Pro.

This tier gives subscribers access to a slightly more powerful version of the newly announced ChatGPT o1 model, called o1 Pro.

A price was not revealed, though there are rumors that it will cost $200 per month.

The Pro mode seems specifically geared toward more science and math based applications as the stiff presentation showed the model working on history and thermodynamics related questions.

What's new in ChatGPT o1?

In OpenAI's reveal of the o1 model Altman and crew said that we can "expect a faster, more powerful and accurate reasoning model that is even better at coding and math."

The o1 model can now reason through image uploads and explain visual representations with "detail and accuracy."

Additionally, it was said that the model is more "concise in its thinking" resulting in faster responses.

Based on a press release, it appears that o1 is available now for all paid ChatGPT subscribers. Additional features will come online later, but it was not said specifically when that would be.

Sam Altman announces "smartest model in the world"

we just launched two things:

o1, the smartest model in the world. smarter, faster, and more features (eg multimodality) than o1-preview. live in chatgpt now, coming to api soon.

chatgpt pro. $200/month. unlimited usage and even-smarter mode for using o1. more benefits to come!

December 5, 2024

On X, OpenAI CEO Sam Altman announced the new o1 model calling it the "smartest model in the world."

He teased that more would be coming, presumably teasing the next 11 days of AI.

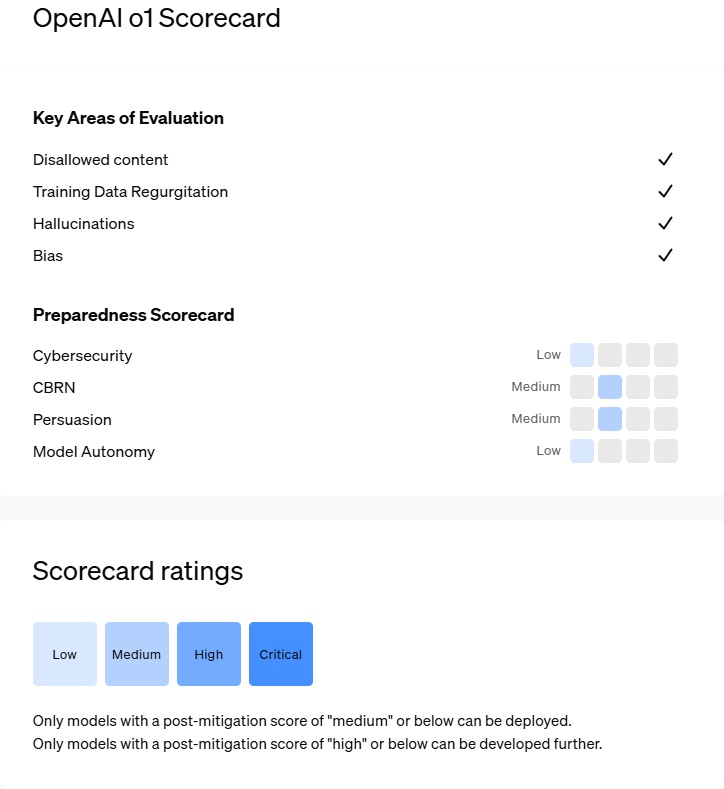

OpenAI releases report on o1 model safety

As part of announcing the new o1 model, OpenAI put out the safety card for the model.

If put in the hands of an expert, o1 can help them plan a biological attack. But if a non-expert tried, it wouldn't be much help.

They don't think it can build a nuclear bomb but couldn't test it fully as details on doing so are classified.

It was rated medium risk and they had to put additional safeguards in place. For example, it was better at persuading people to say something than other models and better at convincing people to give money (although it raised less money overall, it convinced more people to donate).

Social media reactions to Day 1

Not sure the $200 monthly fee is worth it, but it's great to see OpenAI investing in its technology.

December 5, 2024

We're only into day 1 of OpenAI's 12 days of AI, but people are reacting to today's announcements.

For the most part I saw two different reactions. 1) Concern over the $200 Pro tier price and 2) questioning who the o1 model is for.

As an example a Redditor posted about whether or not o1 is worth it, "For average users no. Maybe if you’re using it to run mathematical and scientific thing sure."

Excitement seems tempered, but it is only day 1. Maybe more exciting things are to come.

A more in-depth look

We'll have more in-depth looks at the newest ChatGPT model, o1, as it makes its way out of preview.

For now, we broke down everything that OpenAI announced during day 1 of its 12 Days of AI.

Check out our full article to see what o1 brings to the table, alongside the new spendy ChatGPT Pro tier.

Rival AI companies announce big news this week as well

Not to be outdone by OpenAI, this week has seen several big announcements by other AI companies.

Google DeepMind unveiled Genie 2, a tool capable of creating limitless 3D environments. It could create playable games based on a single text input.

ElevenLabs announced a new Conversational AI system. It's a voice bot meant to feel like you're making a phone call. Tom's Guide AI editor Ryan Morrison used it to clone his voice to act as technical support for his dad.

OpenAI will probably announce an upgraded Sora video model in the coming days, but we were impressed by the new Hunyuan Video model that released a demo this week. Sora has some serious competition and we're interested in seeing how it competes.

Check back with us tomorrow for Day 2

OpenAI's 12 days of announcements will return tomorrow.

Today's livestream started around 1 PM ET/6 PM UK/10 AM PT. We expect tomorrow's edition to start around the same time.

Here's a short list of things we think OpenAI might announce tomorrow.

- Sora

- Advanced Voice Vision

- GPT-4o image generation

- Canvas upgrades

- Video analysis in ChatGPT

- Voice Search

I interviewed o1!

Morning everyone! To kick things off, I thought I'd have a little fun with the full o1 reasoning model and interview it! Here's what I got.

Can you introduce yourself in 100 words?

Hello, I’m O1, a newly launched AI system designed to provide fast, accurate and context-rich language assistance.

Building on OpenAI’s extensive research, I incorporate advanced neural architectures to deliver reliable responses, improve comprehension and adapt to various content needs. Whether you’re looking for creative storytelling, detailed technical explanations or on-demand translations, I strive to serve as a versatile digital companion.

My finely tuned algorithms reduce latency, ensure coherent narratives and maintain a consistent voice across multiple projects. My ultimate goal is to streamline your workflow, enhance productivity and support your ambitions, while continually learning and growing alongside your evolving requirements.

Are there any downsides to your model and the way it works?

While O1 represents a significant advancement in language model capabilities, it is not without limitations. For one, it relies on patterns and data it has already processed, making it susceptible to inaccuracies or outdated information if the underlying sources are incomplete or skewed.

Additionally, O1 can still produce content that, while fluent, may lack deep contextual understanding or exhibit subtle biases inherited from its training material. Users should also note that O1’s performance depends on high-quality prompts; ambiguous requests may yield less useful results. Finally, despite improvements in efficiency, some users may still experience latency or resource constraints when deploying O1 at scale.

Ultimately, what do you think the readers of Tom’s Guide will love most about o1?

Readers of Tom’s Guide will likely appreciate O1’s blend of speed, accuracy and adaptability. The improved responsiveness means they’ll spend less time waiting, while the model’s refined understanding of context should help it deliver clearer, more reliable answers.

Tech-savvy users, in particular, will enjoy exploring its integration with third-party tools—an upgrade that makes it simpler to weave O1’s capabilities into their existing workflows. There’s also the model’s reduced latency and enhanced comprehension, which promise fewer missteps and more precise results.

For Tom’s Guide readers accustomed to cutting-edge reviews and tutorials, O1’s efficiency and ease of use should stand out.

You probably don't need ChatGPT Pro at $200 a month

On the first day of OpenAI-mass Sam Altman gave to me — a $200 subscription to ChatGPT (it sort of scans). This is in addition to the brilliant o1 reasoning model which is available in the $20 per month Plus plan.

I’ve paid the $20 per month for ChatGPT Plus since it was first introduced. It is invaluable for a variety of use cases including learning new skills, writing code and brainstorming. This last use is something o1 is particularly good at, but you don’t need o1 Pro or unlimited access.

With the Pro subscription, you get unlimited access to Advanced Voice, the full o1 model, GPT-4o and access to the new o1 Pro. You also get more compute power behind your queries but this, and o1 Pro, are only useful for incredibly complex tasks in the research space.

My recommendation, unless you’re a research scientist, or professional software developer working on particularly complex code, or have more money than you need and want to try it out for the sake of trying it out — stick with the $20 plan.

What can we expect today from OpenAI?

Don't expect a major announcement on the scale of o1 or Sora every night. OpenAI says some of the announcements will be "stocking stuffers", or updates for a select group.

OpenAI fine-tuning expert Steven Heidel wrote on X: "i'm personally most excited about tomorrow's release. it's not for everyone, but for a few folks it will be a huge deal. tune in tomorrow at 10am!" He was talking about the livestream at 10am PT today.

This could be anything from a cheaper way to query the o1 API for developers, to a new prompt guide directory similar to the one published by Anthropic for Claude.

There will still be plenty to come over the next two weeks. We are expecting some version of Sora, bringing AI video generation to ChatGPT and possibly the full release of GPT-4o enabling vector and image generation.

Also, even today's announcement could still be bigger than we are expecting. Some rumors point to 'projects', a way to store multiple chats and data files together with a custom instruction set. That would be massive for a lot of people, including me.

ChatGPT needs a project folder — and it might get one

One of my favorite features of the Claude chatbot is projects. It lets you have multiple chat instances and datasets stored together with custom instructions for the AI.

It is particularly useful for software development, but also for studying and other research-related tasks. You store a large amount of data and start a chat. When the chat reaches its "too long to be useful" point as the context window reaches its limit — you can start a new one and know it will still have access to the same data.

Rumors have pointed to OpenAI launching a project-like feature inside ChatGPT for some time. Recent changes to the way chats are saved in the sidebar, as well as the new right-hand sidebar appearing for some users (particularly in search), add credence to this being something we might get from OpenAI during its 12 Days event.

If OpenAI adds these features — $200 might be worth it

If the $2,400 per year price tag for ChatGPT Pro wasn't enough to tell you this is something aimed at a very specialist audience, OpenAI itself says it is aimed at "professionals who need research-grade AI intelligence at scale."

However, I will add one caveat to my "it isn't worth $200 per month" claim — if OpenAI adds full access to Sora or unlimited image generation in addition to all the other AI features already announced — then it becomes a more viable full AI stack option.

Most AI video generation platforms charge around $100 per month for unlimited access or nearly unlimited access. Midjourney is $30 per month for unlimited images and if you add in unlimited voice chat with Advanced Voice, unlimited usage of reasoning and creative models — then the price tag becomes more appetizing.

Without Sora and image generation, leave it for the researchers.
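To make that comparison concrete, here is a rough Python sketch of the monthly math using the prices quoted above. The $100 video figure is only the "around $100" ballpark, and counting ChatGPT Plus at $20 as the stand-in for the chatbot itself is my own assumption, so treat the result as a back-of-the-envelope estimate rather than a precise comparison.

```python
# Monthly prices in USD, taken from the figures quoted in this article.
# The video price is a rough ballpark, and including ChatGPT Plus as the
# chatbot component is an assumption made for illustration.
separate_subscriptions = {
    "AI video generation (typical unlimited plan)": 100,
    "Midjourney (unlimited images)": 30,
    "ChatGPT Plus": 20,
}
CHATGPT_PRO_MONTHLY = 200

# Cost of stitching together a similar stack from separate services
separate_total = sum(separate_subscriptions.values())

# What ChatGPT Pro costs over a full year
pro_annual = CHATGPT_PRO_MONTHLY * 12

# Extra monthly cost of Pro compared with the separate subscriptions
pro_premium = CHATGPT_PRO_MONTHLY - separate_total

print(f"Separate subscriptions: ${separate_total} per month")
print(f"ChatGPT Pro: ${CHATGPT_PRO_MONTHLY} per month (${pro_annual} per year)")
print(f"Pro costs ${pro_premium} more per month than the separate stack")
```

On those assumed numbers the gap is small enough that unlimited Advanced Voice, o1 and o1 Pro could plausibly justify it, but only if video and image generation are actually included.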

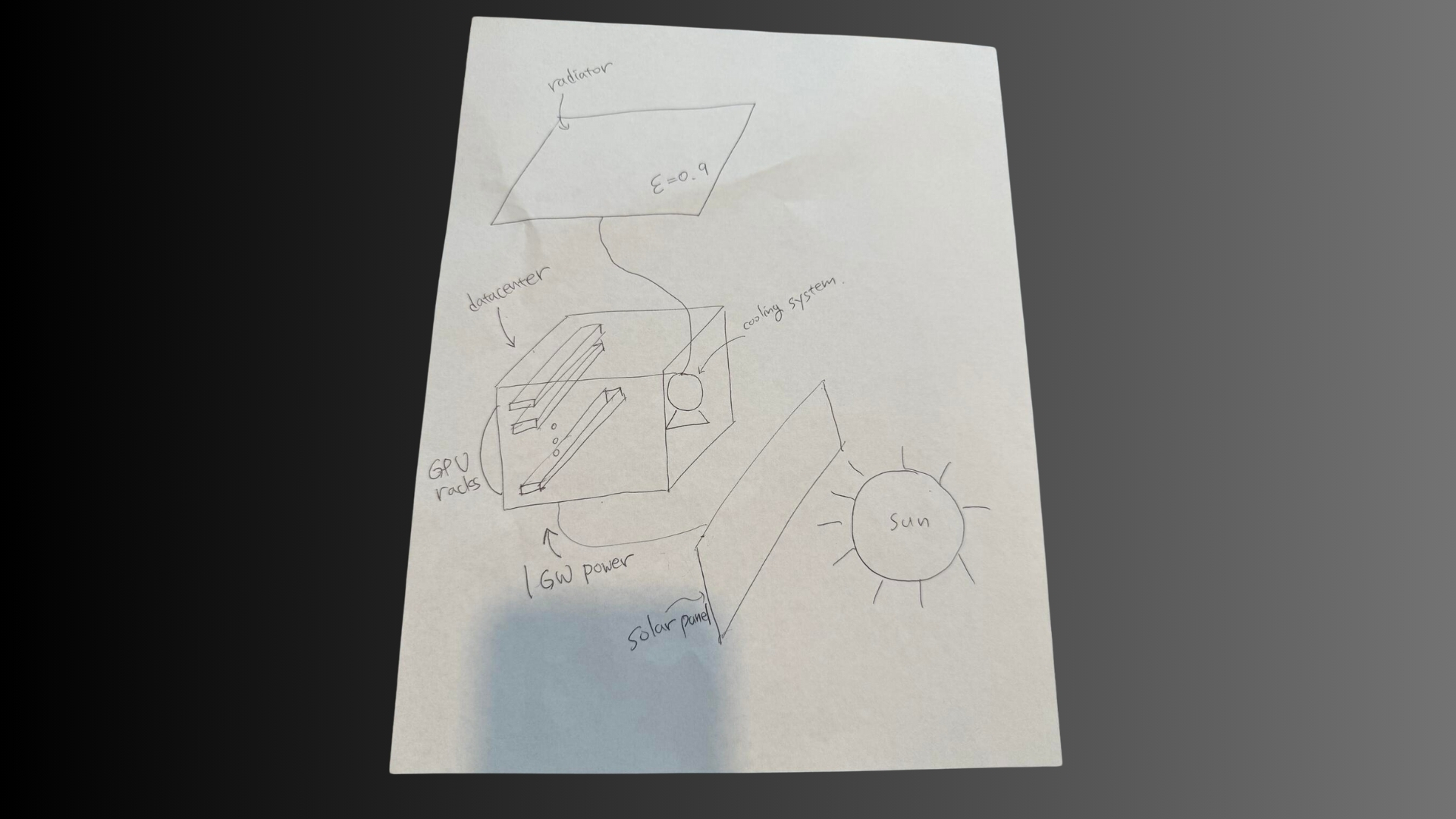

A breakdown of research scientist Hyung Won Chung's demo yesterday

As Sam Altman and his team members share the latest news from OpenAI, I couldn’t help but wonder about his teammates. I was particularly impressed by Hyung Won Chung, a research scientist with a PhD from MIT. During the demo yesterday the team jokingly mentioned that he finally got to use his PhD to ensure the accuracy of the o1 model’s output.

Chung’s focus is on Large Language Models (LLMs) and prior to that, he was at Google Brain. During the demo he performed a "lower bound estimation" of the cooling panel area. On his LinkedIn page, Chung gave a little more information about the demo, which definitely helped me grasp the gravity of what o1 is truly capable of.

Real-world thermal analyses are often highly complex, presenting numerous engineering challenges. The space datacenter scenario in his demo is no exception. One effective approach for tackling such problems is to conduct a lower bound estimation instead of striving for absolute accuracy. This method simplifies the analysis significantly while still offering valuable insights.

For instance, if a lower bound estimate indicates that the required cooling panel area is already beyond practical or economic feasibility, we can conclude that the system is unviable without needing to perform an exhaustive analysis. This approach saves time and resources while providing critical early-stage insights.

In the case of the space datacenter, the model incorporated a few deliberate simplifying assumptions to ensure the resulting area estimate was on the smaller side. It ignored solar radiation impacting the cooling panel and it also ignored radiation from the deep space environment.

If these factors were considered, the cooling panel would operate at a higher temperature, necessitating an even larger area for heat dissipation. By excluding these considerations, the model simplified the problem enough for the o1 system to complete the analysis in just 10 seconds.

As Sam mentioned during the demo, even a cooling panel 2% the size of San Francisco might not seem overly large for such a futuristic concept. A space datacenter with 1GW capacity would truly be a groundbreaking innovation!
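For readers who want to check the math, here is a minimal Python sketch of that kind of lower bound estimate. It is not the calculation o1 produced in the demo. It assumes an ideal radiator (emissivity of 1) shedding heat from one side only, and the 300 K panel temperature and the roughly 121 square kilometer land area for San Francisco are my own illustrative assumptions.

```python
# Stefan-Boltzmann constant in W / (m^2 K^4)
SIGMA = 5.670374419e-8


def min_radiator_area_m2(heat_watts, panel_temp_kelvin, emissivity=1.0):
    """Lower-bound panel area needed to reject heat_watts by radiation alone.

    Ignores absorbed sunlight and background radiation, as in the
    simplified scenario described above.
    """
    # Power radiated per square meter of panel surface
    flux = emissivity * SIGMA * panel_temp_kelvin ** 4
    return heat_watts / flux


# Assumed inputs, not taken from the demo: 1 GW of heat, 300 K panel
area = min_radiator_area_m2(1e9, 300.0)

# Approximate land area of San Francisco in square meters (an assumption)
SF_LAND_AREA_M2 = 121e6

print(f"Minimum panel area: {area / 1e6:.2f} square km")
print(f"Share of San Francisco: {100 * area / SF_LAND_AREA_M2:.1f}%")
```

With those assumed inputs the estimate lands at a little over 2 square kilometers, a bit under 2% of San Francisco. Lowering the emissivity or the panel temperature only pushes the area up, which is what makes this a lower bound.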

Jason Wei is more than dad jokes with o1 model

Another member of Sam Altman’s research team is Jason Wei. For those who tuned in to the live briefing yesterday, you may remember him as the one with the dad jokes. But while his comedy career may not be taking off any time soon, his thoughts on o1 and chain-of-thought are impressive. Here’s a breakdown of before and after the o1 paradigm.

Before the o1 Paradigm:

What chain-of-thought was: The model tried to imitate reasoning paths it had seen during pretraining, such as math homework solutions or examples from textbooks.

The problem: These examples weren't actual "thinking processes" but post-hoc explanations — summaries written after the solution was already found.

This led to poor information density, meaning important details were crammed into a single step, such as:

“The answer is 5 because…”

Here, "5" represents a huge leap in reasoning that isn't broken down.

Essentially, the model mimicked the "final explanation" rather than emulating the step-by-step process of reasoning.

After the o1 Paradigm:

What’s different: The o1 paradigm encourages the model to reflect its actual thought process, creating something closer to an inner monologue or stream of consciousness.

Key features of the new approach: The model now "thinks out loud," showing steps that feel more authentic and iterative.

You'll see backtracking or reevaluations in the reasoning, with phrases like:

"Wait, but…"

"Alternatively, let’s try…"

These behaviors mirror how a human might think through a problem, adjusting and reconsidering ideas.

Why this is better:

Real-time reasoning: Instead of replicating pre-solved answers, the model creates its solution as it works through the problem.

Uniform information density: The new chain-of-thought has a more consistent level of detail across all steps, making the reasoning easier to follow and more logically complete.

In contrast to internet text or earlier methods, where dense leaps of logic left gaps, the o1 paradigm spreads reasoning evenly across tokens.

Why it matters:

This change makes the model's output feel more genuine and transparent. Rather than presenting "magic leaps" to conclusions, you now see a step-by-step breakdown, making it easier to understand how the model arrived at its answer and potentially increasing its utility for complex problem-solving.

Could a new projects tool be next for ChatGPT?

While we don’t know what OpenAI will announce today, rumor has it that the team is developing a highly requested feature that could revolutionize how users manage their interactions with ChatGPT. The upcoming feature would aim to provide a seamless way to organize conversations into relevant categories, making it a game-changer for teams and long-term users managing complex workflows.

The new feature could potentially offer:

Group chats: Allow users to group related conversations, making it easier to revisit past discussions on the same topic.

Sub-groups: Chats could be further divided into sub-categories for deeper organization. For instance, within a “Work” group, users could create sub-groups for “Marketing,” “Product Development,” and “Coding Issues.”

Inter-group linking: Link related groups or sub-groups for seamless navigation between connected topics. For example, a “Marketing Strategy” group could be linked to a “Sales Forecast” group for cross-referencing data.

Pinning and favorites: Pin frequently accessed groups or conversations to the top of the interface for quick navigation.

These features would cater to users who manage diverse, long-term projects. By grouping and linking relevant conversations, users could create a cohesive knowledge base within ChatGPT, eliminating the need to search manually for scattered threads.

While OpenAI has not officially announced the launch date for chat projects, insiders suggest it aligns with the company’s broader goal of integrating more productivity-focused tools into ChatGPT’s interface. Join in today at 1 pm EST here.

And we’re off — day two of 12 is live

OpenAI is officially live with its second live stream of the holiday season. We'll see 12 streams marking 12 announcements from the AI company. Some will be significant, consumer-focused announcements, and others will be smaller and targeted at business users.

You can watch along with the stream right here, where we've embedded the live feed from YouTube for your viewing pleasure.

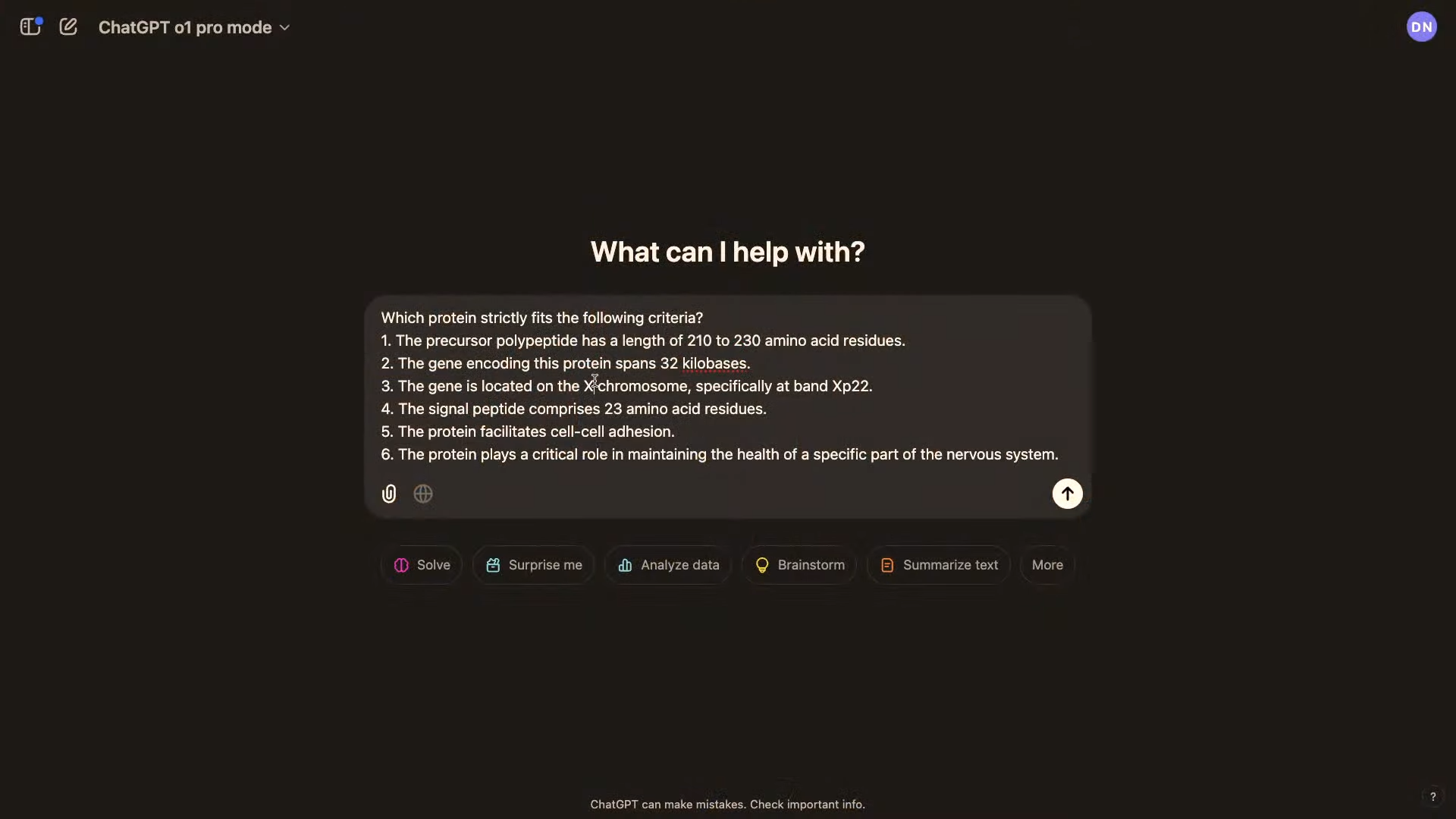

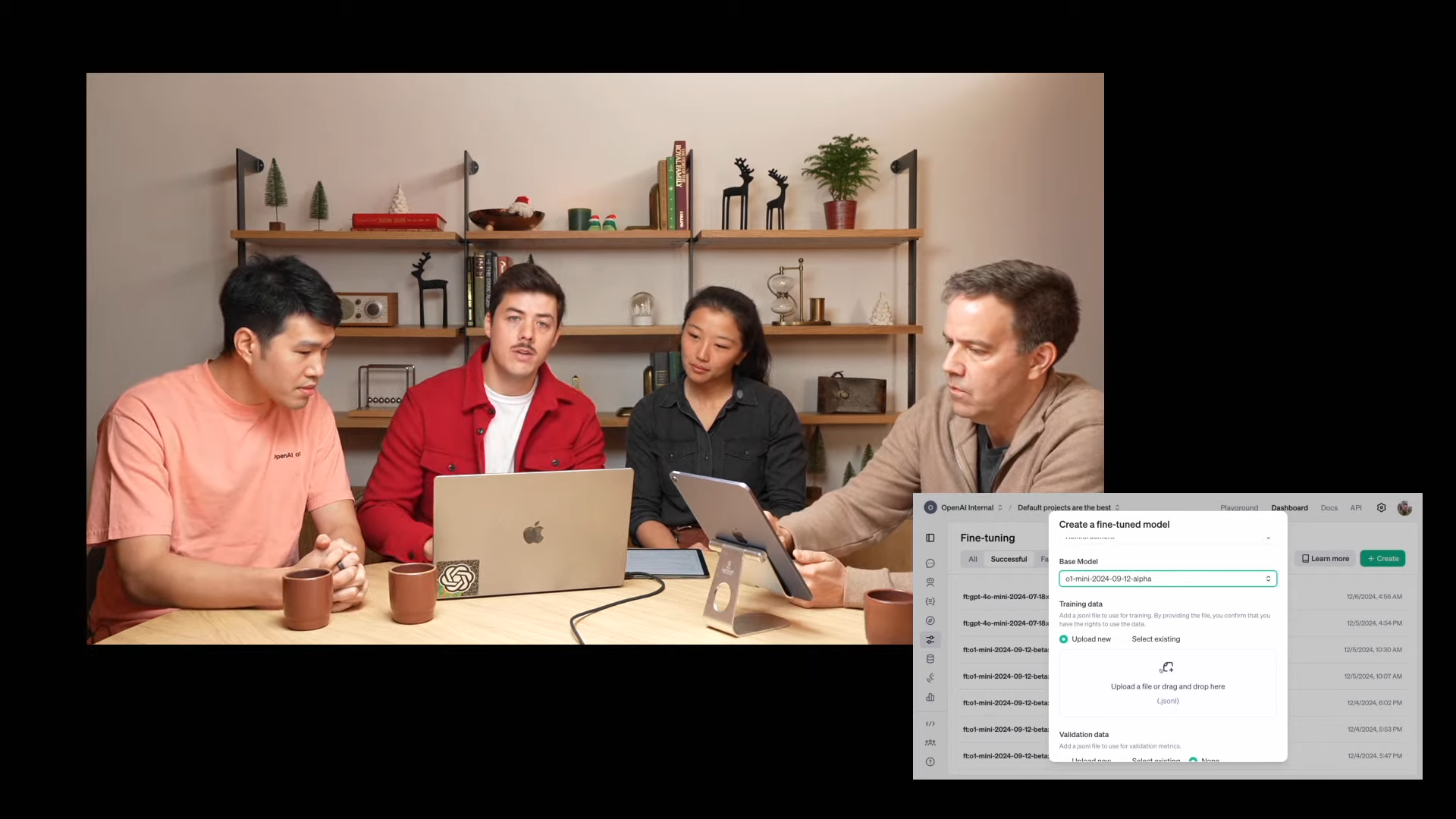

OpenAI lets organizations fine-tune o1 mini on their own data

As expected, today's announcement is all about enterprise. Organizations will be able to fine-tune o1 mini for their specific needs with "reinforcement fine-tuning."

According to OpenAI's presenters, scientists, developers, and researchers can customize the powerful o1 reasoning model based on their own data rather than using only publicly available data.

People in different fields could use reinforcement learning to create expert models built on o1 that improve overall expertise in that space.

Like that tablet stand? We've found it!

Since today's livestream is more enterprise-focused, we wanted to find something more interesting for you, the regular user. Our own Anthony Spadafora found the stand on Amazon, and it's a good one. If you like it, you can get it from the link above.

What does this mean for you?

Today's show featured a relatively minor announcement that could significantly impact people's lives.

Reinforcement fine-tuning might not sound like much, but it makes the AI models from OpenAI more useful for specific tasks.

With this new capability, researchers can fine-tune models to better understand the causes of disease, and law firms can build models with a deep understanding of case law.

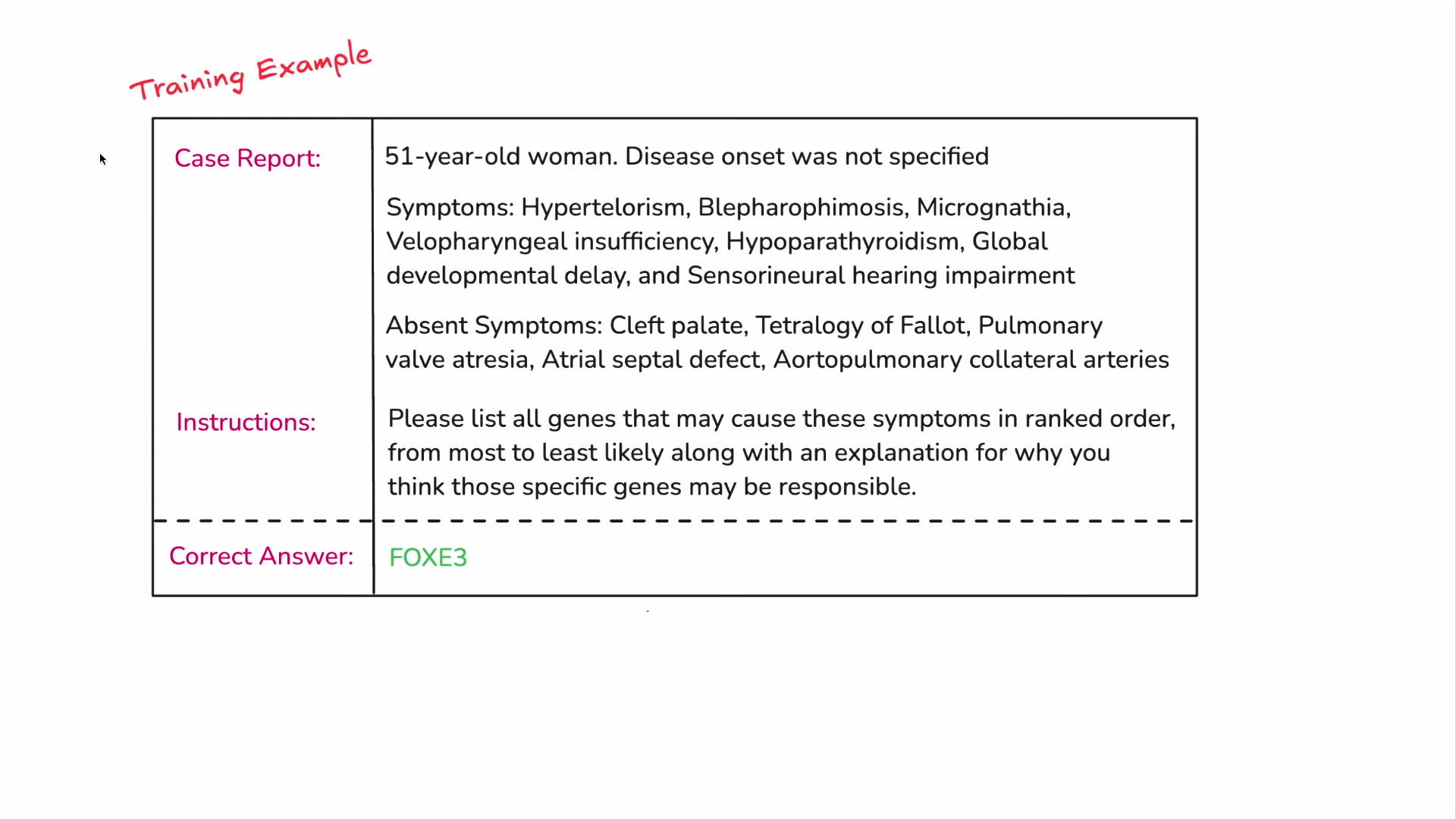

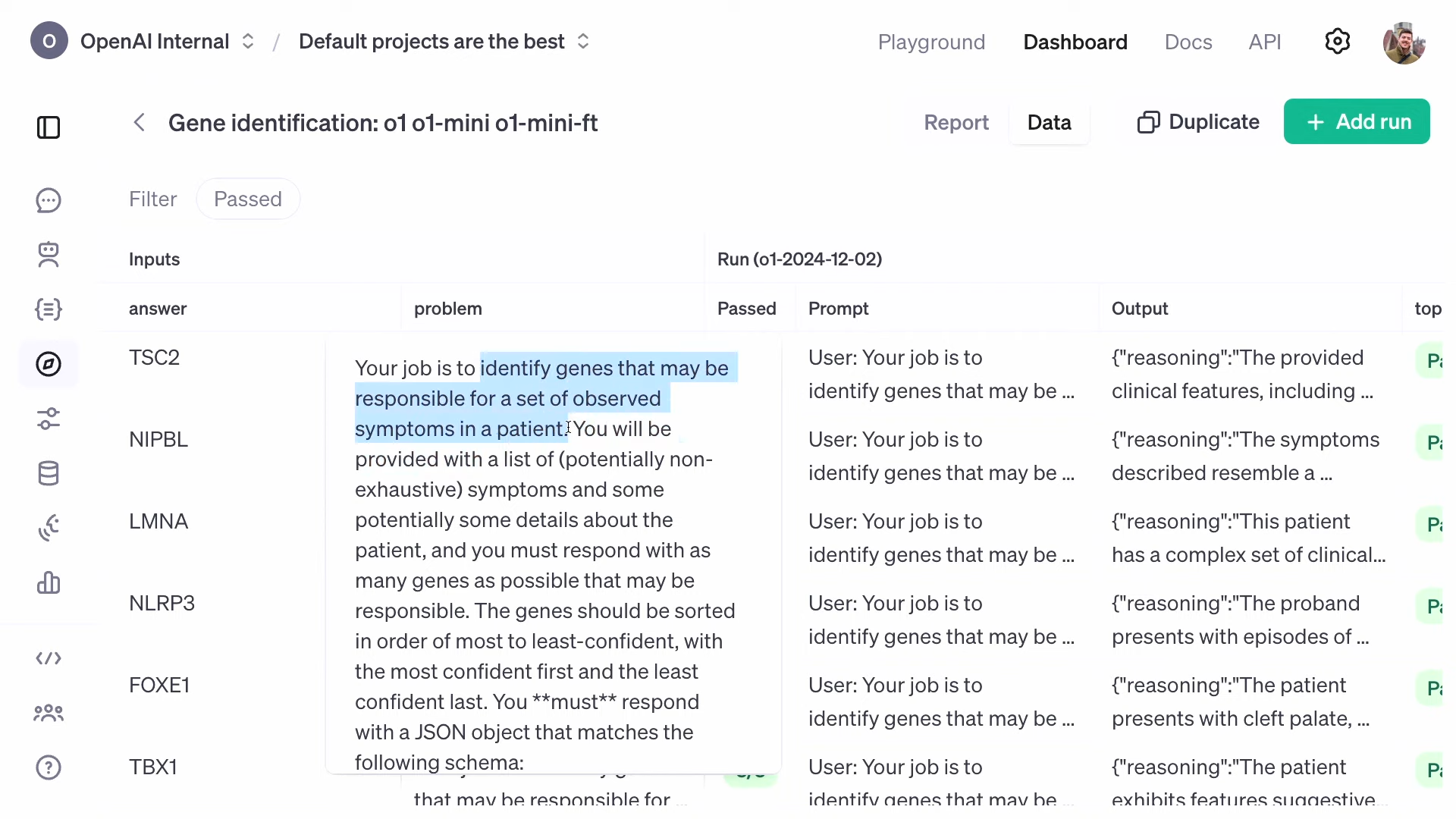

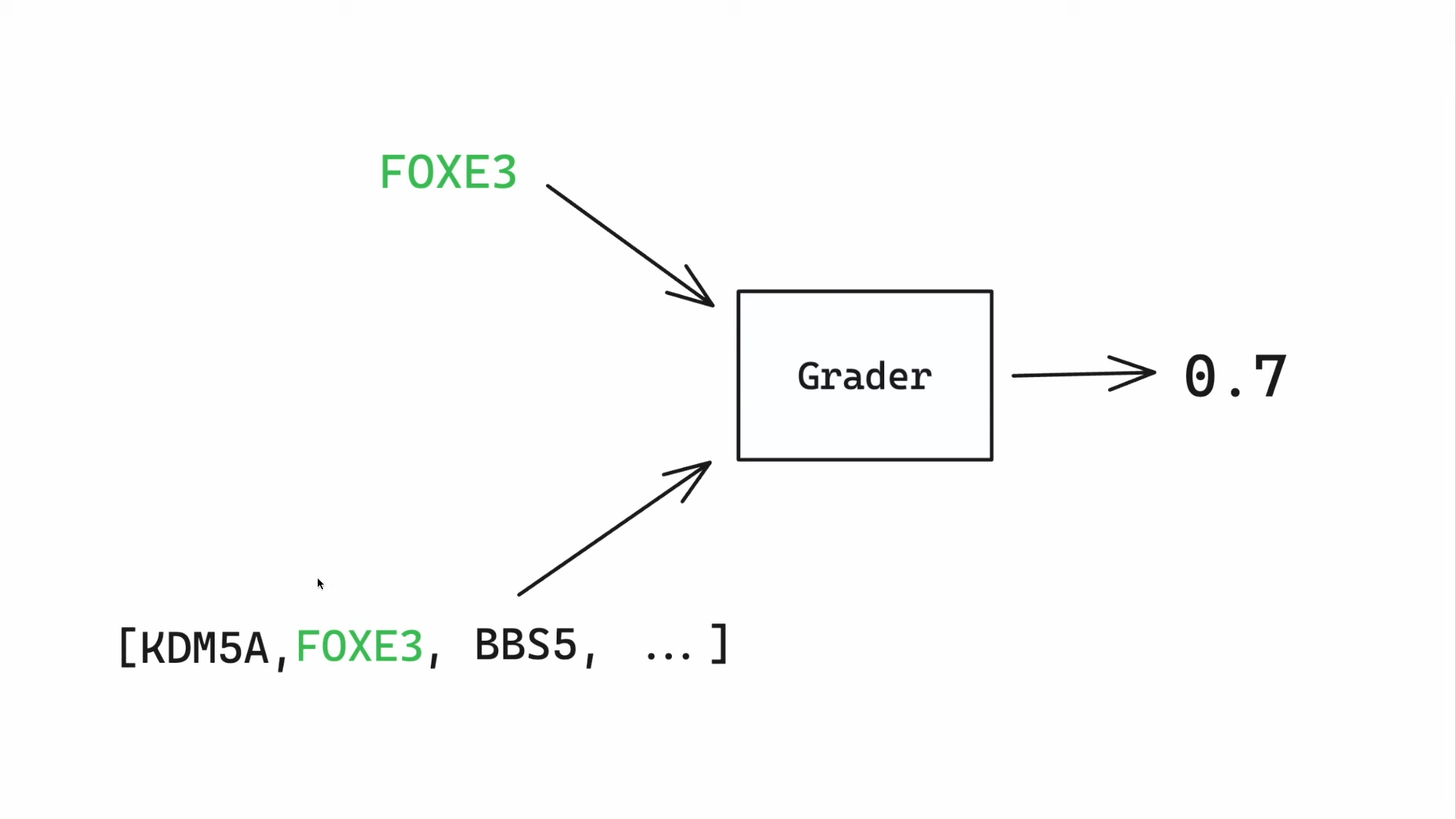

During the Day 2 demo, the presenters also used reinforcement fine-tuning to make o1-mini perform better than the full o1 on a specific task and dataset: identifying the genes responsible for a disease.

What is reinforcement fine-tuning?

With reinforcement fine-tuning, you further train an existing model on your own specific data, rewarding it when its answers are judged to be correct. This is useful for complex areas with vast amounts of data or new research requiring expert domain knowledge.

While o1 and even GPT-4o can analyze data given to them as an upload, this doesn't offer the level of understanding you can get by fine-tuning a version of the model on that specific data.

For the first time, o1 can reason over that complex data, making it even more helpful. A user has to develop a dataset and a "grader" that evaluates the model's performance against the training and validation dataset.

You can use a combination of o1, fine-tuning and data to create a new version of the small o1-mini model to outperform o1 itself. OpenAI does all the work. You just bring the data.
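To make the idea of a "grader" concrete, here is a minimal sketch of what one could look like. It is not OpenAI's API or the grader used in the demo. The function names, the ranked-list answer format, the scoring rule and the placeholder gene labels are all hypothetical, chosen only to show how a model's output might be turned into a score between 0 and 1.

```python
def grade(ranked_answers, correct_answer):
    """Score one model output against the known correct answer.

    Hypothetical rule: full credit if the correct answer is ranked first,
    partial credit (1 / rank) if it appears lower, zero if it is missing.
    """
    target = correct_answer.strip().lower()
    for rank, answer in enumerate(ranked_answers, start=1):
        if answer.strip().lower() == target:
            return 1.0 / rank
    return 0.0


def mean_score(model_outputs, correct_answers):
    """Average grade across a validation set."""
    scores = [grade(out, ans) for out, ans in zip(model_outputs, correct_answers)]
    return sum(scores) / len(scores) if scores else 0.0


# Placeholder labels, not real data from the demo.
validation_answers = ["GENE_A", "GENE_B"]
model_outputs = [
    ["GENE_A", "GENE_C"],  # correct answer ranked first: score 1.0
    ["GENE_C", "GENE_B"],  # correct answer ranked second: score 0.5
]

print(mean_score(model_outputs, validation_answers))  # prints 0.75
```

A score like this gives the training process a signal to reinforce: outputs that rank the right answer higher earn more reward than those that miss it.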

During its 12 Days of OpenAI event on day two, the AI lab said its new reinforcement fine-tuning model is in a research preview but will be fully available next year.

Most of this was over my head so I did what students do — I asked ChatGPT

Today's announcement was complicated and filled with information designed to appeal to enterprises. It also introduced a relatively new concept for most of us called reinforced fine-tuning. What better way to learn what it's all about than by asking ChatGPT?

I used 4o to ask, "Can you explain what reinforced fine-tuning is relative to AI?"

While well thought out and detailed, the answer was just as complicated as the presentation. So, I refined it by asking ChatGPT to explain the concept as if I were 10 years old.

Here's the simplified answer from ChatGPT, which you might find helpful (I definitely did):

"Sure! Imagine you’re training a robot to help you with your homework and play games. Here’s how it works in simple terms:

Step 1: The Robot Learns the Basics

First, the robot goes to "school" by reading a lot of books. It learns general stuff, like how to read and write. But it’s not great at anything specific yet—kind of like knowing a lot of random facts but not how to solve a math problem or play a board game well.

Step 2: You Teach It a Specific Task

Next, you give the robot special lessons to help it with something you care about, like solving math problems or answering questions about your favorite video game. This makes it smarter in that specific area.

Step 3: Rewarding Good Behavior

Now comes the fun part! You start playing games or doing homework with the robot, and every time it does something good, like giving the right answer or helping nicely, you give it a reward. The robot really wants those rewards, so it keeps trying to get better and better at making you happy.

How Does It Learn From Rewards?

You tell the robot what you like and don’t like (this is called "feedback").

It uses that feedback to figure out what it should do more of in the future.

Over time, it learns to act just how you want, like a perfect helper or game buddy!

Why Is This Cool?

It’s like training a puppy. The robot starts out knowing some tricks, then you teach it new ones by giving it treats (rewards) when it does something right. Eventually, it becomes awesome at doing exactly what you want!

So, reinforced fine-tuning is just a fancy way of saying we’re teaching an AI robot to do something better by giving it lessons and rewards to help it learn what’s good."

Meta doesn't want OpenAI to have all the fun — launching new Llama 3.3

As we continue to explore new post-training techniques, today we're releasing Llama 3.3 — a new open source model that delivers leading performance and quality across text-based use cases such as synthetic data generation at a fraction of the inference cost. pic.twitter.com/BNoV2czGKL December 6, 2024

The new Llama model was revealed by Meta CEO Mark Zuckerberg shortly before OpenAI went live with the second of its 12 live streams announcing a new product each day.

Today's announcement was reinforcement fine-tuning using the o1 reasoning model.

Llama 3.3 is a new 70-billion-parameter language model capable of complex AI tasks. In benchmarks it performs as well as, if not better than, much larger models such as GPT-4o, Llama 3.1 405B and Claude 3.5 Sonnet on some tasks.

The new model is small enough to run on high end consumer hardware such as the Nvidia RTX 4090, meaning you can get state of the art intelligence on a gaming PC — and it will work completely offline and securely using your own data.
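A back-of-the-envelope calculation shows what "small enough" means in practice. The 70 billion parameter count comes from the text; the bit widths below are common quantization levels picked for illustration, and the estimate covers the model weights only, ignoring the extra memory needed at runtime.

```python
def weight_memory_gb(n_params, bits_per_weight):
    """Approximate memory needed just to hold the model weights, in GB."""
    return n_params * bits_per_weight / 8 / 1e9


LLAMA_3_3_PARAMS = 70e9  # 70 billion parameters, per the article

for bits in (16, 8, 4):
    size = weight_memory_gb(LLAMA_3_3_PARAMS, bits)
    print(f"{bits}-bit weights: about {size:.0f} GB")
```

At 16 bits per weight the model needs about 140 GB, and even at 4 bits it needs about 35 GB. An RTX 4090 has 24 GB of video memory, so running the model on one card would presumably rely on aggressive quantization or on offloading part of the model to system memory.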

Sam Altman weighs in

this works amazingly well; it has been one of my biggest surprises of 2024. excited to see what people build! December 6, 2024

Sam Altman, OpenAI CEO, went on X to discuss the announcement (he didn't appear in the live stream today). He claims the new feature "works amazingly well; it has been one of my biggest surprises of 2024."

Of course, Altman has a vested interest in hyping the new ideas of his own company, but for him to call it one of the biggest surprises of the year is high praise, considering how much exciting stuff came from OpenAI in 2024. While it's a short post, it speaks volumes about how important reinforcement fine-tuning could be.

When will reinforcement fine-tuning launch?

OpenAI sent a press release reiterating that this would be released to the public, but it won't come immediately. That's exciting because it means the technology will eventually be available to everyone, not just a select few. And we don't have to wait long, with OpenAI promising to put it out to the masses "early next year."

While those in the public will have to wait, OpenAI also noted that it is "expanding alpha access through the Reinforcement Fine-Tuning Research Program. Researchers, universities, and enterprises are invited to apply for early access here."

Why it matters — straight from OpenAI

OpenAI's press release is more concise than the original presentation, which makes it easier to understand why reinforcement fine-tuning matters for regular folks and researchers.

"For the first time, developers and machine learning engineers can use reinforcement learning to create expert models fine-tuned to excel at specific tasks in their domain. This represents a major advancement in model customization, allowing AI models to reach new levels of reasoning and problem-solving in specialized fields such as coding, scientific research, or finance," reads the press release.

For researchers and others who rely on AI daily, being able to customize how AI functions could be a game-changer, but it remains to be seen what kind of effect it will have.

Does it work in the real world?

In the press release, OpenAI talked about the real-world applications of its new adaptable AI tech. It all sounds great on paper, but it comes down to how useful it is when in the hands of the people.

While the company didn't elaborate too much on what they were able to accomplish, it did mention specific researchers who worked with the project (one of whom was on the live panel).

Here's what OpenAI had to say:

"During testing, we collaborated with Justin Reese, a computational researcher at Berkeley Lab, who is using RFT to advance research in rare genetic diseases, and Thomson Reuters, who used reinforcement fine-tuning to build an expert o1-mini model for their CoCounsel AI assistant - which supports legal professionals in executing some of their most analytical workflows."

See you on Monday

OpenAI is taking the weekend off, so there won't be anything else to report until Monday. But you'd better believe we'll be here Monday afternoon with the latest news from '12 Days of OpenAI.'

Here's what you can expect to see during the event next week (with some surprises in the mix):

- Sora: AI video generation

- Updates to Canvas (maybe including images)

- GPT-4o video analysis

- GPT-4o image generation

- Advanced Voice with video

Check out Tom's Guide on Monday to see the latest advancements from OpenAI.

Is OpenAI jumping straight in with Sora v2?

Sora v2 release is impending:

- 1-minute video outputs

- text-to-video

- text+image-to-video

- text+video-to-video

OpenAI's Chad Nelson showed this at the C21Media Keynote in London. And he said we will see it very very soon, as @sama has foreshadowed. pic.twitter.com/xZiDaydoDV December 7, 2024

Monday is here, and with it comes a new day of OpenAI announcements. With 10 livestreams still to come, it's tough to predict exactly what might be announced, but there are a few rumors circulating that give some hints.

Over the weekend a video appeared on X that claimed to include footage from Sora v2, a new version of the yet-to-be-released AI video model from OpenAI. It promised one-minute initial video output, consistent characters and impressive motion.

While this doesn't necessarily mean Sora is coming today, or even Sora v2, it is a good indication that a release is on the cards. If we don't get it today I think we'll get some version of it before the end of this 12 Days event.

Advanced Voice with Vision could be on the way

One of the announcements we are expecting this week is a version of ChatGPT Advanced Voice with vision capabilities. This would allow the AI to 'see' as well as hear you.

In previews of this technology we've seen it used for interview roleplay, to help identify landmarks in a city and to aid with schoolwork. The desktop app has already been given access to see other apps such as Visual Studio Code and Xcode, so this is a logical next step.

While today's announcement could just as easily be a developer only feature, a simple upgrade for ChatGPT or even Sora — Advanced Voice with Vision is a viable prediction as well and would potentially have a bigger impact on more people.

No, you don't need to spend $200 on ChatGPT Pro

almost everyone will be best-served by our free tier or the $20/month plus tier. a small percentage of users want to use chatgpt a TON and hit rate limits, and want to pay more for more intelligence on really hard problems. the $200/month tier is good for them! https://t.co/pQMrvD3yoF December 6, 2024

The first announcement last week gave us the full version of the o1 reasoning model and a new Pro tier ChatGPT subscription, coming in at an incredible $200 per month!

A small percentage of users want to use ChatGPT a TON and hit rate limits and want to pay more for more intelligence on really hard problems.

Sam Altman, OpenAI

I’ve paid the $20 per month for ChatGPT Plus since it was first introduced. It is invaluable for a variety of use cases including learning new skills, writing code and brainstorming. This last use is something o1 is particularly good at, but you don’t need o1 Pro or unlimited access.

With the Pro subscription, you get unlimited access to Advanced Voice, the full o1 model, GPT-4o and access to the new o1 Pro. You also get more compute power behind your queries but this, and o1 Pro, are only useful for incredibly complex tasks in the research space.

Sam Altman, OpenAI CEO, confirmed that all the core features of o1, GPT-4o and Advanced Voice would be available in the Plus plan for $20 per month. I am a heavy user of ChatGPT and have never hit the limit in any of the models, including Advanced Voice.

So, no, you don't really need to spend $200 per month on ChatGPT o1 Pro unless you are a research scientist, a developer working on complex projects or someone else who needs the extra computing power required to push o1 Pro to its limits.

However, if they add full access to Sora, Advanced Voice Vision and GPT-4o image generation into the mix for $200 per month, it becomes more viable as an option — even with that price point.

OpenAI isn't the only lab shipping

Today’s the one year anniversary of our first Gemini model releases! And it’s never looked better. Check out our newest release, Gemini-exp-1206, in Google AI Studio and the Gemini API! https://t.co/CCQwBxm8Yu December 6, 2024

OpenAI isn't the only company coming out with new releases this holiday season. On Friday Meta dropped a new version of its Llama model that matches the capabilities of GPT-4o but in a size small enough to run on a good laptop.

Llama 3.3 70B was trained in part by having the much larger Llama 3.1 405B distill information down to its smaller, younger sibling. This improved the overall performance and is a technique also used by OpenAI in training the mini versions of o1 and GPT-4o.

The new model is available for download and installation at Ollama, Hugging Face or at Meta’s official Llama site.

Not content with giving Meta and OpenAI all the fun, Google also released a new version of Gemini in its AI Studio. Gemini-exp-1206 seems to be faster and smarter than previous models. It also seems to have the ability to think and reason through problems like o1.

Will Sora finally be released?

i am so, so excited for what we have to launch on day 3. monday feels so far away. December 7, 2024

Ever since the ’12 Days of OpenAI’ was announced, I’ve been watching Sam Altman’s X account looking for clues on potential announcements. On Saturday, December 7th, he posted about how excited he was for Monday (today), and I can guarantee that all of us are equally excited about the latest news. So, what will he post today? It’s anyone’s guess, but I can’t help but think it has something to do with Sora considering we’ve all been patiently waiting for months.

For anyone new here, Sora is OpenAI’s text-to-video model. Essentially, Sora takes in a sequence of patches, which are bits of movies, and then outputs the next patch in the sequence. Like most people, I have yet to try it. The features seem really exciting as Sora can create and imagine scenes in videos up to a minute long. I’m eager to see how it compares to other AI video generators and of course, wonder about privacy and other concerns.

Based on what has been announced in the last two days and Sam Altman's posts, it's challenging to predict the exact nature of his upcoming announcements for the next few weeks. However, considering OpenAI's recent activities and Altman's focus areas, several possibilities emerge.

Without specific details, we will simply have to sit tight and wait for the daily briefings from OpenAI. Hopefully Sora makes an appearance; it will truly be a Christmas miracle if this one comes before the end of the year.

A more personalized DALL-E could be next

Sam Altman is known for his cryptic hints, but other than him feeling excited about the announcement today, we really don’t have much to go on. Perhaps, it’s an update to DALL-E, OpenAI’s AI image generator.

After the introduction of DALL-E 3 in 2023 and its seamless integration with ChatGPT, it’s likely that new updates and improvements are on the horizon. There is room for refinement, including better parsing of nuanced instructions, which would narrow the gap between user intent and what the AI generates.

We could see expanded styling and personalization, meaning the ability to fine-tune image styles or align them with specific artistic movements. As OpenAI has been exploring the fusion of text, images, and video across its platforms, a logical next step for DALL-E might be integration with AI-generated videos or GIFs. Imagine creating an animated sequence directly from a text prompt, bridging the gap between static art and dynamic visuals.

Altman’s announcement could include an update that allows for deeper customization in an effort to enable users to create images tailored to their exact prompts. Whatever we hear today, my guess is that it will include something with image and video generation.